mirror of

https://github.com/infiniflow/ragflow.git

synced 2026-01-04 03:25:30 +08:00

Compare commits

24 Commits

v0.23.0

...

4ec6a4e493

| Author | SHA1 | Date | |

|---|---|---|---|

| 4ec6a4e493 | |||

| 2d5ad42128 | |||

| dccda35f65 | |||

| d142b9095e | |||

| c2c079886f | |||

| c3ae1aaecd | |||

| f099bc1236 | |||

| 0b5d1ebefa | |||

| 082c2ed11c | |||

| a764f0a5b2 | |||

| 651d9fff9f | |||

| fddfce303c | |||

| a24fc8291b | |||

| 37e4485415 | |||

| 8d3f9d61da | |||

| 27c55f6514 | |||

| 9883c572cd | |||

| f9619defcc | |||

| 01f0ced1e6 | |||

| 647fb115a0 | |||

| 2114b9e3ad | |||

| 45b96acf6b | |||

| 3305215144 | |||

| 86b03f399a |

@ -303,6 +303,15 @@ cd ragflow/

|

||||

docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

Or if you are behind a proxy, you can pass proxy arguments:

|

||||

|

||||

```bash

|

||||

docker build --platform linux/amd64 \

|

||||

--build-arg http_proxy=http://YOUR_PROXY:PORT \

|

||||

--build-arg https_proxy=http://YOUR_PROXY:PORT \

|

||||

-f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

## 🔨 Launch service from source for development

|

||||

|

||||

1. Install `uv` and `pre-commit`, or skip this step if they are already installed:

|

||||

|

||||

@ -277,6 +277,15 @@ cd ragflow/

|

||||

docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

Jika berada di belakang proxy, Anda dapat melewatkan argumen proxy:

|

||||

|

||||

```bash

|

||||

docker build --platform linux/amd64 \

|

||||

--build-arg http_proxy=http://YOUR_PROXY:PORT \

|

||||

--build-arg https_proxy=http://YOUR_PROXY:PORT \

|

||||

-f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

## 🔨 Menjalankan Aplikasi dari untuk Pengembangan

|

||||

|

||||

1. Instal `uv` dan `pre-commit`, atau lewati langkah ini jika sudah terinstal:

|

||||

|

||||

@ -277,6 +277,15 @@ cd ragflow/

|

||||

docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

プロキシ環境下にいる場合は、プロキシ引数を指定できます:

|

||||

|

||||

```bash

|

||||

docker build --platform linux/amd64 \

|

||||

--build-arg http_proxy=http://YOUR_PROXY:PORT \

|

||||

--build-arg https_proxy=http://YOUR_PROXY:PORT \

|

||||

-f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

## 🔨 ソースコードからサービスを起動する方法

|

||||

|

||||

1. `uv` と `pre-commit` をインストールする。すでにインストールされている場合は、このステップをスキップしてください:

|

||||

|

||||

@ -271,6 +271,15 @@ cd ragflow/

|

||||

docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

프록시 환경인 경우, 프록시 인수를 전달할 수 있습니다:

|

||||

|

||||

```bash

|

||||

docker build --platform linux/amd64 \

|

||||

--build-arg http_proxy=http://YOUR_PROXY:PORT \

|

||||

--build-arg https_proxy=http://YOUR_PROXY:PORT \

|

||||

-f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

## 🔨 소스 코드로 서비스를 시작합니다.

|

||||

|

||||

1. `uv` 와 `pre-commit` 을 설치하거나, 이미 설치된 경우 이 단계를 건너뜁니다:

|

||||

|

||||

@ -294,6 +294,15 @@ cd ragflow/

|

||||

docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

Se você estiver atrás de um proxy, pode passar argumentos de proxy:

|

||||

|

||||

```bash

|

||||

docker build --platform linux/amd64 \

|

||||

--build-arg http_proxy=http://YOUR_PROXY:PORT \

|

||||

--build-arg https_proxy=http://YOUR_PROXY:PORT \

|

||||

-f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

## 🔨 Lançar o serviço a partir do código-fonte para desenvolvimento

|

||||

|

||||

1. Instale o `uv` e o `pre-commit`, ou pule esta etapa se eles já estiverem instalados:

|

||||

|

||||

@ -303,6 +303,15 @@ cd ragflow/

|

||||

docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

若您位於代理環境,可傳遞代理參數:

|

||||

|

||||

```bash

|

||||

docker build --platform linux/amd64 \

|

||||

--build-arg http_proxy=http://YOUR_PROXY:PORT \

|

||||

--build-arg https_proxy=http://YOUR_PROXY:PORT \

|

||||

-f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

## 🔨 以原始碼啟動服務

|

||||

|

||||

1. 安裝 `uv` 和 `pre-commit`。如已安裝,可跳過此步驟:

|

||||

|

||||

@ -302,6 +302,15 @@ cd ragflow/

|

||||

docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

如果您处在代理环境下,可以传递代理参数:

|

||||

|

||||

```bash

|

||||

docker build --platform linux/amd64 \

|

||||

--build-arg http_proxy=http://YOUR_PROXY:PORT \

|

||||

--build-arg https_proxy=http://YOUR_PROXY:PORT \

|

||||

-f Dockerfile -t infiniflow/ragflow:nightly .

|

||||

```

|

||||

|

||||

## 🔨 以源代码启动服务

|

||||

|

||||

1. 安装 `uv` 和 `pre-commit`。如已经安装,可跳过本步骤:

|

||||

|

||||

@ -746,6 +746,7 @@ async def change_parser():

|

||||

tenant_id = DocumentService.get_tenant_id(req["doc_id"])

|

||||

if not tenant_id:

|

||||

return get_data_error_result(message="Tenant not found!")

|

||||

DocumentService.delete_chunk_images(doc, tenant_id)

|

||||

if settings.docStoreConn.index_exist(search.index_name(tenant_id), doc.kb_id):

|

||||

settings.docStoreConn.delete({"doc_id": doc.id}, search.index_name(tenant_id), doc.kb_id)

|

||||

return None

|

||||

|

||||

@ -1286,6 +1286,9 @@ async def rm_chunk(tenant_id, dataset_id, document_id):

|

||||

if "chunk_ids" in req:

|

||||

unique_chunk_ids, duplicate_messages = check_duplicate_ids(req["chunk_ids"], "chunk")

|

||||

condition["id"] = unique_chunk_ids

|

||||

else:

|

||||

unique_chunk_ids = []

|

||||

duplicate_messages = []

|

||||

chunk_number = settings.docStoreConn.delete(condition, search.index_name(tenant_id), dataset_id)

|

||||

if chunk_number != 0:

|

||||

DocumentService.decrement_chunk_num(document_id, dataset_id, 1, chunk_number, 0)

|

||||

|

||||

@ -342,21 +342,7 @@ class DocumentService(CommonService):

|

||||

cls.clear_chunk_num(doc.id)

|

||||

try:

|

||||

TaskService.filter_delete([Task.doc_id == doc.id])

|

||||

page = 0

|

||||

page_size = 1000

|

||||

all_chunk_ids = []

|

||||

while True:

|

||||

chunks = settings.docStoreConn.search(["img_id"], [], {"doc_id": doc.id}, [], OrderByExpr(),

|

||||

page * page_size, page_size, search.index_name(tenant_id),

|

||||

[doc.kb_id])

|

||||

chunk_ids = settings.docStoreConn.get_doc_ids(chunks)

|

||||

if not chunk_ids:

|

||||

break

|

||||

all_chunk_ids.extend(chunk_ids)

|

||||

page += 1

|

||||

for cid in all_chunk_ids:

|

||||

if settings.STORAGE_IMPL.obj_exist(doc.kb_id, cid):

|

||||

settings.STORAGE_IMPL.rm(doc.kb_id, cid)

|

||||

cls.delete_chunk_images(doc, tenant_id)

|

||||

if doc.thumbnail and not doc.thumbnail.startswith(IMG_BASE64_PREFIX):

|

||||

if settings.STORAGE_IMPL.obj_exist(doc.kb_id, doc.thumbnail):

|

||||

settings.STORAGE_IMPL.rm(doc.kb_id, doc.thumbnail)

|

||||

@ -378,6 +364,23 @@ class DocumentService(CommonService):

|

||||

pass

|

||||

return cls.delete_by_id(doc.id)

|

||||

|

||||

@classmethod

|

||||

@DB.connection_context()

|

||||

def delete_chunk_images(cls, doc, tenant_id):

|

||||

page = 0

|

||||

page_size = 1000

|

||||

while True:

|

||||

chunks = settings.docStoreConn.search(["img_id"], [], {"doc_id": doc.id}, [], OrderByExpr(),

|

||||

page * page_size, page_size, search.index_name(tenant_id),

|

||||

[doc.kb_id])

|

||||

chunk_ids = settings.docStoreConn.get_doc_ids(chunks)

|

||||

if not chunk_ids:

|

||||

break

|

||||

for cid in chunk_ids:

|

||||

if settings.STORAGE_IMPL.obj_exist(doc.kb_id, cid):

|

||||

settings.STORAGE_IMPL.rm(doc.kb_id, cid)

|

||||

page += 1

|

||||

|

||||

@classmethod

|

||||

@DB.connection_context()

|

||||

def get_newly_uploaded(cls):

|

||||

|

||||

@ -65,6 +65,7 @@ class EvaluationService(CommonService):

|

||||

(success, dataset_id or error_message)

|

||||

"""

|

||||

try:

|

||||

timestamp= current_timestamp()

|

||||

dataset_id = get_uuid()

|

||||

dataset = {

|

||||

"id": dataset_id,

|

||||

@ -73,8 +74,8 @@ class EvaluationService(CommonService):

|

||||

"description": description,

|

||||

"kb_ids": kb_ids,

|

||||

"created_by": user_id,

|

||||

"create_time": current_timestamp(),

|

||||

"update_time": current_timestamp(),

|

||||

"create_time": timestamp,

|

||||

"update_time": timestamp,

|

||||

"status": StatusEnum.VALID.value

|

||||

}

|

||||

|

||||

|

||||

@ -64,10 +64,13 @@ class TenantLangfuseService(CommonService):

|

||||

|

||||

@classmethod

|

||||

def save(cls, **kwargs):

|

||||

kwargs["create_time"] = current_timestamp()

|

||||

kwargs["create_date"] = datetime_format(datetime.now())

|

||||

kwargs["update_time"] = current_timestamp()

|

||||

kwargs["update_date"] = datetime_format(datetime.now())

|

||||

current_ts = current_timestamp()

|

||||

current_date = datetime_format(datetime.now())

|

||||

|

||||

kwargs["create_time"] = current_ts

|

||||

kwargs["create_date"] = current_date

|

||||

kwargs["update_time"] = current_ts

|

||||

kwargs["update_date"] = current_date

|

||||

obj = cls.model.create(**kwargs)

|

||||

return obj

|

||||

|

||||

|

||||

@ -169,11 +169,12 @@ class PipelineOperationLogService(CommonService):

|

||||

operation_status=operation_status,

|

||||

avatar=avatar,

|

||||

)

|

||||

log["create_time"] = current_timestamp()

|

||||

log["create_date"] = datetime_format(datetime.now())

|

||||

log["update_time"] = current_timestamp()

|

||||

log["update_date"] = datetime_format(datetime.now())

|

||||

|

||||

timestamp = current_timestamp()

|

||||

datetime_now = datetime_format(datetime.now())

|

||||

log["create_time"] = timestamp

|

||||

log["create_date"] = datetime_now

|

||||

log["update_time"] = timestamp

|

||||

log["update_date"] = datetime_now

|

||||

with DB.atomic():

|

||||

obj = cls.save(**log)

|

||||

|

||||

|

||||

@ -28,10 +28,13 @@ class SearchService(CommonService):

|

||||

|

||||

@classmethod

|

||||

def save(cls, **kwargs):

|

||||

kwargs["create_time"] = current_timestamp()

|

||||

kwargs["create_date"] = datetime_format(datetime.now())

|

||||

kwargs["update_time"] = current_timestamp()

|

||||

kwargs["update_date"] = datetime_format(datetime.now())

|

||||

current_ts = current_timestamp()

|

||||

current_date = datetime_format(datetime.now())

|

||||

|

||||

kwargs["create_time"] = current_ts

|

||||

kwargs["create_date"] = current_date

|

||||

kwargs["update_time"] = current_ts

|

||||

kwargs["update_date"] = current_date

|

||||

obj = cls.model.create(**kwargs)

|

||||

return obj

|

||||

|

||||

|

||||

@ -116,10 +116,13 @@ class UserService(CommonService):

|

||||

kwargs["password"] = generate_password_hash(

|

||||

str(kwargs["password"]))

|

||||

|

||||

kwargs["create_time"] = current_timestamp()

|

||||

kwargs["create_date"] = datetime_format(datetime.now())

|

||||

kwargs["update_time"] = current_timestamp()

|

||||

kwargs["update_date"] = datetime_format(datetime.now())

|

||||

current_ts = current_timestamp()

|

||||

current_date = datetime_format(datetime.now())

|

||||

|

||||

kwargs["create_time"] = current_ts

|

||||

kwargs["create_date"] = current_date

|

||||

kwargs["update_time"] = current_ts

|

||||

kwargs["update_date"] = current_date

|

||||

obj = cls.model(**kwargs).save(force_insert=True)

|

||||

return obj

|

||||

|

||||

|

||||

@ -42,7 +42,7 @@ def filename_type(filename):

|

||||

if re.match(r".*\.pdf$", filename):

|

||||

return FileType.PDF.value

|

||||

|

||||

if re.match(r".*\.(msg|eml|doc|docx|ppt|pptx|yml|xml|htm|json|jsonl|ldjson|csv|txt|ini|xls|xlsx|wps|rtf|hlp|pages|numbers|key|md|py|js|java|c|cpp|h|php|go|ts|sh|cs|kt|html|sql)$", filename):

|

||||

if re.match(r".*\.(msg|eml|doc|docx|ppt|pptx|yml|xml|htm|json|jsonl|ldjson|csv|txt|ini|xls|xlsx|wps|rtf|hlp|pages|numbers|key|md|mdx|py|js|java|c|cpp|h|php|go|ts|sh|cs|kt|html|sql)$", filename):

|

||||

return FileType.DOC.value

|

||||

|

||||

if re.match(r".*\.(wav|flac|ape|alac|wavpack|wv|mp3|aac|ogg|vorbis|opus)$", filename):

|

||||

|

||||

@ -69,6 +69,7 @@ CONTENT_TYPE_MAP = {

|

||||

# Web

|

||||

"md": "text/markdown",

|

||||

"markdown": "text/markdown",

|

||||

"mdx": "text/markdown",

|

||||

"htm": "text/html",

|

||||

"html": "text/html",

|

||||

"json": "application/json",

|

||||

|

||||

@ -129,7 +129,8 @@ class FileSource(StrEnum):

|

||||

OCI_STORAGE = "oci_storage"

|

||||

GOOGLE_CLOUD_STORAGE = "google_cloud_storage"

|

||||

AIRTABLE = "airtable"

|

||||

|

||||

ASANA = "asana"

|

||||

GITLAB = "gitlab"

|

||||

|

||||

class PipelineTaskType(StrEnum):

|

||||

PARSE = "Parse"

|

||||

|

||||

@ -37,6 +37,7 @@ from .teams_connector import TeamsConnector

|

||||

from .webdav_connector import WebDAVConnector

|

||||

from .moodle_connector import MoodleConnector

|

||||

from .airtable_connector import AirtableConnector

|

||||

from .asana_connector import AsanaConnector

|

||||

from .config import BlobType, DocumentSource

|

||||

from .models import Document, TextSection, ImageSection, BasicExpertInfo

|

||||

from .exceptions import (

|

||||

@ -73,4 +74,5 @@ __all__ = [

|

||||

"InsufficientPermissionsError",

|

||||

"UnexpectedValidationError",

|

||||

"AirtableConnector",

|

||||

"AsanaConnector",

|

||||

]

|

||||

|

||||

454

common/data_source/asana_connector.py

Normal file

454

common/data_source/asana_connector.py

Normal file

@ -0,0 +1,454 @@

|

||||

from collections.abc import Iterator

|

||||

import time

|

||||

from datetime import datetime

|

||||

import logging

|

||||

from typing import Any, Dict

|

||||

import asana

|

||||

import requests

|

||||

from common.data_source.config import CONTINUE_ON_CONNECTOR_FAILURE, INDEX_BATCH_SIZE, DocumentSource

|

||||

from common.data_source.interfaces import LoadConnector, PollConnector

|

||||

from common.data_source.models import Document, GenerateDocumentsOutput, SecondsSinceUnixEpoch

|

||||

from common.data_source.utils import extract_size_bytes, get_file_ext

|

||||

|

||||

|

||||

|

||||

# https://github.com/Asana/python-asana/tree/master?tab=readme-ov-file#documentation-for-api-endpoints

|

||||

class AsanaTask:

|

||||

def __init__(

|

||||

self,

|

||||

id: str,

|

||||

title: str,

|

||||

text: str,

|

||||

link: str,

|

||||

last_modified: datetime,

|

||||

project_gid: str,

|

||||

project_name: str,

|

||||

) -> None:

|

||||

self.id = id

|

||||

self.title = title

|

||||

self.text = text

|

||||

self.link = link

|

||||

self.last_modified = last_modified

|

||||

self.project_gid = project_gid

|

||||

self.project_name = project_name

|

||||

|

||||

def __str__(self) -> str:

|

||||

return f"ID: {self.id}\nTitle: {self.title}\nLast modified: {self.last_modified}\nText: {self.text}"

|

||||

|

||||

|

||||

class AsanaAPI:

|

||||

def __init__(

|

||||

self, api_token: str, workspace_gid: str, team_gid: str | None

|

||||

) -> None:

|

||||

self._user = None

|

||||

self.workspace_gid = workspace_gid

|

||||

self.team_gid = team_gid

|

||||

|

||||

self.configuration = asana.Configuration()

|

||||

self.api_client = asana.ApiClient(self.configuration)

|

||||

self.tasks_api = asana.TasksApi(self.api_client)

|

||||

self.attachments_api = asana.AttachmentsApi(self.api_client)

|

||||

self.stories_api = asana.StoriesApi(self.api_client)

|

||||

self.users_api = asana.UsersApi(self.api_client)

|

||||

self.project_api = asana.ProjectsApi(self.api_client)

|

||||

self.project_memberships_api = asana.ProjectMembershipsApi(self.api_client)

|

||||

self.workspaces_api = asana.WorkspacesApi(self.api_client)

|

||||

|

||||

self.api_error_count = 0

|

||||

self.configuration.access_token = api_token

|

||||

self.task_count = 0

|

||||

|

||||

def get_tasks(

|

||||

self, project_gids: list[str] | None, start_date: str

|

||||

) -> Iterator[AsanaTask]:

|

||||

"""Get all tasks from the projects with the given gids that were modified since the given date.

|

||||

If project_gids is None, get all tasks from all projects in the workspace."""

|

||||

logging.info("Starting to fetch Asana projects")

|

||||

projects = self.project_api.get_projects(

|

||||

opts={

|

||||

"workspace": self.workspace_gid,

|

||||

"opt_fields": "gid,name,archived,modified_at",

|

||||

}

|

||||

)

|

||||

start_seconds = int(time.mktime(datetime.now().timetuple()))

|

||||

projects_list = []

|

||||

project_count = 0

|

||||

for project_info in projects:

|

||||

project_gid = project_info["gid"]

|

||||

if project_gids is None or project_gid in project_gids:

|

||||

projects_list.append(project_gid)

|

||||

else:

|

||||

logging.debug(

|

||||

f"Skipping project: {project_gid} - not in accepted project_gids"

|

||||

)

|

||||

project_count += 1

|

||||

if project_count % 100 == 0:

|

||||

logging.info(f"Processed {project_count} projects")

|

||||

logging.info(f"Found {len(projects_list)} projects to process")

|

||||

for project_gid in projects_list:

|

||||

for task in self._get_tasks_for_project(

|

||||

project_gid, start_date, start_seconds

|

||||

):

|

||||

yield task

|

||||

logging.info(f"Completed fetching {self.task_count} tasks from Asana")

|

||||

if self.api_error_count > 0:

|

||||

logging.warning(

|

||||

f"Encountered {self.api_error_count} API errors during task fetching"

|

||||

)

|

||||

|

||||

def _get_tasks_for_project(

|

||||

self, project_gid: str, start_date: str, start_seconds: int

|

||||

) -> Iterator[AsanaTask]:

|

||||

project = self.project_api.get_project(project_gid, opts={})

|

||||

project_name = project.get("name", project_gid)

|

||||

team = project.get("team") or {}

|

||||

team_gid = team.get("gid")

|

||||

|

||||

if project.get("archived"):

|

||||

logging.info(f"Skipping archived project: {project_name} ({project_gid})")

|

||||

return

|

||||

if not team_gid:

|

||||

logging.info(

|

||||

f"Skipping project without a team: {project_name} ({project_gid})"

|

||||

)

|

||||

return

|

||||

if project.get("privacy_setting") == "private":

|

||||

if self.team_gid and team_gid != self.team_gid:

|

||||

logging.info(

|

||||

f"Skipping private project not in configured team: {project_name} ({project_gid})"

|

||||

)

|

||||

return

|

||||

logging.info(

|

||||

f"Processing private project in configured team: {project_name} ({project_gid})"

|

||||

)

|

||||

|

||||

simple_start_date = start_date.split(".")[0].split("+")[0]

|

||||

logging.info(

|

||||

f"Fetching tasks modified since {simple_start_date} for project: {project_name} ({project_gid})"

|

||||

)

|

||||

|

||||

opts = {

|

||||

"opt_fields": "name,memberships,memberships.project,completed_at,completed_by,created_at,"

|

||||

"created_by,custom_fields,dependencies,due_at,due_on,external,html_notes,liked,likes,"

|

||||

"modified_at,notes,num_hearts,parent,projects,resource_subtype,resource_type,start_on,"

|

||||

"workspace,permalink_url",

|

||||

"modified_since": start_date,

|

||||

}

|

||||

tasks_from_api = self.tasks_api.get_tasks_for_project(project_gid, opts)

|

||||

for data in tasks_from_api:

|

||||

self.task_count += 1

|

||||

if self.task_count % 10 == 0:

|

||||

end_seconds = time.mktime(datetime.now().timetuple())

|

||||

runtime_seconds = end_seconds - start_seconds

|

||||

if runtime_seconds > 0:

|

||||

logging.info(

|

||||

f"Processed {self.task_count} tasks in {runtime_seconds:.0f} seconds "

|

||||

f"({self.task_count / runtime_seconds:.2f} tasks/second)"

|

||||

)

|

||||

|

||||

logging.debug(f"Processing Asana task: {data['name']}")

|

||||

|

||||

text = self._construct_task_text(data)

|

||||

|

||||

try:

|

||||

text += self._fetch_and_add_comments(data["gid"])

|

||||

|

||||

last_modified_date = self.format_date(data["modified_at"])

|

||||

text += f"Last modified: {last_modified_date}\n"

|

||||

|

||||

task = AsanaTask(

|

||||

id=data["gid"],

|

||||

title=data["name"],

|

||||

text=text,

|

||||

link=data["permalink_url"],

|

||||

last_modified=datetime.fromisoformat(data["modified_at"]),

|

||||

project_gid=project_gid,

|

||||

project_name=project_name,

|

||||

)

|

||||

yield task

|

||||

except Exception:

|

||||

logging.error(

|

||||

f"Error processing task {data['gid']} in project {project_gid}",

|

||||

exc_info=True,

|

||||

)

|

||||

self.api_error_count += 1

|

||||

|

||||

def _construct_task_text(self, data: Dict) -> str:

|

||||

text = f"{data['name']}\n\n"

|

||||

|

||||

if data["notes"]:

|

||||

text += f"{data['notes']}\n\n"

|

||||

|

||||

if data["created_by"] and data["created_by"]["gid"]:

|

||||

creator = self.get_user(data["created_by"]["gid"])["name"]

|

||||

created_date = self.format_date(data["created_at"])

|

||||

text += f"Created by: {creator} on {created_date}\n"

|

||||

|

||||

if data["due_on"]:

|

||||

due_date = self.format_date(data["due_on"])

|

||||

text += f"Due date: {due_date}\n"

|

||||

|

||||

if data["completed_at"]:

|

||||

completed_date = self.format_date(data["completed_at"])

|

||||

text += f"Completed on: {completed_date}\n"

|

||||

|

||||

text += "\n"

|

||||

return text

|

||||

|

||||

def _fetch_and_add_comments(self, task_gid: str) -> str:

|

||||

text = ""

|

||||

stories_opts: Dict[str, str] = {}

|

||||

story_start = time.time()

|

||||

stories = self.stories_api.get_stories_for_task(task_gid, stories_opts)

|

||||

|

||||

story_count = 0

|

||||

comment_count = 0

|

||||

|

||||

for story in stories:

|

||||

story_count += 1

|

||||

if story["resource_subtype"] == "comment_added":

|

||||

comment = self.stories_api.get_story(

|

||||

story["gid"], opts={"opt_fields": "text,created_by,created_at"}

|

||||

)

|

||||

commenter = self.get_user(comment["created_by"]["gid"])["name"]

|

||||

text += f"Comment by {commenter}: {comment['text']}\n\n"

|

||||

comment_count += 1

|

||||

|

||||

story_duration = time.time() - story_start

|

||||

logging.debug(

|

||||

f"Processed {story_count} stories (including {comment_count} comments) in {story_duration:.2f} seconds"

|

||||

)

|

||||

|

||||

return text

|

||||

|

||||

def get_attachments(self, task_gid: str) -> list[dict]:

|

||||

"""

|

||||

Fetch full attachment info (including download_url) for a task.

|

||||

"""

|

||||

attachments: list[dict] = []

|

||||

|

||||

try:

|

||||

# Step 1: list attachment compact records

|

||||

for att in self.attachments_api.get_attachments_for_object(

|

||||

parent=task_gid,

|

||||

opts={}

|

||||

):

|

||||

gid = att.get("gid")

|

||||

if not gid:

|

||||

continue

|

||||

|

||||

try:

|

||||

# Step 2: expand to full attachment

|

||||

full = self.attachments_api.get_attachment(

|

||||

attachment_gid=gid,

|

||||

opts={

|

||||

"opt_fields": "name,download_url,size,created_at"

|

||||

}

|

||||

)

|

||||

|

||||

if full.get("download_url"):

|

||||

attachments.append(full)

|

||||

|

||||

except Exception:

|

||||

logging.exception(

|

||||

f"Failed to fetch attachment detail {gid} for task {task_gid}"

|

||||

)

|

||||

self.api_error_count += 1

|

||||

|

||||

except Exception:

|

||||

logging.exception(f"Failed to list attachments for task {task_gid}")

|

||||

self.api_error_count += 1

|

||||

|

||||

return attachments

|

||||

|

||||

def get_accessible_emails(

|

||||

self,

|

||||

workspace_id: str,

|

||||

project_ids: list[str] | None,

|

||||

team_id: str | None,

|

||||

):

|

||||

|

||||

ws_users = self.users_api.get_users(

|

||||

opts={

|

||||

"workspace": workspace_id,

|

||||

"opt_fields": "gid,name,email"

|

||||

}

|

||||

)

|

||||

|

||||

workspace_users = {

|

||||

u["gid"]: u.get("email")

|

||||

for u in ws_users

|

||||

if u.get("email")

|

||||

}

|

||||

|

||||

if not project_ids:

|

||||

return set(workspace_users.values())

|

||||

|

||||

|

||||

project_emails = set()

|

||||

|

||||

for pid in project_ids:

|

||||

project = self.project_api.get_project(

|

||||

pid,

|

||||

opts={"opt_fields": "team,privacy_setting"}

|

||||

)

|

||||

|

||||

if project["privacy_setting"] == "private":

|

||||

if team_id and project.get("team", {}).get("gid") != team_id:

|

||||

continue

|

||||

|

||||

memberships = self.project_memberships_api.get_project_membership(

|

||||

pid,

|

||||

opts={"opt_fields": "user.gid,user.email"}

|

||||

)

|

||||

|

||||

for m in memberships:

|

||||

email = m["user"].get("email")

|

||||

if email:

|

||||

project_emails.add(email)

|

||||

|

||||

return project_emails

|

||||

|

||||

def get_user(self, user_gid: str) -> Dict:

|

||||

if self._user is not None:

|

||||

return self._user

|

||||

self._user = self.users_api.get_user(user_gid, {"opt_fields": "name,email"})

|

||||

|

||||

if not self._user:

|

||||

logging.warning(f"Unable to fetch user information for user_gid: {user_gid}")

|

||||

return {"name": "Unknown"}

|

||||

return self._user

|

||||

|

||||

def format_date(self, date_str: str) -> str:

|

||||

date = datetime.fromisoformat(date_str)

|

||||

return time.strftime("%Y-%m-%d", date.timetuple())

|

||||

|

||||

def get_time(self) -> str:

|

||||

return time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())

|

||||

|

||||

|

||||

class AsanaConnector(LoadConnector, PollConnector):

|

||||

def __init__(

|

||||

self,

|

||||

asana_workspace_id: str,

|

||||

asana_project_ids: str | None = None,

|

||||

asana_team_id: str | None = None,

|

||||

batch_size: int = INDEX_BATCH_SIZE,

|

||||

continue_on_failure: bool = CONTINUE_ON_CONNECTOR_FAILURE,

|

||||

) -> None:

|

||||

self.workspace_id = asana_workspace_id

|

||||

self.project_ids_to_index: list[str] | None = (

|

||||

asana_project_ids.split(",") if asana_project_ids else None

|

||||

)

|

||||

self.asana_team_id = asana_team_id if asana_team_id else None

|

||||

self.batch_size = batch_size

|

||||

self.continue_on_failure = continue_on_failure

|

||||

self.size_threshold = None

|

||||

logging.info(

|

||||

f"AsanaConnector initialized with workspace_id: {asana_workspace_id}"

|

||||

)

|

||||

|

||||

def load_credentials(self, credentials: dict[str, Any]) -> dict[str, Any] | None:

|

||||

self.api_token = credentials["asana_api_token_secret"]

|

||||

self.asana_client = AsanaAPI(

|

||||

api_token=self.api_token,

|

||||

workspace_gid=self.workspace_id,

|

||||

team_gid=self.asana_team_id,

|

||||

)

|

||||

self.workspace_users_email = self.asana_client.get_accessible_emails(self.workspace_id, self.project_ids_to_index, self.asana_team_id)

|

||||

logging.info("Asana credentials loaded and API client initialized")

|

||||

return None

|

||||

|

||||

def poll_source(

|

||||

self, start: SecondsSinceUnixEpoch, end: SecondsSinceUnixEpoch | None

|

||||

) -> GenerateDocumentsOutput:

|

||||

start_time = datetime.fromtimestamp(start).isoformat()

|

||||

logging.info(f"Starting Asana poll from {start_time}")

|

||||

docs_batch: list[Document] = []

|

||||

tasks = self.asana_client.get_tasks(self.project_ids_to_index, start_time)

|

||||

for task in tasks:

|

||||

docs = self._task_to_documents(task)

|

||||

docs_batch.extend(docs)

|

||||

|

||||

if len(docs_batch) >= self.batch_size:

|

||||

logging.info(f"Yielding batch of {len(docs_batch)} documents")

|

||||

yield docs_batch

|

||||

docs_batch = []

|

||||

|

||||

if docs_batch:

|

||||

logging.info(f"Yielding final batch of {len(docs_batch)} documents")

|

||||

yield docs_batch

|

||||

|

||||

logging.info("Asana poll completed")

|

||||

|

||||

def load_from_state(self) -> GenerateDocumentsOutput:

|

||||

logging.info("Starting full index of all Asana tasks")

|

||||

return self.poll_source(start=0, end=None)

|

||||

|

||||

def _task_to_documents(self, task: AsanaTask) -> list[Document]:

|

||||

docs: list[Document] = []

|

||||

|

||||

attachments = self.asana_client.get_attachments(task.id)

|

||||

|

||||

for att in attachments:

|

||||

try:

|

||||

resp = requests.get(att["download_url"], timeout=30)

|

||||

resp.raise_for_status()

|

||||

file_blob = resp.content

|

||||

filename = att.get("name", "attachment")

|

||||

size_bytes = extract_size_bytes(att)

|

||||

if (

|

||||

self.size_threshold is not None

|

||||

and isinstance(size_bytes, int)

|

||||

and size_bytes > self.size_threshold

|

||||

):

|

||||

logging.warning(

|

||||

f"{filename} exceeds size threshold of {self.size_threshold}. Skipping."

|

||||

)

|

||||

continue

|

||||

docs.append(

|

||||

Document(

|

||||

id=f"asana:{task.id}:{att['gid']}",

|

||||

blob=file_blob,

|

||||

extension=get_file_ext(filename) or "",

|

||||

size_bytes=size_bytes,

|

||||

doc_updated_at=task.last_modified,

|

||||

source=DocumentSource.ASANA,

|

||||

semantic_identifier=filename,

|

||||

primary_owners=list(self.workspace_users_email),

|

||||

)

|

||||

)

|

||||

except Exception:

|

||||

logging.exception(

|

||||

f"Failed to download attachment {att.get('gid')} for task {task.id}"

|

||||

)

|

||||

|

||||

return docs

|

||||

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

import time

|

||||

import os

|

||||

|

||||

logging.info("Starting Asana connector test")

|

||||

connector = AsanaConnector(

|

||||

os.environ["WORKSPACE_ID"],

|

||||

os.environ["PROJECT_IDS"],

|

||||

os.environ["TEAM_ID"],

|

||||

)

|

||||

connector.load_credentials(

|

||||

{

|

||||

"asana_api_token_secret": os.environ["API_TOKEN"],

|

||||

}

|

||||

)

|

||||

logging.info("Loading all documents from Asana")

|

||||

all_docs = connector.load_from_state()

|

||||

current = time.time()

|

||||

one_day_ago = current - 24 * 60 * 60 # 1 day

|

||||

logging.info("Polling for documents updated in the last 24 hours")

|

||||

latest_docs = connector.poll_source(one_day_ago, current)

|

||||

for docs in all_docs:

|

||||

for doc in docs:

|

||||

print(doc.id)

|

||||

logging.info("Asana connector test completed")

|

||||

@ -54,6 +54,9 @@ class DocumentSource(str, Enum):

|

||||

DROPBOX = "dropbox"

|

||||

BOX = "box"

|

||||

AIRTABLE = "airtable"

|

||||

ASANA = "asana"

|

||||

GITHUB = "github"

|

||||

GITLAB = "gitlab"

|

||||

|

||||

class FileOrigin(str, Enum):

|

||||

"""File origins"""

|

||||

@ -256,6 +259,10 @@ AIRTABLE_CONNECTOR_SIZE_THRESHOLD = int(

|

||||

os.environ.get("AIRTABLE_CONNECTOR_SIZE_THRESHOLD", 10 * 1024 * 1024)

|

||||

)

|

||||

|

||||

ASANA_CONNECTOR_SIZE_THRESHOLD = int(

|

||||

os.environ.get("ASANA_CONNECTOR_SIZE_THRESHOLD", 10 * 1024 * 1024)

|

||||

)

|

||||

|

||||

_USER_NOT_FOUND = "Unknown Confluence User"

|

||||

|

||||

_COMMENT_EXPANSION_FIELDS = ["body.storage.value"]

|

||||

|

||||

@ -18,6 +18,7 @@ class UploadMimeTypes:

|

||||

"text/plain",

|

||||

"text/markdown",

|

||||

"text/x-markdown",

|

||||

"text/mdx",

|

||||

"text/x-config",

|

||||

"text/tab-separated-values",

|

||||

"application/json",

|

||||

|

||||

340

common/data_source/gitlab_connector.py

Normal file

340

common/data_source/gitlab_connector.py

Normal file

@ -0,0 +1,340 @@

|

||||

import fnmatch

|

||||

import itertools

|

||||

from collections import deque

|

||||

from collections.abc import Iterable

|

||||

from collections.abc import Iterator

|

||||

from datetime import datetime

|

||||

from datetime import timezone

|

||||

from typing import Any

|

||||

from typing import TypeVar

|

||||

import gitlab

|

||||

from gitlab.v4.objects import Project

|

||||

|

||||

from common.data_source.config import DocumentSource, INDEX_BATCH_SIZE

|

||||

from common.data_source.exceptions import ConnectorMissingCredentialError

|

||||

from common.data_source.exceptions import ConnectorValidationError

|

||||

from common.data_source.exceptions import CredentialExpiredError

|

||||

from common.data_source.exceptions import InsufficientPermissionsError

|

||||

from common.data_source.exceptions import UnexpectedValidationError

|

||||

from common.data_source.interfaces import GenerateDocumentsOutput

|

||||

from common.data_source.interfaces import LoadConnector

|

||||

from common.data_source.interfaces import PollConnector

|

||||

from common.data_source.interfaces import SecondsSinceUnixEpoch

|

||||

from common.data_source.models import BasicExpertInfo

|

||||

from common.data_source.models import Document

|

||||

from common.data_source.utils import get_file_ext

|

||||

|

||||

T = TypeVar("T")

|

||||

|

||||

|

||||

|

||||

# List of directories/Files to exclude

|

||||

exclude_patterns = [

|

||||

"logs",

|

||||

".github/",

|

||||

".gitlab/",

|

||||

".pre-commit-config.yaml",

|

||||

]

|

||||

|

||||

|

||||

def _batch_gitlab_objects(git_objs: Iterable[T], batch_size: int) -> Iterator[list[T]]:

|

||||

it = iter(git_objs)

|

||||

while True:

|

||||

batch = list(itertools.islice(it, batch_size))

|

||||

if not batch:

|

||||

break

|

||||

yield batch

|

||||

|

||||

|

||||

def get_author(author: Any) -> BasicExpertInfo:

|

||||

return BasicExpertInfo(

|

||||

display_name=author.get("name"),

|

||||

)

|

||||

|

||||

|

||||

def _convert_merge_request_to_document(mr: Any) -> Document:

|

||||

mr_text = mr.description or ""

|

||||

doc = Document(

|

||||

id=mr.web_url,

|

||||

blob=mr_text,

|

||||

source=DocumentSource.GITLAB,

|

||||

semantic_identifier=mr.title,

|

||||

extension=".md",

|

||||

# updated_at is UTC time but is timezone unaware, explicitly add UTC

|

||||

# as there is logic in indexing to prevent wrong timestamped docs

|

||||

# due to local time discrepancies with UTC

|

||||

doc_updated_at=mr.updated_at.replace(tzinfo=timezone.utc),

|

||||

size_bytes=len(mr_text.encode("utf-8")),

|

||||

primary_owners=[get_author(mr.author)],

|

||||

metadata={"state": mr.state, "type": "MergeRequest", "web_url": mr.web_url},

|

||||

)

|

||||

return doc

|

||||

|

||||

|

||||

def _convert_issue_to_document(issue: Any) -> Document:

|

||||

issue_text = issue.description or ""

|

||||

doc = Document(

|

||||

id=issue.web_url,

|

||||

blob=issue_text,

|

||||

source=DocumentSource.GITLAB,

|

||||

semantic_identifier=issue.title,

|

||||

extension=".md",

|

||||

# updated_at is UTC time but is timezone unaware, explicitly add UTC

|

||||

# as there is logic in indexing to prevent wrong timestamped docs

|

||||

# due to local time discrepancies with UTC

|

||||

doc_updated_at=issue.updated_at.replace(tzinfo=timezone.utc),

|

||||

size_bytes=len(issue_text.encode("utf-8")),

|

||||

primary_owners=[get_author(issue.author)],

|

||||

metadata={

|

||||

"state": issue.state,

|

||||

"type": issue.type if issue.type else "Issue",

|

||||

"web_url": issue.web_url,

|

||||

},

|

||||

)

|

||||

return doc

|

||||

|

||||

|

||||

def _convert_code_to_document(

|

||||

project: Project, file: Any, url: str, projectName: str, projectOwner: str

|

||||

) -> Document:

|

||||

|

||||

# Dynamically get the default branch from the project object

|

||||

default_branch = project.default_branch

|

||||

|

||||

# Fetch the file content using the correct branch

|

||||

file_content_obj = project.files.get(

|

||||

file_path=file["path"], ref=default_branch # Use the default branch

|

||||

)

|

||||

# BoxConnector uses raw bytes for blob. Keep the same here.

|

||||

file_content_bytes = file_content_obj.decode()

|

||||

file_url = f"{url}/{projectOwner}/{projectName}/-/blob/{default_branch}/{file['path']}"

|

||||

|

||||

# Try to use the last commit timestamp for incremental sync.

|

||||

# Falls back to "now" if the commit lookup fails.

|

||||

last_commit_at = None

|

||||

try:

|

||||

# Query commit history for this file on the default branch.

|

||||

commits = project.commits.list(

|

||||

ref_name=default_branch,

|

||||

path=file["path"],

|

||||

per_page=1,

|

||||

)

|

||||

if commits:

|

||||

# committed_date is ISO string like "2024-01-01T00:00:00.000+00:00"

|

||||

committed_date = commits[0].committed_date

|

||||

if isinstance(committed_date, str):

|

||||

last_commit_at = datetime.strptime(

|

||||

committed_date, "%Y-%m-%dT%H:%M:%S.%f%z"

|

||||

).astimezone(timezone.utc)

|

||||

elif isinstance(committed_date, datetime):

|

||||

last_commit_at = committed_date.astimezone(timezone.utc)

|

||||

except Exception:

|

||||

last_commit_at = None

|

||||

|

||||

# Create and return a Document object

|

||||

doc = Document(

|

||||

# Use a stable ID so reruns don't create duplicates.

|

||||

id=file_url,

|

||||

blob=file_content_bytes,

|

||||

source=DocumentSource.GITLAB,

|

||||

semantic_identifier=file.get("name"),

|

||||

extension=get_file_ext(file.get("name")),

|

||||

doc_updated_at=last_commit_at or datetime.now(tz=timezone.utc),

|

||||

size_bytes=len(file_content_bytes) if file_content_bytes is not None else 0,

|

||||

primary_owners=[], # Add owners if needed

|

||||

metadata={

|

||||

"type": "CodeFile",

|

||||

"path": file.get("path"),

|

||||

"ref": default_branch,

|

||||

"project": f"{projectOwner}/{projectName}",

|

||||

"web_url": file_url,

|

||||

},

|

||||

)

|

||||

return doc

|

||||

|

||||

|

||||

def _should_exclude(path: str) -> bool:

|

||||

"""Check if a path matches any of the exclude patterns."""

|

||||

return any(fnmatch.fnmatch(path, pattern) for pattern in exclude_patterns)

|

||||

|

||||

|

||||

class GitlabConnector(LoadConnector, PollConnector):

|

||||

def __init__(

|

||||

self,

|

||||

project_owner: str,

|

||||

project_name: str,

|

||||

batch_size: int = INDEX_BATCH_SIZE,

|

||||

state_filter: str = "all",

|

||||

include_mrs: bool = True,

|

||||

include_issues: bool = True,

|

||||

include_code_files: bool = False,

|

||||

) -> None:

|

||||

self.project_owner = project_owner

|

||||

self.project_name = project_name

|

||||

self.batch_size = batch_size

|

||||

self.state_filter = state_filter

|

||||

self.include_mrs = include_mrs

|

||||

self.include_issues = include_issues

|

||||

self.include_code_files = include_code_files

|

||||

self.gitlab_client: gitlab.Gitlab | None = None

|

||||

|

||||

def load_credentials(self, credentials: dict[str, Any]) -> dict[str, Any] | None:

|

||||

self.gitlab_client = gitlab.Gitlab(

|

||||

credentials["gitlab_url"], private_token=credentials["gitlab_access_token"]

|

||||

)

|

||||

return None

|

||||

|

||||

def validate_connector_settings(self) -> None:

|

||||

if self.gitlab_client is None:

|

||||

raise ConnectorMissingCredentialError("GitLab")

|

||||

|

||||

try:

|

||||

self.gitlab_client.auth()

|

||||

self.gitlab_client.projects.get(

|

||||

f"{self.project_owner}/{self.project_name}",

|

||||

lazy=True,

|

||||

)

|

||||

|

||||

except gitlab.exceptions.GitlabAuthenticationError as e:

|

||||

raise CredentialExpiredError(

|

||||

"Invalid or expired GitLab credentials."

|

||||

) from e

|

||||

|

||||

except gitlab.exceptions.GitlabAuthorizationError as e:

|

||||

raise InsufficientPermissionsError(

|

||||

"Insufficient permissions to access GitLab resources."

|

||||

) from e

|

||||

|

||||

except gitlab.exceptions.GitlabGetError as e:

|

||||

raise ConnectorValidationError(

|

||||

"GitLab project not found or not accessible."

|

||||

) from e

|

||||

|

||||

except Exception as e:

|

||||

raise UnexpectedValidationError(

|

||||

f"Unexpected error while validating GitLab settings: {e}"

|

||||

) from e

|

||||

|

||||

def _fetch_from_gitlab(

|

||||

self, start: datetime | None = None, end: datetime | None = None

|

||||

) -> GenerateDocumentsOutput:

|

||||

if self.gitlab_client is None:

|

||||

raise ConnectorMissingCredentialError("Gitlab")

|

||||

project: Project = self.gitlab_client.projects.get(

|

||||

f"{self.project_owner}/{self.project_name}"

|

||||

)

|

||||

|

||||

start_utc = start.astimezone(timezone.utc) if start else None

|

||||

end_utc = end.astimezone(timezone.utc) if end else None

|

||||

|

||||

# Fetch code files

|

||||

if self.include_code_files:

|

||||

# Fetching using BFS as project.report_tree with recursion causing slow load

|

||||

queue = deque([""]) # Start with the root directory

|

||||

while queue:

|

||||

current_path = queue.popleft()

|

||||

files = project.repository_tree(path=current_path, all=True)

|

||||

for file_batch in _batch_gitlab_objects(files, self.batch_size):

|

||||

code_doc_batch: list[Document] = []

|

||||

for file in file_batch:

|

||||

if _should_exclude(file["path"]):

|

||||

continue

|

||||

|

||||

if file["type"] == "blob":

|

||||

|

||||

doc = _convert_code_to_document(

|

||||

project,

|

||||

file,

|

||||

self.gitlab_client.url,

|

||||

self.project_name,

|

||||

self.project_owner,

|

||||

)

|

||||

|

||||

# Apply incremental window filtering for code files too.

|

||||

if start_utc is not None and doc.doc_updated_at <= start_utc:

|

||||

continue

|

||||

if end_utc is not None and doc.doc_updated_at > end_utc:

|

||||

continue

|

||||

|

||||

code_doc_batch.append(doc)

|

||||

elif file["type"] == "tree":

|

||||

queue.append(file["path"])

|

||||

|

||||

if code_doc_batch:

|

||||

yield code_doc_batch

|

||||

|

||||

if self.include_mrs:

|

||||

merge_requests = project.mergerequests.list(

|

||||

state=self.state_filter,

|

||||

order_by="updated_at",

|

||||

sort="desc",

|

||||

iterator=True,

|

||||

)

|

||||

|

||||

for mr_batch in _batch_gitlab_objects(merge_requests, self.batch_size):

|

||||

mr_doc_batch: list[Document] = []

|

||||

for mr in mr_batch:

|

||||

mr.updated_at = datetime.strptime(

|

||||

mr.updated_at, "%Y-%m-%dT%H:%M:%S.%f%z"

|

||||

)

|

||||

if start_utc is not None and mr.updated_at <= start_utc:

|

||||

yield mr_doc_batch

|

||||

return

|

||||

if end_utc is not None and mr.updated_at > end_utc:

|

||||

continue

|

||||

mr_doc_batch.append(_convert_merge_request_to_document(mr))

|

||||

yield mr_doc_batch

|

||||

|

||||

if self.include_issues:

|

||||

issues = project.issues.list(state=self.state_filter, iterator=True)

|

||||

|

||||

for issue_batch in _batch_gitlab_objects(issues, self.batch_size):

|

||||

issue_doc_batch: list[Document] = []

|

||||

for issue in issue_batch:

|

||||

issue.updated_at = datetime.strptime(

|

||||

issue.updated_at, "%Y-%m-%dT%H:%M:%S.%f%z"

|

||||

)

|

||||

# Avoid re-syncing the last-seen item.

|

||||

if start_utc is not None and issue.updated_at <= start_utc:

|

||||

yield issue_doc_batch

|

||||

return

|

||||

if end_utc is not None and issue.updated_at > end_utc:

|

||||

continue

|

||||

issue_doc_batch.append(_convert_issue_to_document(issue))

|

||||

yield issue_doc_batch

|

||||

|

||||

def load_from_state(self) -> GenerateDocumentsOutput:

|

||||

return self._fetch_from_gitlab()

|

||||

|

||||

def poll_source(

|

||||

self, start: SecondsSinceUnixEpoch, end: SecondsSinceUnixEpoch

|

||||

) -> GenerateDocumentsOutput:

|

||||

start_datetime = datetime.fromtimestamp(start, tz=timezone.utc)

|

||||

end_datetime = datetime.fromtimestamp(end, tz=timezone.utc)

|

||||

return self._fetch_from_gitlab(start_datetime, end_datetime)

|

||||

|

||||

|

||||

if __name__ == "__main__":

|

||||

import os

|

||||

|

||||

connector = GitlabConnector(

|

||||

# gitlab_url="https://gitlab.com/api/v4",

|

||||

project_owner=os.environ["PROJECT_OWNER"],

|

||||

project_name=os.environ["PROJECT_NAME"],

|

||||

batch_size=INDEX_BATCH_SIZE,

|

||||

state_filter="all",

|

||||

include_mrs=True,

|

||||

include_issues=True,

|

||||

include_code_files=True,

|

||||

)

|

||||

|

||||

connector.load_credentials(

|

||||

{

|

||||

"gitlab_access_token": os.environ["GITLAB_ACCESS_TOKEN"],

|

||||

"gitlab_url": os.environ["GITLAB_URL"],

|

||||

}

|

||||

)

|

||||

document_batches = connector.load_from_state()

|

||||

for f in document_batches:

|

||||

print("Batch:", f)

|

||||

print("Finished loading from state.")

|

||||

@ -5,7 +5,7 @@ from abc import ABC, abstractmethod

|

||||

from enum import IntFlag, auto

|

||||

from types import TracebackType

|

||||

from typing import Any, Dict, Generator, TypeVar, Generic, Callable, TypeAlias

|

||||

|

||||

from collections.abc import Iterator

|

||||

from anthropic import BaseModel

|

||||

|

||||

from common.data_source.models import (

|

||||

@ -16,6 +16,7 @@ from common.data_source.models import (

|

||||

SecondsSinceUnixEpoch, GenerateSlimDocumentOutput

|

||||

)

|

||||

|

||||

GenerateDocumentsOutput = Iterator[list[Document]]

|

||||

|

||||

class LoadConnector(ABC):

|

||||

"""Load connector interface"""

|

||||

|

||||

@ -78,14 +78,21 @@ class DoclingParser(RAGFlowPdfParser):

|

||||

def __images__(self, fnm, zoomin: int = 1, page_from=0, page_to=600, callback=None):

|

||||

self.page_from = page_from

|

||||

self.page_to = page_to

|

||||

bytes_io = None

|

||||

try:

|

||||

opener = pdfplumber.open(fnm) if isinstance(fnm, (str, PathLike)) else pdfplumber.open(BytesIO(fnm))

|

||||

if not isinstance(fnm, (str, PathLike)):

|

||||

bytes_io = BytesIO(fnm)

|

||||

|

||||

opener = pdfplumber.open(fnm) if isinstance(fnm, (str, PathLike)) else pdfplumber.open(bytes_io)

|

||||

with opener as pdf:

|

||||

pages = pdf.pages[page_from:page_to]

|

||||

self.page_images = [p.to_image(resolution=72 * zoomin, antialias=True).original for p in pages]

|

||||

except Exception as e:

|

||||

self.page_images = []

|

||||

self.logger.exception(e)

|

||||

finally:

|

||||

if bytes_io:

|

||||

bytes_io.close()

|

||||

|

||||

def _make_line_tag(self,bbox: _BBox) -> str:

|

||||

if bbox is None:

|

||||

|

||||

@ -16,6 +16,7 @@

|

||||

|

||||

import logging

|

||||

import sys

|

||||

import ast

|

||||

import six

|

||||

import cv2

|

||||

import numpy as np

|

||||

@ -108,7 +109,14 @@ class NormalizeImage:

|

||||

|

||||

def __init__(self, scale=None, mean=None, std=None, order='chw', **kwargs):

|

||||

if isinstance(scale, str):

|

||||

scale = eval(scale)

|

||||

try:

|

||||

scale = float(scale)

|

||||

except ValueError:

|

||||

if '/' in scale:

|

||||

parts = scale.split('/')

|

||||

scale = ast.literal_eval(parts[0]) / ast.literal_eval(parts[1])

|

||||

else:

|

||||

scale = ast.literal_eval(scale)

|

||||

self.scale = np.float32(scale if scale is not None else 1.0 / 255.0)

|

||||

mean = mean if mean is not None else [0.485, 0.456, 0.406]

|

||||

std = std if std is not None else [0.229, 0.224, 0.225]

|

||||

|

||||

@ -1,3 +1,10 @@

|

||||

# -----------------------------------------------------------------------------

|

||||

# SECURITY WARNING: DO NOT DEPLOY WITH DEFAULT PASSWORDS

|

||||

# For non-local deployments, please change all passwords (ELASTIC_PASSWORD,

|

||||

# MYSQL_PASSWORD, MINIO_PASSWORD, etc.) to strong, unique values.

|

||||

# You can generate a random string using: openssl rand -hex 32

|

||||

# -----------------------------------------------------------------------------

|

||||

|

||||

# ------------------------------

|

||||

# docker env var for specifying vector db type at startup

|

||||

# (based on the vector db type, the corresponding docker

|

||||

@ -30,6 +37,7 @@ ES_HOST=es01

|

||||

ES_PORT=1200

|

||||

|

||||

# The password for Elasticsearch.

|

||||

# WARNING: Change this for production!

|

||||

ELASTIC_PASSWORD=infini_rag_flow

|

||||

|

||||

# the hostname where OpenSearch service is exposed, set it not the same as elasticsearch

|

||||

@ -85,6 +93,7 @@ OB_DATAFILE_SIZE=${OB_DATAFILE_SIZE:-20G}

|

||||

OB_LOG_DISK_SIZE=${OB_LOG_DISK_SIZE:-20G}

|

||||

|

||||

# The password for MySQL.

|

||||

# WARNING: Change this for production!

|

||||

MYSQL_PASSWORD=infini_rag_flow

|

||||

# The hostname where the MySQL service is exposed

|

||||

MYSQL_HOST=mysql

|

||||

|

||||

@ -34,7 +34,7 @@ Enabling TOC extraction requires significant memory, computational resources, an

|

||||

|

||||

|

||||

|

||||

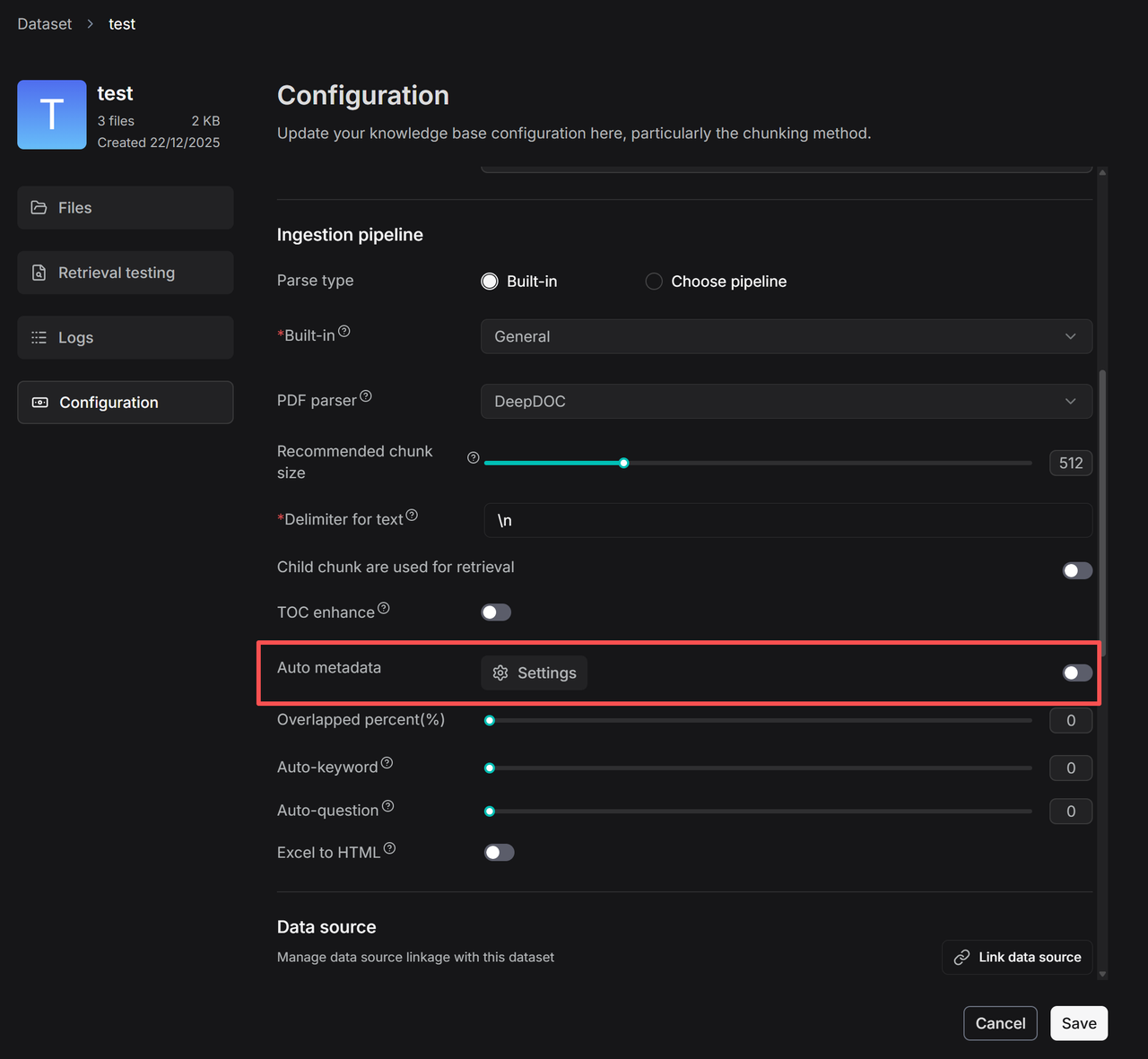

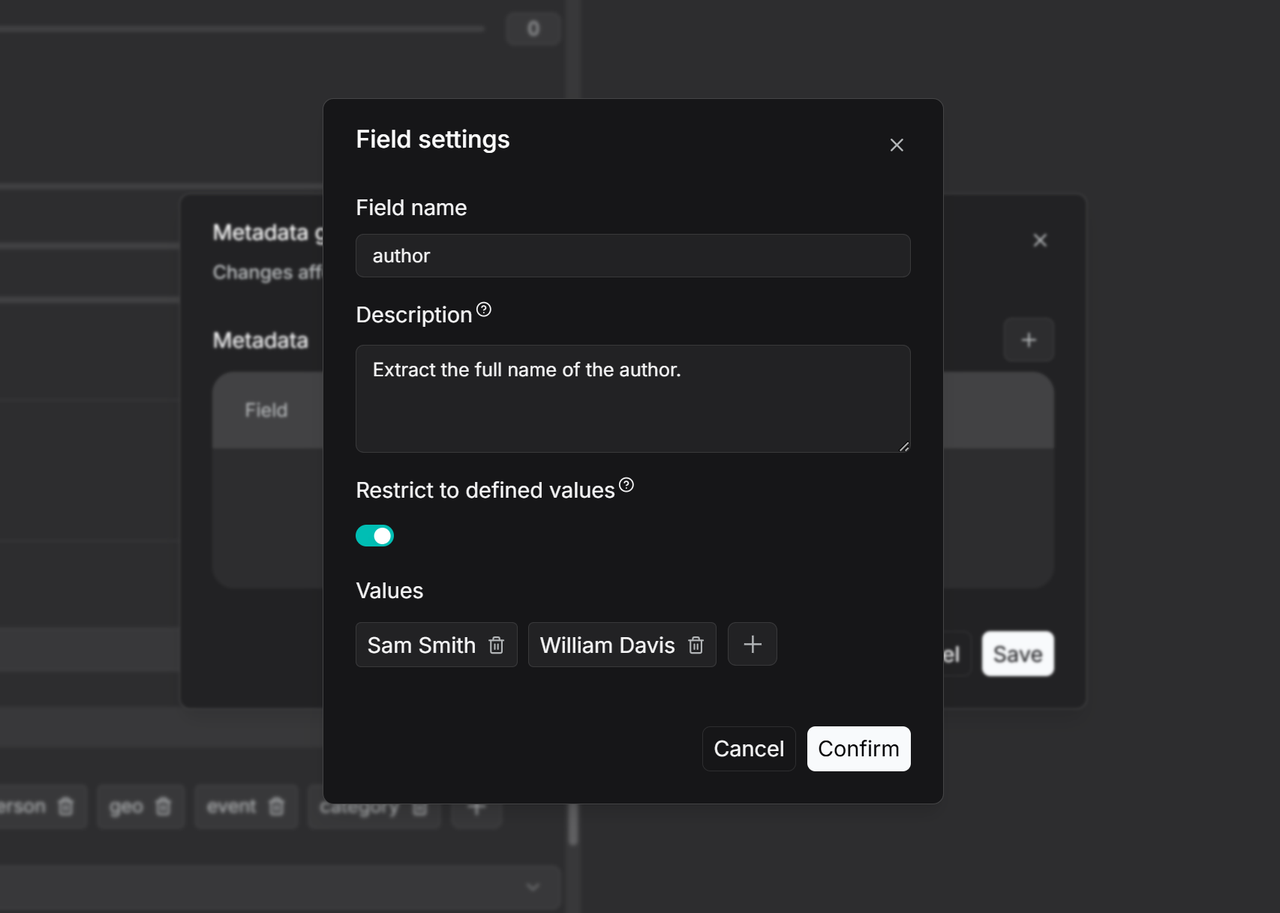

3. Click **+** to add new fields and enter the congiruation page.

|

||||

3. Click **+** to add new fields and enter the configuration page.

|

||||

|

||||

|

||||

|

||||

|

||||

@ -340,13 +340,13 @@ Application startup complete.

|

||||

|

||||

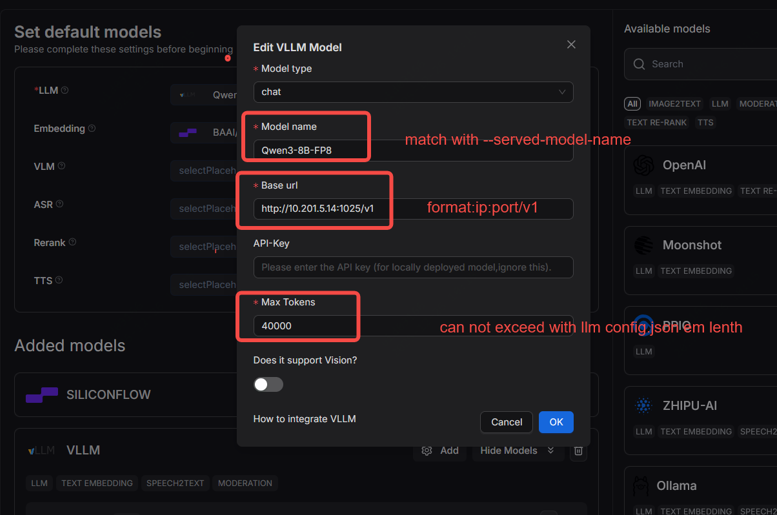

setting->model providers->search->vllm->add ,configure as follow:

|

||||

|

||||

|

||||

|

||||

|

||||

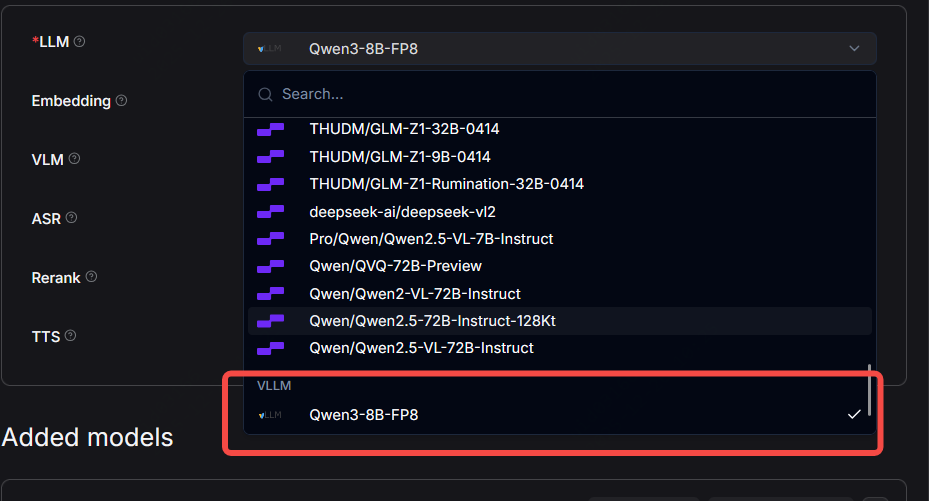

select vllm chat model as default llm model as follow:

|

||||

|

||||

|

||||

### 5.3 chat with vllm chat model

|

||||

create chat->create conversations-chat as follow:

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@ -1603,7 +1603,7 @@ In streaming mode, not all responses include a reference, as this depends on the

|

||||

|

||||

##### question: `str`

|

||||

|

||||

The question to start an AI-powered conversation. Ifthe **Begin** component takes parameters, a question is not required.

|

||||

The question to start an AI-powered conversation. If the **Begin** component takes parameters, a question is not required.

|

||||

|

||||

##### stream: `bool`

|

||||

|

||||

|

||||

@ -9,7 +9,7 @@ Key features, improvements and bug fixes in the latest releases.

|

||||

|

||||

## v0.23.0

|

||||

|

||||

Released on December 29, 2025.

|

||||

Released on December 27, 2025.

|

||||

|

||||

### New features

|

||||

|

||||

@ -32,7 +32,7 @@ Released on December 29, 2025.

|

||||

|

||||

### Improvements

|

||||

|

||||

- Bumps RAGFlow's document engine, [Infinity](https://github.com/infiniflow/infinity) to v0.6.13 (backward compatible).

|

||||

- Bumps RAGFlow's document engine, [Infinity](https://github.com/infiniflow/infinity) to v0.6.15 (backward compatible).

|

||||

|

||||

### Data sources

|

||||

|

||||

|

||||

133

helm/README.md

Normal file

133

helm/README.md

Normal file

@ -0,0 +1,133 @@

|

||||

# RAGFlow Helm Chart

|

||||

|

||||

A Helm chart to deploy RAGFlow and its dependencies on Kubernetes.

|

||||

|

||||

- Components: RAGFlow (web/api) and optional dependencies (Infinity/Elasticsearch/OpenSearch, MySQL, MinIO, Redis)

|

||||

- Requirements: Kubernetes >= 1.24, Helm >= 3.10

|

||||

|

||||

## Install

|

||||

|

||||

```bash

|

||||

helm upgrade --install ragflow ./ \

|

||||

--namespace ragflow --create-namespace

|

||||

```

|

||||

|

||||

Uninstall:

|

||||

```bash

|

||||

helm uninstall ragflow -n ragflow

|

||||

```

|

||||

|

||||

## Global Settings

|

||||

|

||||

- `global.repo`: Prepend a global image registry prefix for all images.

|

||||

- Behavior: Replaces the registry part and keeps the image path (e.g., `quay.io/minio/minio` -> `registry.example.com/myproj/minio/minio`).

|

||||

- Example: `global.repo: "registry.example.com/myproj"`

|

||||

- `global.imagePullSecrets`: List of image pull secrets applied to all Pods.

|

||||

- Example:

|

||||

```yaml

|

||||

global:

|

||||

imagePullSecrets:

|

||||

- name: regcred

|

||||

```

|

||||

|

||||

## External Services (MySQL / MinIO / Redis)

|

||||

|

||||

The chart can deploy in-cluster services or connect to external ones. Toggle with `*.enabled`. When disabled, provide host/port via `env.*`.

|

||||

|

||||

- MySQL

|

||||

- `mysql.enabled`: default `true`

|

||||

- If `false`, set:

|

||||

- `env.MYSQL_HOST` (required), `env.MYSQL_PORT` (default `3306`)

|

||||

- `env.MYSQL_DBNAME` (default `rag_flow`), `env.MYSQL_PASSWORD` (required)

|

||||

- `env.MYSQL_USER` (default `root` if omitted)

|

||||

- MinIO

|

||||

- `minio.enabled`: default `true`

|

||||

- Configure:

|

||||

- `env.MINIO_HOST` (optional external host), `env.MINIO_PORT` (default `9000`)

|

||||

- `env.MINIO_ROOT_USER` (default `rag_flow`), `env.MINIO_PASSWORD` (optional)

|

||||

- Redis (Valkey)

|

||||

- `redis.enabled`: default `true`

|

||||

- If `false`, set:

|

||||

- `env.REDIS_HOST` (required), `env.REDIS_PORT` (default `6379`)

|

||||

- `env.REDIS_PASSWORD` (optional; empty disables auth if server allows)

|

||||

|

||||

Notes:

|

||||

- When `*.enabled=true`, the chart renders in-cluster resources and injects corresponding `*_HOST`/`*_PORT` automatically.

|

||||

- Sensitive variables like `MYSQL_PASSWORD` are required; `MINIO_PASSWORD` and `REDIS_PASSWORD` are optional. All secrets are stored in a Secret.

|

||||

|

||||

### Example: use external MySQL, MinIO, and Redis

|

||||

|

||||

```yaml

|

||||

# values.override.yaml

|

||||

mysql:

|

||||

enabled: false # use external MySQL

|

||||

minio:

|

||||

enabled: false # use external MinIO (S3 compatible)

|

||||

redis:

|

||||

enabled: false # use external Redis/Valkey

|

||||

|

||||

env:

|

||||

# MySQL

|

||||

MYSQL_HOST: mydb.example.com

|

||||

MYSQL_PORT: "3306"

|

||||

MYSQL_USER: root

|

||||

MYSQL_DBNAME: rag_flow

|

||||

MYSQL_PASSWORD: "<your-mysql-password>"

|

||||

|

||||

# MinIO

|

||||

MINIO_HOST: s3.example.com

|

||||

MINIO_PORT: "9000"

|

||||

MINIO_ROOT_USER: rag_flow

|

||||

MINIO_PASSWORD: "<your-minio-secret>"

|

||||

|

||||

# Redis

|

||||

REDIS_HOST: redis.example.com

|

||||

REDIS_PORT: "6379"

|

||||

REDIS_PASSWORD: "<your-redis-pass>"

|

||||

```

|

||||

|

||||

Apply:

|

||||

```bash

|

||||

helm upgrade --install ragflow ./helm -n ragflow -f values.override.yaml

|

||||

```

|

||||

|

||||

## Document Engine Selection

|

||||

|

||||

Choose one of `infinity` (default), `elasticsearch`, or `opensearch` via `env.DOC_ENGINE`. The chart renders only the selected engine and sets the appropriate host variables.

|

||||

|

||||

```yaml

|

||||

env:

|

||||

DOC_ENGINE: infinity # or: elasticsearch | opensearch

|

||||

# For elasticsearch

|

||||

ELASTIC_PASSWORD: "<es-pass>"

|

||||

# For opensearch

|

||||

OPENSEARCH_PASSWORD: "<os-pass>"

|

||||

```

|

||||

|

||||

## Ingress

|

||||

|

||||

Expose the web UI via Ingress:

|

||||

|

||||

```yaml

|

||||

ingress:

|

||||

enabled: true

|

||||

className: nginx

|

||||

hosts:

|

||||

- host: ragflow.example.com

|

||||

paths:

|

||||

- path: /

|

||||

pathType: Prefix

|

||||

```

|

||||

|

||||

## Validate the Chart

|

||||

|

||||

```bash

|

||||

helm lint ./helm

|

||||

helm template ragflow ./helm > rendered.yaml

|

||||

```

|

||||

|

||||

## Notes

|

||||

|

||||

- By default, the chart uses `DOC_ENGINE: infinity` and deploys in-cluster MySQL, MinIO, and Redis.

|

||||

- The chart injects derived `*_HOST`/`*_PORT` and required secrets into a single Secret (`<release>-ragflow-env-config`).

|

||||

- `global.repo` and `global.imagePullSecrets` apply to all Pods; per-component `*.image.pullSecrets` still work and are merged with global settings.

|

||||

@ -42,6 +42,31 @@ app.kubernetes.io/version: {{ .Chart.AppVersion | quote }}

|

||||

app.kubernetes.io/managed-by: {{ .Release.Service }}

|

||||

{{- end }}