Mirror of https://github.com/infiniflow/ragflow.git (synced 2025-12-29 16:05:35 +08:00)

Update deploy_local_llm.mdx (#12276)

### Type of change

- [x] Documentation Update

@@ -340,13 +340,13 @@ Application startup complete.

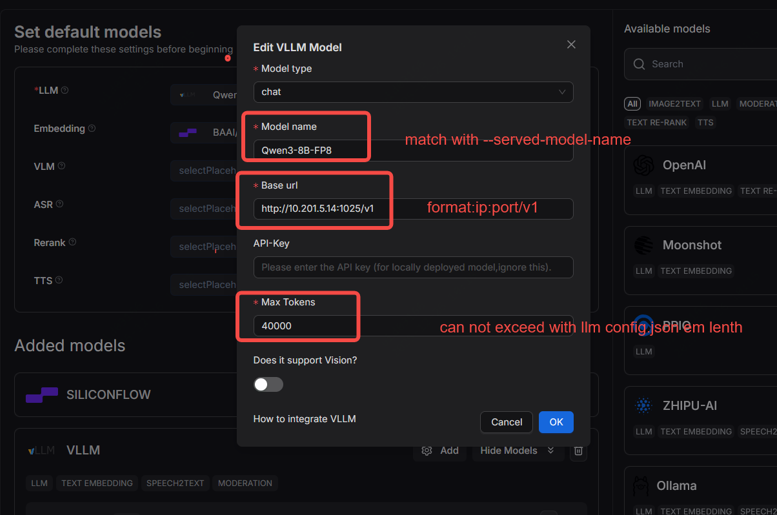

Settings -> Model providers -> search for vLLM -> Add, then configure as follows:

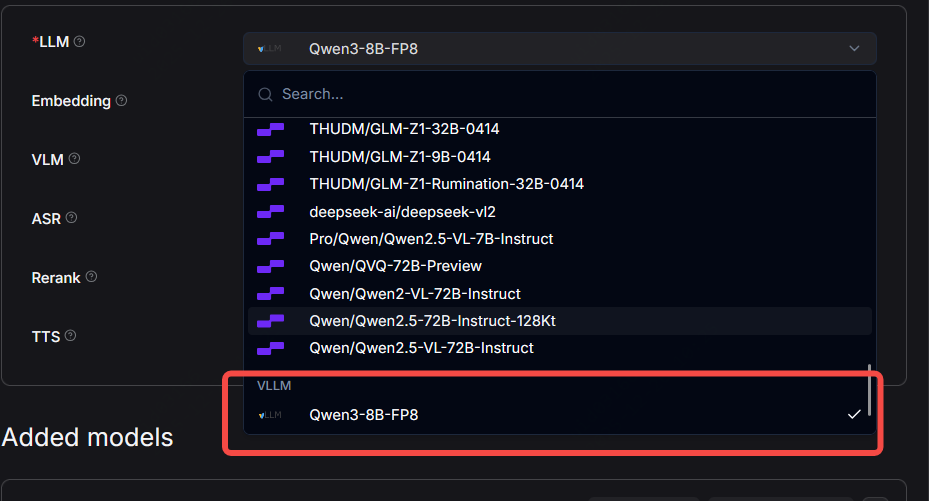
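
Before or after saving the provider form, it can help to confirm that the vLLM server answers chat requests on its own. The following is a minimal sketch, not part of the original guide: it assumes vLLM is running its OpenAI-compatible server, and the base URL `http://localhost:8000/v1` and the model name `my-vllm-chat-model` are placeholders to replace with the values entered in the form above.

```python
import json
import urllib.request

# Assumptions (not taken from the guide): the vLLM OpenAI-compatible server
# is reachable at BASE_URL, and MODEL_NAME is the name it serves the model under.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "my-vllm-chat-model"  # hypothetical placeholder


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build (without sending) a POST request for the /chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 32,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed if the server was started with an API key.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(base_url, model, prompt, api_key=None, timeout=30):
    """Send one chat request and return the text of the first choice."""
    req = build_chat_request(base_url, model, prompt, api_key)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask(BASE_URL, MODEL_NAME, "Say hello in one sentence."))
```

If this script returns a reply, the same base URL and model name should be usable in the provider form. If RAGFlow runs in Docker, `localhost` inside the container may not point at the host running vLLM, so the base URL in the form may need the host's address instead.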

Select the vLLM chat model as the default LLM, as follows:

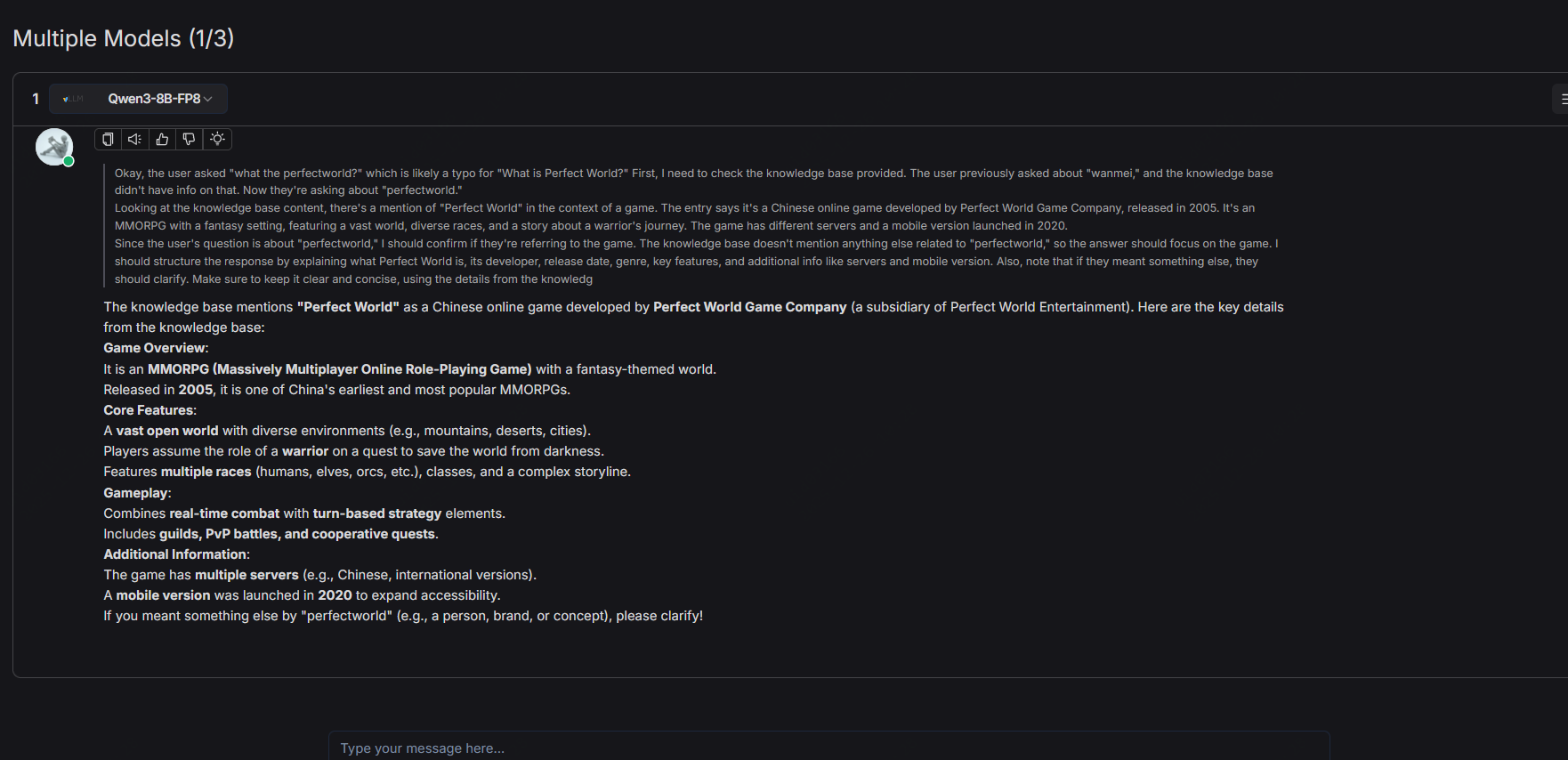

### 5.3 Chat with the vLLM chat model

Create chat -> create conversation -> chat, as follows:
