Compare commits

21 Commits

v0.22.0

...

d039d1e73d

| Author | SHA1 | Date | |

|---|---|---|---|

| d039d1e73d | |||

| d050ef568d | |||

| 028c2d83e9 | |||

| b5d6a6e8f2 | |||

| 5dfdbcce3a | |||

| 4fae40f66a | |||

| a1b947ffd6 | |||

| f9c7404bee | |||

| 5c1791d7f0 | |||

| e82617f6de | |||

| a7abc57f68 | |||

| cf1f523d03 | |||

| ccb255919a | |||

| b68c84b52e | |||

| 93cf0258c3 | |||

| b79fef1ca8 | |||

| 2b50de3186 | |||

| d8ef22db68 | |||

| 592f3b1555 | |||

| 3404469e2a | |||

| 63d7382dc9 |

8

.github/workflows/release.yml

vendored

@ -88,7 +88,9 @@ jobs:

|

||||

with:

|

||||

context: .

|

||||

push: true

|

||||

tags: infiniflow/ragflow:${{ env.RELEASE_TAG }}

|

||||

tags: |

|

||||

infiniflow/ragflow:${{ env.RELEASE_TAG }}

|

||||

infiniflow/ragflow:latest-full

|

||||

file: Dockerfile

|

||||

platforms: linux/amd64

|

||||

|

||||

@ -98,7 +100,9 @@ jobs:

|

||||

with:

|

||||

context: .

|

||||

push: true

|

||||

tags: infiniflow/ragflow:${{ env.RELEASE_TAG }}-slim

|

||||

tags: |

|

||||

infiniflow/ragflow:${{ env.RELEASE_TAG }}-slim

|

||||

infiniflow/ragflow:latest-slim

|

||||

file: Dockerfile

|

||||

build-args: LIGHTEN=1

|

||||

platforms: linux/amd64

|

||||

|

||||

@ -83,7 +83,7 @@

|

||||

},

|

||||

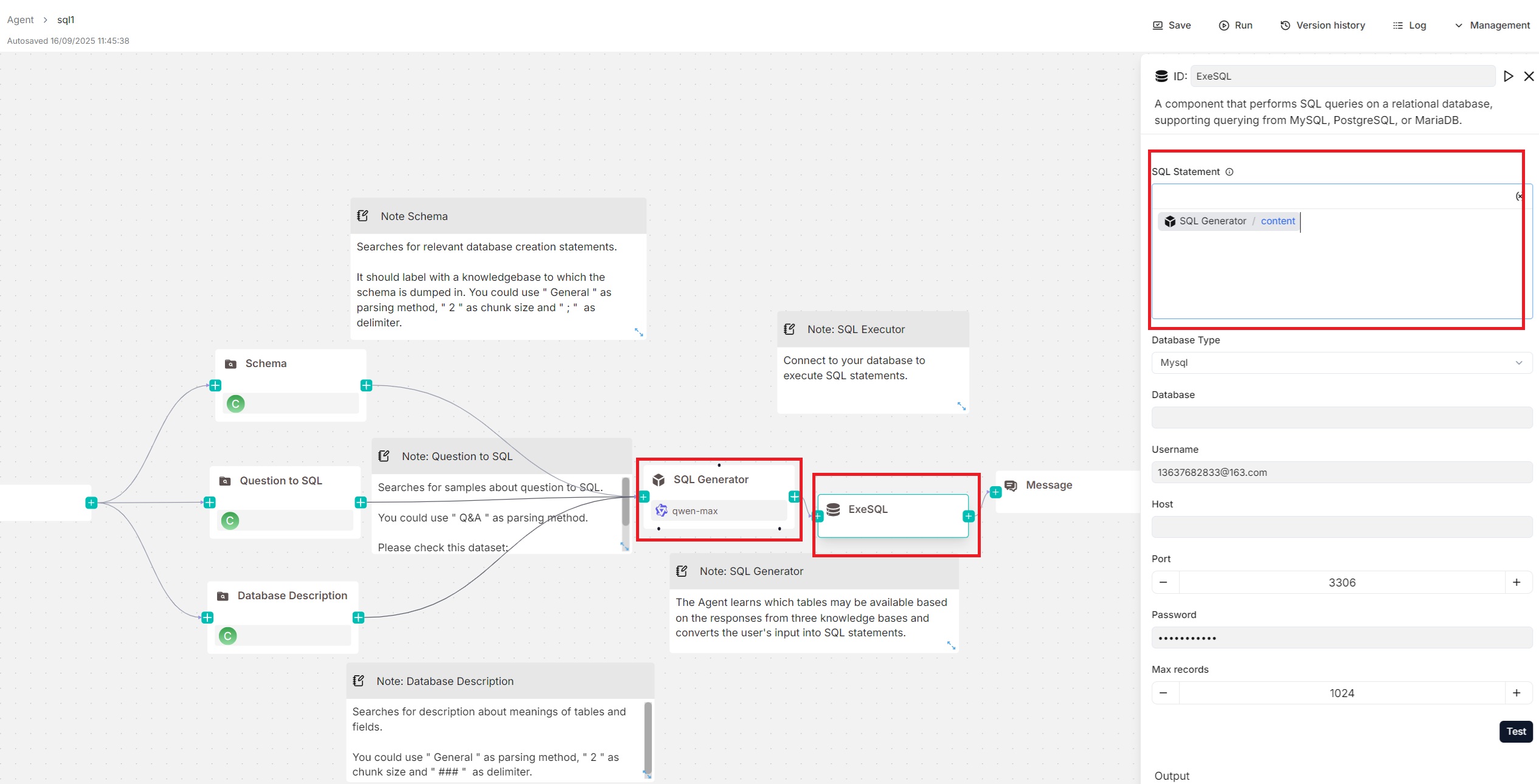

"password": "20010812Yy!",

|

||||

"port": 3306,

|

||||

"sql": "Agent:WickedGoatsDivide@content",

|

||||

"sql": "{Agent:WickedGoatsDivide@content}",

|

||||

"username": "13637682833@163.com"

|

||||

}

|

||||

},

|

||||

@ -114,9 +114,7 @@

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"ed31364c727211f0bdb2bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -124,7 +122,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -145,9 +143,7 @@

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"0f968106727311f08357bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -155,7 +151,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -176,9 +172,7 @@

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"4ad1f9d0727311f0827dbafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -186,7 +180,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -347,9 +341,7 @@

|

||||

"form": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"ed31364c727211f0bdb2bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -357,7 +349,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -387,9 +379,7 @@

|

||||

"form": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"0f968106727311f08357bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -397,7 +387,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -427,9 +417,7 @@

|

||||

"form": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"4ad1f9d0727311f0827dbafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -437,7 +425,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -539,7 +527,7 @@

|

||||

},

|

||||

"password": "20010812Yy!",

|

||||

"port": 3306,

|

||||

"sql": "Agent:WickedGoatsDivide@content",

|

||||

"sql": "{Agent:WickedGoatsDivide@content}",

|

||||

"username": "13637682833@163.com"

|

||||

},

|

||||

"label": "ExeSQL",

|

||||

|

||||

@ -157,7 +157,7 @@ class CodeExec(ToolBase, ABC):

|

||||

|

||||

try:

|

||||

resp = requests.post(url=f"http://{settings.SANDBOX_HOST}:9385/run", json=code_req, timeout=os.environ.get("COMPONENT_EXEC_TIMEOUT", 10*60))

|

||||

logging.info(f"http://{settings.SANDBOX_HOST}:9385/run", code_req, resp.status_code)

|

||||

logging.info(f"http://{settings.SANDBOX_HOST}:9385/run, code_req: {code_req}, resp.status_code {resp.status_code}:")

|

||||

if resp.status_code != 200:

|

||||

resp.raise_for_status()

|

||||

body = resp.json()

|

||||

|

||||

@ -53,7 +53,7 @@ class ExeSQLParam(ToolParamBase):

|

||||

self.max_records = 1024

|

||||

|

||||

def check(self):

|

||||

self.check_valid_value(self.db_type, "Choose DB type", ['mysql', 'postgresql', 'mariadb', 'mssql'])

|

||||

self.check_valid_value(self.db_type, "Choose DB type", ['mysql', 'postgres', 'mariadb', 'mssql'])

|

||||

self.check_empty(self.database, "Database name")

|

||||

self.check_empty(self.username, "database username")

|

||||

self.check_empty(self.host, "IP Address")

|

||||

@ -111,7 +111,7 @@ class ExeSQL(ToolBase, ABC):

|

||||

if self._param.db_type in ["mysql", "mariadb"]:

|

||||

db = pymysql.connect(db=self._param.database, user=self._param.username, host=self._param.host,

|

||||

port=self._param.port, password=self._param.password)

|

||||

elif self._param.db_type == 'postgresql':

|

||||

elif self._param.db_type == 'postgres':

|

||||

db = psycopg2.connect(dbname=self._param.database, user=self._param.username, host=self._param.host,

|

||||

port=self._param.port, password=self._param.password)

|

||||

elif self._param.db_type == 'mssql':

|

||||

|

||||

105

api/apps/HEALTHCHECK_TESTING.md

Normal file

@ -0,0 +1,105 @@

|

||||

# 健康检查与 Kubernetes 探针简明说明

|

||||

|

||||

本文件说明:什么是 K8s 探针、如何用 `/v1/system/healthz` 做健康检查,以及下文用例中的关键词含义。

|

||||

|

||||

## 什么是 K8s 探针(Probe)

|

||||

- 探针是 K8s 用来“探测”容器是否健康/可对外服务的机制。

|

||||

- 常见三类:

|

||||

- livenessProbe:活性探针。失败时 K8s 会重启容器,用于“应用卡死/失去连接时自愈”。

|

||||

- readinessProbe:就绪探针。失败时 Endpoint 不会被加入 Service 负载均衡,用于“应用尚未准备好时不接流量”。

|

||||

- startupProbe:启动探针。给慢启动应用更长的初始化窗口,期间不执行 liveness/readiness。

|

||||

- 这些探针通常通过 HTTP GET 访问一个公开且轻量的健康端点(无需鉴权),以 HTTP 状态码判定结果:200=通过;5xx/超时=失败。

|

||||

|

||||

## 本项目健康端点

|

||||

- 已实现:`GET /v1/system/healthz`(无需认证)。

|

||||

- 语义:

|

||||

- 200:关键依赖正常。

|

||||

- 500:任一关键依赖异常(当前判定为 DB 或 Chat)。

|

||||

- 响应体:JSON,最小字段 `status, db, chat`;并包含 `redis, doc_engine, storage` 等可观测项。失败项会在 `_meta` 中包含 `error/elapsed`。

|

||||

- 示例(DB 故障):

|

||||

```json

|

||||

{"status":"nok","chat":"ok","db":"nok"}

|

||||

```

|

||||

|

||||

## 用例背景(Problem/use case)

|

||||

- 现状:Ragflow 跑在 K8s,数据库是 AWS RDS Postgres,凭证由 Secret Manager 管理并每 7 天轮换。轮换后应用连接失效,需要手动重启 Pod 才能重新建立连接。

|

||||

- 目标:通过 K8s 探针自动化检测并重启异常 Pod,减少人工操作。

|

||||

- 需求:一个“无需鉴权”的公共健康端点,能在依赖异常时返回非 200(如 500)且提供 JSON 详情。

|

||||

- 现已满足:`/v1/system/healthz` 正是为此设计。

|

||||

|

||||

## 关键术语解释(对应你提供的描述)

|

||||

- Ragflow instance:部署在 K8s 的 Ragflow 服务。

|

||||

- AWS RDS Postgres:托管的 PostgreSQL 数据库实例。

|

||||

- Secret Manager rotation:Secrets 定期轮换(每 7 天),会导致旧连接失效。

|

||||

- Probes(K8s 探针):liveness/readiness,用于自动重启或摘除不健康实例。

|

||||

- Public endpoint without API key:无需 Authorization 的 HTTP 路由,便于探针直接访问。

|

||||

- Dependencies statuses:依赖健康状态(db、chat、redis、doc_engine、storage 等)。

|

||||

- HTTP 500 with JSON:当依赖异常时返回 500,并附带 JSON 说明哪个子系统失败。

|

||||

|

||||

## 快速测试

|

||||

- 正常:

|

||||

```bash

|

||||

curl -i http://<host>/v1/system/healthz

|

||||

```

|

||||

- 制造 DB 故障(docker-compose 示例):

|

||||

```bash

|

||||

docker compose stop db && curl -i http://<host>/v1/system/healthz

|

||||

```

|

||||

(预期 500,JSON 中 `db:"nok"`)

|

||||

|

||||

## 更完整的测试清单

|

||||

### 1) 仅查看 HTTP 状态码

|

||||

```bash

|

||||

curl -s -o /dev/null -w "%{http_code}\n" http://<host>/v1/system/healthz

|

||||

```

|

||||

期望:`200` 或 `500`。

|

||||

|

||||

### 2) Windows PowerShell

|

||||

```powershell

|

||||

# 状态码

|

||||

(Invoke-WebRequest -Uri "http://<host>/v1/system/healthz" -Method GET -TimeoutSec 3 -ErrorAction SilentlyContinue).StatusCode

|

||||

# 完整响应

|

||||

Invoke-RestMethod -Uri "http://<host>/v1/system/healthz" -Method GET

|

||||

```

|

||||

|

||||

### 3) 通过 kubectl 端口转发本地测试

|

||||

```bash

|

||||

# 前端/网关暴露端口不同环境自行调整

|

||||

kubectl port-forward deploy/<your-deploy> 8080:80 -n <ns>

|

||||

curl -i http://127.0.0.1:8080/v1/system/healthz

|

||||

```

|

||||

|

||||

### 4) 制造常见失败场景

|

||||

- DB 失败(推荐):

|

||||

```bash

|

||||

docker compose stop db

|

||||

curl -i http://<host>/v1/system/healthz # 预期 500

|

||||

```

|

||||

- Chat 失败(可选):将 `CHAT_CFG` 的 `factory`/`base_url` 设为无效并重启后端,再请求应为 500,且 `chat:"nok"`。

|

||||

- Redis/存储/文档引擎:停用对应服务后再次请求,可在 JSON 中看到相应字段为 `"nok"`(不影响 200/500 判定)。

|

||||

|

||||

### 5) 浏览器验证

|

||||

- 直接打开 `http://<host>/v1/system/healthz`,在 DevTools Network 查看 200/500;页面正文就是 JSON。

|

||||

- 反向代理注意:若有自定义 500 错页,需对 `/healthz` 关闭错误页拦截(如 `proxy_intercept_errors off;`)。

|

||||

|

||||

## K8s 探针示例

|

||||

```yaml

|

||||

readinessProbe:

|

||||

httpGet:

|

||||

path: /v1/system/healthz

|

||||

port: 80

|

||||

initialDelaySeconds: 5

|

||||

periodSeconds: 10

|

||||

timeoutSeconds: 2

|

||||

failureThreshold: 1

|

||||

livenessProbe:

|

||||

httpGet:

|

||||

path: /v1/system/healthz

|

||||

port: 80

|

||||

initialDelaySeconds: 10

|

||||

periodSeconds: 10

|

||||

timeoutSeconds: 2

|

||||

failureThreshold: 3

|

||||

```

|

||||

|

||||

提示:如有反向代理(Nginx)自定义 500 错页,需对 `/healthz` 关闭错误页拦截,以便保留 JSON。

|

||||

@ -28,6 +28,7 @@ from api.db import CanvasCategory, FileType

|

||||

from api.db.services.canvas_service import CanvasTemplateService, UserCanvasService, API4ConversationService

|

||||

from api.db.services.document_service import DocumentService

|

||||

from api.db.services.file_service import FileService

|

||||

from api.db.services.task_service import queue_dataflow

|

||||

from api.db.services.user_service import TenantService

|

||||

from api.db.services.user_canvas_version import UserCanvasVersionService

|

||||

from api.settings import RetCode

|

||||

@ -48,14 +49,6 @@ def templates():

|

||||

return get_json_result(data=[c.to_dict() for c in CanvasTemplateService.query(canvas_category=CanvasCategory.Agent)])

|

||||

|

||||

|

||||

@manager.route('/list', methods=['GET']) # noqa: F821

|

||||

@login_required

|

||||

def canvas_list():

|

||||

return get_json_result(data=sorted([c.to_dict() for c in \

|

||||

UserCanvasService.query(user_id=current_user.id, canvas_category=CanvasCategory.Agent)], key=lambda x: x["update_time"]*-1)

|

||||

)

|

||||

|

||||

|

||||

@manager.route('/rm', methods=['POST']) # noqa: F821

|

||||

@validate_request("canvas_ids")

|

||||

@login_required

|

||||

@ -77,9 +70,10 @@ def save():

|

||||

if not isinstance(req["dsl"], str):

|

||||

req["dsl"] = json.dumps(req["dsl"], ensure_ascii=False)

|

||||

req["dsl"] = json.loads(req["dsl"])

|

||||

cate = req.get("canvas_category", CanvasCategory.Agent)

|

||||

if "id" not in req:

|

||||

req["user_id"] = current_user.id

|

||||

if UserCanvasService.query(user_id=current_user.id, title=req["title"].strip(), canvas_category=CanvasCategory.Agent):

|

||||

if UserCanvasService.query(user_id=current_user.id, title=req["title"].strip(), canvas_category=cate):

|

||||

return get_data_error_result(message=f"{req['title'].strip()} already exists.")

|

||||

req["id"] = get_uuid()

|

||||

if not UserCanvasService.save(**req):

|

||||

@ -148,6 +142,13 @@ def run():

|

||||

if not isinstance(cvs.dsl, str):

|

||||

cvs.dsl = json.dumps(cvs.dsl, ensure_ascii=False)

|

||||

|

||||

if cvs.canvas_category == CanvasCategory.DataFlow:

|

||||

task_id = get_uuid()

|

||||

ok, error_message = queue_dataflow(tenant_id=user_id, flow_id=req["id"], task_id=task_id, file=files[0], priority=0)

|

||||

if not ok:

|

||||

return get_data_error_result(message=error_message)

|

||||

return get_json_result(data={"message_id": task_id})

|

||||

|

||||

try:

|

||||

canvas = Canvas(cvs.dsl, current_user.id, req["id"])

|

||||

except Exception as e:

|

||||

@ -332,7 +333,7 @@ def test_db_connect():

|

||||

if req["db_type"] in ["mysql", "mariadb"]:

|

||||

db = MySQLDatabase(req["database"], user=req["username"], host=req["host"], port=req["port"],

|

||||

password=req["password"])

|

||||

elif req["db_type"] == 'postgresql':

|

||||

elif req["db_type"] == 'postgres':

|

||||

db = PostgresqlDatabase(req["database"], user=req["username"], host=req["host"], port=req["port"],

|

||||

password=req["password"])

|

||||

elif req["db_type"] == 'mssql':

|

||||

@ -383,22 +384,31 @@ def getversion( version_id):

|

||||

return get_json_result(data=f"Error getting history file: {e}")

|

||||

|

||||

|

||||

@manager.route('/listteam', methods=['GET']) # noqa: F821

|

||||

@manager.route('/list', methods=['GET']) # noqa: F821

|

||||

@login_required

|

||||

def list_canvas():

|

||||

keywords = request.args.get("keywords", "")

|

||||

page_number = int(request.args.get("page", 1))

|

||||

items_per_page = int(request.args.get("page_size", 150))

|

||||

orderby = request.args.get("orderby", "create_time")

|

||||

desc = request.args.get("desc", True)

|

||||

try:

|

||||

canvas_category = request.args.get("canvas_category")

|

||||

if request.args.get("desc", "true").lower() == "false":

|

||||

desc = False

|

||||

else:

|

||||

desc = True

|

||||

owner_ids = request.args.get("owner_ids", [])

|

||||

if not owner_ids:

|

||||

tenants = TenantService.get_joined_tenants_by_user_id(current_user.id)

|

||||

tenants = [m["tenant_id"] for m in tenants]

|

||||

canvas, total = UserCanvasService.get_by_tenant_ids(

|

||||

[m["tenant_id"] for m in tenants], current_user.id, page_number,

|

||||

items_per_page, orderby, desc, keywords, canvas_category=CanvasCategory.Agent)

|

||||

return get_json_result(data={"canvas": canvas, "total": total})

|

||||

except Exception as e:

|

||||

return server_error_response(e)

|

||||

tenants, current_user.id, page_number,

|

||||

items_per_page, orderby, desc, keywords, canvas_category)

|

||||

else:

|

||||

tenants = owner_ids

|

||||

canvas, total = UserCanvasService.get_by_tenant_ids(

|

||||

tenants, current_user.id, 0,

|

||||

0, orderby, desc, keywords, canvas_category)

|

||||

return get_json_result(data={"canvas": canvas, "total": total})

|

||||

|

||||

|

||||

@manager.route('/setting', methods=['POST']) # noqa: F821

|

||||

|

||||

@ -182,6 +182,7 @@ def create():

|

||||

"id": get_uuid(),

|

||||

"kb_id": kb.id,

|

||||

"parser_id": kb.parser_id,

|

||||

"pipeline_id": kb.pipeline_id,

|

||||

"parser_config": kb.parser_config,

|

||||

"created_by": current_user.id,

|

||||

"type": FileType.VIRTUAL,

|

||||

@ -546,31 +547,22 @@ def get(doc_id):

|

||||

|

||||

@manager.route("/change_parser", methods=["POST"]) # noqa: F821

|

||||

@login_required

|

||||

@validate_request("doc_id", "parser_id")

|

||||

@validate_request("doc_id")

|

||||

def change_parser():

|

||||

req = request.json

|

||||

|

||||

if not DocumentService.accessible(req["doc_id"], current_user.id):

|

||||

return get_json_result(data=False, message="No authorization.", code=settings.RetCode.AUTHENTICATION_ERROR)

|

||||

try:

|

||||

e, doc = DocumentService.get_by_id(req["doc_id"])

|

||||

if not e:

|

||||

return get_data_error_result(message="Document not found!")

|

||||

if doc.parser_id.lower() == req["parser_id"].lower():

|

||||

if "parser_config" in req:

|

||||

if req["parser_config"] == doc.parser_config:

|

||||

return get_json_result(data=True)

|

||||

else:

|

||||

return get_json_result(data=True)

|

||||

|

||||

if (doc.type == FileType.VISUAL and req["parser_id"] != "picture") or (re.search(r"\.(ppt|pptx|pages)$", doc.name) and req["parser_id"] != "presentation"):

|

||||

return get_data_error_result(message="Not supported yet!")

|

||||

e, doc = DocumentService.get_by_id(req["doc_id"])

|

||||

if not e:

|

||||

return get_data_error_result(message="Document not found!")

|

||||

|

||||

def reset_doc():

|

||||

nonlocal doc

|

||||

e = DocumentService.update_by_id(doc.id, {"parser_id": req["parser_id"], "progress": 0, "progress_msg": "", "run": TaskStatus.UNSTART.value})

|

||||

if not e:

|

||||

return get_data_error_result(message="Document not found!")

|

||||

if "parser_config" in req:

|

||||

DocumentService.update_parser_config(doc.id, req["parser_config"])

|

||||

if doc.token_num > 0:

|

||||

e = DocumentService.increment_chunk_num(doc.id, doc.kb_id, doc.token_num * -1, doc.chunk_num * -1, doc.process_duration * -1)

|

||||

if not e:

|

||||

@ -581,6 +573,26 @@ def change_parser():

|

||||

if settings.docStoreConn.indexExist(search.index_name(tenant_id), doc.kb_id):

|

||||

settings.docStoreConn.delete({"doc_id": doc.id}, search.index_name(tenant_id), doc.kb_id)

|

||||

|

||||

try:

|

||||

if req.get("pipeline_id"):

|

||||

if doc.pipeline_id == req["pipeline_id"]:

|

||||

return get_json_result(data=True)

|

||||

DocumentService.update_by_id(doc.id, {"pipeline_id": req["pipeline_id"]})

|

||||

reset_doc()

|

||||

return get_json_result(data=True)

|

||||

|

||||

if doc.parser_id.lower() == req["parser_id"].lower():

|

||||

if "parser_config" in req:

|

||||

if req["parser_config"] == doc.parser_config:

|

||||

return get_json_result(data=True)

|

||||

else:

|

||||

return get_json_result(data=True)

|

||||

|

||||

if (doc.type == FileType.VISUAL and req["parser_id"] != "picture") or (re.search(r"\.(ppt|pptx|pages)$", doc.name) and req["parser_id"] != "presentation"):

|

||||

return get_data_error_result(message="Not supported yet!")

|

||||

if "parser_config" in req:

|

||||

DocumentService.update_parser_config(doc.id, req["parser_config"])

|

||||

reset_doc()

|

||||

return get_json_result(data=True)

|

||||

except Exception as e:

|

||||

return server_error_response(e)

|

||||

|

||||

@ -64,7 +64,7 @@ def create():

|

||||

e, t = TenantService.get_by_id(current_user.id)

|

||||

if not e:

|

||||

return get_data_error_result(message="Tenant not found.")

|

||||

req["embd_id"] = t.embd_id

|

||||

#req["embd_id"] = t.embd_id

|

||||

if not KnowledgebaseService.save(**req):

|

||||

return get_data_error_result()

|

||||

return get_json_result(data={"kb_id": req["id"]})

|

||||

@ -379,3 +379,19 @@ def get_meta():

|

||||

code=settings.RetCode.AUTHENTICATION_ERROR

|

||||

)

|

||||

return get_json_result(data=DocumentService.get_meta_by_kbs(kb_ids))

|

||||

|

||||

|

||||

@manager.route("/basic_info", methods=["GET"]) # noqa: F821

|

||||

@login_required

|

||||

def get_basic_info():

|

||||

kb_id = request.args.get("kb_id", "")

|

||||

if not KnowledgebaseService.accessible(kb_id, current_user.id):

|

||||

return get_json_result(

|

||||

data=False,

|

||||

message='No authorization.',

|

||||

code=settings.RetCode.AUTHENTICATION_ERROR

|

||||

)

|

||||

|

||||

basic_info = DocumentService.knowledgebase_basic_info(kb_id)

|

||||

|

||||

return get_json_result(data=basic_info)

|

||||

|

||||

@ -414,7 +414,7 @@ def agents_completion_openai_compatibility(tenant_id, agent_id):

|

||||

tenant_id,

|

||||

agent_id,

|

||||

question,

|

||||

session_id=req.get("session_id", req.get("id", "") or req.get("metadata", {}).get("id", "")),

|

||||

session_id=req.pop("session_id", req.get("id", "")) or req.get("metadata", {}).get("id", ""),

|

||||

stream=True,

|

||||

**req,

|

||||

),

|

||||

@ -432,7 +432,7 @@ def agents_completion_openai_compatibility(tenant_id, agent_id):

|

||||

tenant_id,

|

||||

agent_id,

|

||||

question,

|

||||

session_id=req.get("session_id", req.get("id", "") or req.get("metadata", {}).get("id", "")),

|

||||

session_id=req.pop("session_id", req.get("id", "")) or req.get("metadata", {}).get("id", ""),

|

||||

stream=False,

|

||||

**req,

|

||||

)

|

||||

|

||||

@ -36,6 +36,8 @@ from rag.utils.storage_factory import STORAGE_IMPL, STORAGE_IMPL_TYPE

|

||||

from timeit import default_timer as timer

|

||||

|

||||

from rag.utils.redis_conn import REDIS_CONN

|

||||

from flask import jsonify

|

||||

from api.utils.health import run_health_checks

|

||||

|

||||

@manager.route("/version", methods=["GET"]) # noqa: F821

|

||||

@login_required

|

||||

@ -169,6 +171,12 @@ def status():

|

||||

return get_json_result(data=res)

|

||||

|

||||

|

||||

@manager.route("/healthz", methods=["GET"]) # noqa: F821

|

||||

def healthz():

|

||||

result, all_ok = run_health_checks()

|

||||

return jsonify(result), (200 if all_ok else 500)

|

||||

|

||||

|

||||

@manager.route("/new_token", methods=["POST"]) # noqa: F821

|

||||

@login_required

|

||||

def new_token():

|

||||

|

||||

@ -646,6 +646,7 @@ class Knowledgebase(DataBaseModel):

|

||||

vector_similarity_weight = FloatField(default=0.3, index=True)

|

||||

|

||||

parser_id = CharField(max_length=32, null=False, help_text="default parser ID", default=ParserType.NAIVE.value, index=True)

|

||||

pipeline_id = CharField(max_length=32, null=True, help_text="Pipeline ID", index=True)

|

||||

parser_config = JSONField(null=False, default={"pages": [[1, 1000000]]})

|

||||

pagerank = IntegerField(default=0, index=False)

|

||||

status = CharField(max_length=1, null=True, help_text="is it validate(0: wasted, 1: validate)", default="1", index=True)

|

||||

@ -662,6 +663,7 @@ class Document(DataBaseModel):

|

||||

thumbnail = TextField(null=True, help_text="thumbnail base64 string")

|

||||

kb_id = CharField(max_length=256, null=False, index=True)

|

||||

parser_id = CharField(max_length=32, null=False, help_text="default parser ID", index=True)

|

||||

pipeline_id = CharField(max_length=32, null=True, help_text="pipleline ID", index=True)

|

||||

parser_config = JSONField(null=False, default={"pages": [[1, 1000000]]})

|

||||

source_type = CharField(max_length=128, null=False, default="local", help_text="where dose this document come from", index=True)

|

||||

type = CharField(max_length=32, null=False, help_text="file extension", index=True)

|

||||

@ -1020,7 +1022,6 @@ def migrate_db():

|

||||

migrate(migrator.add_column("dialog", "meta_data_filter", JSONField(null=True, default={})))

|

||||

except Exception:

|

||||

pass

|

||||

|

||||

try:

|

||||

migrate(migrator.alter_column_type("canvas_template", "title", JSONField(null=True, default=dict, help_text="Canvas title")))

|

||||

except Exception:

|

||||

@ -1037,4 +1038,12 @@ def migrate_db():

|

||||

migrate(migrator.add_column("canvas_template", "canvas_category", CharField(max_length=32, null=False, default="agent_canvas", help_text="agent_canvas|dataflow_canvas", index=True)))

|

||||

except Exception:

|

||||

pass

|

||||

try:

|

||||

migrate(migrator.add_column("knowledgebase", "pipeline_id", CharField(max_length=32, null=True, help_text="default parser ID", index=True)))

|

||||

except Exception:

|

||||

pass

|

||||

try:

|

||||

migrate(migrator.add_column("document", "pipeline_id", CharField(max_length=32, null=True, help_text="default parser ID", index=True)))

|

||||

except Exception:

|

||||

pass

|

||||

logging.disable(logging.NOTSET)

|

||||

|

||||

@ -95,7 +95,7 @@ class UserCanvasService(CommonService):

|

||||

@DB.connection_context()

|

||||

def get_by_tenant_ids(cls, joined_tenant_ids, user_id,

|

||||

page_number, items_per_page,

|

||||

orderby, desc, keywords, canvas_category=CanvasCategory.Agent,

|

||||

orderby, desc, keywords, canvas_category=None

|

||||

):

|

||||

fields = [

|

||||

cls.model.id,

|

||||

@ -122,7 +122,8 @@ class UserCanvasService(CommonService):

|

||||

TenantPermission.TEAM.value)) | (

|

||||

cls.model.user_id == user_id))

|

||||

)

|

||||

agents = agents.where(cls.model.canvas_category == canvas_category)

|

||||

if canvas_category:

|

||||

agents = agents.where(cls.model.canvas_category == canvas_category)

|

||||

if desc:

|

||||

agents = agents.order_by(cls.model.getter_by(orderby).desc())

|

||||

else:

|

||||

|

||||

@ -24,7 +24,7 @@ from io import BytesIO

|

||||

|

||||

import trio

|

||||

import xxhash

|

||||

from peewee import fn

|

||||

from peewee import fn, Case

|

||||

|

||||

from api import settings

|

||||

from api.constants import IMG_BASE64_PREFIX, FILE_NAME_LEN_LIMIT

|

||||

@ -674,6 +674,53 @@ class DocumentService(CommonService):

|

||||

return False

|

||||

|

||||

|

||||

@classmethod

|

||||

@DB.connection_context()

|

||||

def knowledgebase_basic_info(cls, kb_id: str) -> dict[str, int]:

|

||||

# cancelled: run == "2" but progress can vary

|

||||

cancelled = (

|

||||

cls.model.select(fn.COUNT(1))

|

||||

.where((cls.model.kb_id == kb_id) & (cls.model.run == TaskStatus.CANCEL))

|

||||

.scalar()

|

||||

)

|

||||

|

||||

row = (

|

||||

cls.model.select(

|

||||

# finished: progress == 1

|

||||

fn.COALESCE(fn.SUM(Case(None, [(cls.model.progress == 1, 1)], 0)), 0).alias("finished"),

|

||||

|

||||

# failed: progress == -1

|

||||

fn.COALESCE(fn.SUM(Case(None, [(cls.model.progress == -1, 1)], 0)), 0).alias("failed"),

|

||||

|

||||

# processing: 0 <= progress < 1

|

||||

fn.COALESCE(

|

||||

fn.SUM(

|

||||

Case(

|

||||

None,

|

||||

[

|

||||

(((cls.model.progress == 0) | ((cls.model.progress > 0) & (cls.model.progress < 1))), 1),

|

||||

],

|

||||

0,

|

||||

)

|

||||

),

|

||||

0,

|

||||

).alias("processing"),

|

||||

)

|

||||

.where(

|

||||

(cls.model.kb_id == kb_id)

|

||||

& ((cls.model.run.is_null(True)) | (cls.model.run != TaskStatus.CANCEL))

|

||||

)

|

||||

.dicts()

|

||||

.get()

|

||||

)

|

||||

|

||||

return {

|

||||

"processing": int(row["processing"]),

|

||||

"finished": int(row["finished"]),

|

||||

"failed": int(row["failed"]),

|

||||

"cancelled": int(cancelled),

|

||||

}

|

||||

|

||||

def queue_raptor_o_graphrag_tasks(doc, ty, priority):

|

||||

chunking_config = DocumentService.get_chunking_config(doc["id"])

|

||||

hasher = xxhash.xxh64()

|

||||

@ -702,6 +749,8 @@ def queue_raptor_o_graphrag_tasks(doc, ty, priority):

|

||||

|

||||

def get_queue_length(priority):

|

||||

group_info = REDIS_CONN.queue_info(get_svr_queue_name(priority), SVR_CONSUMER_GROUP_NAME)

|

||||

if not group_info:

|

||||

return 0

|

||||

return int(group_info.get("lag", 0) or 0)

|

||||

|

||||

|

||||

@ -847,3 +896,4 @@ def doc_upload_and_parse(conversation_id, file_objs, user_id):

|

||||

doc_id, kb.id, token_counts[doc_id], chunk_counts[doc_id], 0)

|

||||

|

||||

return [d["id"] for d, _ in files]

|

||||

|

||||

|

||||

@ -440,6 +440,7 @@ class FileService(CommonService):

|

||||

"id": doc_id,

|

||||

"kb_id": kb.id,

|

||||

"parser_id": self.get_parser(filetype, filename, kb.parser_id),

|

||||

"pipeline_id": kb.pipeline_id,

|

||||

"parser_config": kb.parser_config,

|

||||

"created_by": user_id,

|

||||

"type": filetype,

|

||||

@ -495,7 +496,7 @@ class FileService(CommonService):

|

||||

return ParserType.AUDIO.value

|

||||

if re.search(r"\.(ppt|pptx|pages)$", filename):

|

||||

return ParserType.PRESENTATION.value

|

||||

if re.search(r"\.(eml)$", filename):

|

||||

if re.search(r"\.(msg|eml)$", filename):

|

||||

return ParserType.EMAIL.value

|

||||

return default

|

||||

|

||||

|

||||

@ -472,33 +472,25 @@ def has_canceled(task_id):

|

||||

return False

|

||||

|

||||

|

||||

def queue_dataflow(dsl:str, tenant_id:str, doc_id:str, task_id:str, flow_id:str, priority: int, callback=None) -> tuple[bool, str]:

|

||||

"""

|

||||

Returns a tuple (success: bool, error_message: str).

|

||||

"""

|

||||

_ = callback

|

||||

def queue_dataflow(tenant_id:str, flow_id:str, task_id:str, doc_id:str="x", file:dict=None, priority: int=0) -> tuple[bool, str]:

|

||||

|

||||

task = dict(

|

||||

id=get_uuid() if not task_id else task_id,

|

||||

doc_id=doc_id,

|

||||

from_page=0,

|

||||

to_page=100000000,

|

||||

task_type="dataflow",

|

||||

priority=priority,

|

||||

id=task_id,

|

||||

doc_id=doc_id,

|

||||

from_page=0,

|

||||

to_page=100000000,

|

||||

task_type="dataflow",

|

||||

priority=priority,

|

||||

)

|

||||

|

||||

TaskService.model.delete().where(TaskService.model.id == task["id"]).execute()

|

||||

bulk_insert_into_db(model=Task, data_source=[task], replace_on_conflict=True)

|

||||

|

||||

kb_id = DocumentService.get_knowledgebase_id(doc_id)

|

||||

if not kb_id:

|

||||

return False, f"Can't find KB of this document: {doc_id}"

|

||||

|

||||

task["kb_id"] = kb_id

|

||||

task["kb_id"] = DocumentService.get_knowledgebase_id(doc_id)

|

||||

task["tenant_id"] = tenant_id

|

||||

task["task_type"] = "dataflow"

|

||||

task["dsl"] = dsl

|

||||

task["dataflow_id"] = get_uuid() if not flow_id else flow_id

|

||||

task["dataflow_id"] = flow_id

|

||||

task["file"] = file

|

||||

|

||||

if not REDIS_CONN.queue_product(

|

||||

get_svr_queue_name(priority), message=task

|

||||

|

||||

@ -1,3 +1,51 @@

|

||||

import base64

|

||||

from functools import partial

|

||||

from io import BytesIO

|

||||

|

||||

from PIL import Image

|

||||

|

||||

test_image_base64 = "iVBORw0KGgoAAAANSUhEUgAAAGQAAABkCAIAAAD/gAIDAAAA6ElEQVR4nO3QwQ3AIBDAsIP9d25XIC+EZE8QZc18w5l9O+AlZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBWYFZgVmBT+IYAHHLHkdEgAAAABJRU5ErkJggg=="

|

||||

test_image = base64.b64decode(test_image_base64)

|

||||

test_image = base64.b64decode(test_image_base64)

|

||||

|

||||

async def image2id(d: dict, storage_put_func: partial, bucket:str, objname:str):

|

||||

import logging

|

||||

from io import BytesIO

|

||||

import trio

|

||||

from rag.svr.task_executor import minio_limiter

|

||||

if not d.get("image"):

|

||||

return

|

||||

|

||||

with BytesIO() as output_buffer:

|

||||

if isinstance(d["image"], bytes):

|

||||

output_buffer.write(d["image"])

|

||||

output_buffer.seek(0)

|

||||

else:

|

||||

# If the image is in RGBA mode, convert it to RGB mode before saving it in JPEG format.

|

||||

if d["image"].mode in ("RGBA", "P"):

|

||||

converted_image = d["image"].convert("RGB")

|

||||

d["image"] = converted_image

|

||||

try:

|

||||

d["image"].save(output_buffer, format='JPEG')

|

||||

except OSError as e:

|

||||

logging.warning(

|

||||

"Saving image exception, ignore: {}".format(str(e)))

|

||||

|

||||

async with minio_limiter:

|

||||

await trio.to_thread.run_sync(lambda: storage_put_func(bucket=bucket, fnm=objname, binary=output_buffer.getvalue()))

|

||||

d["img_id"] = f"{bucket}-{objname}"

|

||||

if not isinstance(d["image"], bytes):

|

||||

d["image"].close()

|

||||

del d["image"] # Remove image reference

|

||||

|

||||

|

||||

def id2image(image_id:str|None, storage_get_func: partial):

|

||||

if not image_id:

|

||||

return

|

||||

arr = image_id.split("-")

|

||||

if len(arr) != 2:

|

||||

return

|

||||

bkt, nm = image_id.split("-")

|

||||

blob = storage_get_func(bucket=bkt, filename=nm)

|

||||

if not blob:

|

||||

return

|

||||

return Image.open(BytesIO(blob))

|

||||

@ -155,7 +155,7 @@ def filename_type(filename):

|

||||

if re.match(r".*\.pdf$", filename):

|

||||

return FileType.PDF.value

|

||||

|

||||

if re.match(r".*\.(eml|doc|docx|ppt|pptx|yml|xml|htm|json|jsonl|ldjson|csv|txt|ini|xls|xlsx|wps|rtf|hlp|pages|numbers|key|md|py|js|java|c|cpp|h|php|go|ts|sh|cs|kt|html|sql)$", filename):

|

||||

if re.match(r".*\.(msg|eml|doc|docx|ppt|pptx|yml|xml|htm|json|jsonl|ldjson|csv|txt|ini|xls|xlsx|wps|rtf|hlp|pages|numbers|key|md|py|js|java|c|cpp|h|php|go|ts|sh|cs|kt|html|sql)$", filename):

|

||||

return FileType.DOC.value

|

||||

|

||||

if re.match(r".*\.(wav|flac|ape|alac|wavpack|wv|mp3|aac|ogg|vorbis|opus)$", filename):

|

||||

|

||||

104

api/utils/health.py

Normal file

@ -0,0 +1,104 @@

|

||||

from timeit import default_timer as timer

|

||||

|

||||

from api import settings

|

||||

from api.db.db_models import DB

|

||||

from rag.utils.redis_conn import REDIS_CONN

|

||||

from rag.utils.storage_factory import STORAGE_IMPL

|

||||

|

||||

|

||||

def _ok_nok(ok: bool) -> str:

|

||||

return "ok" if ok else "nok"

|

||||

|

||||

|

||||

def check_db() -> tuple[bool, dict]:

|

||||

st = timer()

|

||||

try:

|

||||

# lightweight probe; works for MySQL/Postgres

|

||||

DB.execute_sql("SELECT 1")

|

||||

return True, {"elapsed": f"{(timer() - st) * 1000.0:.1f}"}

|

||||

except Exception as e:

|

||||

return False, {"elapsed": f"{(timer() - st) * 1000.0:.1f}", "error": str(e)}

|

||||

|

||||

|

||||

def check_redis() -> tuple[bool, dict]:

|

||||

st = timer()

|

||||

try:

|

||||

ok = bool(REDIS_CONN.health())

|

||||

return ok, {"elapsed": f"{(timer() - st) * 1000.0:.1f}"}

|

||||

except Exception as e:

|

||||

return False, {"elapsed": f"{(timer() - st) * 1000.0:.1f}", "error": str(e)}

|

||||

|

||||

|

||||

def check_doc_engine() -> tuple[bool, dict]:

|

||||

st = timer()

|

||||

try:

|

||||

meta = settings.docStoreConn.health()

|

||||

# treat any successful call as ok

|

||||

return True, {"elapsed": f"{(timer() - st) * 1000.0:.1f}", **(meta or {})}

|

||||

except Exception as e:

|

||||

return False, {"elapsed": f"{(timer() - st) * 1000.0:.1f}", "error": str(e)}

|

||||

|

||||

|

||||

def check_storage() -> tuple[bool, dict]:

|

||||

st = timer()

|

||||

try:

|

||||

STORAGE_IMPL.health()

|

||||

return True, {"elapsed": f"{(timer() - st) * 1000.0:.1f}"}

|

||||

except Exception as e:

|

||||

return False, {"elapsed": f"{(timer() - st) * 1000.0:.1f}", "error": str(e)}

|

||||

|

||||

|

||||

def check_chat() -> tuple[bool, dict]:

|

||||

st = timer()

|

||||

try:

|

||||

cfg = getattr(settings, "CHAT_CFG", None)

|

||||

ok = bool(cfg and cfg.get("factory"))

|

||||

return ok, {"elapsed": f"{(timer() - st) * 1000.0:.1f}"}

|

||||

except Exception as e:

|

||||

return False, {"elapsed": f"{(timer() - st) * 1000.0:.1f}", "error": str(e)}

|

||||

|

||||

|

||||

def run_health_checks() -> tuple[dict, bool]:

|

||||

result: dict[str, str | dict] = {}

|

||||

|

||||

db_ok, db_meta = check_db()

|

||||

chat_ok, chat_meta = check_chat()

|

||||

|

||||

result["db"] = _ok_nok(db_ok)

|

||||

if not db_ok:

|

||||

result.setdefault("_meta", {})["db"] = db_meta

|

||||

|

||||

result["chat"] = _ok_nok(chat_ok)

|

||||

if not chat_ok:

|

||||

result.setdefault("_meta", {})["chat"] = chat_meta

|

||||

|

||||

# Optional probes (do not change minimal contract but exposed for observability)

|

||||

try:

|

||||

redis_ok, redis_meta = check_redis()

|

||||

result["redis"] = _ok_nok(redis_ok)

|

||||

if not redis_ok:

|

||||

result.setdefault("_meta", {})["redis"] = redis_meta

|

||||

except Exception:

|

||||

result["redis"] = "nok"

|

||||

|

||||

try:

|

||||

doc_ok, doc_meta = check_doc_engine()

|

||||

result["doc_engine"] = _ok_nok(doc_ok)

|

||||

if not doc_ok:

|

||||

result.setdefault("_meta", {})["doc_engine"] = doc_meta

|

||||

except Exception:

|

||||

result["doc_engine"] = "nok"

|

||||

|

||||

try:

|

||||

sto_ok, sto_meta = check_storage()

|

||||

result["storage"] = _ok_nok(sto_ok)

|

||||

if not sto_ok:

|

||||

result.setdefault("_meta", {})["storage"] = sto_meta

|

||||

except Exception:

|

||||

result["storage"] = "nok"

|

||||

|

||||

all_ok = (result.get("db") == "ok") and (result.get("chat") == "ok")

|

||||

result["status"] = "ok" if all_ok else "nok"

|

||||

return result, all_ok

|

||||

|

||||

|

||||

@ -219,6 +219,70 @@

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "TokenPony",

|

||||

"logo": "",

|

||||

"tags": "LLM",

|

||||

"status": "1",

|

||||

"llm": [

|

||||

{

|

||||

"llm_name": "qwen3-8b",

|

||||

"tags": "LLM,CHAT,131k",

|

||||

"max_tokens": 131000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-v3-0324",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "qwen3-32b",

|

||||

"tags": "LLM,CHAT,131k",

|

||||

"max_tokens": 131000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "kimi-k2-instruct",

|

||||

"tags": "LLM,CHAT,128K",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-r1-0528",

|

||||

"tags": "LLM,CHAT,164k",

|

||||

"max_tokens": 164000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "qwen3-coder-480b",

|

||||

"tags": "LLM,CHAT,1024k",

|

||||

"max_tokens": 1024000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "glm-4.5",

|

||||

"tags": "LLM,CHAT,131K",

|

||||

"max_tokens": 131000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-v3.1",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "Tongyi-Qianwen",

|

||||

"logo": "",

|

||||

@ -625,7 +689,7 @@

|

||||

},

|

||||

{

|

||||

"llm_name": "glm-4",

|

||||

"tags":"LLM,CHAT,128K",

|

||||

"tags": "LLM,CHAT,128K",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

@ -4477,6 +4541,273 @@

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "CometAPI",

|

||||

"logo": "",

|

||||

"tags": "LLM,TEXT EMBEDDING,IMAGE2TEXT",

|

||||

"status": "1",

|

||||

"llm": [

|

||||

{

|

||||

"llm_name": "gpt-5-chat-latest",

|

||||

"tags": "LLM,CHAT,400k",

|

||||

"max_tokens": 400000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "chatgpt-4o-latest",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gpt-5-mini",

|

||||

"tags": "LLM,CHAT,400k",

|

||||

"max_tokens": 400000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gpt-5-nano",

|

||||

"tags": "LLM,CHAT,400k",

|

||||

"max_tokens": 400000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gpt-5",

|

||||

"tags": "LLM,CHAT,400k",

|

||||

"max_tokens": 400000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gpt-4.1-mini",

|

||||

"tags": "LLM,CHAT,1M",

|

||||

"max_tokens": 1047576,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gpt-4.1-nano",

|

||||

"tags": "LLM,CHAT,1M",

|

||||

"max_tokens": 1047576,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gpt-4.1",

|

||||

"tags": "LLM,CHAT,1M",

|

||||

"max_tokens": 1047576,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gpt-4o-mini",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "o4-mini-2025-04-16",

|

||||

"tags": "LLM,CHAT,200k",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "o3-pro-2025-06-10",

|

||||

"tags": "LLM,CHAT,200k",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "claude-opus-4-1-20250805",

|

||||

"tags": "LLM,CHAT,200k,IMAGE2TEXT",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "claude-opus-4-1-20250805-thinking",

|

||||

"tags": "LLM,CHAT,200k,IMAGE2TEXT",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "claude-sonnet-4-20250514",

|

||||

"tags": "LLM,CHAT,200k,IMAGE2TEXT",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "claude-sonnet-4-20250514-thinking",

|

||||

"tags": "LLM,CHAT,200k,IMAGE2TEXT",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "claude-3-7-sonnet-latest",

|

||||

"tags": "LLM,CHAT,200k",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "claude-3-5-haiku-latest",

|

||||

"tags": "LLM,CHAT,200k",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gemini-2.5-pro",

|

||||

"tags": "LLM,CHAT,1M,IMAGE2TEXT",

|

||||

"max_tokens": 1000000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gemini-2.5-flash",

|

||||

"tags": "LLM,CHAT,1M,IMAGE2TEXT",

|

||||

"max_tokens": 1000000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gemini-2.5-flash-lite",

|

||||

"tags": "LLM,CHAT,1M,IMAGE2TEXT",

|

||||

"max_tokens": 1000000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gemini-2.0-flash",

|

||||

"tags": "LLM,CHAT,1M,IMAGE2TEXT",

|

||||

"max_tokens": 1000000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "grok-4-0709",

|

||||

"tags": "LLM,CHAT,131k",

|

||||

"max_tokens": 131072,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "grok-3",

|

||||

"tags": "LLM,CHAT,131k",

|

||||

"max_tokens": 131072,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "grok-3-mini",

|

||||

"tags": "LLM,CHAT,131k",

|

||||

"max_tokens": 131072,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "grok-2-image-1212",

|

||||

"tags": "LLM,CHAT,32k,IMAGE2TEXT",

|

||||

"max_tokens": 32768,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-v3.1",

|

||||

"tags": "LLM,CHAT,64k",

|

||||

"max_tokens": 64000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-v3",

|

||||

"tags": "LLM,CHAT,64k",

|

||||

"max_tokens": 64000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-r1-0528",

|

||||

"tags": "LLM,CHAT,164k",

|

||||

"max_tokens": 164000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-chat",

|

||||

"tags": "LLM,CHAT,32k",

|

||||

"max_tokens": 32000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-reasoner",

|

||||

"tags": "LLM,CHAT,64k",

|

||||

"max_tokens": 64000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "qwen3-30b-a3b",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "qwen3-coder-plus-2025-07-22",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "text-embedding-ada-002",

|

||||

"tags": "TEXT EMBEDDING,8K",

|

||||

"max_tokens": 8191,

|

||||

"model_type": "embedding",

|

||||

"is_tools": false

|

||||

},

|

||||

{

|

||||

"llm_name": "text-embedding-3-small",

|

||||

"tags": "TEXT EMBEDDING,8K",

|

||||

"max_tokens": 8191,

|

||||

"model_type": "embedding",

|

||||

"is_tools": false

|

||||

},

|

||||

{

|

||||

"llm_name": "text-embedding-3-large",

|

||||

"tags": "TEXT EMBEDDING,8K",

|

||||

"max_tokens": 8191,

|

||||

"model_type": "embedding",

|

||||

"is_tools": false

|

||||

},

|

||||

{

|

||||

"llm_name": "whisper-1",

|

||||

"tags": "SPEECH2TEXT",

|

||||

"max_tokens": 26214400,

|

||||

"model_type": "speech2text",

|

||||

"is_tools": false

|

||||

},

|

||||

{

|

||||

"llm_name": "tts-1",

|

||||

"tags": "TTS",

|

||||

"max_tokens": 2048,

|

||||

"model_type": "tts",

|

||||

"is_tools": false

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "Meituan",

|

||||

"logo": "",

|

||||

@ -4493,4 +4824,4 @@

|

||||

]

|

||||

}

|

||||

]

|

||||

}

|

||||

}

|

||||

@ -37,7 +37,7 @@ TITLE_TAGS = {"h1": "#", "h2": "##", "h3": "###", "h4": "#####", "h5": "#####",

|

||||

|

||||

|

||||

class RAGFlowHtmlParser:

|

||||

def __call__(self, fnm, binary=None, chunk_token_num=None):

|

||||

def __call__(self, fnm, binary=None, chunk_token_num=512):

|

||||

if binary:

|

||||

encoding = find_codec(binary)

|

||||

txt = binary.decode(encoding, errors="ignore")

|

||||

|

||||

@ -34,7 +34,7 @@ from pypdf import PdfReader as pdf2_read

|

||||

|

||||

from api import settings

|

||||

from api.utils.file_utils import get_project_base_directory

|

||||

from deepdoc.vision import OCR, LayoutRecognizer, Recognizer, TableStructureRecognizer

|

||||

from deepdoc.vision import OCR, AscendLayoutRecognizer, LayoutRecognizer, Recognizer, TableStructureRecognizer

|

||||

from rag.app.picture import vision_llm_chunk as picture_vision_llm_chunk

|

||||

from rag.nlp import rag_tokenizer

|

||||

from rag.prompts import vision_llm_describe_prompt

|

||||

@ -64,33 +64,38 @@ class RAGFlowPdfParser:

|

||||

if PARALLEL_DEVICES > 1:

|

||||

self.parallel_limiter = [trio.CapacityLimiter(1) for _ in range(PARALLEL_DEVICES)]

|

||||

|

||||

layout_recognizer_type = os.getenv("LAYOUT_RECOGNIZER_TYPE", "onnx").lower()

|

||||

if layout_recognizer_type not in ["onnx", "ascend"]:

|

||||

raise RuntimeError("Unsupported layout recognizer type.")

|

||||

|

||||

if hasattr(self, "model_speciess"):

|

||||

self.layouter = LayoutRecognizer("layout." + self.model_speciess)

|

||||

recognizer_domain = "layout." + self.model_speciess

|

||||

else:

|

||||

self.layouter = LayoutRecognizer("layout")

|

||||

recognizer_domain = "layout"

|

||||

|

||||

if layout_recognizer_type == "ascend":

|

||||

logging.debug("Using Ascend LayoutRecognizer")

|

||||

self.layouter = AscendLayoutRecognizer(recognizer_domain)

|

||||

else: # onnx

|

||||

logging.debug("Using Onnx LayoutRecognizer")

|

||||

self.layouter = LayoutRecognizer(recognizer_domain)

|

||||

self.tbl_det = TableStructureRecognizer()

|

||||

|

||||

self.updown_cnt_mdl = xgb.Booster()

|

||||

if not settings.LIGHTEN:

|

||||

try:

|

||||

import torch.cuda

|

||||

|

||||

if torch.cuda.is_available():

|

||||

self.updown_cnt_mdl.set_param({"device": "cuda"})

|

||||

except Exception:

|

||||

logging.exception("RAGFlowPdfParser __init__")

|

||||

try:

|

||||

model_dir = os.path.join(

|

||||

get_project_base_directory(),

|

||||

"rag/res/deepdoc")

|

||||

self.updown_cnt_mdl.load_model(os.path.join(

|

||||

model_dir, "updown_concat_xgb.model"))

|

||||

model_dir = os.path.join(get_project_base_directory(), "rag/res/deepdoc")

|

||||

self.updown_cnt_mdl.load_model(os.path.join(model_dir, "updown_concat_xgb.model"))

|

||||

except Exception:

|

||||

model_dir = snapshot_download(

|

||||

repo_id="InfiniFlow/text_concat_xgb_v1.0",

|

||||

local_dir=os.path.join(get_project_base_directory(), "rag/res/deepdoc"),

|

||||

local_dir_use_symlinks=False)

|

||||

self.updown_cnt_mdl.load_model(os.path.join(

|

||||

model_dir, "updown_concat_xgb.model"))

|

||||

model_dir = snapshot_download(repo_id="InfiniFlow/text_concat_xgb_v1.0", local_dir=os.path.join(get_project_base_directory(), "rag/res/deepdoc"), local_dir_use_symlinks=False)

|

||||

self.updown_cnt_mdl.load_model(os.path.join(model_dir, "updown_concat_xgb.model"))

|

||||

|

||||

self.page_from = 0

|

||||

self.column_num = 1

|

||||

@ -102,13 +107,10 @@ class RAGFlowPdfParser:

|

||||

return c["bottom"] - c["top"]

|

||||

|

||||

def _x_dis(self, a, b):

|

||||

return min(abs(a["x1"] - b["x0"]), abs(a["x0"] - b["x1"]),

|

||||

abs(a["x0"] + a["x1"] - b["x0"] - b["x1"]) / 2)

|

||||

return min(abs(a["x1"] - b["x0"]), abs(a["x0"] - b["x1"]), abs(a["x0"] + a["x1"] - b["x0"] - b["x1"]) / 2)

|

||||

|

||||

def _y_dis(

|

||||

self, a, b):

|

||||

return (

|

||||

b["top"] + b["bottom"] - a["top"] - a["bottom"]) / 2

|

||||

def _y_dis(self, a, b):

|

||||

return (b["top"] + b["bottom"] - a["top"] - a["bottom"]) / 2

|

||||

|

||||

def _match_proj(self, b):

|

||||

proj_patt = [

|

||||

@ -130,10 +132,7 @@ class RAGFlowPdfParser:

|

||||

LEN = 6

|

||||

tks_down = rag_tokenizer.tokenize(down["text"][:LEN]).split()

|

||||

tks_up = rag_tokenizer.tokenize(up["text"][-LEN:]).split()

|

||||

tks_all = up["text"][-LEN:].strip() \

|

||||

+ (" " if re.match(r"[a-zA-Z0-9]+",

|

||||

up["text"][-1] + down["text"][0]) else "") \

|

||||

+ down["text"][:LEN].strip()

|

||||

tks_all = up["text"][-LEN:].strip() + (" " if re.match(r"[a-zA-Z0-9]+", up["text"][-1] + down["text"][0]) else "") + down["text"][:LEN].strip()

|

||||

tks_all = rag_tokenizer.tokenize(tks_all).split()

|

||||

fea = [

|

||||

up.get("R", -1) == down.get("R", -1),

|

||||

@ -144,39 +143,30 @@ class RAGFlowPdfParser:

|

||||

down["layout_type"] == "text",

|

||||

up["layout_type"] == "table",

|

||||

down["layout_type"] == "table",

|

||||

True if re.search(

|

||||

r"([。?!;!?;+))]|[a-z]\.)$",

|

||||

up["text"]) else False,

|

||||

True if re.search(r"([。?!;!?;+))]|[a-z]\.)$", up["text"]) else False,

|

||||

True if re.search(r"[,:‘“、0-9(+-]$", up["text"]) else False,

|

||||

True if re.search(

|

||||

r"(^.?[/,?;:\],。;:’”?!》】)-])",

|

||||

down["text"]) else False,

|

||||

True if re.search(r"(^.?[/,?;:\],。;:’”?!》】)-])", down["text"]) else False,

|

||||

True if re.match(r"[\((][^\(\)()]+[)\)]$", up["text"]) else False,

|

||||

True if re.search(r"[,,][^。.]+$", up["text"]) else False,

|

||||

True if re.search(r"[,,][^。.]+$", up["text"]) else False,

|

||||

True if re.search(r"[\((][^\))]+$", up["text"])

|

||||

and re.search(r"[\))]", down["text"]) else False,

|

||||

True if re.search(r"[\((][^\))]+$", up["text"]) and re.search(r"[\))]", down["text"]) else False,

|

||||

self._match_proj(down),

|

||||

True if re.match(r"[A-Z]", down["text"]) else False,

|

||||

True if re.match(r"[A-Z]", up["text"][-1]) else False,

|

||||

True if re.match(r"[a-z0-9]", up["text"][-1]) else False,

|

||||

True if re.match(r"[0-9.%,-]+$", down["text"]) else False,

|

||||

up["text"].strip()[-2:] == down["text"].strip()[-2:] if len(up["text"].strip()

|

||||

) > 1 and len(

|

||||

down["text"].strip()) > 1 else False,

|

||||

up["text"].strip()[-2:] == down["text"].strip()[-2:] if len(up["text"].strip()) > 1 and len(down["text"].strip()) > 1 else False,

|

||||

up["x0"] > down["x1"],

|

||||

abs(self.__height(up) - self.__height(down)) / min(self.__height(up),

|

||||

self.__height(down)),

|

||||

abs(self.__height(up) - self.__height(down)) / min(self.__height(up), self.__height(down)),

|

||||

self._x_dis(up, down) / max(w, 0.000001),

|

||||

(len(up["text"]) - len(down["text"])) /

|

||||

max(len(up["text"]), len(down["text"])),

|

||||

(len(up["text"]) - len(down["text"])) / max(len(up["text"]), len(down["text"])),

|

||||

len(tks_all) - len(tks_up) - len(tks_down),

|

||||

len(tks_down) - len(tks_up),

|

||||

tks_down[-1] == tks_up[-1] if tks_down and tks_up else False,

|

||||

max(down["in_row"], up["in_row"]),

|

||||

abs(down["in_row"] - up["in_row"]),

|

||||

len(tks_down) == 1 and rag_tokenizer.tag(tks_down[0]).find("n") >= 0,

|

||||

len(tks_up) == 1 and rag_tokenizer.tag(tks_up[0]).find("n") >= 0

|

||||

len(tks_up) == 1 and rag_tokenizer.tag(tks_up[0]).find("n") >= 0,

|

||||

]

|

||||

return fea

|

||||

|

||||

@ -187,9 +177,7 @@ class RAGFlowPdfParser:

|

||||

for i in range(len(arr) - 1):

|

||||

for j in range(i, -1, -1):

|

||||

# restore the order using th

|

||||

if abs(arr[j + 1]["x0"] - arr[j]["x0"]) < threshold \

|

||||

and arr[j + 1]["top"] < arr[j]["top"] \

|

||||

and arr[j + 1]["page_number"] == arr[j]["page_number"]:

|

||||

if abs(arr[j + 1]["x0"] - arr[j]["x0"]) < threshold and arr[j + 1]["top"] < arr[j]["top"] and arr[j + 1]["page_number"] == arr[j]["page_number"]:

|

||||

tmp = arr[j]

|

||||

arr[j] = arr[j + 1]

|

||||

arr[j + 1] = tmp

|

||||

@ -197,8 +185,7 @@ class RAGFlowPdfParser:

|

||||

|

||||

def _has_color(self, o):

|

||||

if o.get("ncs", "") == "DeviceGray":

|

||||