Mirror of https://github.com/infiniflow/ragflow.git (synced 2026-01-04 03:25:30 +08:00)

Compare commits: v0.21.0...43ea312144 (25 commits)

| SHA1 |
|---|
| 43ea312144 |
| ce05696d95 |
| 0f62bfda21 |
| 70ffe2b4e8 |
| e76db6e222 |
| 7b664b5a84 |
| 8a41057236 |
| 447041d265 |
| f0375c4acd |
| 8af769de41 |
| f808bc32ba |
| e8cb1d8fc4 |
| 4e86ee4ff9 |
| c99034f717 |
| 86b254d214 |
| 1c38f4cefb |
| 74c195cd36 |
| e48bec1cbf |
| 205a5eb9f5 |
| 8844826208 |
| 8fe4281d81 |
| fb1bedbd3c |
| 6e55b9146c |
| 071ea9c493 |
| 5037a28e4d |

@@ -135,7 +135,7 @@ releases! 🌟
 ## 🔎 System Architecture

 <div align="center" style="margin-top:20px;margin-bottom:20px;">
-<img src="https://github.com/infiniflow/ragflow/assets/12318111/d6ac5664-c237-4200-a7c2-a4a00691b485" width="1000"/>
+<img src="https://github.com/user-attachments/assets/31b0dd6f-ca4f-445a-9457-70cb44a381b2" width="1000"/>
 </div>

 ## 🎬 Get Started

@@ -129,7 +129,7 @@ Coba demo kami di [https://demo.ragflow.io](https://demo.ragflow.io).
 ## 🔎 Arsitektur Sistem

 <div align="center" style="margin-top:20px;margin-bottom:20px;">
-<img src="https://github.com/infiniflow/ragflow/assets/12318111/d6ac5664-c237-4200-a7c2-a4a00691b485" width="1000"/>
+<img src="https://github.com/user-attachments/assets/31b0dd6f-ca4f-445a-9457-70cb44a381b2" width="1000"/>
 </div>

 ## 🎬 Mulai

@@ -109,7 +109,7 @@
 ## 🔎 システム構成

 <div align="center" style="margin-top:20px;margin-bottom:20px;">
-<img src="https://github.com/infiniflow/ragflow/assets/12318111/d6ac5664-c237-4200-a7c2-a4a00691b485" width="1000"/>
+<img src="https://github.com/user-attachments/assets/31b0dd6f-ca4f-445a-9457-70cb44a381b2" width="1000"/>
 </div>

 ## 🎬 初期設定

@@ -109,7 +109,7 @@
 ## 🔎 시스템 아키텍처

 <div align="center" style="margin-top:20px;margin-bottom:20px;">
-<img src="https://github.com/infiniflow/ragflow/assets/12318111/d6ac5664-c237-4200-a7c2-a4a00691b485" width="1000"/>
+<img src="https://github.com/user-attachments/assets/31b0dd6f-ca4f-445a-9457-70cb44a381b2" width="1000"/>
 </div>

 ## 🎬 시작하기

@@ -129,7 +129,7 @@ Experimente nossa demo em [https://demo.ragflow.io](https://demo.ragflow.io).
 ## 🔎 Arquitetura do Sistema

 <div align="center" style="margin-top:20px;margin-bottom:20px;">
-<img src="https://github.com/infiniflow/ragflow/assets/12318111/d6ac5664-c237-4200-a7c2-a4a00691b485" width="1000"/>
+<img src="https://github.com/user-attachments/assets/31b0dd6f-ca4f-445a-9457-70cb44a381b2" width="1000"/>
 </div>

 ## 🎬 Primeiros Passos

@@ -132,7 +132,7 @@
 ## 🔎 系統架構

 <div align="center" style="margin-top:20px;margin-bottom:20px;">
-<img src="https://github.com/infiniflow/ragflow/assets/12318111/d6ac5664-c237-4200-a7c2-a4a00691b485" width="1000"/>
+<img src="https://github.com/user-attachments/assets/31b0dd6f-ca4f-445a-9457-70cb44a381b2" width="1000"/>
 </div>

 ## 🎬 快速開始

@@ -132,7 +132,7 @@
 ## 🔎 系统架构

 <div align="center" style="margin-top:20px;margin-bottom:20px;">
-<img src="https://github.com/infiniflow/ragflow/assets/12318111/d6ac5664-c237-4200-a7c2-a4a00691b485" width="1000"/>
+<img src="https://github.com/user-attachments/assets/31b0dd6f-ca4f-445a-9457-70cb44a381b2" width="1000"/>
 </div>

 ## 🎬 快速开始

@@ -48,13 +48,20 @@ It consists of a server-side Service and a command-line client (CLI), both imple
 1. Ensure the Admin Service is running.
 2. Install ragflow-cli.
 ```bash
-pip install ragflow-cli
+pip install ragflow-cli==0.21.0
 ```
 3. Launch the CLI client:
 ```bash
-ragflow-cli -h 0.0.0.0 -p 9381
+ragflow-cli -h 127.0.0.1 -p 9381
 ```
-Enter superuser's password to login. Default password is `admin`.
+You will be prompted to enter the superuser's password to log in.
+The default password is admin.
+
+**Parameters:**
+
+- -h: RAGFlow admin server host address
+
+- -p: RAGFlow admin server port

@@ -213,12 +213,8 @@ class AdminCLI(Cmd):

     def onecmd(self, command: str) -> bool:
         try:
-            # print(f"command: {command}")
             result = self.parse_command(command)

-            # if 'type' in result and result.get('type') == 'empty':
-            # return False
-
             if isinstance(result, dict):
                 if 'type' in result and result.get('type') == 'empty':
                     return False

@@ -341,9 +337,9 @@ class AdminCLI(Cmd):
             row = "|"
             for col in columns:
                 value = str(item.get(col, ''))
-                if len(value) > col_widths[col]:
+                if get_string_width(value) > col_widths[col]:
                     value = value[:col_widths[col] - 3] + "..."
-                row += f" {value:<{col_widths[col]}} |"
+                row += f" {value:<{col_widths[col] - (get_string_width(value) - len(value))}} |"
             print(row)

         print(separator)
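The hunk above replaces `len(value)` with `get_string_width(value)` so that table columns stay aligned when a cell contains wide characters (CJK text, for example). The diff does not show how `get_string_width` is implemented; the following is a minimal sketch of one plausible version, assuming it counts East Asian wide and fullwidth characters as two terminal columns. `pad_cell` is a hypothetical helper that mirrors the adjusted format width from the new `row +=` line.

```python
import unicodedata


def get_string_width(text: str) -> int:
    """Approximate terminal display width of text.

    Assumption: East Asian wide ("W") and fullwidth ("F") characters
    occupy two columns, everything else occupies one.
    """
    return sum(2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1 for ch in text)


def pad_cell(value: str, width: int) -> str:
    """Left-align value so that it fills `width` display columns.

    str.format pads by code points, so the requested pad width is reduced
    by the extra columns that wide characters take up on screen.
    """
    extra = get_string_width(value) - len(value)
    return f"{value:<{width - extra}}"


if __name__ == "__main__":
    for cell in ("admin", "系统架构"):
        print(f"| {pad_cell(cell, 12)} |")
```

Under these assumptions both rows end at the same screen column, which plain `f"{value:<12}"` would not achieve for the second cell.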

@@ -452,7 +448,7 @@ class AdminCLI(Cmd):
         if response.status_code == 200:
             self._print_table_simple(res_json['data'])
         else:
-            print(f"Fail to get all users, code: {res_json['code']}, message: {res_json['message']}")
+            print(f"Fail to get all services, code: {res_json['code']}, message: {res_json['message']}")

     def _handle_show_service(self, command):
         service_id: int = command['number']

@@ -463,14 +459,14 @@ class AdminCLI(Cmd):
         res_json = response.json()
         if response.status_code == 200:
             res_data = res_json['data']
-            if res_data['alive']:
-                print(f"Service {res_data['service_name']} is alive. Detail:")
+            if 'status' in res_data and res_data['status'] == 'alive':
+                print(f"Service {res_data['service_name']} is alive, ")
                 if isinstance(res_data['message'], str):
                     print(res_data['message'])
                 else:
                     self._print_table_simple(res_data['message'])
             else:
-                print(f"Service {res_data['service_name']} is down. Detail: {res_data['message']}")
+                print(f"Service {res_data['service_name']} is down, {res_data['message']}")
         else:
             print(f"Fail to show service, code: {res_json['code']}, message: {res_json['message']}")

@@ -1,6 +1,6 @@
 [project]
 name = "ragflow-cli"
-version = "0.21.0.dev5"
+version = "0.21.0"
 description = "Admin Service's client of [RAGFlow](https://github.com/infiniflow/ragflow). The Admin Service provides user management and system monitoring. "
 authors = [{ name = "Lynn", email = "lynn_inf@hotmail.com" }]
 license = { text = "Apache License, Version 2.0" }

@@ -26,7 +26,7 @@ from routes import admin_bp
 from api.utils.log_utils import init_root_logger
 from api.constants import SERVICE_CONF
 from api import settings
-from admin.server.config import load_configurations, SERVICE_CONFIGS
+from config import load_configurations, SERVICE_CONFIGS

 stop_event = threading.Event()

@@ -26,6 +26,8 @@ from urllib.parse import urlparse


 class ServiceConfigs:
+    configs = dict
+
     def __init__(self):
         self.configs = []
         self.lock = threading.Lock()

@ -229,7 +231,8 @@ def load_configurations(config_path: str) -> list[BaseConfig]:

|

||||

host: str = v['host']

|

||||

http_port: int = v['http_port']

|

||||

config = RAGFlowServerConfig(id=id_count, name=name, host=host, port=http_port,

|

||||

service_type="ragflow_server", detail_func_name="check_ragflow_server_alive")

|

||||

service_type="ragflow_server",

|

||||

detail_func_name="check_ragflow_server_alive")

|

||||

configurations.append(config)

|

||||

id_count += 1

|

||||

case "es":

|

||||

@ -254,7 +257,8 @@ def load_configurations(config_path: str) -> list[BaseConfig]:

|

||||

host = parts[0]

|

||||

port = int(parts[1])

|

||||

database: str = v.get('db_name', 'default_db')

|

||||

config = InfinityConfig(id=id_count, name=name, host=host, port=port, service_type="retrieval", retrieval_type="infinity",

|

||||

config = InfinityConfig(id=id_count, name=name, host=host, port=port, service_type="retrieval",

|

||||

retrieval_type="infinity",

|

||||

db_name=database, detail_func_name="get_infinity_status")

|

||||

configurations.append(config)

|

||||

id_count += 1

|

||||

@ -266,7 +270,8 @@ def load_configurations(config_path: str) -> list[BaseConfig]:

|

||||

port = int(parts[1])

|

||||

user = v.get('user')

|

||||

password = v.get('password')

|

||||

config = MinioConfig(id=id_count, name=name, host=host, port=port, user=user, password=password, service_type="file_store",

|

||||

config = MinioConfig(id=id_count, name=name, host=host, port=port, user=user, password=password,

|

||||

service_type="file_store",

|

||||

store_type="minio", detail_func_name="check_minio_alive")

|

||||

configurations.append(config)

|

||||

id_count += 1

|

||||

|

||||

@@ -17,7 +17,7 @@

 from flask import Blueprint, request

-from admin.server.auth import login_verify
+from auth import login_verify
 from responses import success_response, error_response
 from services import UserMgr, ServiceMgr, UserServiceMgr
 from api.common.exceptions import AdminException

@@ -27,7 +27,7 @@ from api.utils.crypt import decrypt
 from api.utils import health_utils

 from api.common.exceptions import AdminException, UserAlreadyExistsError, UserNotFoundError
-from admin.server.config import SERVICE_CONFIGS
+from config import SERVICE_CONFIGS


 class UserMgr:

@@ -181,12 +181,12 @@ class ServiceMgr:
             config_dict = config.to_dict()
             try:
                 service_detail = ServiceMgr.get_service_details(service_id)
-                if service_detail['alive']:
-                    config_dict['status'] = 'Alive'
+                if "status" in service_detail:
+                    config_dict['status'] = service_detail['status']
                 else:
-                    config_dict['status'] = 'Timeout'
+                    config_dict['status'] = 'timeout'
             except Exception:
-                config_dict['status'] = 'Timeout'
+                config_dict['status'] = 'timeout'
             result.append(config_dict)
         return result

@@ -206,7 +206,7 @@ class ServiceMgr:
         }
         service_info = service_config_mapping.get(service_id, {})
         if not service_info:
-            raise AdminException(f"Invalid service_id: {service_id}")
+            raise AdminException(f"invalid service_id: {service_id}")

         detail_func = getattr(health_utils, service_info.get('detail_func_name'))
         res = detail_func()

@ -60,7 +60,7 @@ def list_chunk():

|

||||

}

|

||||

if "available_int" in req:

|

||||

query["available_int"] = int(req["available_int"])

|

||||

sres = settings.retriever.search(query, search.index_name(tenant_id), kb_ids, highlight=True)

|

||||

sres = settings.retriever.search(query, search.index_name(tenant_id), kb_ids, highlight=["content_ltks"])

|

||||

res = {"total": sres.total, "chunks": [], "doc": doc.to_dict()}

|

||||

for id in sres.ids:

|

||||

d = {

|

||||

|

||||

@ -13,6 +13,7 @@

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License

|

||||

#

|

||||

import logging

|

||||

import os

|

||||

import pathlib

|

||||

import re

|

||||

@ -234,54 +235,63 @@ def get_all_parent_folders():

|

||||

return server_error_response(e)

|

||||

|

||||

|

||||

@manager.route('/rm', methods=['POST']) # noqa: F821

|

||||

@manager.route("/rm", methods=["POST"]) # noqa: F821

|

||||

@login_required

|

||||

@validate_request("file_ids")

|

||||

def rm():

|

||||

req = request.json

|

||||

file_ids = req["file_ids"]

|

||||

|

||||

def _delete_single_file(file):

|

||||

try:

|

||||

if file.location:

|

||||

STORAGE_IMPL.rm(file.parent_id, file.location)

|

||||

except Exception:

|

||||

logging.exception(f"Fail to remove object: {file.parent_id}/{file.location}")

|

||||

|

||||

informs = File2DocumentService.get_by_file_id(file.id)

|

||||

for inform in informs:

|

||||

doc_id = inform.document_id

|

||||

e, doc = DocumentService.get_by_id(doc_id)

|

||||

if e and doc:

|

||||

tenant_id = DocumentService.get_tenant_id(doc_id)

|

||||

if tenant_id:

|

||||

DocumentService.remove_document(doc, tenant_id)

|

||||

File2DocumentService.delete_by_file_id(file.id)

|

||||

|

||||

FileService.delete(file)

|

||||

|

||||

def _delete_folder_recursive(folder, tenant_id):

|

||||

sub_files = FileService.list_all_files_by_parent_id(folder.id)

|

||||

for sub_file in sub_files:

|

||||

if sub_file.type == FileType.FOLDER.value:

|

||||

_delete_folder_recursive(sub_file, tenant_id)

|

||||

else:

|

||||

_delete_single_file(sub_file)

|

||||

|

||||

FileService.delete(folder)

|

||||

|

||||

try:

|

||||

for file_id in file_ids:

|

||||

e, file = FileService.get_by_id(file_id)

|

||||

if not e:

|

||||

if not e or not file:

|

||||

return get_data_error_result(message="File or Folder not found!")

|

||||

if not file.tenant_id:

|

||||

return get_data_error_result(message="Tenant not found!")

|

||||

if not check_file_team_permission(file, current_user.id):

|

||||

return get_json_result(data=False, message='No authorization.', code=settings.RetCode.AUTHENTICATION_ERROR)

|

||||

return get_json_result(data=False, message="No authorization.", code=settings.RetCode.AUTHENTICATION_ERROR)

|

||||

|

||||

if file.source_type == FileSource.KNOWLEDGEBASE:

|

||||

continue

|

||||

|

||||

if file.type == FileType.FOLDER.value:

|

||||

file_id_list = FileService.get_all_innermost_file_ids(file_id, [])

|

||||

for inner_file_id in file_id_list:

|

||||

e, file = FileService.get_by_id(inner_file_id)

|

||||

if not e:

|

||||

return get_data_error_result(message="File not found!")

|

||||

STORAGE_IMPL.rm(file.parent_id, file.location)

|

||||

FileService.delete_folder_by_pf_id(current_user.id, file_id)

|

||||

else:

|

||||

STORAGE_IMPL.rm(file.parent_id, file.location)

|

||||

if not FileService.delete(file):

|

||||

return get_data_error_result(

|

||||

message="Database error (File removal)!")

|

||||

_delete_folder_recursive(file, current_user.id)

|

||||

continue

|

||||

|

||||

# delete file2document

|

||||

informs = File2DocumentService.get_by_file_id(file_id)

|

||||

for inform in informs:

|

||||

doc_id = inform.document_id

|

||||

e, doc = DocumentService.get_by_id(doc_id)

|

||||

if not e:

|

||||

return get_data_error_result(message="Document not found!")

|

||||

tenant_id = DocumentService.get_tenant_id(doc_id)

|

||||

if not tenant_id:

|

||||

return get_data_error_result(message="Tenant not found!")

|

||||

if not DocumentService.remove_document(doc, tenant_id):

|

||||

return get_data_error_result(

|

||||

message="Database error (Document removal)!")

|

||||

File2DocumentService.delete_by_file_id(file_id)

|

||||

_delete_single_file(file)

|

||||

|

||||

return get_json_result(data=True)

|

||||

|

||||

except Exception as e:

|

||||

return server_error_response(e)

|

||||

|

||||
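The rewritten `rm()` handler above deletes folders depth-first: `_delete_folder_recursive` removes every child (recursing into sub-folders) before it removes the folder record itself. The sketch below illustrates only that traversal order on an in-memory tree. `Node` and `delete_recursive` are hypothetical names for illustration; storage cleanup, permission checks and database calls from the real handler are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for a file or folder record."""
    name: str
    is_folder: bool = False
    children: list["Node"] = field(default_factory=list)


def delete_recursive(node: Node, deleted: list[str]) -> None:
    """Delete children first, then the node itself (post-order traversal)."""
    if node.is_folder:
        for child in list(node.children):
            delete_recursive(child, deleted)
        node.children.clear()
    # In the real handler this is where the record would be removed.
    deleted.append(node.name)


if __name__ == "__main__":
    tree = Node("root", True, [
        Node("a.txt"),
        Node("sub", True, [Node("b.txt")]),
    ])
    order: list[str] = []
    delete_recursive(tree, order)
    print(order)
```

Deleting in post-order means a folder record is never removed while it still has children pointing at it.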

@ -355,31 +365,89 @@ def get(file_id):

|

||||

return server_error_response(e)

|

||||

|

||||

|

||||

@manager.route('/mv', methods=['POST']) # noqa: F821

|

||||

@manager.route("/mv", methods=["POST"]) # noqa: F821

|

||||

@login_required

|

||||

@validate_request("src_file_ids", "dest_file_id")

|

||||

def move():

|

||||

req = request.json

|

||||

try:

|

||||

file_ids = req["src_file_ids"]

|

||||

parent_id = req["dest_file_id"]

|

||||

dest_parent_id = req["dest_file_id"]

|

||||

|

||||

ok, dest_folder = FileService.get_by_id(dest_parent_id)

|

||||

if not ok or not dest_folder:

|

||||

return get_data_error_result(message="Parent Folder not found!")

|

||||

|

||||

files = FileService.get_by_ids(file_ids)

|

||||

files_dict = {}

|

||||

for file in files:

|

||||

files_dict[file.id] = file

|

||||

if not files:

|

||||

return get_data_error_result(message="Source files not found!")

|

||||

|

||||

files_dict = {f.id: f for f in files}

|

||||

|

||||

for file_id in file_ids:

|

||||

file = files_dict[file_id]

|

||||

file = files_dict.get(file_id)

|

||||

if not file:

|

||||

return get_data_error_result(message="File or Folder not found!")

|

||||

if not file.tenant_id:

|

||||

return get_data_error_result(message="Tenant not found!")

|

||||

if not check_file_team_permission(file, current_user.id):

|

||||

return get_json_result(data=False, message='No authorization.', code=settings.RetCode.AUTHENTICATION_ERROR)

|

||||

fe, _ = FileService.get_by_id(parent_id)

|

||||

if not fe:

|

||||

return get_data_error_result(message="Parent Folder not found!")

|

||||

FileService.move_file(file_ids, parent_id)

|

||||

return get_json_result(

|

||||

data=False,

|

||||

message="No authorization.",

|

||||

code=settings.RetCode.AUTHENTICATION_ERROR,

|

||||

)

|

||||

|

||||

def _move_entry_recursive(source_file_entry, dest_folder):

|

||||

if source_file_entry.type == FileType.FOLDER.value:

|

||||

existing_folder = FileService.query(name=source_file_entry.name, parent_id=dest_folder.id)

|

||||

if existing_folder:

|

||||

new_folder = existing_folder[0]

|

||||

else:

|

||||

new_folder = FileService.insert(

|

||||

{

|

||||

"id": get_uuid(),

|

||||

"parent_id": dest_folder.id,

|

||||

"tenant_id": source_file_entry.tenant_id,

|

||||

"created_by": current_user.id,

|

||||

"name": source_file_entry.name,

|

||||

"location": "",

|

||||

"size": 0,

|

||||

"type": FileType.FOLDER.value,

|

||||

}

|

||||

)

|

||||

|

||||

sub_files = FileService.list_all_files_by_parent_id(source_file_entry.id)

|

||||

for sub_file in sub_files:

|

||||

_move_entry_recursive(sub_file, new_folder)

|

||||

|

||||

FileService.delete_by_id(source_file_entry.id)

|

||||

return

|

||||

|

||||

old_parent_id = source_file_entry.parent_id

|

||||

old_location = source_file_entry.location

|

||||

filename = source_file_entry.name

|

||||

|

||||

new_location = filename

|

||||

while STORAGE_IMPL.obj_exist(dest_folder.id, new_location):

|

||||

new_location += "_"

|

||||

|

||||

try:

|

||||

STORAGE_IMPL.move(old_parent_id, old_location, dest_folder.id, new_location)

|

||||

except Exception as storage_err:

|

||||

raise RuntimeError(f"Move file failed at storage layer: {str(storage_err)}")

|

||||

|

||||

FileService.update_by_id(

|

||||

source_file_entry.id,

|

||||

{

|

||||

"parent_id": dest_folder.id,

|

||||

"location": new_location,

|

||||

},

|

||||

)

|

||||

|

||||

for file in files:

|

||||

_move_entry_recursive(file, dest_folder)

|

||||

|

||||

return get_json_result(data=True)

|

||||

|

||||

except Exception as e:

|

||||

return server_error_response(e)

|

||||

|

||||
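When a file is moved, the new `move()` handler above keeps the original file name as the storage location and appends `_` until `STORAGE_IMPL.obj_exist` reports no collision in the destination folder. A minimal sketch of that loop, with the existence check passed in as a plain callable (`pick_free_location` and `exists` are hypothetical names, not part of the source):

```python
from typing import Callable


def pick_free_location(filename: str, exists: Callable[[str], bool]) -> str:
    """Return filename, suffixed with underscores until `exists` says it is free."""
    new_location = filename
    while exists(new_location):
        new_location += "_"
    return new_location


if __name__ == "__main__":
    # Pretend the destination folder already holds these two objects.
    taken = {"report.pdf", "report.pdf_"}
    print(pick_free_location("report.pdf", taken.__contains__))
    print(pick_free_location("notes.txt", taken.__contains__))
```

Note that the suffix goes after the extension, so a collided `report.pdf` is stored as `report.pdf_`; the displayed file name in the database record is unchanged by this step.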

@ -194,6 +194,9 @@ def add_llm():

|

||||

elif factory == "Azure-OpenAI":

|

||||

api_key = apikey_json(["api_key", "api_version"])

|

||||

|

||||

elif factory == "OpenRouter":

|

||||

api_key = apikey_json(["api_key", "provider_order"])

|

||||

|

||||

llm = {

|

||||

"tenant_id": current_user.id,

|

||||

"llm_factory": factory,

|

||||

|

||||

@ -15,11 +15,14 @@

|

||||

#

|

||||

import json

|

||||

import logging

|

||||

import string

|

||||

import os

|

||||

import re

|

||||

import secrets

|

||||

import time

|

||||

from datetime import datetime

|

||||

|

||||

from flask import redirect, request, session

|

||||

from flask import redirect, request, session, Response

|

||||

from flask_login import current_user, login_required, login_user, logout_user

|

||||

from werkzeug.security import check_password_hash, generate_password_hash

|

||||

|

||||

@ -46,6 +49,19 @@ from api.utils.api_utils import (

|

||||

validate_request,

|

||||

)

|

||||

from api.utils.crypt import decrypt

|

||||

from rag.utils.redis_conn import REDIS_CONN

|

||||

from api.apps import smtp_mail_server

|

||||

from api.utils.web_utils import (

|

||||

send_email_html,

|

||||

OTP_LENGTH,

|

||||

OTP_TTL_SECONDS,

|

||||

ATTEMPT_LIMIT,

|

||||

ATTEMPT_LOCK_SECONDS,

|

||||

RESEND_COOLDOWN_SECONDS,

|

||||

otp_keys,

|

||||

hash_code,

|

||||

captcha_key,

|

||||

)

|

||||

|

||||

|

||||

@manager.route("/login", methods=["POST", "GET"]) # noqa: F821

|

||||

@ -825,3 +841,170 @@ def set_tenant_info():

|

||||

return get_json_result(data=True)

|

||||

except Exception as e:

|

||||

return server_error_response(e)

|

||||

|

||||

|

||||

@manager.route("/forget/captcha", methods=["GET"]) # noqa: F821

|

||||

def forget_get_captcha():

|

||||

"""

|

||||

GET /forget/captcha?email=<email>

|

||||

- Generate an image captcha and cache it in Redis under key captcha:{email} with TTL = OTP_TTL_SECONDS.

|

||||

- Returns the captcha as a PNG image.

|

||||

"""

|

||||

email = (request.args.get("email") or "")

|

||||

if not email:

|

||||

return get_json_result(data=False, code=settings.RetCode.ARGUMENT_ERROR, message="email is required")

|

||||

|

||||

users = UserService.query(email=email)

|

||||

if not users:

|

||||

return get_json_result(data=False, code=settings.RetCode.DATA_ERROR, message="invalid email")

|

||||

|

||||

# Generate captcha text

|

||||

allowed = string.ascii_uppercase + string.digits

|

||||

captcha_text = "".join(secrets.choice(allowed) for _ in range(OTP_LENGTH))

|

||||

REDIS_CONN.set(captcha_key(email), captcha_text, 60) # Valid for 60 seconds

|

||||

|

||||

from captcha.image import ImageCaptcha

|

||||

image = ImageCaptcha(width=300, height=120, font_sizes=[50, 60, 70])

|

||||

img_bytes = image.generate(captcha_text).read()

|

||||

return Response(img_bytes, mimetype="image/png")

|

||||

|

||||

|

||||

@manager.route("/forget/otp", methods=["POST"]) # noqa: F821

|

||||

def forget_send_otp():

|

||||

"""

|

||||

POST /forget/otp

|

||||

- Verify the image captcha stored at captcha:{email} (case-insensitive).

|

||||

- On success, generate an email OTP (A–Z with length = OTP_LENGTH), store hash + salt (and timestamp) in Redis with TTL, reset attempts and cooldown, and send the OTP via email.

|

||||

"""

|

||||

req = request.get_json()

|

||||

email = req.get("email") or ""

|

||||

captcha = (req.get("captcha") or "").strip()

|

||||

|

||||

if not email or not captcha:

|

||||

return get_json_result(data=False, code=settings.RetCode.ARGUMENT_ERROR, message="email and captcha required")

|

||||

|

||||

users = UserService.query(email=email)

|

||||

if not users:

|

||||

return get_json_result(data=False, code=settings.RetCode.DATA_ERROR, message="invalid email")

|

||||

|

||||

stored_captcha = REDIS_CONN.get(captcha_key(email))

|

||||

if not stored_captcha:

|

||||

return get_json_result(data=False, code=settings.RetCode.NOT_EFFECTIVE, message="invalid or expired captcha")

|

||||

if (stored_captcha or "").strip().lower() != captcha.lower():

|

||||

return get_json_result(data=False, code=settings.RetCode.AUTHENTICATION_ERROR, message="invalid or expired captcha")

|

||||

|

||||

# Delete captcha to prevent reuse

|

||||

REDIS_CONN.delete(captcha_key(email))

|

||||

|

||||

k_code, k_attempts, k_last, k_lock = otp_keys(email)

|

||||

now = int(time.time())

|

||||

last_ts = REDIS_CONN.get(k_last)

|

||||

if last_ts:

|

||||

try:

|

||||

elapsed = now - int(last_ts)

|

||||

except Exception:

|

||||

elapsed = RESEND_COOLDOWN_SECONDS

|

||||

remaining = RESEND_COOLDOWN_SECONDS - elapsed

|

||||

if remaining > 0:

|

||||

return get_json_result(data=False, code=settings.RetCode.NOT_EFFECTIVE, message=f"you still have to wait {remaining} seconds")

|

||||

|

||||

# Generate OTP (uppercase letters only) and store hashed

|

||||

otp = "".join(secrets.choice(string.ascii_uppercase) for _ in range(OTP_LENGTH))

|

||||

salt = os.urandom(16)

|

||||

code_hash = hash_code(otp, salt)

|

||||

REDIS_CONN.set(k_code, f"{code_hash}:{salt.hex()}", OTP_TTL_SECONDS)

|

||||

REDIS_CONN.set(k_attempts, 0, OTP_TTL_SECONDS)

|

||||

REDIS_CONN.set(k_last, now, OTP_TTL_SECONDS)

|

||||

REDIS_CONN.delete(k_lock)

|

||||

|

||||

ttl_min = OTP_TTL_SECONDS // 60

|

||||

|

||||

if not smtp_mail_server:

|

||||

logging.warning("SMTP mail server not initialized; skip sending email.")

|

||||

else:

|

||||

try:

|

||||

send_email_html(

|

||||

subject="Your Password Reset Code",

|

||||

to_email=email,

|

||||

template_key="reset_code",

|

||||

code=otp,

|

||||

ttl_min=ttl_min,

|

||||

)

|

||||

except Exception:

|

||||

return get_json_result(data=False, code=settings.RetCode.SERVER_ERROR, message="failed to send email")

|

||||

|

||||

return get_json_result(data=True, code=settings.RetCode.SUCCESS, message="verification passed, email sent")

|

||||

|

||||

|

||||

@manager.route("/forget", methods=["POST"]) # noqa: F821

|

||||

def forget():

|

||||

"""

|

||||

POST: Verify email + OTP and reset password, then log the user in.

|

||||

Request JSON: { email, otp, new_password, confirm_new_password }

|

||||

"""

|

||||

req = request.get_json()

|

||||

email = req.get("email") or ""

|

||||

otp = (req.get("otp") or "").strip()

|

||||

new_pwd = req.get("new_password")

|

||||

new_pwd2 = req.get("confirm_new_password")

|

||||

|

||||

if not all([email, otp, new_pwd, new_pwd2]):

|

||||

return get_json_result(data=False, code=settings.RetCode.ARGUMENT_ERROR, message="email, otp and passwords are required")

|

||||

|

||||

# For reset, passwords are provided as-is (no decrypt needed)

|

||||

if new_pwd != new_pwd2:

|

||||

return get_json_result(data=False, code=settings.RetCode.ARGUMENT_ERROR, message="passwords do not match")

|

||||

|

||||

users = UserService.query(email=email)

|

||||

if not users:

|

||||

return get_json_result(data=False, code=settings.RetCode.DATA_ERROR, message="invalid email")

|

||||

|

||||

user = users[0]

|

||||

# Verify OTP from Redis

|

||||

k_code, k_attempts, k_last, k_lock = otp_keys(email)

|

||||

if REDIS_CONN.get(k_lock):

|

||||

return get_json_result(data=False, code=settings.RetCode.NOT_EFFECTIVE, message="too many attempts, try later")

|

||||

|

||||

stored = REDIS_CONN.get(k_code)

|

||||

if not stored:

|

||||

return get_json_result(data=False, code=settings.RetCode.NOT_EFFECTIVE, message="expired otp")

|

||||

|

||||

try:

|

||||

stored_hash, salt_hex = str(stored).split(":", 1)

|

||||

salt = bytes.fromhex(salt_hex)

|

||||

except Exception:

|

||||

return get_json_result(data=False, code=settings.RetCode.EXCEPTION_ERROR, message="otp storage corrupted")

|

||||

|

||||

# Case-insensitive verification: OTP generated uppercase

|

||||

calc = hash_code(otp.upper(), salt)

|

||||

if calc != stored_hash:

|

||||

# bump attempts

|

||||

try:

|

||||

attempts = int(REDIS_CONN.get(k_attempts) or 0) + 1

|

||||

except Exception:

|

||||

attempts = 1

|

||||

REDIS_CONN.set(k_attempts, attempts, OTP_TTL_SECONDS)

|

||||

if attempts >= ATTEMPT_LIMIT:

|

||||

REDIS_CONN.set(k_lock, int(time.time()), ATTEMPT_LOCK_SECONDS)

|

||||

return get_json_result(data=False, code=settings.RetCode.AUTHENTICATION_ERROR, message="expired otp")

|

||||

|

||||

# Success: consume OTP and reset password

|

||||

REDIS_CONN.delete(k_code)

|

||||

REDIS_CONN.delete(k_attempts)

|

||||

REDIS_CONN.delete(k_last)

|

||||

REDIS_CONN.delete(k_lock)

|

||||

|

||||

try:

|

||||

UserService.update_user_password(user.id, new_pwd)

|

||||

except Exception as e:

|

||||

logging.exception(e)

|

||||

return get_json_result(data=False, code=settings.RetCode.EXCEPTION_ERROR, message="failed to reset password")

|

||||

|

||||

# Auto login (reuse login flow)

|

||||

user.access_token = get_uuid()

|

||||

login_user(user)

|

||||

user.update_time = (current_timestamp(),)

|

||||

user.update_date = (datetime_format(datetime.now()),)

|

||||

user.save()

|

||||

msg = "Password reset successful. Logged in."

|

||||

return construct_response(data=user.to_json(), auth=user.get_id(), message=msg)

|

||||

|

||||
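The password-reset flow above never stores the OTP itself: `forget_send_otp` stores `"{code_hash}:{salt.hex()}"` in Redis, and `forget` re-hashes the submitted code with the stored salt and compares. The body of `hash_code` is not part of this hunk, so the sketch below assumes a salted SHA-256 digest, and `OTP_LENGTH = 6` is likewise an assumed value. It also uses `hmac.compare_digest` for the comparison, which is a swapped-in constant-time check rather than the `!=` used in the diff.

```python
import hashlib
import hmac
import os
import secrets
import string

OTP_LENGTH = 6  # assumed value; the real constant lives in api.utils.web_utils


def hash_code(code: str, salt: bytes) -> str:
    # Assumption: salted SHA-256. The actual hash_code may differ.
    return hashlib.sha256(salt + code.encode("utf-8")).hexdigest()


def issue_otp() -> tuple[str, str]:
    """Return (otp to email to the user, value to store server-side)."""
    otp = "".join(secrets.choice(string.ascii_uppercase) for _ in range(OTP_LENGTH))
    salt = os.urandom(16)
    return otp, f"{hash_code(otp, salt)}:{salt.hex()}"


def verify_otp(candidate: str, stored: str) -> bool:
    """Re-hash the candidate with the stored salt and compare digests."""
    stored_hash, salt_hex = stored.split(":", 1)
    salt = bytes.fromhex(salt_hex)
    # The OTP is generated in uppercase, so verification is case-insensitive.
    calc = hash_code(candidate.strip().upper(), salt)
    return hmac.compare_digest(calc, stored_hash)


if __name__ == "__main__":
    otp, stored = issue_otp()
    print(verify_otp(otp.lower(), stored))
    print(verify_otp("000000", stored))
```

In the handler itself the stored value also carries a TTL, and failed attempts are counted toward a temporary lock; both are omitted here to keep the hashing step in focus.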

@ -313,9 +313,75 @@ class RetryingPooledMySQLDatabase(PooledMySQLDatabase):

|

||||

raise

|

||||

|

||||

|

||||

class RetryingPooledPostgresqlDatabase(PooledPostgresqlDatabase):

|

||||

def __init__(self, *args, **kwargs):

|

||||

self.max_retries = kwargs.pop("max_retries", 5)

|

||||

self.retry_delay = kwargs.pop("retry_delay", 1)

|

||||

super().__init__(*args, **kwargs)

|

||||

|

||||

def execute_sql(self, sql, params=None, commit=True):

|

||||

for attempt in range(self.max_retries + 1):

|

||||

try:

|

||||

return super().execute_sql(sql, params, commit)

|

||||

except (OperationalError, InterfaceError) as e:

|

||||

# PostgreSQL specific error codes

|

||||

# 57P01: admin_shutdown

|

||||

# 57P02: crash_shutdown

|

||||

# 57P03: cannot_connect_now

|

||||

# 08006: connection_failure

|

||||

# 08003: connection_does_not_exist

|

||||

# 08000: connection_exception

|

||||

error_messages = ['connection', 'server closed', 'connection refused',

|

||||

'no connection to the server', 'terminating connection']

|

||||

|

||||

should_retry = any(msg in str(e).lower() for msg in error_messages)

|

||||

|

||||

if should_retry and attempt < self.max_retries:

|

||||

logging.warning(

|

||||

f"PostgreSQL connection issue (attempt {attempt+1}/{self.max_retries}): {e}"

|

||||

)

|

||||

self._handle_connection_loss()

|

||||

time.sleep(self.retry_delay * (2 ** attempt))

|

||||

else:

|

||||

logging.error(f"PostgreSQL execution failure: {e}")

|

||||

raise

|

||||

return None

|

||||

|

||||

def _handle_connection_loss(self):

|

||||

try:

|

||||

self.close()

|

||||

except Exception:

|

||||

pass

|

||||

try:

|

||||

self.connect()

|

||||

except Exception as e:

|

||||

logging.error(f"Failed to reconnect to PostgreSQL: {e}")

|

||||

time.sleep(0.1)

|

||||

self.connect()

|

||||

|

||||

def begin(self):

|

||||

for attempt in range(self.max_retries + 1):

|

||||

try:

|

||||

return super().begin()

|

||||

except (OperationalError, InterfaceError) as e:

|

||||

error_messages = ['connection', 'server closed', 'connection refused',

|

||||

'no connection to the server', 'terminating connection']

|

||||

|

||||

should_retry = any(msg in str(e).lower() for msg in error_messages)

|

||||

|

||||

if should_retry and attempt < self.max_retries:

|

||||

logging.warning(

|

||||

f"PostgreSQL connection lost during transaction (attempt {attempt+1}/{self.max_retries})"

|

||||

)

|

||||

self._handle_connection_loss()

|

||||

time.sleep(self.retry_delay * (2 ** attempt))

|

||||

else:

|

||||

raise

|

||||

|

||||

|

||||

class PooledDatabase(Enum):

|

||||

MYSQL = RetryingPooledMySQLDatabase

|

||||

POSTGRES = PooledPostgresqlDatabase

|

||||

POSTGRES = RetryingPooledPostgresqlDatabase

|

||||

|

||||

|

||||

class DatabaseMigrator(Enum):

|

||||

|

||||
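The retry wrapper in the hunk above follows a common pattern: classify the error by its message, reconnect, sleep for `retry_delay * 2 ** attempt`, and re-raise once `max_retries` is exhausted. The following is a minimal, database-free sketch of that control flow only. `run_with_retry`, `is_retryable`, `FakeConnectionError`, and the injectable `sleep` parameter are hypothetical names for illustration and are not part of RAGFlow or peewee.

```python
import time

# Substrings treated as "connection lost", copied from the hunk above.
RETRYABLE_MESSAGES = ("connection", "server closed", "connection refused",
                      "no connection to the server", "terminating connection")


class FakeConnectionError(Exception):
    """Hypothetical stand-in for peewee's OperationalError / InterfaceError."""


def is_retryable(exc):
    """Return True if the error text contains any retryable substring."""
    text = str(exc).lower()
    return any(msg in text for msg in RETRYABLE_MESSAGES)


def run_with_retry(operation, max_retries=3, retry_delay=1.0, sleep=time.sleep):
    """Call operation(); on a retryable error wait retry_delay * 2**attempt and retry."""
    for attempt in range(max_retries + 1):
        try:
            return operation()
        except FakeConnectionError as exc:
            if is_retryable(exc) and attempt < max_retries:
                sleep(retry_delay * (2 ** attempt))
            else:
                raise


# Usage: fail twice, then succeed; record the delays instead of sleeping.
calls = {"n": 0}
delays = []


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise FakeConnectionError("server closed the connection unexpectedly")
    return "ok"


result = run_with_retry(flaky, max_retries=3, retry_delay=0.5, sleep=delays.append)
print(result, delays)
```

Because `'connection'` is itself one of the substrings, the longer phrases that contain it (`'connection refused'`, `'no connection to the server'`, `'terminating connection'`) never change the outcome of the check; only `'server closed'` adds coverage.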

@ -476,6 +476,16 @@ class FileService(CommonService):

|

||||

|

||||

return err, files

|

||||

|

||||

@classmethod

|

||||

@DB.connection_context()

|

||||

def list_all_files_by_parent_id(cls, parent_id):

|

||||

try:

|

||||

files = cls.model.select().where((cls.model.parent_id == parent_id) & (cls.model.id != parent_id))

|

||||

return list(files)

|

||||

except Exception:

|

||||

logging.exception("list_by_parent_id failed")

|

||||

raise RuntimeError("Database error (list_by_parent_id)!")

|

||||

|

||||

@staticmethod

|

||||

def parse_docs(file_objs, user_id):

|

||||

exe = ThreadPoolExecutor(max_workers=12)

|

||||

|

||||

25

api/utils/email_templates.py

Normal file

@ -0,0 +1,25 @@

|

||||

"""

|

||||

Reusable HTML email templates and registry.

|

||||

"""

|

||||

|

||||

# Invitation email template

|

||||

INVITE_EMAIL_TMPL = """

|

||||

<p>Hi {{email}},</p>

|

||||

<p>{{inviter}} has invited you to join their team (ID: {{tenant_id}}).</p>

|

||||

<p>Click the link below to complete your registration:<br>

|

||||

<a href="{{invite_url}}">{{invite_url}}</a></p>

|

||||

<p>If you did not request this, please ignore this email.</p>

|

||||

"""

|

||||

|

||||

# Password reset code template

|

||||

RESET_CODE_EMAIL_TMPL = """

|

||||

<p>Hello,</p>

|

||||

<p>Your password reset code is: <b>{{ code }}</b></p>

|

||||

<p>This code will expire in {{ ttl_min }} minutes.</p>

|

||||

"""

|

||||

|

||||

# Template registry

|

||||

EMAIL_TEMPLATES = {

|

||||

"invite": INVITE_EMAIL_TMPL,

|

||||

"reset_code": RESET_CODE_EMAIL_TMPL,

|

||||

}

|

||||
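The registry above maps a short key to a template string, so a caller can look a template up by name and fail fast on an unknown key. The sketch below illustrates that lookup with the standard library only. `render_email` is a hypothetical helper, and the regex substitution is a deliberately simplified stand-in for a real template engine (it does no HTML escaping and supports only `{{ name }}` placeholders).

```python
import re

# One template copied from the new file above; the registry shape is the same.
EMAIL_TEMPLATES = {
    "reset_code": (
        "<p>Hello,</p>\n"
        "<p>Your password reset code is: <b>{{ code }}</b></p>\n"
        "<p>This code will expire in {{ ttl_min }} minutes.</p>\n"
    ),
}

# Matches {{ name }} with optional inner whitespace.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_email(template_key, **context):
    """Look up a template by key and fill its placeholders from context."""
    tmpl = EMAIL_TEMPLATES.get(template_key)
    if not tmpl:
        raise ValueError(f"Unknown email template: {template_key}")
    # Simplified substitution: a missing context key raises KeyError.
    return _PLACEHOLDER.sub(lambda m: str(context[m.group(1)]), tmpl)


html = render_email("reset_code", code="12345678", ttl_min=5)
print(html)
```

Keeping the templates in one dictionary means a new email type needs only a new entry and a new key, while the sending code stays unchanged.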

@ -74,12 +74,12 @@ def get_es_cluster_stats() -> dict:

|

||||

raise Exception("Elasticsearch is not in use.")

|

||||

try:

|

||||

return {

|

||||

"alive": True,

|

||||

"status": "alive",

|

||||

"message": ESConnection().get_cluster_stats()

|

||||

}

|

||||

except Exception as e:

|

||||

return {

|

||||

"alive": False,

|

||||

"status": "timeout",

|

||||

"message": f"error: {str(e)}",

|

||||

}

|

||||

|

||||

@ -90,12 +90,12 @@ def get_infinity_status():

|

||||

raise Exception("Infinity is not in use.")

|

||||

try:

|

||||

return {

|

||||

"alive": True,

|

||||

"status": "alive",

|

||||

"message": InfinityConnection().health()

|

||||

}

|

||||

except Exception as e:

|

||||

return {

|

||||

"alive": False,

|

||||

"status": "timeout",

|

||||

"message": f"error: {str(e)}",

|

||||

}

|

||||

|

||||

@ -107,12 +107,12 @@ def get_mysql_status():

|

||||

headers = ['id', 'user', 'host', 'db', 'command', 'time', 'state', 'info']

|

||||

cursor.close()

|

||||

return {

|

||||

"alive": True,

|

||||

"status": "alive",

|

||||

"message": [dict(zip(headers, r)) for r in res_rows]

|

||||

}

|

||||

except Exception as e:

|

||||

return {

|

||||

"alive": False,

|

||||

"status": "timeout",

|

||||

"message": f"error: {str(e)}",

|

||||

}

|

||||

|

||||

@ -122,12 +122,12 @@ def check_minio_alive():

|

||||

try:

|

||||

response = requests.get(f'http://{rag_settings.MINIO["host"]}/minio/health/live')

|

||||

if response.status_code == 200:

|

||||

return {'alive': True, "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

return {"status": "alive", "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

else:

|

||||

return {'alive': False, "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

return {"status": "timeout", "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

except Exception as e:

|

||||

return {

|

||||

"alive": False,

|

||||

"status": "timeout",

|

||||

"message": f"error: {str(e)}",

|

||||

}

|

||||

|

||||

@ -135,12 +135,12 @@ def check_minio_alive():

|

||||

def get_redis_info():

|

||||

try:

|

||||

return {

|

||||

"alive": True,

|

||||

"status": "alive",

|

||||

"message": REDIS_CONN.info()

|

||||

}

|

||||

except Exception as e:

|

||||

return {

|

||||

"alive": False,

|

||||

"status": "timeout",

|

||||

"message": f"error: {str(e)}",

|

||||

}

|

||||

|

||||

@ -150,12 +150,12 @@ def check_ragflow_server_alive():

|

||||

try:

|

||||

response = requests.get(f'http://{settings.HOST_IP}:{settings.HOST_PORT}/v1/system/ping')

|

||||

if response.status_code == 200:

|

||||

return {'alive': True, "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

return {"status": "alive", "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

else:

|

||||

return {'alive': False, "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

return {"status": "timeout", "message": f"Confirm elapsed: {(timer() - start_time) * 1000.0:.1f} ms."}

|

||||

except Exception as e:

|

||||

return {

|

||||

"alive": False,

|

||||

"status": "timeout",

|

||||

"message": f"error: {str(e)}",

|

||||

}

|

||||

|

||||

@ -192,9 +192,7 @@ def run_health_checks() -> tuple[dict, bool]:

|

||||

except Exception:

|

||||

result["storage"] = "nok"

|

||||

|

||||

|

||||

all_ok = (result.get("db") == "ok") and (result.get("redis") == "ok") and (result.get("doc_engine") == "ok") and (result.get("storage") == "ok")

|

||||

all_ok = (result.get("db") == "ok") and (result.get("redis") == "ok") and (result.get("doc_engine") == "ok") and (

|

||||

result.get("storage") == "ok")

|

||||

result["status"] = "ok" if all_ok else "nok"

|

||||

return result, all_ok

|

||||

|

||||

|

||||

|

||||
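The hunks above replace the boolean `alive` key with a string `status` key (`"alive"` or `"timeout"`) in the status payloads. Code that consumes these payloads may briefly see both shapes, so a small normalizer can help during the transition. This is a sketch under that assumption; `probe` and `is_alive` are hypothetical helpers, not functions from the RAGFlow codebase.

```python
def probe(check):
    """Run check() and report the result in the new {"status", "message"} shape."""
    try:
        return {"status": "alive", "message": check()}
    except Exception as e:
        # As in the diff, any failure is reported as "timeout".
        return {"status": "timeout", "message": f"error: {str(e)}"}


def is_alive(payload):
    """Accept both the new 'status' string and the legacy 'alive' boolean."""
    if "status" in payload:
        return payload["status"] == "alive"
    return bool(payload.get("alive", False))


def failing_check():
    raise ConnectionError("refused")


up = probe(lambda: "PONG")
down = probe(failing_check)
print(is_alive(up), is_alive(down), is_alive({"alive": True}))
```

Note that reporting every exception as `"timeout"` mirrors the diff; a caller cannot tell a refused connection from a slow one without reading `message`.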

@ -24,6 +24,7 @@ from urllib.parse import urlparse

|

||||

from api.apps import smtp_mail_server

|

||||

from flask_mail import Message

|

||||

from flask import render_template_string

|

||||

from api.utils.email_templates import EMAIL_TEMPLATES

|

||||

from selenium import webdriver

|

||||

from selenium.common.exceptions import TimeoutException

|

||||

from selenium.webdriver.chrome.options import Options

|

||||

@ -34,6 +35,12 @@ from selenium.webdriver.support.ui import WebDriverWait

|

||||

from webdriver_manager.chrome import ChromeDriverManager

|

||||

|

||||

|

||||

OTP_LENGTH = 8

|

||||

OTP_TTL_SECONDS = 5 * 60

|

||||

ATTEMPT_LIMIT = 5

|

||||

ATTEMPT_LOCK_SECONDS = 30 * 60

|

||||

RESEND_COOLDOWN_SECONDS = 60

|

||||

|

||||

|

||||

CONTENT_TYPE_MAP = {

|

||||

# Office

|

||||

@ -178,24 +185,49 @@ def get_float(req: dict, key: str, default: float | int = 10.0) -> float:

|

||||

return default

|

||||

|

||||

|

||||

INVITE_EMAIL_TMPL = """

|

||||

<p>Hi {{email}},</p>

|

||||

<p>{{inviter}} has invited you to join their team (ID: {{tenant_id}}).</p>

|

||||

<p>Click the link below to complete your registration:<br>

|

||||

<a href="{{invite_url}}">{{invite_url}}</a></p>

|

||||

<p>If you did not request this, please ignore this email.</p>

|

||||

"""

|

||||

def send_email_html(subject: str, to_email: str, template_key: str, **context):

|

||||

"""Generic HTML email sender using shared templates.

|

||||

template_key must exist in EMAIL_TEMPLATES.

|

||||

"""

|

||||

from api.apps import app

|

||||

tmpl = EMAIL_TEMPLATES.get(template_key)

|

||||

if not tmpl:

|

||||

raise ValueError(f"Unknown email template: {template_key}")

|

||||

with app.app_context():

|

||||

msg = Message(subject=subject, recipients=[to_email])

|

||||

msg.html = render_template_string(tmpl, **context)

|

||||

smtp_mail_server.send(msg)

|

||||

|

||||

|

||||

def send_invite_email(to_email, invite_url, tenant_id, inviter):

|

||||

from api.apps import app

|

||||

with app.app_context():

|

||||

msg = Message(subject="RAGFlow Invitation",

|

||||

recipients=[to_email])

|

||||

msg.html = render_template_string(

|

||||

INVITE_EMAIL_TMPL,

|

||||

email=to_email,

|

||||

invite_url=invite_url,

|

||||

tenant_id=tenant_id,

|

||||

inviter=inviter,

|

||||

)

|

||||

smtp_mail_server.send(msg)

|

||||

# Reuse the generic HTML sender with 'invite' template

|

||||

send_email_html(

|

||||

subject="RAGFlow Invitation",

|

||||

to_email=to_email,

|

||||

template_key="invite",

|

||||

email=to_email,

|

||||

invite_url=invite_url,

|

||||

tenant_id=tenant_id,

|

||||

inviter=inviter,

|

||||

)

|

||||

|

||||

|

||||

def otp_keys(email: str):

|

||||

email = (email or "").strip().lower()

|

||||

return (

|

||||

f"otp:{email}",

|

||||

f"otp_attempts:{email}",

|

||||

f"otp_last_sent:{email}",

|

||||

f"otp_lock:{email}",

|

||||

)

|

||||

|

||||

|

||||

def hash_code(code: str, salt: bytes) -> str:

|

||||

import hashlib

|

||||

import hmac

|

||||

return hmac.new(salt, (code or "").encode("utf-8"), hashlib.sha256).hexdigest()

|

||||

|

||||

|

||||

def captcha_key(email: str) -> str:

|

||||

return f"captcha:{email}"

|

||||

|

||||

|

||||
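The helpers above suggest that the reset code is stored as a salted HMAC-SHA256 digest and not as plain text. A minimal sketch of how generation and verification could fit around `hash_code` follows. `generate_otp`, `verify_otp`, and the choice of an uppercase-plus-digits alphabet are assumptions for illustration; the diff does not show how RAGFlow generates or compares codes.

```python
import hashlib
import hmac
import os
import secrets
import string

OTP_LENGTH = 8  # same constant as in the hunk above


def hash_code(code, salt):
    """HMAC-SHA256 of the code keyed by the salt, as in the diff."""
    return hmac.new(salt, (code or "").encode("utf-8"), hashlib.sha256).hexdigest()


def generate_otp(length=OTP_LENGTH):
    """Hypothetical generator: random uppercase letters and digits."""
    alphabet = string.ascii_uppercase + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def verify_otp(submitted, salt, stored_hash):
    """Compare digests in constant time to avoid leaking timing information."""
    return hmac.compare_digest(hash_code(submitted, salt), stored_hash)


salt = os.urandom(16)
code = generate_otp()
stored = hash_code(code, salt)  # store this digest and the salt, not the code

print(verify_otp(code, salt, stored), verify_otp("wrong", salt, stored))
```

Storing only the digest means that a leaked cache entry does not directly reveal the code, and `hmac.compare_digest` keeps the comparison time independent of where the strings differ.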

@ -31,7 +31,6 @@

|

||||

"entities_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"},

|

||||

"pagerank_fea": {"type": "integer", "default": 0},

|

||||

"tag_feas": {"type": "varchar", "default": "", "analyzer": "rankfeatures"},

|

||||

|

||||

"from_entity_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"},

|

||||

"to_entity_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"},

|

||||

"entity_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"},

|

||||

@ -39,6 +38,6 @@

|

||||

"source_id": {"type": "varchar", "default": "", "analyzer": "whitespace-#"},

|

||||

"n_hop_with_weight": {"type": "varchar", "default": ""},

|

||||

"removed_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"},

|

||||

|

||||

"doc_type_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"}

|

||||

"doc_type_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"},

|

||||

"toc_kwd": {"type": "varchar", "default": "", "analyzer": "whitespace-#"}

|

||||

}

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

---

|

||||

sidebar_position: -1

|

||||

sidebar_position: -10

|

||||

slug: /configure_knowledge_base

|

||||

---

|

||||

|

||||

@ -37,7 +37,7 @@ This section covers the following topics:

|

||||

|

||||

### Select chunking method

|

||||

|

||||

RAGFlow offers multiple chunking template to facilitate chunking files of different layouts and ensure semantic integrity. In **Chunking method**, you can choose the default template that suits the layouts and formats of your files. The following table shows the descriptions and the compatible file formats of each supported chunk template:

|

||||

RAGFlow offers multiple built-in chunking templates to facilitate chunking files of different layouts and ensure semantic integrity. From the **Built-in** chunking method dropdown under **Parse type**, you can choose the default template that suits the layouts and formats of your files. The following table shows the description and the compatible file formats of each supported chunking template:

|

||||

|

||||

| **Template** | Description | File format |

|

||||

|--------------|-----------------------------------------------------------------------|-----------------------------------------------------------------------------------------------|

|

||||

@ -54,9 +54,23 @@ RAGFlow offers multiple chunking template to facilitate chunking files of differ

|

||||

| One | Each document is chunked in its entirety (as one). | DOCX, XLSX, XLS (Excel 97-2003), PDF, TXT |

|

||||

| Tag | The dataset functions as a tag set for the others. | XLSX, CSV/TXT |

|

||||

|

||||

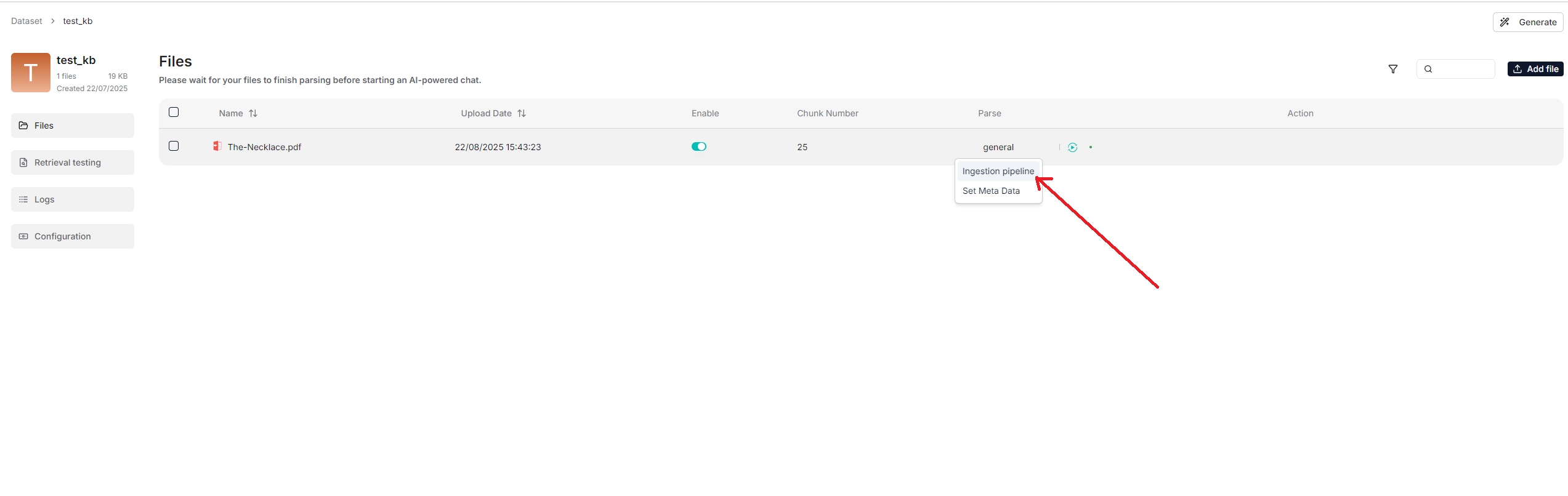

You can also change a file's chunking method on the **Datasets** page.

|

||||

You can also change a file's chunking method on the **Files** page.

|

||||

|

||||

|

||||

|

||||

|

||||

<details>

|

||||

<summary>From v0.21.0 onward, RAGFlow supports ingestion pipelines for customized data ingestion and cleansing workflows.</summary>

|

||||

|

||||

To use a customized data pipeline:

|

||||

|

||||

1. On the **Agent** page, click **+ Create agent** > **Create from blank**.

|

||||

2. Select **Ingestion pipeline** and name your data pipeline in the popup, then click **Save** to show the data pipeline canvas.

|

||||

3. After updating your data pipeline, click **Save** on the top right of the canvas.

|

||||

4. Navigate to the **Configuration** page of your dataset, select **Choose pipeline** in **Ingestion pipeline**.

|

||||

|

||||

*Your saved data pipeline will appear in the dropdown menu below.*

|

||||

|

||||

</details>

|

||||

|

||||

### Select embedding model

|

||||

|

||||

|

||||

@ -53,25 +53,31 @@ Whether to enable entity resolution. You can think of this as an entity deduplic

|

||||

- (Default) Disable entity resolution.

|

||||

- Enable entity resolution. This option consumes more tokens.

|

||||

|

||||

### Community report generation

|

||||

### Community reports

|

||||

|

||||

In a knowledge graph, a community is a cluster of entities linked by relationships. You can have the LLM generate an abstract for each community, known as a community report. See [here](https://www.microsoft.com/en-us/research/blog/graphrag-improving-global-search-via-dynamic-community-selection/) for more information. This indicates whether to generate community reports:

|

||||

|

||||

- Generate community reports. This option consumes more tokens.

|

||||

- (Default) Do not generate community reports.

|

||||

|

||||

## Procedure

|

||||

## Quickstart

|

||||

|

||||

1. On the **Configuration** page of your dataset, switch on **Extract knowledge graph** or adjust its settings as needed, and click **Save** to confirm your changes.

|

||||

1. Navigate to the **Configuration** page of your dataset and update:

|

||||

|

||||

- Entity types: *Required* - Specifies the entity types in the knowledge graph to generate. You don't have to stick with the default, but you need to customize them for your documents.

|

||||

- Method: *Optional*

|

||||

- Entity resolution: *Optional*

|

||||

- Community reports: *Optional*

|

||||

*The default knowledge graph configurations for your dataset are now set.*

|

||||

|

||||

- *The default knowledge graph configurations for your dataset are now set and files uploaded from this point onward will automatically use these settings during parsing.*

|

||||

- *Files parsed before this update will retain their original knowledge graph settings.*

|

||||

2. Navigate to the **Files** page of your dataset, click the **Generate** button on the top right corner of the page, then select **Knowledge graph** from the dropdown to initiate the knowledge graph generation process.

|

||||

|

||||

2. The knowledge graph of your dataset does *not* automatically update *until* a newly uploaded file is parsed.

|

||||

*You can click the pause button in the dropdown to halt the build process when necessary.*

|

||||

|

||||

_A **Knowledge graph** entry appears under **Configuration** once a knowledge graph is created._

|

||||

3. Go back to the **Configuration** page:

|

||||

|

||||

*Once a knowledge graph is generated, the **Knowledge graph** field changes from `Not generated` to `Generated at a specific timestamp`. You can delete it by clicking the recycle bin button to the right of the field.*

|

||||

|

||||

3. Click **Knowledge graph** to view the details of the generated graph.

|

||||

4. To use the created knowledge graph, do either of the following:

|

||||

|

||||

- In the **Chat setting** panel of your chat app, switch on the **Use knowledge graph** toggle.

|

||||

@ -79,17 +85,13 @@ In a knowledge graph, a community is a cluster of entities linked by relationshi

|

||||

|

||||

## Frequently asked questions

|

||||

|

||||

### Can I have different knowledge graph settings for different files in my dataset?

|

||||

|

||||

Yes, you can. Just one graph is generated per dataset. The smaller graphs of your files will be *combined* into one big, unified graph at the end of the graph extraction process.

|

||||

|

||||

### Does the knowledge graph automatically update when I remove a related file?

|

||||

|

||||

Nope. The knowledge graph does *not* automatically update *until* a newly uploaded document is parsed.

|

||||

Nope. The knowledge graph does *not* update *until* you regenerate a knowledge graph for your dataset.

|

||||

|

||||

### How to remove a generated knowledge graph?

|

||||

|

||||

To remove the generated knowledge graph, delete all related files in your dataset. Although the **Knowledge graph** entry will still be visible, the graph has actually been deleted.

|

||||

On the **Configuration** page of your dataset, find the **Knowledge graph** field and click the recycle bin button to the right of the field.

|

||||

|

||||

### Where is the created knowledge graph stored?

|

||||

|

||||

|

||||

@ -72,3 +72,22 @@ The maximum number of clusters to create. Defaults to 64, with a maximum limit o

|

||||

### Random seed

|

||||

|

||||

A random seed. Click **+** to change the seed value.

|

||||

|

||||

## Quickstart

|

||||

|

||||

1. Navigate to the **Configuration** page of your dataset and update:

|

||||

|

||||

- Prompt: *Optional* - We recommend that you keep it as-is until you understand the mechanism behind it.

|

||||

- Max token: *Optional*

|

||||

- Threshold: *Optional*

|

||||

- Max cluster: *Optional*

|

||||

|

||||

2. Navigate to the **Files** page of your dataset, click the **Generate** button on the top right corner of the page, then select **RAPTOR** from the dropdown to initiate the RAPTOR build process.

|

||||

|

||||

*You can click the pause button in the dropdown to halt the build process when necessary.*

|

||||

|

||||

3. Go back to the **Configuration** page:

|

||||

|

||||

*The **RAPTOR** field changes from `Not generated` to `Generated at a specific timestamp` when a RAPTOR hierarchical tree structure is generated. You can delete it by clicking the recycle bin button to the right of the field.*

|

||||

|

||||

4. Once a RAPTOR hierarchical tree structure is generated, your chat assistant and **Retrieval** agent component will use it for retrieval by default.

|

||||

|

||||

39

docs/guides/dataset/extract_table_of_contents.md

Normal file

@ -0,0 +1,39 @@

|

||||

---

|

||||

sidebar_position: 4

|

||||

slug: /enable_table_of_contents

|

||||

---

|

||||

|

||||

# Extract table of contents

|

||||

|

||||

Extract the table of contents (TOC) from documents to support long-context RAG and improve retrieval.

|

||||

|

||||

---

|

||||

|

||||

During indexing, this technique uses an LLM to extract and generate chapter information, which is added to each chunk to provide sufficient global context. At the retrieval stage, it first takes the chunks matched by search, then supplements missing chunks based on the table of contents structure. This addresses issues caused by chunk fragmentation and insufficient context, improving answer quality.
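One plausible reading of the "supplement missing chunks" step is sketched below: after search returns some chunks, pull in the other chunks that share a TOC section with any match. This is an illustrative assumption and not RAGFlow's actual implementation; the `section` field, the chunk dictionaries, and `supplement_by_toc` are hypothetical.

```python
def supplement_by_toc(chunks, matched_ids):
    """Return ids of all chunks whose TOC section contains at least one match.

    chunks: list of dicts with 'id' and 'section', in document order.
    matched_ids: set of chunk ids returned by the search step.
    """
    matched_sections = {c["section"] for c in chunks if c["id"] in matched_ids}
    return [c["id"] for c in chunks if c["section"] in matched_sections]


chunks = [
    {"id": 1, "section": "1 Introduction"},
    {"id": 2, "section": "2 Installation"},
    {"id": 3, "section": "2 Installation"},
    {"id": 4, "section": "3 Usage"},
]

# Search matched only chunk 3; its section sibling (chunk 2) is added back.
expanded = supplement_by_toc(chunks, matched_ids={3})
print(expanded)
```

Expanding by section keeps neighbouring chunks together, which is the kind of fragmentation the paragraph above describes, at the cost of sending more text to the model.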

|

||||

|

||||

:::danger WARNING

|

||||

Enabling TOC extraction requires significant memory, computational resources, and tokens.

|

||||

:::

|

||||

|

||||

## Prerequisites

|

||||

|

||||

The system's default chat model is used to extract and generate chapter information. Before proceeding, ensure that you have a chat model properly configured:

|

||||

|

||||

|

||||

|

||||

## Quickstart

|

||||

|

||||

1. Navigate to the **Configuration** page.

|

||||

|

||||

2. Enable **TOC Enhance**.

|

||||

|

||||

3. To use this technique during retrieval, do either of the following:

|

||||

|

||||

- In the **Chat setting** panel of your chat app, switch on the **TOC Enhance** toggle.

|

||||

- If you are using an agent, click the **Retrieval** agent component to specify the dataset(s) and switch on the **TOC Enhance** toggle.

|

||||

|

||||

## Frequently asked questions

|

||||

|

||||

### Will previously parsed files be searched using the TOC enhancement feature once I enable `TOC Enhance`?

|

||||

|

||||

No. Only files parsed after you enable **TOC Enhance** will be searched using the TOC enhancement feature. To apply this feature to files parsed before enabling **TOC Enhance**, you must reparse them.

|

||||

@ -1,5 +1,5 @@

|

||||

---

|

||||

sidebar_position: 1

|

||||

sidebar_position: -4

|

||||

slug: /select_pdf_parser

|

||||

---

|

||||

|

||||

@ -25,7 +25,7 @@ RAGFlow isn't one-size-fits-all. It is built for flexibility and supports deeper

|

||||

- **One**

|

||||

- To use a third-party visual model for parsing PDFs, ensure you have set a default img2txt model under **Set default models** on the **Model providers** page.

|

||||

|

||||

## Procedure

|

||||

## Quickstart

|

||||

|

||||

1. On your dataset's **Configuration** page, select a chunking method, say **General**.

|

||||

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

---

|

||||

sidebar_position: 0

|

||||

sidebar_position: -7

|

||||

slug: /set_metada

|

||||

---

|

||||

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

---

|

||||

sidebar_position: 2

|

||||

sidebar_position: -2

|

||||

slug: /set_page_rank

|

||||

---

|

||||

|

||||

|

||||

@ -42,8 +42,8 @@ A tag set is *not* involved in document indexing or retrieval. Do not specify a

|

||||

:::

|

||||

|

||||

1. Click **+ Create dataset** to create a dataset.

|

||||

2. Navigate to the **Configuration** page of the created dataset and choose **Tag** as the default chunking method.

|

||||

3. Navigate to the **Dataset** page and upload and parse your table file in XLSX, CSV, or TXT formats.

|

||||

2. Navigate to the **Configuration** page of the created dataset, select **Built-in** in **Ingestion pipeline**, then choose **Tag** as the default chunking method from the **Built-in** drop-down menu.

|

||||

3. Go back to the **Files** page and upload and parse your table file in XLSX, CSV, or TXT formats.

|

||||

_A tag cloud appears under the **Tag view** section, indicating the tag set is created:_

|

||||

|

||||

4. Click the **Table** tab to view the tag frequency table:

|

||||

|

||||

@ -46,16 +46,23 @@ The Admin CLI and Admin Service form a client-server architectural suite for RAG

|

||||

2. Install ragflow-cli.

|

||||

|

||||

```bash

|

||||

pip install ragflow-cli

|

||||

pip install ragflow-cli==0.21.0

|

||||

```

|

||||

|

||||

3. Launch the CLI client:

|

||||

|

||||

```bash

|

||||

ragflow-cli -h 0.0.0.0 -p 9381

|

||||

ragflow-cli -h 127.0.0.1 -p 9381

|

||||

```

|

||||

|

||||

Enter superuser's password to login. Default password is `admin`.

|

||||

You will be prompted to enter the superuser's password to log in.

|

||||

The default password is `admin`.

|

||||

|

||||

**Parameters:**

|

||||

|

||||

- -h: RAGFlow admin server host address

|

||||

|

||||

- -p: RAGFlow admin server port

|

||||

|

||||

|

||||

|

||||

|

||||

@ -343,19 +343,20 @@ You can add keywords or questions to a file chunk to improve its ranking for que

|

||||

|

||||

Conversations in RAGFlow are based on a particular dataset or multiple datasets. Once you have created your dataset and finished file parsing, you can go ahead and start an AI conversation.

|

||||

|

||||

1. Click the **Chat** tab in the middle top of the mage **>** **Create an assistant** to show the **Chat Configuration** dialogue *of your next dialogue*.

|

||||

1. Click the **Chat** tab in the middle top of the page **>** **Create chat** to create a chat assistant.

|

||||

2. Click the created chat app to enter its configuration page.

|

||||

> RAGFlow offers the flexibility of choosing a different chat model for each dialogue, while allowing you to set the default models in **System Model Settings**.

|

||||

|

||||

2. Update **Assistant settings**:

|

||||

2. Update **Chat setting** on the right of the configuration page:

|

||||

|

||||

- Name your assistant and specify your datasets.

|

||||

- **Empty response**:

|

||||

- If you wish to *confine* RAGFlow's answers to your datasets, leave a response here. Then when it doesn't retrieve an answer, it *uniformly* responds with what you set here.

|

||||

- If you wish RAGFlow to *improvise* when it doesn't retrieve an answer from your datasets, leave it blank, which may give rise to hallucinations.

|

||||

|

||||

3. Update **Prompt engine** or leave it as is for the beginning.

|

||||

3. Update **System prompt** or leave it as is for the beginning.

|

||||

|

||||

4. Update **Model settings**.

|

||||

4. Select a chat model in the **Model** dropdown list.

|

||||

|

||||

5. Now, let's start the show:

|

||||

|

||||

|

||||

@ -45,7 +45,7 @@ Released on October 15, 2025.

|

||||

- Claude Sonnet 4.5

|

||||

- Meituan LongCat-Flash-Thinking

|

||||

|

||||

## New agent templates

|

||||

### New agent templates

|

||||

|

||||

- Company Research Report Deep Dive Agent: Designed for financial institutions to help analysts quickly organize information, generate research reports, and make investment decisions.

|

||||

- Orchestratable Ingestion Pipeline Template: Allows users to apply this template on the canvas to rapidly establish standardized data ingestion and cleansing processes.

|

||||

|

||||

@ -227,7 +227,7 @@ class Extractor:

|

||||

async def _handle_entity_relation_summary(self, entity_or_relation_name: str, description: str) -> str:

|

||||

summary_max_tokens = 512

|

||||

use_description = truncate(description, summary_max_tokens)

|

||||

description_list = (use_description.split(GRAPH_FIELD_SEP),)

|

||||

description_list = use_description.split(GRAPH_FIELD_SEP)

|

||||

if len(description_list) <= 12:

|

||||

return use_description

|

||||

prompt_template = SUMMARIZE_DESCRIPTIONS_PROMPT

|

||||

|

||||
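The one-line change in the hunk above removes a stray trailing comma that wrapped the split result in a 1-tuple, so `len(description_list)` was always 1 and the `<= 12` early return always fired. The snippet below reproduces the difference in isolation; the separator value `"<SEP>"` is an assumption used only for illustration.

```python
GRAPH_FIELD_SEP = "<SEP>"  # assumed separator value, for illustration only

# Twenty description fragments joined by the separator.
description = GRAPH_FIELD_SEP.join(f"fact {i}" for i in range(20))

wrapped = (description.split(GRAPH_FIELD_SEP),)  # old line: 1-tuple holding the list
parts = description.split(GRAPH_FIELD_SEP)       # new line: the list itself

print(len(wrapped), len(parts))          # 1 20
print(len(wrapped) <= 12, len(parts) <= 12)  # True False
```

With the old line, descriptions were never summarized regardless of how many fragments they contained; with the fix, the length check reflects the real fragment count.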

@ -44,7 +44,7 @@ dependencies = [

|