mirror of

https://github.com/infiniflow/ragflow.git

synced 2026-02-04 17:45:07 +08:00

Compare commits

27 Commits

v0.20.5

...

86f6da2f74

| Author | SHA1 | Date | |

|---|---|---|---|

| 86f6da2f74 | |||

| 8c00cbc87a | |||

| 41e808f4e6 | |||

| bc0281040b | |||

| 341a7b1473 | |||

| c29c395390 | |||

| a23a0f230c | |||

| 2a88ce6be1 | |||

| 664b781d62 | |||

| 65571e5254 | |||

| aa30f20730 | |||

| b9b278d441 | |||

| e1d86cfee3 | |||

| 8ebd07337f | |||

| dd584d57b0 | |||

| 3d39b96c6f | |||

| 179091b1a4 | |||

| d14d92a900 | |||

| 1936ad82d2 | |||

| 8a09f07186 | |||

| df8d31451b | |||

| fc95d113c3 | |||

| 7d14455fbe | |||

| bbe6ed3b90 | |||

| 127af4e45c | |||

| 41cdba19ba | |||

| 0d9c1f1c3c |

8

.github/workflows/release.yml

vendored

8

.github/workflows/release.yml

vendored

@ -88,7 +88,9 @@ jobs:

|

||||

with:

|

||||

context: .

|

||||

push: true

|

||||

tags: infiniflow/ragflow:${{ env.RELEASE_TAG }}

|

||||

tags: |

|

||||

infiniflow/ragflow:${{ env.RELEASE_TAG }}

|

||||

infiniflow/ragflow:latest-full

|

||||

file: Dockerfile

|

||||

platforms: linux/amd64

|

||||

|

||||

@ -98,7 +100,9 @@ jobs:

|

||||

with:

|

||||

context: .

|

||||

push: true

|

||||

tags: infiniflow/ragflow:${{ env.RELEASE_TAG }}-slim

|

||||

tags: |

|

||||

infiniflow/ragflow:${{ env.RELEASE_TAG }}-slim

|

||||

infiniflow/ragflow:latest-slim

|

||||

file: Dockerfile

|

||||

build-args: LIGHTEN=1

|

||||

platforms: linux/amd64

|

||||

|

||||

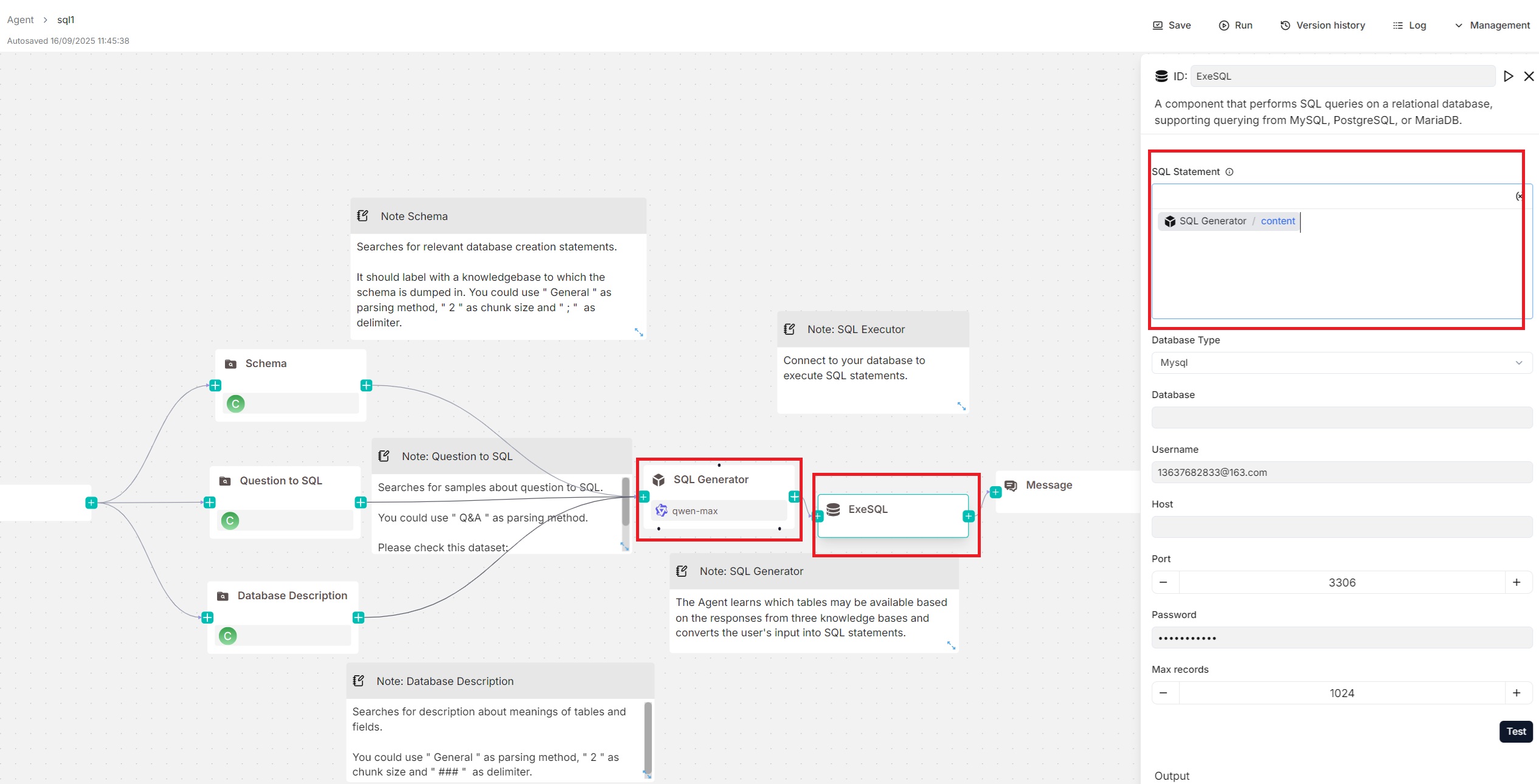

@ -83,7 +83,7 @@

|

||||

},

|

||||

"password": "20010812Yy!",

|

||||

"port": 3306,

|

||||

"sql": "Agent:WickedGoatsDivide@content",

|

||||

"sql": "{Agent:WickedGoatsDivide@content}",

|

||||

"username": "13637682833@163.com"

|

||||

}

|

||||

},

|

||||

@ -114,9 +114,7 @@

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"ed31364c727211f0bdb2bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -124,7 +122,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -145,9 +143,7 @@

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"0f968106727311f08357bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -155,7 +151,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -176,9 +172,7 @@

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"4ad1f9d0727311f0827dbafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -186,7 +180,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -347,9 +341,7 @@

|

||||

"form": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"ed31364c727211f0bdb2bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -357,7 +349,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -387,9 +379,7 @@

|

||||

"form": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"0f968106727311f08357bafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -397,7 +387,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -427,9 +417,7 @@

|

||||

"form": {

|

||||

"cross_languages": [],

|

||||

"empty_response": "",

|

||||

"kb_ids": [

|

||||

"4ad1f9d0727311f0827dbafe6e7908e6"

|

||||

],

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

@ -437,7 +425,7 @@

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"query": "sys.query",

|

||||

"query": "{sys.query}",

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

@ -539,7 +527,7 @@

|

||||

},

|

||||

"password": "20010812Yy!",

|

||||

"port": 3306,

|

||||

"sql": "Agent:WickedGoatsDivide@content",

|

||||

"sql": "{Agent:WickedGoatsDivide@content}",

|

||||

"username": "13637682833@163.com"

|

||||

},

|

||||

"label": "ExeSQL",

|

||||

|

||||

@ -219,6 +219,70 @@

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "TokenPony",

|

||||

"logo": "",

|

||||

"tags": "LLM",

|

||||

"status": "1",

|

||||

"llm": [

|

||||

{

|

||||

"llm_name": "qwen3-8b",

|

||||

"tags": "LLM,CHAT,131k",

|

||||

"max_tokens": 131000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-v3-0324",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "qwen3-32b",

|

||||

"tags": "LLM,CHAT,131k",

|

||||

"max_tokens": 131000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "kimi-k2-instruct",

|

||||

"tags": "LLM,CHAT,128K",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-r1-0528",

|

||||

"tags": "LLM,CHAT,164k",

|

||||

"max_tokens": 164000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "qwen3-coder-480b",

|

||||

"tags": "LLM,CHAT,1024k",

|

||||

"max_tokens": 1024000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "glm-4.5",

|

||||

"tags": "LLM,CHAT,131K",

|

||||

"max_tokens": 131000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "deepseek-v3.1",

|

||||

"tags": "LLM,CHAT,128k",

|

||||

"max_tokens": 128000,

|

||||

"model_type": "chat",

|

||||

"is_tools": true

|

||||

}

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "Tongyi-Qianwen",

|

||||

"logo": "",

|

||||

|

||||

@ -22,10 +22,10 @@ from openpyxl import Workbook, load_workbook

|

||||

from rag.nlp import find_codec

|

||||

|

||||

# copied from `/openpyxl/cell/cell.py`

|

||||

ILLEGAL_CHARACTERS_RE = re.compile(r'[\000-\010]|[\013-\014]|[\016-\037]')

|

||||

ILLEGAL_CHARACTERS_RE = re.compile(r"[\000-\010]|[\013-\014]|[\016-\037]")

|

||||

|

||||

|

||||

class RAGFlowExcelParser:

|

||||

|

||||

@staticmethod

|

||||

def _load_excel_to_workbook(file_like_object):

|

||||

if isinstance(file_like_object, bytes):

|

||||

@ -36,7 +36,7 @@ class RAGFlowExcelParser:

|

||||

file_head = file_like_object.read(4)

|

||||

file_like_object.seek(0)

|

||||

|

||||

if not (file_head.startswith(b'PK\x03\x04') or file_head.startswith(b'\xD0\xCF\x11\xE0')):

|

||||

if not (file_head.startswith(b"PK\x03\x04") or file_head.startswith(b"\xd0\xcf\x11\xe0")):

|

||||

logging.info("Not an Excel file, converting CSV to Excel Workbook")

|

||||

|

||||

try:

|

||||

@ -48,7 +48,7 @@ class RAGFlowExcelParser:

|

||||

raise Exception(f"Failed to parse CSV and convert to Excel Workbook: {e_csv}")

|

||||

|

||||

try:

|

||||

return load_workbook(file_like_object,data_only= True)

|

||||

return load_workbook(file_like_object, data_only=True)

|

||||

except Exception as e:

|

||||

logging.info(f"openpyxl load error: {e}, try pandas instead")

|

||||

try:

|

||||

@ -59,7 +59,7 @@ class RAGFlowExcelParser:

|

||||

except Exception as ex:

|

||||

logging.info(f"pandas with default engine load error: {ex}, try calamine instead")

|

||||

file_like_object.seek(0)

|

||||

df = pd.read_excel(file_like_object, engine='calamine')

|

||||

df = pd.read_excel(file_like_object, engine="calamine")

|

||||

return RAGFlowExcelParser._dataframe_to_workbook(df)

|

||||

except Exception as e_pandas:

|

||||

raise Exception(f"pandas.read_excel error: {e_pandas}, original openpyxl error: {e}")

|

||||

@ -116,9 +116,7 @@ class RAGFlowExcelParser:

|

||||

tb = ""

|

||||

tb += f"<table><caption>{sheetname}</caption>"

|

||||

tb += tb_rows_0

|

||||

for r in list(

|

||||

rows[1 + chunk_i * chunk_rows: min(1 + (chunk_i + 1) * chunk_rows, len(rows))]

|

||||

):

|

||||

for r in list(rows[1 + chunk_i * chunk_rows : min(1 + (chunk_i + 1) * chunk_rows, len(rows))]):

|

||||

tb += "<tr>"

|

||||

for i, c in enumerate(r):

|

||||

if c.value is None:

|

||||

@ -133,8 +131,16 @@ class RAGFlowExcelParser:

|

||||

|

||||

def markdown(self, fnm):

|

||||

import pandas as pd

|

||||

|

||||

file_like_object = BytesIO(fnm) if not isinstance(fnm, str) else fnm

|

||||

df = pd.read_excel(file_like_object)

|

||||

try:

|

||||

file_like_object.seek(0)

|

||||

df = pd.read_excel(file_like_object)

|

||||

except Exception as e:

|

||||

logging.warning(f"Parse spreadsheet error: {e}, trying to interpret as CSV file")

|

||||

file_like_object.seek(0)

|

||||

df = pd.read_csv(file_like_object)

|

||||

df = df.replace(r"^\s*$", "", regex=True)

|

||||

return df.to_markdown(index=False)

|

||||

|

||||

def __call__(self, fnm):

|

||||

|

||||

@ -34,7 +34,7 @@ from pypdf import PdfReader as pdf2_read

|

||||

|

||||

from api import settings

|

||||

from api.utils.file_utils import get_project_base_directory

|

||||

from deepdoc.vision import OCR, LayoutRecognizer, Recognizer, TableStructureRecognizer

|

||||

from deepdoc.vision import OCR, AscendLayoutRecognizer, LayoutRecognizer, Recognizer, TableStructureRecognizer

|

||||

from rag.app.picture import vision_llm_chunk as picture_vision_llm_chunk

|

||||

from rag.nlp import rag_tokenizer

|

||||

from rag.prompts import vision_llm_describe_prompt

|

||||

@ -64,33 +64,38 @@ class RAGFlowPdfParser:

|

||||

if PARALLEL_DEVICES > 1:

|

||||

self.parallel_limiter = [trio.CapacityLimiter(1) for _ in range(PARALLEL_DEVICES)]

|

||||

|

||||

layout_recognizer_type = os.getenv("LAYOUT_RECOGNIZER_TYPE", "onnx").lower()

|

||||

if layout_recognizer_type not in ["onnx", "ascend"]:

|

||||

raise RuntimeError("Unsupported layout recognizer type.")

|

||||

|

||||

if hasattr(self, "model_speciess"):

|

||||

self.layouter = LayoutRecognizer("layout." + self.model_speciess)

|

||||

recognizer_domain = "layout." + self.model_speciess

|

||||

else:

|

||||

self.layouter = LayoutRecognizer("layout")

|

||||

recognizer_domain = "layout"

|

||||

|

||||

if layout_recognizer_type == "ascend":

|

||||

logging.debug("Using Ascend LayoutRecognizer", flush=True)

|

||||

self.layouter = AscendLayoutRecognizer(recognizer_domain)

|

||||

else: # onnx

|

||||

logging.debug("Using Onnx LayoutRecognizer", flush=True)

|

||||

self.layouter = LayoutRecognizer(recognizer_domain)

|

||||

self.tbl_det = TableStructureRecognizer()

|

||||

|

||||

self.updown_cnt_mdl = xgb.Booster()

|

||||

if not settings.LIGHTEN:

|

||||

try:

|

||||

import torch.cuda

|

||||

|

||||

if torch.cuda.is_available():

|

||||

self.updown_cnt_mdl.set_param({"device": "cuda"})

|

||||

except Exception:

|

||||

logging.exception("RAGFlowPdfParser __init__")

|

||||

try:

|

||||

model_dir = os.path.join(

|

||||

get_project_base_directory(),

|

||||

"rag/res/deepdoc")

|

||||

self.updown_cnt_mdl.load_model(os.path.join(

|

||||

model_dir, "updown_concat_xgb.model"))

|

||||

model_dir = os.path.join(get_project_base_directory(), "rag/res/deepdoc")

|

||||

self.updown_cnt_mdl.load_model(os.path.join(model_dir, "updown_concat_xgb.model"))

|

||||

except Exception:

|

||||

model_dir = snapshot_download(

|

||||

repo_id="InfiniFlow/text_concat_xgb_v1.0",

|

||||

local_dir=os.path.join(get_project_base_directory(), "rag/res/deepdoc"),

|

||||

local_dir_use_symlinks=False)

|

||||

self.updown_cnt_mdl.load_model(os.path.join(

|

||||

model_dir, "updown_concat_xgb.model"))

|

||||

model_dir = snapshot_download(repo_id="InfiniFlow/text_concat_xgb_v1.0", local_dir=os.path.join(get_project_base_directory(), "rag/res/deepdoc"), local_dir_use_symlinks=False)

|

||||

self.updown_cnt_mdl.load_model(os.path.join(model_dir, "updown_concat_xgb.model"))

|

||||

|

||||

self.page_from = 0

|

||||

self.column_num = 1

|

||||

@ -102,13 +107,10 @@ class RAGFlowPdfParser:

|

||||

return c["bottom"] - c["top"]

|

||||

|

||||

def _x_dis(self, a, b):

|

||||

return min(abs(a["x1"] - b["x0"]), abs(a["x0"] - b["x1"]),

|

||||

abs(a["x0"] + a["x1"] - b["x0"] - b["x1"]) / 2)

|

||||

return min(abs(a["x1"] - b["x0"]), abs(a["x0"] - b["x1"]), abs(a["x0"] + a["x1"] - b["x0"] - b["x1"]) / 2)

|

||||

|

||||

def _y_dis(

|

||||

self, a, b):

|

||||

return (

|

||||

b["top"] + b["bottom"] - a["top"] - a["bottom"]) / 2

|

||||

def _y_dis(self, a, b):

|

||||

return (b["top"] + b["bottom"] - a["top"] - a["bottom"]) / 2

|

||||

|

||||

def _match_proj(self, b):

|

||||

proj_patt = [

|

||||

@ -130,10 +132,7 @@ class RAGFlowPdfParser:

|

||||

LEN = 6

|

||||

tks_down = rag_tokenizer.tokenize(down["text"][:LEN]).split()

|

||||

tks_up = rag_tokenizer.tokenize(up["text"][-LEN:]).split()

|

||||

tks_all = up["text"][-LEN:].strip() \

|

||||

+ (" " if re.match(r"[a-zA-Z0-9]+",

|

||||

up["text"][-1] + down["text"][0]) else "") \

|

||||

+ down["text"][:LEN].strip()

|

||||

tks_all = up["text"][-LEN:].strip() + (" " if re.match(r"[a-zA-Z0-9]+", up["text"][-1] + down["text"][0]) else "") + down["text"][:LEN].strip()

|

||||

tks_all = rag_tokenizer.tokenize(tks_all).split()

|

||||

fea = [

|

||||

up.get("R", -1) == down.get("R", -1),

|

||||

@ -144,39 +143,30 @@ class RAGFlowPdfParser:

|

||||

down["layout_type"] == "text",

|

||||

up["layout_type"] == "table",

|

||||

down["layout_type"] == "table",

|

||||

True if re.search(

|

||||

r"([。?!;!?;+))]|[a-z]\.)$",

|

||||

up["text"]) else False,

|

||||

True if re.search(r"([。?!;!?;+))]|[a-z]\.)$", up["text"]) else False,

|

||||

True if re.search(r"[,:‘“、0-9(+-]$", up["text"]) else False,

|

||||

True if re.search(

|

||||

r"(^.?[/,?;:\],。;:’”?!》】)-])",

|

||||

down["text"]) else False,

|

||||

True if re.search(r"(^.?[/,?;:\],。;:’”?!》】)-])", down["text"]) else False,

|

||||

True if re.match(r"[\((][^\(\)()]+[)\)]$", up["text"]) else False,

|

||||

True if re.search(r"[,,][^。.]+$", up["text"]) else False,

|

||||

True if re.search(r"[,,][^。.]+$", up["text"]) else False,

|

||||

True if re.search(r"[\((][^\))]+$", up["text"])

|

||||

and re.search(r"[\))]", down["text"]) else False,

|

||||

True if re.search(r"[\((][^\))]+$", up["text"]) and re.search(r"[\))]", down["text"]) else False,

|

||||

self._match_proj(down),

|

||||

True if re.match(r"[A-Z]", down["text"]) else False,

|

||||

True if re.match(r"[A-Z]", up["text"][-1]) else False,

|

||||

True if re.match(r"[a-z0-9]", up["text"][-1]) else False,

|

||||

True if re.match(r"[0-9.%,-]+$", down["text"]) else False,

|

||||

up["text"].strip()[-2:] == down["text"].strip()[-2:] if len(up["text"].strip()

|

||||

) > 1 and len(

|

||||

down["text"].strip()) > 1 else False,

|

||||

up["text"].strip()[-2:] == down["text"].strip()[-2:] if len(up["text"].strip()) > 1 and len(down["text"].strip()) > 1 else False,

|

||||

up["x0"] > down["x1"],

|

||||

abs(self.__height(up) - self.__height(down)) / min(self.__height(up),

|

||||

self.__height(down)),

|

||||

abs(self.__height(up) - self.__height(down)) / min(self.__height(up), self.__height(down)),

|

||||

self._x_dis(up, down) / max(w, 0.000001),

|

||||

(len(up["text"]) - len(down["text"])) /

|

||||

max(len(up["text"]), len(down["text"])),

|

||||

(len(up["text"]) - len(down["text"])) / max(len(up["text"]), len(down["text"])),

|

||||

len(tks_all) - len(tks_up) - len(tks_down),

|

||||

len(tks_down) - len(tks_up),

|

||||

tks_down[-1] == tks_up[-1] if tks_down and tks_up else False,

|

||||

max(down["in_row"], up["in_row"]),

|

||||

abs(down["in_row"] - up["in_row"]),

|

||||

len(tks_down) == 1 and rag_tokenizer.tag(tks_down[0]).find("n") >= 0,

|

||||

len(tks_up) == 1 and rag_tokenizer.tag(tks_up[0]).find("n") >= 0

|

||||

len(tks_up) == 1 and rag_tokenizer.tag(tks_up[0]).find("n") >= 0,

|

||||

]

|

||||

return fea

|

||||

|

||||

@ -187,9 +177,7 @@ class RAGFlowPdfParser:

|

||||

for i in range(len(arr) - 1):

|

||||

for j in range(i, -1, -1):

|

||||

# restore the order using th

|

||||

if abs(arr[j + 1]["x0"] - arr[j]["x0"]) < threshold \

|

||||

and arr[j + 1]["top"] < arr[j]["top"] \

|

||||

and arr[j + 1]["page_number"] == arr[j]["page_number"]:

|

||||

if abs(arr[j + 1]["x0"] - arr[j]["x0"]) < threshold and arr[j + 1]["top"] < arr[j]["top"] and arr[j + 1]["page_number"] == arr[j]["page_number"]:

|

||||

tmp = arr[j]

|

||||

arr[j] = arr[j + 1]

|

||||

arr[j + 1] = tmp

|

||||

@ -197,8 +185,7 @@ class RAGFlowPdfParser:

|

||||

|

||||

def _has_color(self, o):

|

||||

if o.get("ncs", "") == "DeviceGray":

|

||||

if o["stroking_color"] and o["stroking_color"][0] == 1 and o["non_stroking_color"] and \

|

||||

o["non_stroking_color"][0] == 1:

|

||||

if o["stroking_color"] and o["stroking_color"][0] == 1 and o["non_stroking_color"] and o["non_stroking_color"][0] == 1:

|

||||

if re.match(r"[a-zT_\[\]\(\)-]+", o.get("text", "")):

|

||||

return False

|

||||

return True

|

||||

@ -216,8 +203,7 @@ class RAGFlowPdfParser:

|

||||

if not tbls:

|

||||

continue

|

||||

for tb in tbls: # for table

|

||||

left, top, right, bott = tb["x0"] - MARGIN, tb["top"] - MARGIN, \

|

||||

tb["x1"] + MARGIN, tb["bottom"] + MARGIN

|

||||

left, top, right, bott = tb["x0"] - MARGIN, tb["top"] - MARGIN, tb["x1"] + MARGIN, tb["bottom"] + MARGIN

|

||||

left *= ZM

|

||||

top *= ZM

|

||||

right *= ZM

|

||||

@ -232,14 +218,13 @@ class RAGFlowPdfParser:

|

||||

tbcnt = np.cumsum(tbcnt)

|

||||

for i in range(len(tbcnt) - 1): # for page

|

||||

pg = []

|

||||

for j, tb_items in enumerate(

|

||||

recos[tbcnt[i]: tbcnt[i + 1]]): # for table

|

||||

poss = pos[tbcnt[i]: tbcnt[i + 1]]

|

||||

for j, tb_items in enumerate(recos[tbcnt[i] : tbcnt[i + 1]]): # for table

|

||||

poss = pos[tbcnt[i] : tbcnt[i + 1]]

|

||||

for it in tb_items: # for table components

|

||||

it["x0"] = (it["x0"] + poss[j][0])

|

||||

it["x1"] = (it["x1"] + poss[j][0])

|

||||

it["top"] = (it["top"] + poss[j][1])

|

||||

it["bottom"] = (it["bottom"] + poss[j][1])

|

||||

it["x0"] = it["x0"] + poss[j][0]

|

||||

it["x1"] = it["x1"] + poss[j][0]

|

||||

it["top"] = it["top"] + poss[j][1]

|

||||

it["bottom"] = it["bottom"] + poss[j][1]

|

||||

for n in ["x0", "x1", "top", "bottom"]:

|

||||

it[n] /= ZM

|

||||

it["top"] += self.page_cum_height[i]

|

||||

@ -250,8 +235,7 @@ class RAGFlowPdfParser:

|

||||

self.tb_cpns.extend(pg)

|

||||

|

||||

def gather(kwd, fzy=10, ption=0.6):

|

||||

eles = Recognizer.sort_Y_firstly(

|

||||

[r for r in self.tb_cpns if re.match(kwd, r["label"])], fzy)

|

||||

eles = Recognizer.sort_Y_firstly([r for r in self.tb_cpns if re.match(kwd, r["label"])], fzy)

|

||||

eles = Recognizer.layouts_cleanup(self.boxes, eles, 5, ption)

|

||||

return Recognizer.sort_Y_firstly(eles, 0)

|

||||

|

||||

@ -259,8 +243,7 @@ class RAGFlowPdfParser:

|

||||

headers = gather(r".*header$")

|

||||

rows = gather(r".* (row|header)")

|

||||

spans = gather(r".*spanning")

|

||||

clmns = sorted([r for r in self.tb_cpns if re.match(

|

||||

r"table column$", r["label"])], key=lambda x: (x["pn"], x["layoutno"], x["x0"]))

|

||||

clmns = sorted([r for r in self.tb_cpns if re.match(r"table column$", r["label"])], key=lambda x: (x["pn"], x["layoutno"], x["x0"]))

|

||||

clmns = Recognizer.layouts_cleanup(self.boxes, clmns, 5, 0.5)

|

||||

for b in self.boxes:

|

||||

if b.get("layout_type", "") != "table":

|

||||

@ -271,8 +254,7 @@ class RAGFlowPdfParser:

|

||||

b["R_top"] = rows[ii]["top"]

|

||||

b["R_bott"] = rows[ii]["bottom"]

|

||||

|

||||

ii = Recognizer.find_overlapped_with_threshold(

|

||||

b, headers, thr=0.3)

|

||||

ii = Recognizer.find_overlapped_with_threshold(b, headers, thr=0.3)

|

||||

if ii is not None:

|

||||

b["H_top"] = headers[ii]["top"]

|

||||

b["H_bott"] = headers[ii]["bottom"]

|

||||

@ -305,12 +287,12 @@ class RAGFlowPdfParser:

|

||||

return

|

||||

bxs = [(line[0], line[1][0]) for line in bxs]

|

||||

bxs = Recognizer.sort_Y_firstly(

|

||||

[{"x0": b[0][0] / ZM, "x1": b[1][0] / ZM,

|

||||

"top": b[0][1] / ZM, "text": "", "txt": t,

|

||||

"bottom": b[-1][1] / ZM,

|

||||

"chars": [],

|

||||

"page_number": pagenum} for b, t in bxs if b[0][0] <= b[1][0] and b[0][1] <= b[-1][1]],

|

||||

self.mean_height[pagenum-1] / 3

|

||||

[

|

||||

{"x0": b[0][0] / ZM, "x1": b[1][0] / ZM, "top": b[0][1] / ZM, "text": "", "txt": t, "bottom": b[-1][1] / ZM, "chars": [], "page_number": pagenum}

|

||||

for b, t in bxs

|

||||

if b[0][0] <= b[1][0] and b[0][1] <= b[-1][1]

|

||||

],

|

||||

self.mean_height[pagenum - 1] / 3,

|

||||

)

|

||||

|

||||

# merge chars in the same rect

|

||||

@ -321,7 +303,7 @@ class RAGFlowPdfParser:

|

||||

continue

|

||||

ch = c["bottom"] - c["top"]

|

||||

bh = bxs[ii]["bottom"] - bxs[ii]["top"]

|

||||

if abs(ch - bh) / max(ch, bh) >= 0.7 and c["text"] != ' ':

|

||||

if abs(ch - bh) / max(ch, bh) >= 0.7 and c["text"] != " ":

|

||||

self.lefted_chars.append(c)

|

||||

continue

|

||||

bxs[ii]["chars"].append(c)

|

||||

@ -345,8 +327,7 @@ class RAGFlowPdfParser:

|

||||

img_np = np.array(img)

|

||||

for b in bxs:

|

||||

if not b["text"]:

|

||||

left, right, top, bott = b["x0"] * ZM, b["x1"] * \

|

||||

ZM, b["top"] * ZM, b["bottom"] * ZM

|

||||

left, right, top, bott = b["x0"] * ZM, b["x1"] * ZM, b["top"] * ZM, b["bottom"] * ZM

|

||||

b["box_image"] = self.ocr.get_rotate_crop_image(img_np, np.array([[left, top], [right, top], [right, bott], [left, bott]], dtype=np.float32))

|

||||

boxes_to_reg.append(b)

|

||||

del b["txt"]

|

||||

@ -356,21 +337,17 @@ class RAGFlowPdfParser:

|

||||

del boxes_to_reg[i]["box_image"]

|

||||

logging.info(f"__ocr recognize {len(bxs)} boxes cost {timer() - start}s")

|

||||

bxs = [b for b in bxs if b["text"]]

|

||||

if self.mean_height[pagenum-1] == 0:

|

||||

self.mean_height[pagenum-1] = np.median([b["bottom"] - b["top"]

|

||||

for b in bxs])

|

||||

if self.mean_height[pagenum - 1] == 0:

|

||||

self.mean_height[pagenum - 1] = np.median([b["bottom"] - b["top"] for b in bxs])

|

||||

self.boxes.append(bxs)

|

||||

|

||||

def _layouts_rec(self, ZM, drop=True):

|

||||

assert len(self.page_images) == len(self.boxes)

|

||||

self.boxes, self.page_layout = self.layouter(

|

||||

self.page_images, self.boxes, ZM, drop=drop)

|

||||

self.boxes, self.page_layout = self.layouter(self.page_images, self.boxes, ZM, drop=drop)

|

||||

# cumlative Y

|

||||

for i in range(len(self.boxes)):

|

||||

self.boxes[i]["top"] += \

|

||||

self.page_cum_height[self.boxes[i]["page_number"] - 1]

|

||||

self.boxes[i]["bottom"] += \

|

||||

self.page_cum_height[self.boxes[i]["page_number"] - 1]

|

||||

self.boxes[i]["top"] += self.page_cum_height[self.boxes[i]["page_number"] - 1]

|

||||

self.boxes[i]["bottom"] += self.page_cum_height[self.boxes[i]["page_number"] - 1]

|

||||

|

||||

def _text_merge(self):

|

||||

# merge adjusted boxes

|

||||

@ -390,12 +367,10 @@ class RAGFlowPdfParser:

|

||||

while i < len(bxs) - 1:

|

||||

b = bxs[i]

|

||||

b_ = bxs[i + 1]

|

||||

if b.get("layoutno", "0") != b_.get("layoutno", "1") or b.get("layout_type", "") in ["table", "figure",

|

||||

"equation"]:

|

||||

if b.get("layoutno", "0") != b_.get("layoutno", "1") or b.get("layout_type", "") in ["table", "figure", "equation"]:

|

||||

i += 1

|

||||

continue

|

||||

if abs(self._y_dis(b, b_)

|

||||

) < self.mean_height[bxs[i]["page_number"] - 1] / 3:

|

||||

if abs(self._y_dis(b, b_)) < self.mean_height[bxs[i]["page_number"] - 1] / 3:

|

||||

# merge

|

||||

bxs[i]["x1"] = b_["x1"]

|

||||

bxs[i]["top"] = (b["top"] + b_["top"]) / 2

|

||||

@ -408,16 +383,14 @@ class RAGFlowPdfParser:

|

||||

|

||||

dis_thr = 1

|

||||

dis = b["x1"] - b_["x0"]

|

||||

if b.get("layout_type", "") != "text" or b_.get(

|

||||

"layout_type", "") != "text":

|

||||

if b.get("layout_type", "") != "text" or b_.get("layout_type", "") != "text":

|

||||

if end_with(b, ",") or start_with(b_, "(,"):

|

||||

dis_thr = -8

|

||||

else:

|

||||

i += 1

|

||||

continue

|

||||

|

||||

if abs(self._y_dis(b, b_)) < self.mean_height[bxs[i]["page_number"] - 1] / 5 \

|

||||

and dis >= dis_thr and b["x1"] < b_["x1"]:

|

||||

if abs(self._y_dis(b, b_)) < self.mean_height[bxs[i]["page_number"] - 1] / 5 and dis >= dis_thr and b["x1"] < b_["x1"]:

|

||||

# merge

|

||||

bxs[i]["x1"] = b_["x1"]

|

||||

bxs[i]["top"] = (b["top"] + b_["top"]) / 2

|

||||

@ -429,23 +402,19 @@ class RAGFlowPdfParser:

|

||||

self.boxes = bxs

|

||||

|

||||

def _naive_vertical_merge(self, zoomin=3):

|

||||

bxs = Recognizer.sort_Y_firstly(

|

||||

self.boxes, np.median(

|

||||

self.mean_height) / 3)

|

||||

bxs = Recognizer.sort_Y_firstly(self.boxes, np.median(self.mean_height) / 3)

|

||||

|

||||

column_width = np.median([b["x1"] - b["x0"] for b in self.boxes])

|

||||

self.column_num = int(self.page_images[0].size[0] / zoomin / column_width)

|

||||

if column_width < self.page_images[0].size[0] / zoomin / self.column_num:

|

||||

logging.info("Multi-column................... {} {}".format(column_width,

|

||||

self.page_images[0].size[0] / zoomin / self.column_num))

|

||||

logging.info("Multi-column................... {} {}".format(column_width, self.page_images[0].size[0] / zoomin / self.column_num))

|

||||

self.boxes = self.sort_X_by_page(self.boxes, column_width / self.column_num)

|

||||

|

||||

i = 0

|

||||

while i + 1 < len(bxs):

|

||||

b = bxs[i]

|

||||

b_ = bxs[i + 1]

|

||||

if b["page_number"] < b_["page_number"] and re.match(

|

||||

r"[0-9 •一—-]+$", b["text"]):

|

||||

if b["page_number"] < b_["page_number"] and re.match(r"[0-9 •一—-]+$", b["text"]):

|

||||

bxs.pop(i)

|

||||

continue

|

||||

if not b["text"].strip():

|

||||

@ -453,8 +422,7 @@ class RAGFlowPdfParser:

|

||||

continue

|

||||

concatting_feats = [

|

||||

b["text"].strip()[-1] in ",;:'\",、‘“;:-",

|

||||

len(b["text"].strip()) > 1 and b["text"].strip(

|

||||

)[-2] in ",;:'\",‘“、;:",

|

||||

len(b["text"].strip()) > 1 and b["text"].strip()[-2] in ",;:'\",‘“、;:",

|

||||

b_["text"].strip() and b_["text"].strip()[0] in "。;?!?”)),,、:",

|

||||

]

|

||||

# features for not concating

|

||||

@ -462,21 +430,20 @@ class RAGFlowPdfParser:

|

||||

b.get("layoutno", 0) != b_.get("layoutno", 0),

|

||||

b["text"].strip()[-1] in "。?!?",

|

||||

self.is_english and b["text"].strip()[-1] in ".!?",

|

||||

b["page_number"] == b_["page_number"] and b_["top"] -

|

||||

b["bottom"] > self.mean_height[b["page_number"] - 1] * 1.5,

|

||||

b["page_number"] < b_["page_number"] and abs(

|

||||

b["x0"] - b_["x0"]) > self.mean_width[b["page_number"] - 1] * 4,

|

||||

b["page_number"] == b_["page_number"] and b_["top"] - b["bottom"] > self.mean_height[b["page_number"] - 1] * 1.5,

|

||||

b["page_number"] < b_["page_number"] and abs(b["x0"] - b_["x0"]) > self.mean_width[b["page_number"] - 1] * 4,

|

||||

]

|

||||

# split features

|

||||

detach_feats = [b["x1"] < b_["x0"],

|

||||

b["x0"] > b_["x1"]]

|

||||

detach_feats = [b["x1"] < b_["x0"], b["x0"] > b_["x1"]]

|

||||

if (any(feats) and not any(concatting_feats)) or any(detach_feats):

|

||||

logging.debug("{} {} {} {}".format(

|

||||

b["text"],

|

||||

b_["text"],

|

||||

any(feats),

|

||||

any(concatting_feats),

|

||||

))

|

||||

logging.debug(

|

||||

"{} {} {} {}".format(

|

||||

b["text"],

|

||||

b_["text"],

|

||||

any(feats),

|

||||

any(concatting_feats),

|

||||

)

|

||||

)

|

||||

i += 1

|

||||

continue

|

||||

# merge up and down

|

||||

@ -529,14 +496,11 @@ class RAGFlowPdfParser:

|

||||

if not concat_between_pages and down["page_number"] > up["page_number"]:

|

||||

break

|

||||

|

||||

if up.get("R", "") != down.get(

|

||||

"R", "") and up["text"][-1] != ",":

|

||||

if up.get("R", "") != down.get("R", "") and up["text"][-1] != ",":

|

||||

i += 1

|

||||

continue

|

||||

|

||||

if re.match(r"[0-9]{2,3}/[0-9]{3}$", up["text"]) \

|

||||

or re.match(r"[0-9]{2,3}/[0-9]{3}$", down["text"]) \

|

||||

or not down["text"].strip():

|

||||

if re.match(r"[0-9]{2,3}/[0-9]{3}$", up["text"]) or re.match(r"[0-9]{2,3}/[0-9]{3}$", down["text"]) or not down["text"].strip():

|

||||

i += 1

|

||||

continue

|

||||

|

||||

@ -544,14 +508,12 @@ class RAGFlowPdfParser:

|

||||

i += 1

|

||||

continue

|

||||

|

||||

if up["x1"] < down["x0"] - 10 * \

|

||||

mw or up["x0"] > down["x1"] + 10 * mw:

|

||||

if up["x1"] < down["x0"] - 10 * mw or up["x0"] > down["x1"] + 10 * mw:

|

||||

i += 1

|

||||

continue

|

||||

|

||||

if i - dp < 5 and up.get("layout_type") == "text":

|

||||

if up.get("layoutno", "1") == down.get(

|

||||

"layoutno", "2"):

|

||||

if up.get("layoutno", "1") == down.get("layoutno", "2"):

|

||||

dfs(down, i + 1)

|

||||

boxes.pop(i)

|

||||

return

|

||||

@ -559,8 +521,7 @@ class RAGFlowPdfParser:

|

||||

continue

|

||||

|

||||

fea = self._updown_concat_features(up, down)

|

||||

if self.updown_cnt_mdl.predict(

|

||||

xgb.DMatrix([fea]))[0] <= 0.5:

|

||||

if self.updown_cnt_mdl.predict(xgb.DMatrix([fea]))[0] <= 0.5:

|

||||

i += 1

|

||||

continue

|

||||

dfs(down, i + 1)

|

||||

@ -584,16 +545,14 @@ class RAGFlowPdfParser:

|

||||

c["text"] = c["text"].strip()

|

||||

if not c["text"]:

|

||||

continue

|

||||

if t["text"] and re.match(

|

||||

r"[0-9\.a-zA-Z]+$", t["text"][-1] + c["text"][-1]):

|

||||

if t["text"] and re.match(r"[0-9\.a-zA-Z]+$", t["text"][-1] + c["text"][-1]):

|

||||

t["text"] += " "

|

||||

t["text"] += c["text"]

|

||||

t["x0"] = min(t["x0"], c["x0"])

|

||||

t["x1"] = max(t["x1"], c["x1"])

|

||||

t["page_number"] = min(t["page_number"], c["page_number"])

|

||||

t["bottom"] = c["bottom"]

|

||||

if not t["layout_type"] \

|

||||

and c["layout_type"]:

|

||||

if not t["layout_type"] and c["layout_type"]:

|

||||

t["layout_type"] = c["layout_type"]

|

||||

boxes.append(t)

|

||||

|

||||

@ -605,25 +564,20 @@ class RAGFlowPdfParser:

|

||||

findit = False

|

||||

i = 0

|

||||

while i < len(self.boxes):

|

||||

if not re.match(r"(contents|目录|目次|table of contents|致谢|acknowledge)$",

|

||||

re.sub(r"( | |\u3000)+", "", self.boxes[i]["text"].lower())):

|

||||

if not re.match(r"(contents|目录|目次|table of contents|致谢|acknowledge)$", re.sub(r"( | |\u3000)+", "", self.boxes[i]["text"].lower())):

|

||||

i += 1

|

||||

continue

|

||||

findit = True

|

||||

eng = re.match(

|

||||

r"[0-9a-zA-Z :'.-]{5,}",

|

||||

self.boxes[i]["text"].strip())

|

||||

eng = re.match(r"[0-9a-zA-Z :'.-]{5,}", self.boxes[i]["text"].strip())

|

||||

self.boxes.pop(i)

|

||||

if i >= len(self.boxes):

|

||||

break

|

||||

prefix = self.boxes[i]["text"].strip()[:3] if not eng else " ".join(

|

||||

self.boxes[i]["text"].strip().split()[:2])

|

||||

prefix = self.boxes[i]["text"].strip()[:3] if not eng else " ".join(self.boxes[i]["text"].strip().split()[:2])

|

||||

while not prefix:

|

||||

self.boxes.pop(i)

|

||||

if i >= len(self.boxes):

|

||||

break

|

||||

prefix = self.boxes[i]["text"].strip()[:3] if not eng else " ".join(

|

||||

self.boxes[i]["text"].strip().split()[:2])

|

||||

prefix = self.boxes[i]["text"].strip()[:3] if not eng else " ".join(self.boxes[i]["text"].strip().split()[:2])

|

||||

self.boxes.pop(i)

|

||||

if i >= len(self.boxes) or not prefix:

|

||||

break

|

||||

@ -662,10 +616,12 @@ class RAGFlowPdfParser:

|

||||

self.boxes.pop(i + 1)

|

||||

continue

|

||||

|

||||

if b["text"].strip()[0] != b_["text"].strip()[0] \

|

||||

or b["text"].strip()[0].lower() in set("qwertyuopasdfghjklzxcvbnm") \

|

||||

or rag_tokenizer.is_chinese(b["text"].strip()[0]) \

|

||||

or b["top"] > b_["bottom"]:

|

||||

if (

|

||||

b["text"].strip()[0] != b_["text"].strip()[0]

|

||||

or b["text"].strip()[0].lower() in set("qwertyuopasdfghjklzxcvbnm")

|

||||

or rag_tokenizer.is_chinese(b["text"].strip()[0])

|

||||

or b["top"] > b_["bottom"]

|

||||

):

|

||||

i += 1

|

||||

continue

|

||||

b_["text"] = b["text"] + "\n" + b_["text"]

|

||||

@ -685,12 +641,8 @@ class RAGFlowPdfParser:

|

||||

if "layoutno" not in self.boxes[i]:

|

||||

i += 1

|

||||

continue

|

||||

lout_no = str(self.boxes[i]["page_number"]) + \

|

||||

"-" + str(self.boxes[i]["layoutno"])

|

||||

if TableStructureRecognizer.is_caption(self.boxes[i]) or self.boxes[i]["layout_type"] in ["table caption",

|

||||

"title",

|

||||

"figure caption",

|

||||

"reference"]:

|

||||

lout_no = str(self.boxes[i]["page_number"]) + "-" + str(self.boxes[i]["layoutno"])

|

||||

if TableStructureRecognizer.is_caption(self.boxes[i]) or self.boxes[i]["layout_type"] in ["table caption", "title", "figure caption", "reference"]:

|

||||

nomerge_lout_no.append(lst_lout_no)

|

||||

if self.boxes[i]["layout_type"] == "table":

|

||||

if re.match(r"(数据|资料|图表)*来源[:: ]", self.boxes[i]["text"]):

|

||||

@ -716,8 +668,7 @@ class RAGFlowPdfParser:

|

||||

|

||||

# merge table on different pages

|

||||

nomerge_lout_no = set(nomerge_lout_no)

|

||||

tbls = sorted([(k, bxs) for k, bxs in tables.items()],

|

||||

key=lambda x: (x[1][0]["top"], x[1][0]["x0"]))

|

||||

tbls = sorted([(k, bxs) for k, bxs in tables.items()], key=lambda x: (x[1][0]["top"], x[1][0]["x0"]))

|

||||

|

||||

i = len(tbls) - 1

|

||||

while i - 1 >= 0:

|

||||

@ -758,9 +709,7 @@ class RAGFlowPdfParser:

|

||||

if b.get("layout_type", "").find("caption") >= 0:

|

||||

continue

|

||||

y_dis = self._y_dis(c, b)

|

||||

x_dis = self._x_dis(

|

||||

c, b) if not x_overlapped(

|

||||

c, b) else 0

|

||||

x_dis = self._x_dis(c, b) if not x_overlapped(c, b) else 0

|

||||

dis = y_dis * y_dis + x_dis * x_dis

|

||||

if dis < minv:

|

||||

mink = k

|

||||

@ -774,18 +723,10 @@ class RAGFlowPdfParser:

|

||||

# continue

|

||||

if tv < fv and tk:

|

||||

tables[tk].insert(0, c)

|

||||

logging.debug(

|

||||

"TABLE:" +

|

||||

self.boxes[i]["text"] +

|

||||

"; Cap: " +

|

||||

tk)

|

||||

logging.debug("TABLE:" + self.boxes[i]["text"] + "; Cap: " + tk)

|

||||

elif fk:

|

||||

figures[fk].insert(0, c)

|

||||

logging.debug(

|

||||

"FIGURE:" +

|

||||

self.boxes[i]["text"] +

|

||||

"; Cap: " +

|

||||

tk)

|

||||

logging.debug("FIGURE:" + self.boxes[i]["text"] + "; Cap: " + tk)

|

||||

self.boxes.pop(i)

|

||||

|

||||

def cropout(bxs, ltype, poss):

|

||||

@ -794,29 +735,19 @@ class RAGFlowPdfParser:

|

||||

if len(pn) < 2:

|

||||

pn = list(pn)[0]

|

||||

ht = self.page_cum_height[pn]

|

||||

b = {

|

||||

"x0": np.min([b["x0"] for b in bxs]),

|

||||

"top": np.min([b["top"] for b in bxs]) - ht,

|

||||

"x1": np.max([b["x1"] for b in bxs]),

|

||||

"bottom": np.max([b["bottom"] for b in bxs]) - ht

|

||||

}

|

||||

b = {"x0": np.min([b["x0"] for b in bxs]), "top": np.min([b["top"] for b in bxs]) - ht, "x1": np.max([b["x1"] for b in bxs]), "bottom": np.max([b["bottom"] for b in bxs]) - ht}

|

||||

louts = [layout for layout in self.page_layout[pn] if layout["type"] == ltype]

|

||||

ii = Recognizer.find_overlapped(b, louts, naive=True)

|

||||

if ii is not None:

|

||||

b = louts[ii]

|

||||

else:

|

||||

logging.warning(

|

||||

f"Missing layout match: {pn + 1},%s" %

|

||||

(bxs[0].get(

|

||||

"layoutno", "")))

|

||||

logging.warning(f"Missing layout match: {pn + 1},%s" % (bxs[0].get("layoutno", "")))

|

||||

|

||||

left, top, right, bott = b["x0"], b["top"], b["x1"], b["bottom"]

|

||||

if right < left:

|

||||

right = left + 1

|

||||

poss.append((pn + self.page_from, left, right, top, bott))

|

||||

return self.page_images[pn] \

|

||||

.crop((left * ZM, top * ZM,

|

||||

right * ZM, bott * ZM))

|

||||

return self.page_images[pn].crop((left * ZM, top * ZM, right * ZM, bott * ZM))

|

||||

pn = {}

|

||||

for b in bxs:

|

||||

p = b["page_number"] - 1

|

||||

@ -825,10 +756,7 @@ class RAGFlowPdfParser:

|

||||

pn[p].append(b)

|

||||

pn = sorted(pn.items(), key=lambda x: x[0])

|

||||

imgs = [cropout(arr, ltype, poss) for p, arr in pn]

|

||||

pic = Image.new("RGB",

|

||||

(int(np.max([i.size[0] for i in imgs])),

|

||||

int(np.sum([m.size[1] for m in imgs]))),

|

||||

(245, 245, 245))

|

||||

pic = Image.new("RGB", (int(np.max([i.size[0] for i in imgs])), int(np.sum([m.size[1] for m in imgs]))), (245, 245, 245))

|

||||

height = 0

|

||||

for img in imgs:

|

||||

pic.paste(img, (0, int(height)))

|

||||

@ -848,30 +776,20 @@ class RAGFlowPdfParser:

|

||||

poss = []

|

||||

|

||||

if separate_tables_figures:

|

||||

figure_results.append(

|

||||

(cropout(

|

||||

bxs,

|

||||

"figure", poss),

|

||||

[txt]))

|

||||

figure_results.append((cropout(bxs, "figure", poss), [txt]))

|

||||

figure_positions.append(poss)

|

||||

else:

|

||||

res.append(

|

||||

(cropout(

|

||||

bxs,

|

||||

"figure", poss),

|

||||

[txt]))

|

||||

res.append((cropout(bxs, "figure", poss), [txt]))

|

||||

positions.append(poss)

|

||||

|

||||

for k, bxs in tables.items():

|

||||

if not bxs:

|

||||

continue

|

||||

bxs = Recognizer.sort_Y_firstly(bxs, np.mean(

|

||||

[(b["bottom"] - b["top"]) / 2 for b in bxs]))

|

||||

bxs = Recognizer.sort_Y_firstly(bxs, np.mean([(b["bottom"] - b["top"]) / 2 for b in bxs]))

|

||||

|

||||

poss = []

|

||||

|

||||

res.append((cropout(bxs, "table", poss),

|

||||

self.tbl_det.construct_table(bxs, html=return_html, is_english=self.is_english)))

|

||||

res.append((cropout(bxs, "table", poss), self.tbl_det.construct_table(bxs, html=return_html, is_english=self.is_english)))

|

||||

positions.append(poss)

|

||||

|

||||

if separate_tables_figures:

|

||||

@ -905,7 +823,7 @@ class RAGFlowPdfParser:

|

||||

(r"[0-9]+)", 10),

|

||||

(r"[\((][0-9]+[)\)]", 11),

|

||||

(r"[零一二三四五六七八九十百]+是", 12),

|

||||

(r"[⚫•➢✓]", 12)

|

||||

(r"[⚫•➢✓]", 12),

|

||||

]:

|

||||

if re.match(p, line):

|

||||

return j

|

||||

@ -924,12 +842,9 @@ class RAGFlowPdfParser:

|

||||

if pn[-1] - 1 >= page_images_cnt:

|

||||

return ""

|

||||

|

||||

return "@@{}\t{:.1f}\t{:.1f}\t{:.1f}\t{:.1f}##" \

|

||||

.format("-".join([str(p) for p in pn]),

|

||||

bx["x0"], bx["x1"], top, bott)

|

||||

return "@@{}\t{:.1f}\t{:.1f}\t{:.1f}\t{:.1f}##".format("-".join([str(p) for p in pn]), bx["x0"], bx["x1"], top, bott)

|

||||

|

||||

def __filterout_scraps(self, boxes, ZM):

|

||||

|

||||

def width(b):

|

||||

return b["x1"] - b["x0"]

|

||||

|

||||

@ -939,8 +854,7 @@ class RAGFlowPdfParser:

|

||||

def usefull(b):

|

||||

if b.get("layout_type"):

|

||||

return True

|

||||

if width(

|

||||

b) > self.page_images[b["page_number"] - 1].size[0] / ZM / 3:

|

||||

if width(b) > self.page_images[b["page_number"] - 1].size[0] / ZM / 3:

|

||||

return True

|

||||

if b["bottom"] - b["top"] > self.mean_height[b["page_number"] - 1]:

|

||||

return True

|

||||

@ -952,31 +866,23 @@ class RAGFlowPdfParser:

|

||||

widths = []

|

||||

pw = self.page_images[boxes[0]["page_number"] - 1].size[0] / ZM

|

||||

mh = self.mean_height[boxes[0]["page_number"] - 1]

|

||||

mj = self.proj_match(

|

||||

boxes[0]["text"]) or boxes[0].get(

|

||||

"layout_type",

|

||||

"") == "title"

|

||||

mj = self.proj_match(boxes[0]["text"]) or boxes[0].get("layout_type", "") == "title"

|

||||

|

||||

def dfs(line, st):

|

||||

nonlocal mh, pw, lines, widths

|

||||

lines.append(line)

|

||||

widths.append(width(line))

|

||||

mmj = self.proj_match(

|

||||

line["text"]) or line.get(

|

||||

"layout_type",

|

||||

"") == "title"

|

||||

mmj = self.proj_match(line["text"]) or line.get("layout_type", "") == "title"

|

||||

for i in range(st + 1, min(st + 20, len(boxes))):

|

||||

if (boxes[i]["page_number"] - line["page_number"]) > 0:

|

||||

break

|

||||

if not mmj and self._y_dis(

|

||||

line, boxes[i]) >= 3 * mh and height(line) < 1.5 * mh:

|

||||

if not mmj and self._y_dis(line, boxes[i]) >= 3 * mh and height(line) < 1.5 * mh:

|

||||

break

|

||||

|

||||

if not usefull(boxes[i]):

|

||||

continue

|

||||

if mmj or \

|

||||

(self._x_dis(boxes[i], line) < pw / 10): \

|

||||

# and abs(width(boxes[i])-width_mean)/max(width(boxes[i]),width_mean)<0.5):

|

||||

if mmj or (self._x_dis(boxes[i], line) < pw / 10):

|

||||

# and abs(width(boxes[i])-width_mean)/max(width(boxes[i]),width_mean)<0.5):

|

||||

# concat following

|

||||

dfs(boxes[i], i)

|

||||

boxes.pop(i)

|

||||

@ -992,11 +898,9 @@ class RAGFlowPdfParser:

|

||||

boxes.pop(0)

|

||||

mw = np.mean(widths)

|

||||

if mj or mw / pw >= 0.35 or mw > 200:

|

||||

res.append(

|

||||

"\n".join([c["text"] + self._line_tag(c, ZM) for c in lines]))

|

||||

res.append("\n".join([c["text"] + self._line_tag(c, ZM) for c in lines]))

|

||||

else:

|

||||

logging.debug("REMOVED: " +

|

||||

"<<".join([c["text"] for c in lines]))

|

||||

logging.debug("REMOVED: " + "<<".join([c["text"] for c in lines]))

|

||||

|

||||

return "\n\n".join(res)

|

||||

|

||||

@ -1004,16 +908,14 @@ class RAGFlowPdfParser:

|

||||

def total_page_number(fnm, binary=None):

|

||||

try:

|

||||

with sys.modules[LOCK_KEY_pdfplumber]:

|

||||

pdf = pdfplumber.open(

|

||||

fnm) if not binary else pdfplumber.open(BytesIO(binary))

|

||||

pdf = pdfplumber.open(fnm) if not binary else pdfplumber.open(BytesIO(binary))

|

||||

total_page = len(pdf.pages)

|

||||

pdf.close()

|

||||

return total_page

|

||||

except Exception:

|

||||

logging.exception("total_page_number")

|

||||

|

||||

def __images__(self, fnm, zoomin=3, page_from=0,

|

||||

page_to=299, callback=None):

|

||||

def __images__(self, fnm, zoomin=3, page_from=0, page_to=299, callback=None):

|

||||

self.lefted_chars = []

|

||||

self.mean_height = []

|

||||

self.mean_width = []

|

||||

@ -1025,10 +927,9 @@ class RAGFlowPdfParser:

|

||||

start = timer()

|

||||

try:

|

||||

with sys.modules[LOCK_KEY_pdfplumber]:

|

||||

with (pdfplumber.open(fnm) if isinstance(fnm, str) else pdfplumber.open(BytesIO(fnm))) as pdf:

|

||||

with pdfplumber.open(fnm) if isinstance(fnm, str) else pdfplumber.open(BytesIO(fnm)) as pdf:

|

||||

self.pdf = pdf

|

||||

self.page_images = [p.to_image(resolution=72 * zoomin, antialias=True).annotated for i, p in

|

||||

enumerate(self.pdf.pages[page_from:page_to])]

|

||||

self.page_images = [p.to_image(resolution=72 * zoomin, antialias=True).annotated for i, p in enumerate(self.pdf.pages[page_from:page_to])]

|

||||

|

||||

try:

|

||||

self.page_chars = [[c for c in page.dedupe_chars().chars if self._has_color(c)] for page in self.pdf.pages[page_from:page_to]]

|

||||

@ -1044,11 +945,11 @@ class RAGFlowPdfParser:

|

||||

|

||||

self.outlines = []

|

||||

try:

|

||||

with (pdf2_read(fnm if isinstance(fnm, str)

|

||||

else BytesIO(fnm))) as pdf:

|

||||

with pdf2_read(fnm if isinstance(fnm, str) else BytesIO(fnm)) as pdf:

|

||||

self.pdf = pdf

|

||||

|

||||

outlines = self.pdf.outline

|

||||

|

||||

def dfs(arr, depth):

|

||||

for a in arr:

|

||||

if isinstance(a, dict):

|

||||

@ -1065,11 +966,11 @@ class RAGFlowPdfParser:

|

||||

logging.warning("Miss outlines")

|

||||

|

||||

logging.debug("Images converted.")

|

||||

self.is_english = [re.search(r"[a-zA-Z0-9,/¸;:'\[\]\(\)!@#$%^&*\"?<>._-]{30,}", "".join(

|

||||

random.choices([c["text"] for c in self.page_chars[i]], k=min(100, len(self.page_chars[i]))))) for i in

|

||||

range(len(self.page_chars))]

|

||||

if sum([1 if e else 0 for e in self.is_english]) > len(

|

||||

self.page_images) / 2:

|

||||

self.is_english = [

|

||||

re.search(r"[a-zA-Z0-9,/¸;:'\[\]\(\)!@#$%^&*\"?<>._-]{30,}", "".join(random.choices([c["text"] for c in self.page_chars[i]], k=min(100, len(self.page_chars[i])))))

|

||||

for i in range(len(self.page_chars))

|

||||

]

|

||||

if sum([1 if e else 0 for e in self.is_english]) > len(self.page_images) / 2:

|

||||

self.is_english = True

|

||||

else:

|

||||

self.is_english = False

|

||||

@ -1077,10 +978,12 @@ class RAGFlowPdfParser:

|

||||

async def __img_ocr(i, id, img, chars, limiter):

|

||||

j = 0

|

||||

while j + 1 < len(chars):

|

||||

if chars[j]["text"] and chars[j + 1]["text"] \

|

||||

and re.match(r"[0-9a-zA-Z,.:;!%]+", chars[j]["text"] + chars[j + 1]["text"]) \

|

||||

and chars[j + 1]["x0"] - chars[j]["x1"] >= min(chars[j + 1]["width"],

|

||||

chars[j]["width"]) / 2:

|

||||

if (

|

||||

chars[j]["text"]

|

||||

and chars[j + 1]["text"]

|

||||

and re.match(r"[0-9a-zA-Z,.:;!%]+", chars[j]["text"] + chars[j + 1]["text"])

|

||||

and chars[j + 1]["x0"] - chars[j]["x1"] >= min(chars[j + 1]["width"], chars[j]["width"]) / 2

|

||||

):

|

||||

chars[j]["text"] += " "

|

||||

j += 1

|

||||

|

||||

@ -1096,12 +999,8 @@ class RAGFlowPdfParser:

|

||||

async def __img_ocr_launcher():

|

||||

def __ocr_preprocess():

|

||||

chars = self.page_chars[i] if not self.is_english else []

|

||||

self.mean_height.append(

|

||||

np.median(sorted([c["height"] for c in chars])) if chars else 0

|

||||

)

|

||||

self.mean_width.append(

|

||||

np.median(sorted([c["width"] for c in chars])) if chars else 8

|

||||

)

|

||||

self.mean_height.append(np.median(sorted([c["height"] for c in chars])) if chars else 0)

|

||||

self.mean_width.append(np.median(sorted([c["width"] for c in chars])) if chars else 8)

|

||||

self.page_cum_height.append(img.size[1] / zoomin)

|

||||

return chars

|

||||

|

||||

@ -1110,8 +1009,7 @@ class RAGFlowPdfParser:

|

||||

for i, img in enumerate(self.page_images):

|

||||

chars = __ocr_preprocess()

|

||||

|

||||

nursery.start_soon(__img_ocr, i, i % PARALLEL_DEVICES, img, chars,

|

||||

self.parallel_limiter[i % PARALLEL_DEVICES])

|

||||

nursery.start_soon(__img_ocr, i, i % PARALLEL_DEVICES, img, chars, self.parallel_limiter[i % PARALLEL_DEVICES])

|

||||

await trio.sleep(0.1)

|

||||

else:

|

||||

for i, img in enumerate(self.page_images):

|

||||

@ -1124,11 +1022,9 @@ class RAGFlowPdfParser:

|

||||

|

||||

logging.info(f"__images__ {len(self.page_images)} pages cost {timer() - start}s")

|

||||

|

||||

if not self.is_english and not any(

|

||||

[c for c in self.page_chars]) and self.boxes:

|

||||

if not self.is_english and not any([c for c in self.page_chars]) and self.boxes:

|

||||

bxes = [b for bxs in self.boxes for b in bxs]

|

||||

self.is_english = re.search(r"[\na-zA-Z0-9,/¸;:'\[\]\(\)!@#$%^&*\"?<>._-]{30,}",

|

||||

"".join([b["text"] for b in random.choices(bxes, k=min(30, len(bxes)))]))

|

||||

self.is_english = re.search(r"[\na-zA-Z0-9,/¸;:'\[\]\(\)!@#$%^&*\"?<>._-]{30,}", "".join([b["text"] for b in random.choices(bxes, k=min(30, len(bxes)))]))

|

||||

|

||||

logging.debug("Is it English:", self.is_english)

|

||||

|

||||

@ -1144,8 +1040,7 @@ class RAGFlowPdfParser:

|

||||

self._text_merge()

|

||||

self._concat_downward()

|

||||

self._filter_forpages()

|

||||

tbls = self._extract_table_figure(

|

||||

need_image, zoomin, return_html, False)

|

||||

tbls = self._extract_table_figure(need_image, zoomin, return_html, False)

|

||||

return self.__filterout_scraps(deepcopy(self.boxes), zoomin), tbls

|

||||

|

||||

def parse_into_bboxes(self, fnm, callback=None, zoomin=3):

|

||||

@ -1177,11 +1072,11 @@ class RAGFlowPdfParser:

|

||||

def insert_table_figures(tbls_or_figs, layout_type):

|

||||

def min_rectangle_distance(rect1, rect2):

|

||||

import math

|

||||

|

||||

pn1, left1, right1, top1, bottom1 = rect1

|

||||

pn2, left2, right2, top2, bottom2 = rect2

|

||||

if (right1 >= left2 and right2 >= left1 and

|

||||

bottom1 >= top2 and bottom2 >= top1):

|

||||

return 0 + (pn1-pn2)*10000

|

||||

if right1 >= left2 and right2 >= left1 and bottom1 >= top2 and bottom2 >= top1:

|

||||

return 0 + (pn1 - pn2) * 10000

|

||||

if right1 < left2:

|

||||

dx = left2 - right1

|

||||

elif right2 < left1:

|

||||

@ -1194,18 +1089,16 @@ class RAGFlowPdfParser:

|

||||

dy = top1 - bottom2

|

||||

else:

|

||||

dy = 0

|

||||

return math.sqrt(dx*dx + dy*dy) + (pn1-pn2)*10000

|

||||

return math.sqrt(dx * dx + dy * dy) + (pn1 - pn2) * 10000

|

||||

|

||||

for (img, txt), poss in tbls_or_figs:

|

||||

bboxes = [(i, (b["page_number"], b["x0"], b["x1"], b["top"], b["bottom"])) for i, b in enumerate(self.boxes)]

|

||||

dists = [(min_rectangle_distance((pn, left, right, top, bott), rect),i) for i, rect in bboxes for pn, left, right, top, bott in poss]

|

||||

dists = [(min_rectangle_distance((pn, left, right, top, bott), rect), i) for i, rect in bboxes for pn, left, right, top, bott in poss]

|

||||

min_i = np.argmin(dists, axis=0)[0]

|

||||

min_i, rect = bboxes[dists[min_i][-1]]

|

||||

if isinstance(txt, list):

|

||||

txt = "\n".join(txt)

|

||||

self.boxes.insert(min_i, {

|

||||

"page_number": rect[0], "x0": rect[1], "x1": rect[2], "top": rect[3], "bottom": rect[4], "layout_type": layout_type, "text": txt, "image": img

|

||||

})

|

||||

self.boxes.insert(min_i, {"page_number": rect[0], "x0": rect[1], "x1": rect[2], "top": rect[3], "bottom": rect[4], "layout_type": layout_type, "text": txt, "image": img})

|

||||

|

||||

for b in self.boxes:

|

||||

b["position_tag"] = self._line_tag(b, zoomin)

|

||||

@ -1225,12 +1118,9 @@ class RAGFlowPdfParser:

|

||||

def extract_positions(txt):

|

||||

poss = []

|

||||

for tag in re.findall(r"@@[0-9-]+\t[0-9.\t]+##", txt):

|

||||

pn, left, right, top, bottom = tag.strip(

|

||||

"#").strip("@").split("\t")

|

||||

left, right, top, bottom = float(left), float(

|

||||

right), float(top), float(bottom)

|

||||

poss.append(([int(p) - 1 for p in pn.split("-")],

|

||||

left, right, top, bottom))

|

||||

pn, left, right, top, bottom = tag.strip("#").strip("@").split("\t")

|

||||

left, right, top, bottom = float(left), float(right), float(top), float(bottom)

|

||||

poss.append(([int(p) - 1 for p in pn.split("-")], left, right, top, bottom))

|

||||

return poss

|

||||

|

||||

def crop(self, text, ZM=3, need_position=False):

|

||||

@ -1241,15 +1131,12 @@ class RAGFlowPdfParser:

|

||||

return None, None

|

||||

return

|

||||

|

||||

max_width = max(

|

||||

np.max([right - left for (_, left, right, _, _) in poss]), 6)

|

||||

max_width = max(np.max([right - left for (_, left, right, _, _) in poss]), 6)

|

||||

GAP = 6

|

||||

pos = poss[0]

|

||||

poss.insert(0, ([pos[0][0]], pos[1], pos[2], max(

|

||||

0, pos[3] - 120), max(pos[3] - GAP, 0)))

|

||||

poss.insert(0, ([pos[0][0]], pos[1], pos[2], max(0, pos[3] - 120), max(pos[3] - GAP, 0)))

|

||||

pos = poss[-1]

|

||||

poss.append(([pos[0][-1]], pos[1], pos[2], min(self.page_images[pos[0][-1]].size[1] / ZM, pos[4] + GAP),

|

||||

min(self.page_images[pos[0][-1]].size[1] / ZM, pos[4] + 120)))

|

||||

poss.append(([pos[0][-1]], pos[1], pos[2], min(self.page_images[pos[0][-1]].size[1] / ZM, pos[4] + GAP), min(self.page_images[pos[0][-1]].size[1] / ZM, pos[4] + 120)))

|

||||

|

||||

positions = []

|

||||

for ii, (pns, left, right, top, bottom) in enumerate(poss):

|

||||

@ -1257,28 +1144,14 @@ class RAGFlowPdfParser:

|

||||

bottom *= ZM

|

||||

for pn in pns[1:]:

|

||||

bottom += self.page_images[pn - 1].size[1]

|

||||

imgs.append(

|

||||

self.page_images[pns[0]].crop((left * ZM, top * ZM,

|

||||

right *

|

||||

ZM, min(

|

||||

bottom, self.page_images[pns[0]].size[1])

|

||||

))

|

||||

)

|

||||

imgs.append(self.page_images[pns[0]].crop((left * ZM, top * ZM, right * ZM, min(bottom, self.page_images[pns[0]].size[1]))))

|

||||

if 0 < ii < len(poss) - 1:

|

||||

positions.append((pns[0] + self.page_from, left, right, top, min(

|

||||

bottom, self.page_images[pns[0]].size[1]) / ZM))

|

||||

positions.append((pns[0] + self.page_from, left, right, top, min(bottom, self.page_images[pns[0]].size[1]) / ZM))

|

||||

bottom -= self.page_images[pns[0]].size[1]

|

||||

for pn in pns[1:]:

|

||||

imgs.append(

|

||||

self.page_images[pn].crop((left * ZM, 0,

|

||||

right * ZM,

|

||||

min(bottom,

|

||||

self.page_images[pn].size[1])

|

||||

))

|

||||

)

|

||||

imgs.append(self.page_images[pn].crop((left * ZM, 0, right * ZM, min(bottom, self.page_images[pn].size[1]))))

|

||||

if 0 < ii < len(poss) - 1:

|

||||

positions.append((pn + self.page_from, left, right, 0, min(

|

||||

bottom, self.page_images[pn].size[1]) / ZM))

|

||||

positions.append((pn + self.page_from, left, right, 0, min(bottom, self.page_images[pn].size[1]) / ZM))

|

||||

bottom -= self.page_images[pn].size[1]

|

||||

|

||||

if not imgs:

|

||||

@ -1290,14 +1163,12 @@ class RAGFlowPdfParser:

|

||||

height += img.size[1] + GAP

|

||||

height = int(height)

|

||||

width = int(np.max([i.size[0] for i in imgs]))

|

||||

pic = Image.new("RGB",

|

||||

(width, height),

|

||||

(245, 245, 245))

|

||||

pic = Image.new("RGB", (width, height), (245, 245, 245))

|

||||

height = 0

|

||||

for ii, img in enumerate(imgs):

|

||||

if ii == 0 or ii + 1 == len(imgs):

|

||||

img = img.convert('RGBA')

|

||||

overlay = Image.new('RGBA', img.size, (0, 0, 0, 0))

|

||||

img = img.convert("RGBA")

|

||||

overlay = Image.new("RGBA", img.size, (0, 0, 0, 0))

|

||||

overlay.putalpha(128)

|

||||

img = Image.alpha_composite(img, overlay).convert("RGB")

|

||||

pic.paste(img, (0, int(height)))

|

||||

@ -1312,14 +1183,12 @@ class RAGFlowPdfParser:

|

||||

pn = bx["page_number"]

|

||||

top = bx["top"] - self.page_cum_height[pn - 1]

|

||||

bott = bx["bottom"] - self.page_cum_height[pn - 1]

|

||||

poss.append((pn, bx["x0"], bx["x1"], top, min(

|

||||

bott, self.page_images[pn - 1].size[1] / ZM)))

|

||||

poss.append((pn, bx["x0"], bx["x1"], top, min(bott, self.page_images[pn - 1].size[1] / ZM)))

|

||||

while bott * ZM > self.page_images[pn - 1].size[1]:

|

||||

bott -= self.page_images[pn - 1].size[1] / ZM

|

||||

top = 0

|

||||

pn += 1

|

||||

poss.append((pn, bx["x0"], bx["x1"], top, min(

|

||||

bott, self.page_images[pn - 1].size[1] / ZM)))

|

||||

poss.append((pn, bx["x0"], bx["x1"], top, min(bott, self.page_images[pn - 1].size[1] / ZM)))

|

||||

return poss

|

||||

|

||||

|

||||

@ -1328,9 +1197,7 @@ class PlainParser:

|

||||

self.outlines = []

|

||||

lines = []

|

||||

try:

|

||||

self.pdf = pdf2_read(

|

||||

filename if isinstance(

|

||||

filename, str) else BytesIO(filename))

|

||||

self.pdf = pdf2_read(filename if isinstance(filename, str) else BytesIO(filename))

|

||||

for page in self.pdf.pages[from_page:to_page]:

|

||||

lines.extend([t for t in page.extract_text().split("\n")])

|

||||

|

||||

@ -1367,10 +1234,8 @@ class VisionParser(RAGFlowPdfParser):

|

||||

def __images__(self, fnm, zoomin=3, page_from=0, page_to=299, callback=None):

|

||||

try:

|

||||

with sys.modules[LOCK_KEY_pdfplumber]:

|

||||

self.pdf = pdfplumber.open(fnm) if isinstance(

|

||||

fnm, str) else pdfplumber.open(BytesIO(fnm))

|

||||

self.page_images = [p.to_image(resolution=72 * zoomin).annotated for i, p in

|

||||

enumerate(self.pdf.pages[page_from:page_to])]

|

||||

self.pdf = pdfplumber.open(fnm) if isinstance(fnm, str) else pdfplumber.open(BytesIO(fnm))

|

||||