mirror of

https://github.com/infiniflow/ragflow.git

synced 2026-01-04 03:25:30 +08:00

Compare commits

32 Commits

v0.20.4

...

2e00d8d3d4

| Author | SHA1 | Date | |

|---|---|---|---|

| 2e00d8d3d4 | |||

| 0b456a18a3 | |||

| dd8e660f0a | |||

| 98ee3dee74 | |||

| d4b0cd8599 | |||

| 3398dac906 | |||

| 7eb25e0de6 | |||

| bed77ee28f | |||

| 56cd576876 | |||

| 4fbad2828c | |||

| e997bf6507 | |||

| 209b731541 | |||

| c47a38773c | |||

| fcd18d7d87 | |||

| fe9adbf0a5 | |||

| c7f7adf029 | |||

| c27172b3bc | |||

| a246949b77 | |||

| 0a954d720a | |||

| f89e55ec42 | |||

| 5fe8cf6018 | |||

| 4720849ac0 | |||

| d7721833e7 | |||

| 7332f1d0f3 | |||

| 2d101561f8 | |||

| 59590e9aae | |||

| bb9b9b8357 | |||

| a4b368e53f | |||

| c461261f0b | |||

| a1633e0a2f | |||

| 369add35b8 | |||

| 5abd0bbac1 |

@ -307,7 +307,7 @@ docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly

|

||||

|

||||

## 🔨 Launch service from source for development

|

||||

|

||||

1. Install uv, or skip this step if it is already installed:

|

||||

1. Install `uv` and `pre-commit`, or skip this step if they are already installed:

|

||||

|

||||

```bash

|

||||

pipx install uv pre-commit

|

||||

|

||||

@ -271,7 +271,7 @@ docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly

|

||||

|

||||

## 🔨 Menjalankan Aplikasi dari untuk Pengembangan

|

||||

|

||||

1. Instal uv, atau lewati langkah ini jika sudah terinstal:

|

||||

1. Instal `uv` dan `pre-commit`, atau lewati langkah ini jika sudah terinstal:

|

||||

|

||||

```bash

|

||||

pipx install uv pre-commit

|

||||

|

||||

@ -266,7 +266,7 @@ docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly

|

||||

|

||||

## 🔨 ソースコードからサービスを起動する方法

|

||||

|

||||

1. uv をインストールする。すでにインストールされている場合は、このステップをスキップしてください:

|

||||

1. `uv` と `pre-commit` をインストールする。すでにインストールされている場合は、このステップをスキップしてください:

|

||||

|

||||

```bash

|

||||

pipx install uv pre-commit

|

||||

|

||||

@ -265,7 +265,7 @@ docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly

|

||||

|

||||

## 🔨 소스 코드로 서비스를 시작합니다.

|

||||

|

||||

1. uv를 설치하거나 이미 설치된 경우 이 단계를 건너뜁니다:

|

||||

1. `uv` 와 `pre-commit` 을 설치하거나, 이미 설치된 경우 이 단계를 건너뜁니다:

|

||||

|

||||

```bash

|

||||

pipx install uv pre-commit

|

||||

|

||||

@ -289,7 +289,7 @@ docker build --platform linux/amd64 -f Dockerfile -t infiniflow/ragflow:nightly

|

||||

|

||||

## 🔨 Lançar o serviço a partir do código-fonte para desenvolvimento

|

||||

|

||||

1. Instale o `uv`, ou pule esta etapa se ele já estiver instalado:

|

||||

1. Instale o `uv` e o `pre-commit`, ou pule esta etapa se eles já estiverem instalados:

|

||||

|

||||

```bash

|

||||

pipx install uv pre-commit

|

||||

|

||||

@ -301,7 +301,7 @@ docker build --platform linux/amd64 --build-arg NEED_MIRROR=1 -f Dockerfile -t i

|

||||

|

||||

## 🔨 以原始碼啟動服務

|

||||

|

||||

1. 安裝 uv。如已安裝,可跳過此步驟:

|

||||

1. 安裝 `uv` 和 `pre-commit`。如已安裝,可跳過此步驟:

|

||||

|

||||

```bash

|

||||

pipx install uv pre-commit

|

||||

|

||||

@ -301,7 +301,7 @@ docker build --platform linux/amd64 --build-arg NEED_MIRROR=1 -f Dockerfile -t i

|

||||

|

||||

## 🔨 以源代码启动服务

|

||||

|

||||

1. 安装 uv。如已经安装,可跳过本步骤:

|

||||

1. 安装 `uv` 和 `pre-commit`。如已经安装,可跳过本步骤:

|

||||

|

||||

```bash

|

||||

pipx install uv pre-commit

|

||||

|

||||

241

agent/canvas.py

241

agent/canvas.py

@ -29,83 +29,52 @@ from api.utils import get_uuid, hash_str2int

|

||||

from rag.prompts.prompts import chunks_format

|

||||

from rag.utils.redis_conn import REDIS_CONN

|

||||

|

||||

|

||||

class Canvas:

|

||||

class Graph:

|

||||

"""

|

||||

dsl = {

|

||||

"components": {

|

||||

"begin": {

|

||||

"obj":{

|

||||

"component_name": "Begin",

|

||||

"params": {},

|

||||

},

|

||||

"downstream": ["answer_0"],

|

||||

"upstream": [],

|

||||

},

|

||||

"retrieval_0": {

|

||||

"obj": {

|

||||

"component_name": "Retrieval",

|

||||

"params": {}

|

||||

},

|

||||

"downstream": ["generate_0"],

|

||||

"upstream": ["answer_0"],

|

||||

},

|

||||

"generate_0": {

|

||||

"obj": {

|

||||

"component_name": "Generate",

|

||||

"params": {}

|

||||

},

|

||||

"downstream": ["answer_0"],

|

||||

"upstream": ["retrieval_0"],

|

||||

}

|

||||

},

|

||||

"history": [],

|

||||

"path": ["begin"],

|

||||

"retrieval": {"chunks": [], "doc_aggs": []},

|

||||

"globals": {

|

||||

"sys.query": "",

|

||||

"sys.user_id": tenant_id,

|

||||

"sys.conversation_turns": 0,

|

||||

"sys.files": []

|

||||

}

|

||||

}

|

||||

"""

|

||||

|

||||

def __init__(self, dsl: str, tenant_id=None, task_id=None):

|

||||

self.path = []

|

||||

self.history = []

|

||||

self.components = {}

|

||||

self.error = ""

|

||||

self.globals = {

|

||||

"sys.query": "",

|

||||

"sys.user_id": tenant_id,

|

||||

"sys.conversation_turns": 0,

|

||||

"sys.files": []

|

||||

}

|

||||

self.dsl = json.loads(dsl) if dsl else {

|

||||

dsl = {

|

||||

"components": {

|

||||

"begin": {

|

||||

"obj": {

|

||||

"obj":{

|

||||

"component_name": "Begin",

|

||||

"params": {

|

||||

"prologue": "Hi there!"

|

||||

}

|

||||

"params": {},

|

||||

},

|

||||

"downstream": [],

|

||||

"downstream": ["answer_0"],

|

||||

"upstream": [],

|

||||

"parent_id": ""

|

||||

},

|

||||

"retrieval_0": {

|

||||

"obj": {

|

||||

"component_name": "Retrieval",

|

||||

"params": {}

|

||||

},

|

||||

"downstream": ["generate_0"],

|

||||

"upstream": ["answer_0"],

|

||||

},

|

||||

"generate_0": {

|

||||

"obj": {

|

||||

"component_name": "Generate",

|

||||

"params": {}

|

||||

},

|

||||

"downstream": ["answer_0"],

|

||||

"upstream": ["retrieval_0"],

|

||||

}

|

||||

},

|

||||

"history": [],

|

||||

"path": [],

|

||||

"retrieval": [],

|

||||

"path": ["begin"],

|

||||

"retrieval": {"chunks": [], "doc_aggs": []},

|

||||

"globals": {

|

||||

"sys.query": "",

|

||||

"sys.user_id": "",

|

||||

"sys.user_id": tenant_id,

|

||||

"sys.conversation_turns": 0,

|

||||

"sys.files": []

|

||||

}

|

||||

}

|

||||

"""

|

||||

|

||||

def __init__(self, dsl: str, tenant_id=None, task_id=None):

|

||||

self.path = []

|

||||

self.components = {}

|

||||

self.error = ""

|

||||

self.dsl = json.loads(dsl)

|

||||

self._tenant_id = tenant_id

|

||||

self.task_id = task_id if task_id else get_uuid()

|

||||

self.load()

|

||||

@ -116,8 +85,6 @@ class Canvas:

|

||||

for k, cpn in self.components.items():

|

||||

cpn_nms.add(cpn["obj"]["component_name"])

|

||||

|

||||

assert "Begin" in cpn_nms, "There have to be an 'Begin' component."

|

||||

|

||||

for k, cpn in self.components.items():

|

||||

cpn_nms.add(cpn["obj"]["component_name"])

|

||||

param = component_class(cpn["obj"]["component_name"] + "Param")()

|

||||

@ -130,27 +97,10 @@ class Canvas:

|

||||

cpn["obj"] = component_class(cpn["obj"]["component_name"])(self, k, param)

|

||||

|

||||

self.path = self.dsl["path"]

|

||||

self.history = self.dsl["history"]

|

||||

if "globals" in self.dsl:

|

||||

self.globals = self.dsl["globals"]

|

||||

else:

|

||||

self.globals = {

|

||||

"sys.query": "",

|

||||

"sys.user_id": "",

|

||||

"sys.conversation_turns": 0,

|

||||

"sys.files": []

|

||||

}

|

||||

|

||||

self.retrieval = self.dsl["retrieval"]

|

||||

self.memory = self.dsl.get("memory", [])

|

||||

|

||||

def __str__(self):

|

||||

self.dsl["path"] = self.path

|

||||

self.dsl["history"] = self.history

|

||||

self.dsl["globals"] = self.globals

|

||||

self.dsl["task_id"] = self.task_id

|

||||

self.dsl["retrieval"] = self.retrieval

|

||||

self.dsl["memory"] = self.memory

|

||||

dsl = {

|

||||

"components": {}

|

||||

}

|

||||

@ -169,14 +119,79 @@ class Canvas:

|

||||

dsl["components"][k][c] = deepcopy(cpn[c])

|

||||

return json.dumps(dsl, ensure_ascii=False)

|

||||

|

||||

def reset(self, mem=False):

|

||||

def reset(self):

|

||||

self.path = []

|

||||

for k, cpn in self.components.items():

|

||||

self.components[k]["obj"].reset()

|

||||

try:

|

||||

REDIS_CONN.delete(f"{self.task_id}-logs")

|

||||

except Exception as e:

|

||||

logging.exception(e)

|

||||

|

||||

def get_component_name(self, cid):

|

||||

for n in self.dsl.get("graph", {}).get("nodes", []):

|

||||

if cid == n["id"]:

|

||||

return n["data"]["name"]

|

||||

return ""

|

||||

|

||||

def run(self, **kwargs):

|

||||

raise NotImplementedError()

|

||||

|

||||

def get_component(self, cpn_id) -> Union[None, dict[str, Any]]:

|

||||

return self.components.get(cpn_id)

|

||||

|

||||

def get_component_obj(self, cpn_id) -> ComponentBase:

|

||||

return self.components.get(cpn_id)["obj"]

|

||||

|

||||

def get_component_type(self, cpn_id) -> str:

|

||||

return self.components.get(cpn_id)["obj"].component_name

|

||||

|

||||

def get_component_input_form(self, cpn_id) -> dict:

|

||||

return self.components.get(cpn_id)["obj"].get_input_form()

|

||||

|

||||

def get_tenant_id(self):

|

||||

return self._tenant_id

|

||||

|

||||

|

||||

class Canvas(Graph):

|

||||

|

||||

def __init__(self, dsl: str, tenant_id=None, task_id=None):

|

||||

self.globals = {

|

||||

"sys.query": "",

|

||||

"sys.user_id": tenant_id,

|

||||

"sys.conversation_turns": 0,

|

||||

"sys.files": []

|

||||

}

|

||||

super().__init__(dsl, tenant_id, task_id)

|

||||

|

||||

def load(self):

|

||||

super().load()

|

||||

self.history = self.dsl["history"]

|

||||

if "globals" in self.dsl:

|

||||

self.globals = self.dsl["globals"]

|

||||

else:

|

||||

self.globals = {

|

||||

"sys.query": "",

|

||||

"sys.user_id": "",

|

||||

"sys.conversation_turns": 0,

|

||||

"sys.files": []

|

||||

}

|

||||

|

||||

self.retrieval = self.dsl["retrieval"]

|

||||

self.memory = self.dsl.get("memory", [])

|

||||

|

||||

def __str__(self):

|

||||

self.dsl["history"] = self.history

|

||||

self.dsl["retrieval"] = self.retrieval

|

||||

self.dsl["memory"] = self.memory

|

||||

return super().__str__()

|

||||

|

||||

def reset(self, mem=False):

|

||||

super().reset()

|

||||

if not mem:

|

||||

self.history = []

|

||||

self.retrieval = []

|

||||

self.memory = []

|

||||

for k, cpn in self.components.items():

|

||||

self.components[k]["obj"].reset()

|

||||

|

||||

for k in self.globals.keys():

|

||||

if isinstance(self.globals[k], str):

|

||||

@ -192,22 +207,13 @@ class Canvas:

|

||||

else:

|

||||

self.globals[k] = None

|

||||

|

||||

try:

|

||||

REDIS_CONN.delete(f"{self.task_id}-logs")

|

||||

except Exception as e:

|

||||

logging.exception(e)

|

||||

|

||||

def get_component_name(self, cid):

|

||||

for n in self.dsl.get("graph", {}).get("nodes", []):

|

||||

if cid == n["id"]:

|

||||

return n["data"]["name"]

|

||||

return ""

|

||||

|

||||

def run(self, **kwargs):

|

||||

st = time.perf_counter()

|

||||

self.message_id = get_uuid()

|

||||

created_at = int(time.time())

|

||||

self.add_user_input(kwargs.get("query"))

|

||||

for k, cpn in self.components.items():

|

||||

self.components[k]["obj"].reset(True)

|

||||

|

||||

for k in kwargs.keys():

|

||||

if k in ["query", "user_id", "files"] and kwargs[k]:

|

||||

@ -386,18 +392,6 @@ class Canvas:

|

||||

})

|

||||

self.history.append(("assistant", self.get_component_obj(self.path[-1]).output()))

|

||||

|

||||

def get_component(self, cpn_id) -> Union[None, dict[str, Any]]:

|

||||

return self.components.get(cpn_id)

|

||||

|

||||

def get_component_obj(self, cpn_id) -> ComponentBase:

|

||||

return self.components.get(cpn_id)["obj"]

|

||||

|

||||

def get_component_type(self, cpn_id) -> str:

|

||||

return self.components.get(cpn_id)["obj"].component_name

|

||||

|

||||

def get_component_input_form(self, cpn_id) -> dict:

|

||||

return self.components.get(cpn_id)["obj"].get_input_form()

|

||||

|

||||

def is_reff(self, exp: str) -> bool:

|

||||

exp = exp.strip("{").strip("}")

|

||||

if exp.find("@") < 0:

|

||||

@ -419,9 +413,6 @@ class Canvas:

|

||||

raise Exception(f"Can't find variable: '{cpn_id}@{var_nm}'")

|

||||

return cpn["obj"].output(var_nm)

|

||||

|

||||

def get_tenant_id(self):

|

||||

return self._tenant_id

|

||||

|

||||

def get_history(self, window_size):

|

||||

convs = []

|

||||

if window_size <= 0:

|

||||

@ -436,36 +427,6 @@ class Canvas:

|

||||

def add_user_input(self, question):

|

||||

self.history.append(("user", question))

|

||||

|

||||

def _find_loop(self, max_loops=6):

|

||||

path = self.path[-1][::-1]

|

||||

if len(path) < 2:

|

||||

return False

|

||||

|

||||

for i in range(len(path)):

|

||||

if path[i].lower().find("answer") == 0 or path[i].lower().find("iterationitem") == 0:

|

||||

path = path[:i]

|

||||

break

|

||||

|

||||

if len(path) < 2:

|

||||

return False

|

||||

|

||||

for loc in range(2, len(path) // 2):

|

||||

pat = ",".join(path[0:loc])

|

||||

path_str = ",".join(path)

|

||||

if len(pat) >= len(path_str):

|

||||

return False

|

||||

loop = max_loops

|

||||

while path_str.find(pat) == 0 and loop >= 0:

|

||||

loop -= 1

|

||||

if len(pat)+1 >= len(path_str):

|

||||

return False

|

||||

path_str = path_str[len(pat)+1:]

|

||||

if loop < 0:

|

||||

pat = " => ".join([p.split(":")[0] for p in path[0:loc]])

|

||||

return pat + " => " + pat

|

||||

|

||||

return False

|

||||

|

||||

def get_prologue(self):

|

||||

return self.components["begin"]["obj"]._param.prologue

|

||||

|

||||

|

||||

@ -50,8 +50,9 @@ del _package_path, _import_submodules, _extract_classes_from_module

|

||||

|

||||

|

||||

def component_class(class_name):

|

||||

m = importlib.import_module("agent.component")

|

||||

try:

|

||||

return getattr(m, class_name)

|

||||

except Exception:

|

||||

return getattr(importlib.import_module("agent.tools"), class_name)

|

||||

for mdl in ["agent.component", "agent.tools", "rag.flow"]:

|

||||

try:

|

||||

return getattr(importlib.import_module(mdl), class_name)

|

||||

except Exception:

|

||||

pass

|

||||

assert False, f"Can't import {class_name}"

|

||||

|

||||

@ -16,7 +16,7 @@

|

||||

|

||||

import re

|

||||

import time

|

||||

from abc import ABC, abstractmethod

|

||||

from abc import ABC

|

||||

import builtins

|

||||

import json

|

||||

import os

|

||||

@ -410,8 +410,8 @@ class ComponentBase(ABC):

|

||||

)

|

||||

|

||||

def __init__(self, canvas, id, param: ComponentParamBase):

|

||||

from agent.canvas import Canvas # Local import to avoid cyclic dependency

|

||||

assert isinstance(canvas, Canvas), "canvas must be an instance of Canvas"

|

||||

from agent.canvas import Graph # Local import to avoid cyclic dependency

|

||||

assert isinstance(canvas, Graph), "canvas must be an instance of Canvas"

|

||||

self._canvas = canvas

|

||||

self._id = id

|

||||

self._param = param

|

||||

@ -448,9 +448,11 @@ class ComponentBase(ABC):

|

||||

def error(self):

|

||||

return self._param.outputs.get("_ERROR", {}).get("value")

|

||||

|

||||

def reset(self):

|

||||

def reset(self, only_output=False):

|

||||

for k in self._param.outputs.keys():

|

||||

self._param.outputs[k]["value"] = None

|

||||

if only_output:

|

||||

return

|

||||

for k in self._param.inputs.keys():

|

||||

self._param.inputs[k]["value"] = None

|

||||

self._param.debug_inputs = {}

|

||||

@ -526,6 +528,10 @@ class ComponentBase(ABC):

|

||||

cpn_nms = self._canvas.get_component(self._id)['upstream']

|

||||

return cpn_nms

|

||||

|

||||

def get_downstream(self) -> List[str]:

|

||||

cpn_nms = self._canvas.get_component(self._id)['downstream']

|

||||

return cpn_nms

|

||||

|

||||

@staticmethod

|

||||

def string_format(content: str, kv: dict[str, str]) -> str:

|

||||

for n, v in kv.items():

|

||||

@ -554,6 +560,5 @@ class ComponentBase(ABC):

|

||||

def set_exception_default_value(self):

|

||||

self.set_output("result", self.get_exception_default_value())

|

||||

|

||||

@abstractmethod

|

||||

def thoughts(self) -> str:

|

||||

...

|

||||

raise NotImplementedError()

|

||||

|

||||

@ -1,8 +1,12 @@

|

||||

{

|

||||

"id": 19,

|

||||

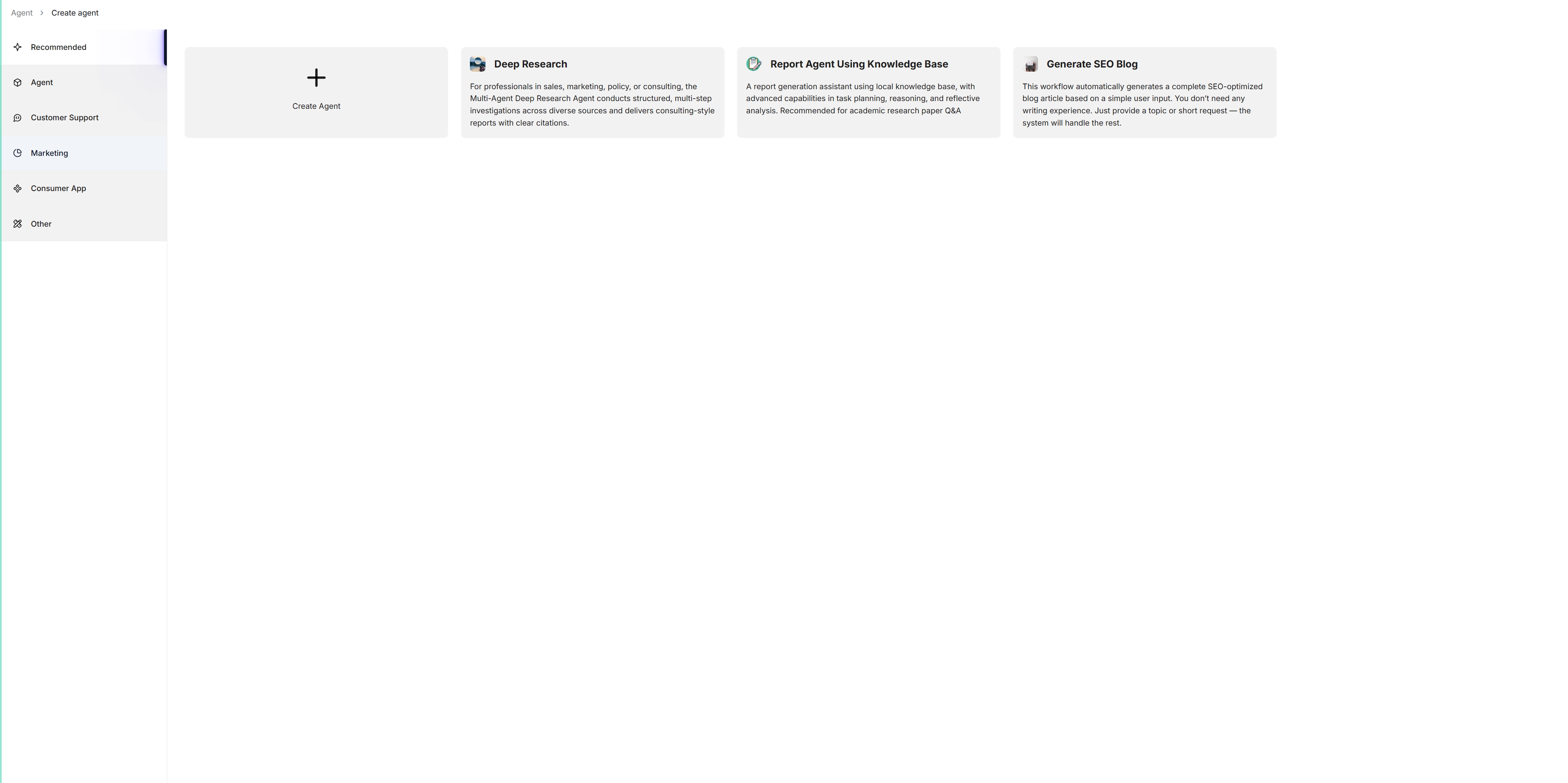

"title": "Choose Your Knowledge Base Agent",

|

||||

"description": "Select your desired knowledge base from the dropdown menu. The Agent will only retrieve from the selected knowledge base and use this content to generate responses.",

|

||||

"canvas_type": "Agent",

|

||||

"title": {

|

||||

"en": "Choose Your Knowledge Base Agent",

|

||||

"zh": "选择知识库智能体"},

|

||||

"description": {

|

||||

"en": "Select your desired knowledge base from the dropdown menu. The Agent will only retrieve from the selected knowledge base and use this content to generate responses.",

|

||||

"zh": "从下拉菜单中选择知识库,智能体将仅根据所选知识库内容生成回答。"},

|

||||

"canvas_type": "Agent",

|

||||

"dsl": {

|

||||

"components": {

|

||||

"Agent:BraveParksJoke": {

|

||||

|

||||

@ -1,8 +1,12 @@

|

||||

{

|

||||

"id": 18,

|

||||

"title": "Choose Your Knowledge Base Workflow",

|

||||

"description": "Select your desired knowledge base from the dropdown menu. The retrieval assistant will only use data from your selected knowledge base to generate responses.",

|

||||

"canvas_type": "Other",

|

||||

"title": {

|

||||

"en": "Choose Your Knowledge Base Workflow",

|

||||

"zh": "选择知识库工作流"},

|

||||

"description": {

|

||||

"en": "Select your desired knowledge base from the dropdown menu. The retrieval assistant will only use data from your selected knowledge base to generate responses.",

|

||||

"zh": "从下拉菜单中选择知识库,工作流将仅根据所选知识库内容生成回答。"},

|

||||

"canvas_type": "Other",

|

||||

"dsl": {

|

||||

"components": {

|

||||

"Agent:ProudDingosShout": {

|

||||

|

||||

@ -1,9 +1,13 @@

|

||||

|

||||

{

|

||||

"id": 11,

|

||||

"title": "Customer Review Analysis",

|

||||

"description": "Automatically classify customer reviews using LLM (Large Language Model) and route them via email to the relevant departments.",

|

||||

"canvas_type": "Customer Support",

|

||||

"title": {

|

||||

"en": "Customer Review Analysis",

|

||||

"zh": "客户评价分析"},

|

||||

"description": {

|

||||

"en": "Automatically classify customer reviews using LLM (Large Language Model) and route them via email to the relevant departments.",

|

||||

"zh": "大模型将自动分类客户评价,并通过电子邮件将结果发送到相关部门。"},

|

||||

"canvas_type": "Customer Support",

|

||||

"dsl": {

|

||||

"components": {

|

||||

"Categorize:FourTeamsFold": {

|

||||

|

||||

File diff suppressed because one or more lines are too long

@ -1,8 +1,12 @@

|

||||

|

||||

{

|

||||

"id": 10,

|

||||

"title": "Customer Support",

|

||||

"description": "This is an intelligent customer service processing system workflow based on user intent classification. It uses LLM to identify user demand types and transfers them to the corresponding professional agent for processing.",

|

||||

"title": {

|

||||

"en":"Customer Support",

|

||||

"zh": "客户支持"},

|

||||

"description": {

|

||||

"en": "This is an intelligent customer service processing system workflow based on user intent classification. It uses LLM to identify user demand types and transfers them to the corresponding professional agent for processing.",

|

||||

"zh": "工作流系统,用于智能客服场景。基于用户意图分类。使用大模型识别用户需求类型,并将需求转移给相应的智能体进行处理。"},

|

||||

"canvas_type": "Customer Support",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,8 +1,12 @@

|

||||

|

||||

{

|

||||

"id": 15,

|

||||

"title": "CV Analysis and Candidate Evaluation",

|

||||

"description": "This is a workflow that helps companies evaluate resumes, HR uploads a job description first, then submits multiple resumes via the chat window for evaluation.",

|

||||

"title": {

|

||||

"en": "CV Analysis and Candidate Evaluation",

|

||||

"zh": "简历分析和候选人评估"},

|

||||

"description": {

|

||||

"en": "This is a workflow that helps companies evaluate resumes, HR uploads a job description first, then submits multiple resumes via the chat window for evaluation.",

|

||||

"zh": "帮助公司评估简历的工作流。HR首先上传职位描述,通过聊天窗口提交多份简历进行评估。"},

|

||||

"canvas_type": "Other",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

File diff suppressed because one or more lines are too long

@ -1,8 +1,12 @@

|

||||

|

||||

{

|

||||

"id": 1,

|

||||

"title": "Deep Research",

|

||||

"description": "For professionals in sales, marketing, policy, or consulting, the Multi-Agent Deep Research Agent conducts structured, multi-step investigations across diverse sources and delivers consulting-style reports with clear citations.",

|

||||

"title": {

|

||||

"en": "Deep Research",

|

||||

"zh": "深度研究"},

|

||||

"description": {

|

||||

"en": "For professionals in sales, marketing, policy, or consulting, the Multi-Agent Deep Research Agent conducts structured, multi-step investigations across diverse sources and delivers consulting-style reports with clear citations.",

|

||||

"zh": "专为销售、市场、政策或咨询领域的专业人士设计,多智能体的深度研究会结合多源信息进行结构化、多步骤地回答问题,并附带有清晰的引用。"},

|

||||

"canvas_type": "Recommended",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,8 +1,12 @@

|

||||

|

||||

{

|

||||

"id": 6,

|

||||

"title": "Deep Research",

|

||||

"description": "For professionals in sales, marketing, policy, or consulting, the Multi-Agent Deep Research Agent conducts structured, multi-step investigations across diverse sources and delivers consulting-style reports with clear citations.",

|

||||

"title": {

|

||||

"en": "Deep Research",

|

||||

"zh": "深度研究"},

|

||||

"description": {

|

||||

"en": "For professionals in sales, marketing, policy, or consulting, the Multi-Agent Deep Research Agent conducts structured, multi-step investigations across diverse sources and delivers consulting-style reports with clear citations.",

|

||||

"zh": "专为销售、市场、政策或咨询领域的专业人士设计,多智能体的深度研究会结合多源信息进行结构化、多步骤地回答问题,并附带有清晰的引用。"},

|

||||

"canvas_type": "Agent",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,7 +1,13 @@

|

||||

{

|

||||

"id": 22,

|

||||

"title": "Ecommerce Customer Service Workflow",

|

||||

"description": "This template helps e-commerce platforms address complex customer needs, such as comparing product features, providing usage support, and coordinating home installation services.",

|

||||

"title": {

|

||||

"en": "Ecommerce Customer Service Workflow",

|

||||

"zh": "电子商务客户服务工作流程"

|

||||

},

|

||||

"description": {

|

||||

"en": "This template helps e-commerce platforms address complex customer needs, such as comparing product features, providing usage support, and coordinating home installation services.",

|

||||

"zh": "该模板可帮助电子商务平台解决复杂的客户需求,例如比较产品功能、提供使用支持和协调家庭安装服务。"

|

||||

},

|

||||

"canvas_type": "Customer Support",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,7 +1,11 @@

|

||||

{

|

||||

"id": 8,

|

||||

"title": "Generate SEO Blog",

|

||||

"description": "This is a multi-agent version of the SEO blog generation workflow. It simulates a small team of AI “writers”, where each agent plays a specialized role — just like a real editorial team.",

|

||||

"title": {

|

||||

"en": "Generate SEO Blog",

|

||||

"zh": "生成SEO博客"},

|

||||

"description": {

|

||||

"en": "This is a multi-agent version of the SEO blog generation workflow. It simulates a small team of AI “writers”, where each agent plays a specialized role — just like a real editorial team.",

|

||||

"zh": "多智能体架构可根据简单的用户输入自动生成完整的SEO博客文章。模拟小型“作家”团队,其中每个智能体扮演一个专业角色——就像真正的编辑团队。"},

|

||||

"canvas_type": "Agent",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,7 +1,11 @@

|

||||

{

|

||||

"id": 13,

|

||||

"title": "ImageLingo",

|

||||

"description": "ImageLingo lets you snap any photo containing text—menus, signs, or documents—and instantly recognize and translate it into your language of choice using advanced AI-powered translation technology.",

|

||||

"title": {

|

||||

"en": "ImageLingo",

|

||||

"zh": "图片解析"},

|

||||

"description": {

|

||||

"en": "ImageLingo lets you snap any photo containing text—menus, signs, or documents—and instantly recognize and translate it into your language of choice using advanced AI-powered translation technology.",

|

||||

"zh": "多模态大模型允许您拍摄任何包含文本的照片——菜单、标志或文档——立即识别并转换成您选择的语言。"},

|

||||

"canvas_type": "Consumer App",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,7 +1,11 @@

|

||||

{

|

||||

"id": 20,

|

||||

"title": "Report Agent Using Knowledge Base",

|

||||

"description": "A report generation assistant using local knowledge base, with advanced capabilities in task planning, reasoning, and reflective analysis. Recommended for academic research paper Q&A",

|

||||

"title": {

|

||||

"en": "Report Agent Using Knowledge Base",

|

||||

"zh": "知识库检索智能体"},

|

||||

"description": {

|

||||

"en": "A report generation assistant using local knowledge base, with advanced capabilities in task planning, reasoning, and reflective analysis. Recommended for academic research paper Q&A",

|

||||

"zh": "一个使用本地知识库的报告生成助手,具备高级能力,包括任务规划、推理和反思性分析。推荐用于学术研究论文问答。"},

|

||||

"canvas_type": "Agent",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

331

agent/templates/knowledge_base_report_r.json

Normal file

331

agent/templates/knowledge_base_report_r.json

Normal file

@ -0,0 +1,331 @@

|

||||

{

|

||||

"id": 21,

|

||||

"title": {

|

||||

"en": "Report Agent Using Knowledge Base",

|

||||

"zh": "知识库检索智能体"},

|

||||

"description": {

|

||||

"en": "A report generation assistant using local knowledge base, with advanced capabilities in task planning, reasoning, and reflective analysis. Recommended for academic research paper Q&A",

|

||||

"zh": "一个使用本地知识库的报告生成助手,具备高级能力,包括任务规划、推理和反思性分析。推荐用于学术研究论文问答。"},

|

||||

"canvas_type": "Recommended",

|

||||

"dsl": {

|

||||

"components": {

|

||||

"Agent:NewPumasLick": {

|

||||

"downstream": [

|

||||

"Message:OrangeYearsShine"

|

||||

],

|

||||

"obj": {

|

||||

"component_name": "Agent",

|

||||

"params": {

|

||||

"delay_after_error": 1,

|

||||

"description": "",

|

||||

"exception_comment": "",

|

||||

"exception_default_value": "",

|

||||

"exception_goto": [],

|

||||

"exception_method": null,

|

||||

"frequencyPenaltyEnabled": false,

|

||||

"frequency_penalty": 0.5,

|

||||

"llm_id": "qwen3-235b-a22b-instruct-2507@Tongyi-Qianwen",

|

||||

"maxTokensEnabled": true,

|

||||

"max_retries": 3,

|

||||

"max_rounds": 3,

|

||||

"max_tokens": 128000,

|

||||

"mcp": [],

|

||||

"message_history_window_size": 12,

|

||||

"outputs": {

|

||||

"content": {

|

||||

"type": "string",

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"parameter": "Precise",

|

||||

"presencePenaltyEnabled": false,

|

||||

"presence_penalty": 0.5,

|

||||

"prompts": [

|

||||

{

|

||||

"content": "# User Query\n {sys.query}",

|

||||

"role": "user"

|

||||

}

|

||||

],

|

||||

"sys_prompt": "## Role & Task\nYou are a **\u201cKnowledge Base Retrieval Q\\&A Agent\u201d** whose goal is to break down the user\u2019s question into retrievable subtasks, and then produce a multi-source-verified, structured, and actionable research report using the internal knowledge base.\n## Execution Framework (Detailed Steps & Key Points)\n1. **Assessment & Decomposition**\n * Actions:\n * Automatically extract: main topic, subtopics, entities (people/organizations/products/technologies), time window, geographic/business scope.\n * Output as a list: N facts/data points that must be collected (*N* ranges from 5\u201320 depending on question complexity).\n2. **Query Type Determination (Rule-Based)**\n * Example rules:\n * If the question involves a single issue but requests \u201cmethod comparison/multiple explanations\u201d \u2192 use **depth-first**.\n * If the question can naturally be split into \u22653 independent sub-questions \u2192 use **breadth-first**.\n * If the question can be answered by a single fact/specification/definition \u2192 use **simple query**.\n3. **Research Plan Formulation**\n * Depth-first: define 3\u20135 perspectives (methodology/stakeholders/time dimension/technical route, etc.), assign search keywords, target document types, and output format for each perspective.\n * Breadth-first: list subtasks, prioritize them, and assign search terms.\n * Simple query: directly provide the search sentence and required fields.\n4. **Retrieval Execution**\n * After retrieval: perform coverage check (does it contain the key facts?) and quality check (source diversity, authority, latest update time).\n * If standards are not met, automatically loop: rewrite queries (synonyms/cross-domain terms) and retry \u22643 times, or flag as requiring external search.\n5. **Integration & Reasoning**\n * Build the answer using a **fact\u2013evidence\u2013reasoning** chain. For each conclusion, attach 1\u20132 strongest pieces of evidence.\n---\n## Quality Gate Checklist (Verify at Each Stage)\n* **Stage 1 (Decomposition)**:\n * [ ] Key concepts and expected outputs identified\n * [ ] Required facts/data points listed\n* **Stage 2 (Retrieval)**:\n * [ ] Meets quality standards (see above)\n * [ ] If not met: execute query iteration\n* **Stage 3 (Generation)**:\n * [ ] Each conclusion has at least one direct evidence source\n * [ ] State assumptions/uncertainties\n * [ ] Provide next-step suggestions or experiment/retrieval plans\n * [ ] Final length and depth match user expectations (comply with word count/format if specified)\n---\n## Core Principles\n1. **Strict reliance on the knowledge base**: answers must be **fully bounded** by the content retrieved from the knowledge base.\n2. **No fabrication**: do not generate, infer, or create information that is not explicitly present in the knowledge base.\n3. **Accuracy first**: prefer incompleteness over inaccurate content.\n4. **Output format**:\n * Hierarchically clear modular structure\n * Logical grouping according to the MECE principle\n * Professionally presented formatting\n * Step-by-step cognitive guidance\n * Reasonable use of headings and dividers for clarity\n * *Italicize* key parameters\n * **Bold** critical information\n5. **LaTeX formula requirements**:\n * Inline formulas: start and end with `$`\n * Block formulas: start and end with `$$`, each `$$` on its own line\n * Block formula content must comply with LaTeX math syntax\n * Verify formula correctness\n---\n## Additional Notes (Interaction & Failure Strategy)\n* If the knowledge base does not cover critical facts: explicitly inform the user (with sample wording)\n* For time-sensitive issues: enforce time filtering in the search request, and indicate the latest retrieval date in the answer.\n* Language requirement: answer in the user\u2019s preferred language\n",

|

||||

"temperature": "0.1",

|

||||

"temperatureEnabled": true,

|

||||

"tools": [

|

||||

{

|

||||

"component_name": "Retrieval",

|

||||

"name": "Retrieval",

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"description": "",

|

||||

"empty_response": "",

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

"type": "string",

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

"top_n": 8,

|

||||

"use_kg": false

|

||||

}

|

||||

}

|

||||

],

|

||||

"topPEnabled": false,

|

||||

"top_p": 0.75,

|

||||

"user_prompt": "",

|

||||

"visual_files_var": ""

|

||||

}

|

||||

},

|

||||

"upstream": [

|

||||

"begin"

|

||||

]

|

||||

},

|

||||

"Message:OrangeYearsShine": {

|

||||

"downstream": [],

|

||||

"obj": {

|

||||

"component_name": "Message",

|

||||

"params": {

|

||||

"content": [

|

||||

"{Agent:NewPumasLick@content}"

|

||||

]

|

||||

}

|

||||

},

|

||||

"upstream": [

|

||||

"Agent:NewPumasLick"

|

||||

]

|

||||

},

|

||||

"begin": {

|

||||

"downstream": [

|

||||

"Agent:NewPumasLick"

|

||||

],

|

||||

"obj": {

|

||||

"component_name": "Begin",

|

||||

"params": {

|

||||

"enablePrologue": true,

|

||||

"inputs": {},

|

||||

"mode": "conversational",

|

||||

"prologue": "\u4f60\u597d\uff01 \u6211\u662f\u4f60\u7684\u52a9\u7406\uff0c\u6709\u4ec0\u4e48\u53ef\u4ee5\u5e2e\u5230\u4f60\u7684\u5417\uff1f"

|

||||

}

|

||||

},

|

||||

"upstream": []

|

||||

}

|

||||

},

|

||||

"globals": {

|

||||

"sys.conversation_turns": 0,

|

||||

"sys.files": [],

|

||||

"sys.query": "",

|

||||

"sys.user_id": ""

|

||||

},

|

||||

"graph": {

|

||||

"edges": [

|

||||

{

|

||||

"data": {

|

||||

"isHovered": false

|

||||

},

|

||||

"id": "xy-edge__beginstart-Agent:NewPumasLickend",

|

||||

"source": "begin",

|

||||

"sourceHandle": "start",

|

||||

"target": "Agent:NewPumasLick",

|

||||

"targetHandle": "end"

|

||||

},

|

||||

{

|

||||

"data": {

|

||||

"isHovered": false

|

||||

},

|

||||

"id": "xy-edge__Agent:NewPumasLickstart-Message:OrangeYearsShineend",

|

||||

"markerEnd": "logo",

|

||||

"source": "Agent:NewPumasLick",

|

||||

"sourceHandle": "start",

|

||||

"style": {

|

||||

"stroke": "rgba(91, 93, 106, 1)",

|

||||

"strokeWidth": 1

|

||||

},

|

||||

"target": "Message:OrangeYearsShine",

|

||||

"targetHandle": "end",

|

||||

"type": "buttonEdge",

|

||||

"zIndex": 1001

|

||||

},

|

||||

{

|

||||

"data": {

|

||||

"isHovered": false

|

||||

},

|

||||

"id": "xy-edge__Agent:NewPumasLicktool-Tool:AllBirdsNailend",

|

||||

"selected": false,

|

||||

"source": "Agent:NewPumasLick",

|

||||

"sourceHandle": "tool",

|

||||

"target": "Tool:AllBirdsNail",

|

||||

"targetHandle": "end"

|

||||

}

|

||||

],

|

||||

"nodes": [

|

||||

{

|

||||

"data": {

|

||||

"form": {

|

||||

"enablePrologue": true,

|

||||

"inputs": {},

|

||||

"mode": "conversational",

|

||||

"prologue": "\u4f60\u597d\uff01 \u6211\u662f\u4f60\u7684\u52a9\u7406\uff0c\u6709\u4ec0\u4e48\u53ef\u4ee5\u5e2e\u5230\u4f60\u7684\u5417\uff1f"

|

||||

},

|

||||

"label": "Begin",

|

||||

"name": "begin"

|

||||

},

|

||||

"dragging": false,

|

||||

"id": "begin",

|

||||

"measured": {

|

||||

"height": 48,

|

||||

"width": 200

|

||||

},

|

||||

"position": {

|

||||

"x": -9.569875358221438,

|

||||

"y": 205.84018385864917

|

||||

},

|

||||

"selected": false,

|

||||

"sourcePosition": "left",

|

||||

"targetPosition": "right",

|

||||

"type": "beginNode"

|

||||

},

|

||||

{

|

||||

"data": {

|

||||

"form": {

|

||||

"content": [

|

||||

"{Agent:NewPumasLick@content}"

|

||||

]

|

||||

},

|

||||

"label": "Message",

|

||||

"name": "Response"

|

||||

},

|

||||

"dragging": false,

|

||||

"id": "Message:OrangeYearsShine",

|

||||

"measured": {

|

||||

"height": 56,

|

||||

"width": 200

|

||||

},

|

||||

"position": {

|

||||

"x": 734.4061285881053,

|

||||

"y": 199.9706031723009

|

||||

},

|

||||

"selected": false,

|

||||

"sourcePosition": "right",

|

||||

"targetPosition": "left",

|

||||

"type": "messageNode"

|

||||

},

|

||||

{

|

||||

"data": {

|

||||

"form": {

|

||||

"delay_after_error": 1,

|

||||

"description": "",

|

||||

"exception_comment": "",

|

||||

"exception_default_value": "",

|

||||

"exception_goto": [],

|

||||

"exception_method": null,

|

||||

"frequencyPenaltyEnabled": false,

|

||||

"frequency_penalty": 0.5,

|

||||

"llm_id": "qwen3-235b-a22b-instruct-2507@Tongyi-Qianwen",

|

||||

"maxTokensEnabled": true,

|

||||

"max_retries": 3,

|

||||

"max_rounds": 3,

|

||||

"max_tokens": 128000,

|

||||

"mcp": [],

|

||||

"message_history_window_size": 12,

|

||||

"outputs": {

|

||||

"content": {

|

||||

"type": "string",

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"parameter": "Precise",

|

||||

"presencePenaltyEnabled": false,

|

||||

"presence_penalty": 0.5,

|

||||

"prompts": [

|

||||

{

|

||||

"content": "# User Query\n {sys.query}",

|

||||

"role": "user"

|

||||

}

|

||||

],

|

||||

"sys_prompt": "## Role & Task\nYou are a **\u201cKnowledge Base Retrieval Q\\&A Agent\u201d** whose goal is to break down the user\u2019s question into retrievable subtasks, and then produce a multi-source-verified, structured, and actionable research report using the internal knowledge base.\n## Execution Framework (Detailed Steps & Key Points)\n1. **Assessment & Decomposition**\n * Actions:\n * Automatically extract: main topic, subtopics, entities (people/organizations/products/technologies), time window, geographic/business scope.\n * Output as a list: N facts/data points that must be collected (*N* ranges from 5\u201320 depending on question complexity).\n2. **Query Type Determination (Rule-Based)**\n * Example rules:\n * If the question involves a single issue but requests \u201cmethod comparison/multiple explanations\u201d \u2192 use **depth-first**.\n * If the question can naturally be split into \u22653 independent sub-questions \u2192 use **breadth-first**.\n * If the question can be answered by a single fact/specification/definition \u2192 use **simple query**.\n3. **Research Plan Formulation**\n * Depth-first: define 3\u20135 perspectives (methodology/stakeholders/time dimension/technical route, etc.), assign search keywords, target document types, and output format for each perspective.\n * Breadth-first: list subtasks, prioritize them, and assign search terms.\n * Simple query: directly provide the search sentence and required fields.\n4. **Retrieval Execution**\n * After retrieval: perform coverage check (does it contain the key facts?) and quality check (source diversity, authority, latest update time).\n * If standards are not met, automatically loop: rewrite queries (synonyms/cross-domain terms) and retry \u22643 times, or flag as requiring external search.\n5. **Integration & Reasoning**\n * Build the answer using a **fact\u2013evidence\u2013reasoning** chain. For each conclusion, attach 1\u20132 strongest pieces of evidence.\n---\n## Quality Gate Checklist (Verify at Each Stage)\n* **Stage 1 (Decomposition)**:\n * [ ] Key concepts and expected outputs identified\n * [ ] Required facts/data points listed\n* **Stage 2 (Retrieval)**:\n * [ ] Meets quality standards (see above)\n * [ ] If not met: execute query iteration\n* **Stage 3 (Generation)**:\n * [ ] Each conclusion has at least one direct evidence source\n * [ ] State assumptions/uncertainties\n * [ ] Provide next-step suggestions or experiment/retrieval plans\n * [ ] Final length and depth match user expectations (comply with word count/format if specified)\n---\n## Core Principles\n1. **Strict reliance on the knowledge base**: answers must be **fully bounded** by the content retrieved from the knowledge base.\n2. **No fabrication**: do not generate, infer, or create information that is not explicitly present in the knowledge base.\n3. **Accuracy first**: prefer incompleteness over inaccurate content.\n4. **Output format**:\n * Hierarchically clear modular structure\n * Logical grouping according to the MECE principle\n * Professionally presented formatting\n * Step-by-step cognitive guidance\n * Reasonable use of headings and dividers for clarity\n * *Italicize* key parameters\n * **Bold** critical information\n5. **LaTeX formula requirements**:\n * Inline formulas: start and end with `$`\n * Block formulas: start and end with `$$`, each `$$` on its own line\n * Block formula content must comply with LaTeX math syntax\n * Verify formula correctness\n---\n## Additional Notes (Interaction & Failure Strategy)\n* If the knowledge base does not cover critical facts: explicitly inform the user (with sample wording)\n* For time-sensitive issues: enforce time filtering in the search request, and indicate the latest retrieval date in the answer.\n* Language requirement: answer in the user\u2019s preferred language\n",

|

||||

"temperature": "0.1",

|

||||

"temperatureEnabled": true,

|

||||

"tools": [

|

||||

{

|

||||

"component_name": "Retrieval",

|

||||

"name": "Retrieval",

|

||||

"params": {

|

||||

"cross_languages": [],

|

||||

"description": "",

|

||||

"empty_response": "",

|

||||

"kb_ids": [],

|

||||

"keywords_similarity_weight": 0.7,

|

||||

"outputs": {

|

||||

"formalized_content": {

|

||||

"type": "string",

|

||||

"value": ""

|

||||

}

|

||||

},

|

||||

"rerank_id": "",

|

||||

"similarity_threshold": 0.2,

|

||||

"top_k": 1024,

|

||||

"top_n": 8,

|

||||

"use_kg": false

|

||||

}

|

||||

}

|

||||

],

|

||||

"topPEnabled": false,

|

||||

"top_p": 0.75,

|

||||

"user_prompt": "",

|

||||

"visual_files_var": ""

|

||||

},

|

||||

"label": "Agent",

|

||||

"name": "Knowledge Base Agent"

|

||||

},

|

||||

"dragging": false,

|

||||

"id": "Agent:NewPumasLick",

|

||||

"measured": {

|

||||

"height": 84,

|

||||

"width": 200

|

||||

},

|

||||

"position": {

|

||||

"x": 347.00048227952215,

|

||||

"y": 186.49109364794631

|

||||

},

|

||||

"selected": false,

|

||||

"sourcePosition": "right",

|

||||

"targetPosition": "left",

|

||||

"type": "agentNode"

|

||||

},

|

||||

{

|

||||

"data": {

|

||||

"form": {

|

||||

"description": "This is an agent for a specific task.",

|

||||

"user_prompt": "This is the order you need to send to the agent."

|

||||

},

|

||||

"label": "Tool",

|

||||

"name": "flow.tool_10"

|

||||

},

|

||||

"dragging": false,

|

||||

"id": "Tool:AllBirdsNail",

|

||||

"measured": {

|

||||

"height": 48,

|

||||

"width": 200

|

||||

},

|

||||

"position": {

|

||||

"x": 220.24819746977118,

|

||||

"y": 403.31576836482583

|

||||

},

|

||||

"selected": false,

|

||||

"sourcePosition": "right",

|

||||

"targetPosition": "left",

|

||||

"type": "toolNode"

|

||||

}

|

||||

]

|

||||

},

|

||||

"history": [],

|

||||

"memory": [],

|

||||

"messages": [],

|

||||

"path": [],

|

||||

"retrieval": []

|

||||

},

|

||||

"avatar": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAADAAAAAwCAYAAABXAvmHAAAH0klEQVR4nO2ZC1BU1wGG/3uRp/IygG+DGK0GOjE1U6cxI4tT03Y0E+kENbaJbKpj60wzgNMwnTjuEtu0miGasY+0krI202kMVEnVxtoOLG00oVa0LajVBDcSEI0REFBgkZv/3GWXfdzdvctuHs7kmzmec9//d+45914XCXc4Xwjk1+59VJGGF7C5QAFSWBvgyWmWLl7IKiny6QNL173B5YjB84bOyrpKA4B1DLySdQpLKAiZGtZ7a/KMVoQJz6UfEZyhTWwaEBmssiLvCueu6BJg8EwFqGTTAC+uvNWC9w82sRWcux/JwaSHstjywcogRt4RG0KExwWG4QsVYCebKSwe3L5lR9OOWjyzfg2WL/0a1/jncO3b2FHxGnKeWYqo+Giu8UEMrWJKWBACPMY/DG+63txhvnKshUu+DF2/hayMDFRsL+VScDb++AVc6OjAuInxXPJl2tfnIikrzUyJMi7qQmLRhOEr2fOFbX/7P6STF7BqoWevfdij4NWGQfx+57OYO2sG1wSnsek8Nm15EU8sikF6ouelXz9ph7JwDqYt+5IIZaGEkauDIrH4wPBmhjexCSEws+VdVG1M4NIoj+2xYzBuJtavWcEl/VS8dggx/ZdQvcGzQwp+cxOXsu5RBQQMVkYJM4LA/Txh+ELFMWFVPARS5kFiabZdx8Olh7l17BzdvhzZmROhdJ3j6D/nIyBgOCMlLAgA9xmF4TMV4BSbrgnrLiBl5rOsRCRRbDUsBzQFiJjY91PCBj9w+yiP1lXWsTLAjc9YQGB9I8+Yx1oTiUWFvW9QgDo2PdASaDp/EQ8/sRnhcPTVcuTMncXwQQVESL9DidscaPW+QEtAICRu9PSxFTpJiePV8AI9AsTvXZBY/Pa+wJ9ApNApIILm8S5Y4QXXQwhYFH6csemDP4G3G5v579i5d04mknknQhDYS4HCrCVr/mC3D305KnbCEpvVIia5Onw6WaWw+KAl0Np+FUXbdiMcyoqfUoeRHoFrJ1uRtnBG1/9Mf/3LtElp+VwF2wcd7woJib1vUPwMH4GWQCQJJtBa/V9cPmFD8uQUpMdNGDhY8bNYrobh8acHu270/l0ImJWRt64Wn6WACN9z5gq2lXwPW8pfweT0icP/fH23vO9QLYq3/QKyLBmFQI3CUcT9NdESEEPItKsSN3r7MBaSJoxHWZERM6ZmMLy2gDP8/pd/og418dTL37hFSUpMUC5f+UiWZcnY9s5+ixCwUiCXx2iiJdDNx6f4pgkH8Q3lbxK7h8+enoHha1cRNdMp8axiHxo6+/5bVdk8DSROYIW1X7QEIom3wHD3gEf4vu1bVYEJZeWQ0zJQvmcfyiv2QZak6raG/QWfK4Ez9mTc5v8xPMJfuojoxXmIX/9DOMe+FCWbcHu4BJJ0YEwCx0824bFNW9HesB+CqYu+jepfPYcHF+aoPXS8sQl/+vU2bgmOU2C+qRc9/YrrPPbGBtzavd0nvCxLxui4pJrBm911PFwak4CYA80cj+JCAiGUzYkmxrSY4N2c3GLi6UEIFL/wRxxqkhmHnTEpDQcrfq6ea+hcE8bNy3GFzyq4H22HW1Kd4WMSkg1jmsSRpKj0Rzhy4gNUv/y8Gjrv8SJK3OWScA+fMn/ysVPPvTmeh6nh1TcxBUJ+jEaKYr7N36x7h+Edj0pB6+WrLokn87+BrTt/p4ZPzZ6MM7/8R2//h33vOcNzdwgBMwVMbGvySQmo4a0NqOZccU7YmGXLEfPQUlUid/XT6B8YdIU/99vjsPcOdEhDsfOd4QVCwKB8yp8SWuG1njbTl83DpMWz1PCKAswuWPDI0e8WebyAJBbxNdrF7cls+hBpAb3h3XtehL/3+4u7D35rQwpP4YFTwMJ91rHpQyQFQgmf9sAMNL9Ur4afv/FBjIuPVj+n4YVTwMD96tj0IVICoYYXv/q1VJ1Sl8UveQyaRwErvOB6B5SwKhqP00gI6A0vhsycJ7/KIzxhyHqGN0ADbnNAAYOicRfCFdAb/p50Gbfuc/wy5w1D5lOghk0fuG0USlgVr7sQjoDe8C8WxKGKPy2KjzlvAQb02/sCbh+FApngX1QUtyeSuwDi0hxFByV7L+LIf3r5kvpp4PBr07Hqvn71Y85bgOG6WS2ggA1+4D6eUKKQApVsqngI6KSkqh9HzsoM/3zg8Oz5VQ9E8wjf30YFDGdkeAsCwH18oYRZGXk7C4HuYxcwe6rjQsFovzaEvoFxqNkTOPzMjGikJso8wsF77XYkLx6dAwxWxvBmBIH7aUMJi8J3w0DnTVz7dyvX6KPzVBt+kL8cmzesRq9ps2Z48bRJmOIapS7E4zM2lXNt5CcU6ID7+ocSZkqY2NRN6ysnsHbJEpR8ZwV6t5Yg+iuLELf2KVd48VwXQf3BQGUMb4ZOuH9gKFEIYJfiNrEDcXZHHV4q3YRv5i7ikgM94RlETNgihrcgBHhccCiRCf7VhBK5rAPyr9I/Y/WKPEyfksH/9NjQ2dODhsYzwcLXsypkeBtCRGLRDUUMAMyKHxEx4dtrzyP97nQMygripiQiKi4aSbPvQmKW7+OXF69ntYvBa1iPCYklZEZECsGm4ja0Ops7EJsaj4SprlU+8IJiqIjAFga3Ikx4vvAYkTGALxyWFArlsnbBC9Sz6mI5zWKNRGh3JJY7mjte4GOz+r4tkRbxQQAAAABJRU5ErkJggg=="

|

||||

}

|

||||

@ -1,7 +1,11 @@

|

||||

{

|

||||

"id": 12,

|

||||

"title": "Generate SEO Blog",

|

||||

"description": "This workflow automatically generates a complete SEO-optimized blog article based on a simple user input. You don’t need any writing experience. Just provide a topic or short request — the system will handle the rest.",

|

||||

"title": {

|

||||

"en": "Generate SEO Blog",

|

||||

"zh": "生成SEO博客"},

|

||||

"description": {

|

||||

"en": "This workflow automatically generates a complete SEO-optimized blog article based on a simple user input. You don’t need any writing experience. Just provide a topic or short request — the system will handle the rest.",

|

||||

"zh": "此工作流根据简单的用户输入自动生成完整的SEO博客文章。你无需任何写作经验,只需提供一个主题或简短请求,系统将处理其余部分。"},

|

||||

"canvas_type": "Marketing",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,7 +1,11 @@

|

||||

{

|

||||

"id": 4,

|

||||

"title": "Generate SEO Blog",

|

||||

"description": "This workflow automatically generates a complete SEO-optimized blog article based on a simple user input. You don’t need any writing experience. Just provide a topic or short request — the system will handle the rest.",

|

||||

"title": {

|

||||

"en": "Generate SEO Blog",

|

||||

"zh": "生成SEO博客"},

|

||||

"description": {

|

||||

"en": "This workflow automatically generates a complete SEO-optimized blog article based on a simple user input. You don’t need any writing experience. Just provide a topic or short request — the system will handle the rest.",

|

||||

"zh": "此工作流根据简单的用户输入自动生成完整的SEO博客文章。你无需任何写作经验,只需提供一个主题或简短请求,系统将处理其余部分。"},

|

||||

"canvas_type": "Recommended",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,7 +1,11 @@

|

||||

{

|

||||

"id": 17,

|

||||

"title": "SQL Assistant",

|

||||

"description": "SQL Assistant is an AI-powered tool that lets business users turn plain-English questions into fully formed SQL queries. Simply type your question (e.g., “Show me last quarter’s top 10 products by revenue”) and SQL Assistant generates the exact SQL, runs it against your database, and returns the results in seconds. ",

|

||||

"title": {

|

||||

"en": "SQL Assistant",

|

||||

"zh": "SQL助理"},

|

||||

"description": {

|

||||

"en": "SQL Assistant is an AI-powered tool that lets business users turn plain-English questions into fully formed SQL queries. Simply type your question (e.g., “Show me last quarter’s top 10 products by revenue”) and SQL Assistant generates the exact SQL, runs it against your database, and returns the results in seconds. ",

|

||||

"zh": "用户能够将简单文本问题转化为完整的SQL查询并输出结果。只需输入您的问题(例如,“展示上个季度前十名按收入排序的产品”),SQL助理就会生成精确的SQL语句,对其运行您的数据库,并几秒钟内返回结果。"},

|

||||

"canvas_type": "Marketing",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

File diff suppressed because one or more lines are too long

@ -1,8 +1,12 @@

|

||||

|

||||

{

|

||||

"id": 9,

|

||||

"title": "Technical Docs QA",

|

||||

"description": "This is a document question-and-answer system based on a knowledge base. When a user asks a question, it retrieves relevant document content to provide accurate answers.",

|

||||

"title": {

|

||||

"en": "Technical Docs QA",

|

||||

"zh": "技术文档问答"},

|

||||

"description": {

|

||||

"en": "This is a document question-and-answer system based on a knowledge base. When a user asks a question, it retrieves relevant document content to provide accurate answers.",

|

||||

"zh": "基于知识库的文档问答系统,当用户提出问题时,会检索相关本地文档并提供准确回答。"},

|

||||

"canvas_type": "Customer Support",

|

||||

"dsl": {

|

||||

"components": {

|

||||

|

||||

@ -1,9 +1,13 @@

|

||||

|

||||

{

|

||||

"id": 14,

|

||||

"title": "Trip Planner",

|

||||

"description": "This smart trip planner utilizes LLM technology to automatically generate customized travel itineraries, with optional tool integration for enhanced reliability.",

|

||||

"canvas_type": "Consumer App",

|

||||

"title": {

|

||||

"en": "Trip Planner",

|

||||

"zh": "旅行规划"},

|

||||

"description": {

|

||||

"en": "This smart trip planner utilizes LLM technology to automatically generate customized travel itineraries, with optional tool integration for enhanced reliability.",

|

||||

"zh": "智能旅行规划将利用大模型自动生成定制化的旅行行程,附带可选工具集成,以增强可靠性。"},

|

||||

"canvas_type": "Consumer App",

|

||||

"dsl": {

|

||||

"components": {

|

||||

"Agent:OddGuestsPump": {

|

||||

|

||||

@ -1,9 +1,13 @@

|

||||

|

||||

{

|

||||

"id": 16,

|

||||

"title": "WebSearch Assistant",

|

||||

"description": "A chat assistant template that integrates information extracted from a knowledge base and web searches to respond to queries. Let's start by setting up your knowledge base in 'Retrieval'!",

|

||||

"canvas_type": "Other",

|

||||

"title": {

|

||||

"en": "WebSearch Assistant",

|

||||

"zh": "网页搜索助手"},

|

||||

"description": {

|

||||

"en": "A chat assistant template that integrates information extracted from a knowledge base and web searches to respond to queries. Let's start by setting up your knowledge base in 'Retrieval'!",

|

||||

"zh": "集成了从知识库和网络搜索中提取的信息回答用户问题。让我们从设置您的知识库开始检索!"},

|

||||

"canvas_type": "Other",

|

||||

"dsl": {

|

||||

"components": {

|

||||

"Agent:SmartSchoolsCross": {

|

||||

|

||||

@ -16,9 +16,8 @@

|

||||

from abc import ABC

|

||||

import asyncio

|

||||

from crawl4ai import AsyncWebCrawler

|

||||

|

||||

from agent.tools.base import ToolParamBase, ToolBase

|

||||

from api.utils.web_utils import is_valid_url

|

||||

|

||||

|

||||

|

||||

class CrawlerParam(ToolParamBase):

|

||||

@ -39,6 +38,7 @@ class Crawler(ToolBase, ABC):

|

||||

component_name = "Crawler"

|

||||

|

||||

def _run(self, history, **kwargs):

|

||||

from api.utils.web_utils import is_valid_url

|

||||

ans = self.get_input()

|

||||

ans = " - ".join(ans["content"]) if "content" in ans else ""

|

||||

if not is_valid_url(ans):

|

||||

|

||||

156

agent/tools/searxng.py

Normal file

156

agent/tools/searxng.py

Normal file

@ -0,0 +1,156 @@

|

||||

#

|

||||

# Copyright 2024 The InfiniFlow Authors. All Rights Reserved.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

#

|

||||

import logging

|

||||

import os

|

||||

import time

|

||||

from abc import ABC

|

||||

import requests

|

||||

from agent.tools.base import ToolMeta, ToolParamBase, ToolBase

|

||||

from api.utils.api_utils import timeout

|

||||

|

||||

|

||||

class SearXNGParam(ToolParamBase):

|

||||

"""

|

||||

Define the SearXNG component parameters.

|

||||

"""

|

||||

|

||||

def __init__(self):

|

||||

self.meta: ToolMeta = {

|

||||

"name": "searxng_search",

|

||||

"description": "SearXNG is a privacy-focused metasearch engine that aggregates results from multiple search engines without tracking users. It provides comprehensive web search capabilities.",

|

||||

"parameters": {

|

||||

"query": {

|

||||

"type": "string",

|

||||

"description": "The search keywords to execute with SearXNG. The keywords should be the most important words/terms(includes synonyms) from the original request.",

|

||||

"default": "{sys.query}",

|

||||

"required": True

|

||||

},

|

||||

"searxng_url": {

|

||||

"type": "string",

|

||||

"description": "The base URL of your SearXNG instance (e.g., http://localhost:4000). This is required to connect to your SearXNG server.",

|

||||

"required": False,

|

||||

"default": ""

|

||||

}

|

||||

}

|

||||

}

|

||||

super().__init__()

|

||||

self.top_n = 10

|

||||

self.searxng_url = ""

|

||||

|

||||

def check(self):

|

||||

# Keep validation lenient so opening try-run panel won't fail without URL.

|

||||

# Coerce top_n to int if it comes as string from UI.

|

||||

try:

|

||||

if isinstance(self.top_n, str):

|

||||

self.top_n = int(self.top_n.strip())

|

||||

except Exception:

|

||||

pass

|

||||

self.check_positive_integer(self.top_n, "Top N")

|

||||

|

||||

def get_input_form(self) -> dict[str, dict]:

|

||||

return {

|

||||

"query": {

|

||||

"name": "Query",

|

||||

"type": "line"

|

||||

},

|

||||

"searxng_url": {

|

||||

"name": "SearXNG URL",

|

||||

"type": "line",

|

||||

"placeholder": "http://localhost:4000"

|

||||

}

|

||||

}

|

||||

|

||||

|

||||

class SearXNG(ToolBase, ABC):

|

||||

component_name = "SearXNG"

|

||||

|

||||

@timeout(os.environ.get("COMPONENT_EXEC_TIMEOUT", 12))

|

||||

def _invoke(self, **kwargs):

|

||||

# Gracefully handle try-run without inputs

|

||||

query = kwargs.get("query")

|

||||

if not query or not isinstance(query, str) or not query.strip():

|

||||

self.set_output("formalized_content", "")

|

||||

return ""

|

||||

|

||||

searxng_url = (kwargs.get("searxng_url") or getattr(self._param, "searxng_url", "") or "").strip()

|

||||

# In try-run, if no URL configured, just return empty instead of raising

|

||||

if not searxng_url:

|

||||

self.set_output("formalized_content", "")

|

||||

return ""

|

||||

|

||||

last_e = ""

|

||||

for _ in range(self._param.max_retries+1):

|

||||

try:

|

||||

# 构建搜索参数

|

||||

search_params = {

|

||||

'q': query,

|

||||

'format': 'json',

|

||||

'categories': 'general',

|

||||

'language': 'auto',

|

||||

'safesearch': 1,

|

||||

'pageno': 1

|

||||

}

|

||||

|

||||

# 发送搜索请求

|

||||

response = requests.get(

|

||||

f"{searxng_url}/search",

|

||||

params=search_params,

|

||||

timeout=10

|

||||

)

|

||||

response.raise_for_status()

|

||||

|

||||

data = response.json()

|

||||

|

||||

# 验证响应数据

|

||||

if not data or not isinstance(data, dict):

|

||||

raise ValueError("Invalid response from SearXNG")

|

||||

|

||||

results = data.get("results", [])

|

||||

if not isinstance(results, list):

|

||||

raise ValueError("Invalid results format from SearXNG")

|

||||

|

||||

# 限制结果数量

|

||||

results = results[:self._param.top_n]

|

||||

|

||||

# 处理搜索结果

|

||||

self._retrieve_chunks(results,

|

||||

get_title=lambda r: r.get("title", ""),

|

||||

get_url=lambda r: r.get("url", ""),

|

||||

get_content=lambda r: r.get("content", ""))

|

||||

|

||||

self.set_output("json", results)

|

||||

return self.output("formalized_content")

|

||||

|

||||

except requests.RequestException as e:

|

||||

last_e = f"Network error: {e}"

|

||||

logging.exception(f"SearXNG network error: {e}")

|

||||

time.sleep(self._param.delay_after_error)

|

||||

except Exception as e:

|

||||

last_e = str(e)

|

||||

logging.exception(f"SearXNG error: {e}")

|

||||

time.sleep(self._param.delay_after_error)

|

||||

|

||||

if last_e:

|

||||

self.set_output("_ERROR", last_e)

|

||||

return f"SearXNG error: {last_e}"

|

||||

|

||||

assert False, self.output()

|

||||

|

||||

def thoughts(self) -> str:

|

||||

return """

|

||||

Keywords: {}

|

||||

Searching with SearXNG for relevant results...

|

||||

""".format(self.get_input().get("query", "-_-!"))

|

||||

@ -93,6 +93,7 @@ def list_chunk():

|

||||

def get():

|

||||

chunk_id = request.args["chunk_id"]

|

||||

try:

|

||||

chunk = None

|

||||

tenants = UserTenantService.query(user_id=current_user.id)

|

||||

if not tenants:

|

||||

return get_data_error_result(message="Tenant not found!")

|

||||

|

||||

@ -66,7 +66,7 @@ def set_dialog():

|

||||

|

||||

if not is_create:

|

||||

if not req.get("kb_ids", []) and not prompt_config.get("tavily_api_key") and "{knowledge}" in prompt_config['system']:

|

||||

return get_data_error_result(message="Please remove `{knowledge}` in system prompt since no knowledge base/Tavily used here.")

|

||||

return get_data_error_result(message="Please remove `{knowledge}` in system prompt since no knowledge base / Tavily used here.")

|

||||

|

||||

for p in prompt_config["parameters"]:

|

||||

if p["optional"]:

|

||||

|

||||

@ -243,7 +243,7 @@ def add_llm():

|

||||

model_name=mdl_nm,

|

||||

base_url=llm["api_base"]

|

||||

)

|

||||

arr, tc = mdl.similarity("Hello~ Ragflower!", ["Hi, there!", "Ohh, my friend!"])

|

||||