mirror of https://github.com/infiniflow/ragflow.git

synced 2026-01-04 03:25:30 +08:00

Compare commits

36 Commits

cc167ae619...v0.20.4

| SHA1 |
|---|
| 2d89863fdd |
| 6cb3e08381 |
| 986b9cbb1a |
| 9c456adffd |
| c15b138839 |
| ff11348f7c |
| cbdabbb58f |
| cf0011be67 |
| 1f47001c82 |
| a914535344 |
| ba1063c2b9 |
| 2b4bca4447 |
| 11cf6ae313 |
| 88db5d90d1 |
| 209ef09dc3 |
| 370c8bc25b |
| e90a959b4d |
| ca320a8c30 |
| ae505e6165 |
| 63b5c2292d |
| 8d8a5f73b6 |
| d0fa66f4d5 |
| 9dd22e141b |
| b6c1ca828e |
| d367c7e226 |
| a3aa3f0d36 |
| 7b8752fe24 |
| 5e2c33e5b0 |
| e40be8e541 |
| 23d0b564d3 |
| ecaa9de843 |
| 2f74727bb9 |
| adbb038a87 |
| 3947da10ae |
| 4862be28ad |
| 035e8ed0f7 |

@@ -22,7 +22,7 @@
<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
</a>
<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.3">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.4">
</a>
<a href="https://github.com/infiniflow/ragflow/releases/latest">
<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -190,7 +190,7 @@ releases! 🌟
> All Docker images are built for x86 platforms. We don't currently offer Docker images for ARM64.
> If you are on an ARM64 platform, follow [this guide](https://ragflow.io/docs/dev/build_docker_image) to build a Docker image compatible with your system.

> The command below downloads the `v0.20.3-slim` edition of the RAGFlow Docker image. See the following table for descriptions of different RAGFlow editions. To download a RAGFlow edition different from `v0.20.3-slim`, update the `RAGFLOW_IMAGE` variable accordingly in **docker/.env** before using `docker compose` to start the server. For example: set `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3` for the full edition `v0.20.3`.
> The command below downloads the `v0.20.4-slim` edition of the RAGFlow Docker image. See the following table for descriptions of different RAGFlow editions. To download a RAGFlow edition different from `v0.20.4-slim`, update the `RAGFLOW_IMAGE` variable accordingly in **docker/.env** before using `docker compose` to start the server. For example: set `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4` for the full edition `v0.20.4`.

```bash
$ cd ragflow/docker

@@ -203,8 +203,8 @@ releases! 🌟

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |
|-------------------|-----------------|-----------------------|--------------------------|
| v0.20.3 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.3-slim | ≈2 | ❌ | Stable release |
| v0.20.4 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.4-slim | ≈2 | ❌ | Stable release |
| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |
| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |
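The README note says you pick an edition by editing `RAGFLOW_IMAGE` in **docker/.env**. The sketch below is a minimal illustration of that step. It assumes the file uses plain `KEY=value` lines and does not handle quoting or `export` prefixes. `image_for_tag` and `set_ragflow_image` are hypothetical helpers and are not part of RAGFlow. The tag set holds only the post-change rows of the table.

```python
# Tags from the post-change edition table; "-slim" editions ship without
# embedding models, and "nightly" builds are unstable.
KNOWN_TAGS = {"v0.20.4", "v0.20.4-slim", "nightly", "nightly-slim"}


def image_for_tag(tag: str) -> str:
    """Return the full image reference for a tag listed in the table (hypothetical helper)."""
    if tag not in KNOWN_TAGS:
        raise ValueError(f"unknown RAGFlow tag: {tag}")
    return f"infiniflow/ragflow:{tag}"


def set_ragflow_image(env_text: str, image: str) -> str:
    """Rewrite the first RAGFLOW_IMAGE=... line of .env-style text, appending it if absent.

    Assumes plain KEY=value lines. Commented-out lines such as
    "# RAGFLOW_IMAGE=..." are left untouched because they do not start with the key.
    """
    lines = env_text.splitlines()
    for i, line in enumerate(lines):
        if line.startswith("RAGFLOW_IMAGE="):
            lines[i] = f"RAGFLOW_IMAGE={image}"
            break
    else:
        # No existing assignment found: add one at the end.
        lines.append(f"RAGFLOW_IMAGE={image}")
    return "\n".join(lines) + "\n"
```

To use it, read the contents of `docker/.env` and pass them through `set_ragflow_image(text, image_for_tag("v0.20.4"))`. Write the result back, then start the server with `docker compose` as usual.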

@@ -22,7 +22,7 @@
<img alt="Lencana Daring" src="https://img.shields.io/badge/Online-Demo-4e6b99">
</a>
<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.3">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.4">
</a>
<a href="https://github.com/infiniflow/ragflow/releases/latest">
<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Rilis%20Terbaru" alt="Rilis Terbaru">

@@ -181,7 +181,7 @@ Try our demo at [https://demo.ragflow.io](https://demo.ragflow.io).
> All Docker images are built for x86 platforms. We do not currently offer Docker images for ARM64.
> If you are using an ARM64 platform, [please use this guide to build a Docker image compatible with your system](https://ragflow.io/docs/dev/build_docker_image).

> The command below downloads the v0.20.3-slim edition of the RAGFlow Docker image. Please refer to the following table for descriptions of the different RAGFlow editions. To download a RAGFlow edition other than v0.20.3-slim, update the RAGFLOW_IMAGE variable in docker/.env before using docker compose to start the server. For example, set RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3 for the full edition v0.20.3.
> The command below downloads the v0.20.4-slim edition of the RAGFlow Docker image. Please refer to the following table for descriptions of the different RAGFlow editions. To download a RAGFlow edition other than v0.20.4-slim, update the RAGFLOW_IMAGE variable in docker/.env before using docker compose to start the server. For example, set RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4 for the full edition v0.20.4.

```bash
$ cd ragflow/docker

@@ -194,8 +194,8 @@ $ docker compose -f docker-compose.yml up -d

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |
| ----------------- | --------------- | --------------------- | ------------------------ |
| v0.20.3 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.3-slim | ≈2 | ❌ | Stable release |
| v0.20.4 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.4-slim | ≈2 | ❌ | Stable release |
| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |
| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

@@ -22,7 +22,7 @@
<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
</a>
<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.3">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.4">
</a>
<a href="https://github.com/infiniflow/ragflow/releases/latest">
<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -160,7 +160,7 @@
> Currently, all officially provided Docker images are built for the x86 architecture; no Docker images are provided for ARM64.
> If your operating system runs on the ARM64 architecture, refer to [this document](https://ragflow.io/docs/dev/build_docker_image) and build the Docker image yourself.

> The command below downloads the v0.20.3-slim edition of the RAGFlow Docker image. See the table below for descriptions of the different RAGFlow editions. To download an edition other than v0.20.3-slim, update the RAGFLOW_IMAGE variable in the docker/.env file accordingly, then start the server with docker compose. For example, to download the full edition v0.20.3, set RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3.
> The command below downloads the v0.20.4-slim edition of the RAGFlow Docker image. See the table below for descriptions of the different RAGFlow editions. To download an edition other than v0.20.4-slim, update the RAGFLOW_IMAGE variable in the docker/.env file accordingly, then start the server with docker compose. For example, to download the full edition v0.20.4, set RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4.

```bash
$ cd ragflow/docker

@@ -173,8 +173,8 @@

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |
| ----------------- | --------------- | --------------------- | ------------------------ |
| v0.20.3 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.3-slim | ≈2 | ❌ | Stable release |
| v0.20.4 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.4-slim | ≈2 | ❌ | Stable release |
| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |
| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

@@ -22,7 +22,7 @@
<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
</a>
<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.3">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.4">
</a>
<a href="https://github.com/infiniflow/ragflow/releases/latest">
<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -160,7 +160,7 @@
> All Docker images are built for x86 platforms. We do not currently provide Docker images for ARM64 platforms.
> If you are using an ARM64 platform, [please use this guide to build a Docker image compatible with your system](https://ragflow.io/docs/dev/build_docker_image).

> The command below downloads the v0.20.3-slim version of the RAGFlow Docker image. See the following table for descriptions of the various RAGFlow versions. To download a RAGFlow version other than v0.20.3-slim, update the RAGFLOW_IMAGE variable in the docker/.env file accordingly, then start the server with docker compose. For example, to download the full version v0.20.3, set RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3.
> The command below downloads the v0.20.4-slim version of the RAGFlow Docker image. See the following table for descriptions of the various RAGFlow versions. To download a RAGFlow version other than v0.20.4-slim, update the RAGFLOW_IMAGE variable in the docker/.env file accordingly, then start the server with docker compose. For example, to download the full version v0.20.4, set RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4.

```bash
$ cd ragflow/docker

@@ -173,8 +173,8 @@

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |
| ----------------- | --------------- | --------------------- | ------------------------ |
| v0.20.3 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.3-slim | ≈2 | ❌ | Stable release |
| v0.20.4 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.4-slim | ≈2 | ❌ | Stable release |
| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |
| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

@@ -22,7 +22,7 @@
<img alt="Badge Estático" src="https://img.shields.io/badge/Online-Demo-4e6b99">
</a>
<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.3">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.4">
</a>
<a href="https://github.com/infiniflow/ragflow/releases/latest">
<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Última%20Relese" alt="Última Versão">

@@ -180,7 +180,7 @@ Try our demo at [https://demo.ragflow.io](https://demo.ragflow.io).
> All Docker images are built for x86 platforms. We do not currently offer Docker images for ARM64.
> If you are using an ARM64 platform, please use [this guide](https://ragflow.io/docs/dev/build_docker_image) to build a Docker image compatible with your system.

> The command below downloads the `v0.20.3-slim` edition of the RAGFlow Docker image. See the following table for descriptions of the different RAGFlow editions. To download a RAGFlow edition other than `v0.20.3-slim`, update the `RAGFLOW_IMAGE` variable as needed in **docker/.env** before using `docker compose` to start the server. For example: set `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3` for the full edition `v0.20.3`.
> The command below downloads the `v0.20.4-slim` edition of the RAGFlow Docker image. See the following table for descriptions of the different RAGFlow editions. To download a RAGFlow edition other than `v0.20.4-slim`, update the `RAGFLOW_IMAGE` variable as needed in **docker/.env** before using `docker compose` to start the server. For example: set `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4` for the full edition `v0.20.4`.

```bash
$ cd ragflow/docker

@@ -193,8 +193,8 @@ Try our demo at [https://demo.ragflow.io](https://demo.ragflow.io).

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |
| --------------------- | ---------------------- | ------------------------------- | ------------------------ |
| v0.20.3 | ~9 | :heavy_check_mark: | Stable release |
| v0.20.3-slim | ~2 | ❌ | Stable release |
| v0.20.4 | ~9 | :heavy_check_mark: | Stable release |
| v0.20.4-slim | ~2 | ❌ | Stable release |
| nightly | ~9 | :heavy_check_mark: | _Unstable_ nightly build |
| nightly-slim | ~2 | ❌ | _Unstable_ nightly build |

@@ -22,7 +22,7 @@
<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
</a>
<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.3">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.4">
</a>
<a href="https://github.com/infiniflow/ragflow/releases/latest">
<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

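@@ -183,7 +183,7 @@
> All Docker images are built for the x86 platform. We do not currently provide Docker images for the ARM64 platform.
> If you are using an ARM64 platform, please use [this guide](https://ragflow.io/docs/dev/build_docker_image) to build a Docker image suited to your system.

> Running the command below automatically downloads the RAGFlow slim Docker image `v0.20.3-slim`. See the table below for descriptions of the different Docker editions. To download a Docker image other than `v0.20.3-slim`, update the `RAGFLOW_IMAGE` variable in the **docker/.env** file before running `docker compose` to start the service. For example, you can download the full `v0.20.3` edition of the RAGFlow image by setting `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3`.
> Running the command below automatically downloads the RAGFlow slim Docker image `v0.20.4-slim`. See the table below for descriptions of the different Docker editions. To download a Docker image other than `v0.20.4-slim`, update the `RAGFLOW_IMAGE` variable in the **docker/.env** file before running `docker compose` to start the service. For example, you can download the full `v0.20.4` edition of the RAGFlow image by setting `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4`.

```bash
$ cd ragflow/docker
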
@@ -196,8 +196,8 @@

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |
| ----------------- | --------------- | --------------------- | ------------------------ |
| v0.20.3 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.3-slim | ≈2 | ❌ | Stable release |
| v0.20.4 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.4-slim | ≈2 | ❌ | Stable release |
| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |
| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

@@ -22,7 +22,7 @@
<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
</a>
<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.3">
<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.20.4">
</a>
<a href="https://github.com/infiniflow/ragflow/releases/latest">
<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

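@@ -183,7 +183,7 @@
> Please note that all officially provided Docker images are currently built for the x86 architecture; Docker images for ARM64 are not provided.
> If your operating system runs on the ARM64 architecture, refer to [this document](https://ragflow.io/docs/dev/build_docker_image) to build the Docker image yourself.

> Running the command below automatically downloads the RAGFlow slim Docker image `v0.20.3-slim`. See the table below for descriptions of the different Docker editions. To download a Docker image other than `v0.20.3-slim`, update the `RAGFLOW_IMAGE` variable in the **docker/.env** file before running `docker compose` to start the service. For example, you can download the full `v0.20.3` edition of the RAGFlow image by setting `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3`.
> Running the command below automatically downloads the RAGFlow slim Docker image `v0.20.4-slim`. See the table below for descriptions of the different Docker editions. To download a Docker image other than `v0.20.4-slim`, update the `RAGFLOW_IMAGE` variable in the **docker/.env** file before running `docker compose` to start the service. For example, you can download the full `v0.20.4` edition of the RAGFlow image by setting `RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4`.

```bash
$ cd ragflow/docker
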
@@ -196,8 +196,8 @@

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |
| ----------------- | --------------- | --------------------- | ------------------------ |
| v0.20.3 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.3-slim | ≈2 | ❌ | Stable release |
| v0.20.4 | ≈9 | :heavy_check_mark: | Stable release |
| v0.20.4-slim | ≈2 | ❌ | Stable release |
| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |
| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

@@ -469,6 +469,9 @@ class Canvas:
def get_prologue(self):
return self.components["begin"]["obj"]._param.prologue

def get_mode(self):
return self.components["begin"]["obj"]._param.mode

def set_global_param(self, **kwargs):
self.globals.update(kwargs)
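This hunk adds `get_mode`. It reads from the same `begin` component parameters that `get_prologue` already uses. The sketch below copies only that lookup path, using stand-in objects. `CanvasSketch`, the `SimpleNamespace` components, and the `"example-mode"` value are illustrative assumptions. They are not RAGFlow's real component classes or mode names.

```python
from types import SimpleNamespace


class CanvasSketch:
    """Stand-in exposing only the accessors touched by this hunk."""

    def __init__(self, components):
        self.components = components
        self.globals = {}

    def get_prologue(self):
        return self.components["begin"]["obj"]._param.prologue

    def get_mode(self):
        # Same lookup path as get_prologue, reading a different parameter.
        return self.components["begin"]["obj"]._param.mode

    def set_global_param(self, **kwargs):
        self.globals.update(kwargs)


# Hypothetical "begin" component whose _param carries both values.
begin = SimpleNamespace(_param=SimpleNamespace(prologue="Hi! How can I help?", mode="example-mode"))
canvas = CanvasSketch({"begin": {"obj": begin}})
print(canvas.get_prologue(), canvas.get_mode())
```

Later in this diff, the `begin_inputs` endpoint adds `canvas.get_mode()` to its response next to the prologue.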

1048
agent/templates/ecommerce_customer_service_workflow.json
Normal file
File diff suppressed because one or more lines are too long

@@ -79,7 +79,7 @@ def main() -> dict:
return {
"result": fibonacci_recursive(100),
}


Here's a code example for Javascript(`main` function MUST be included and exported):
const axios = require('axios');
async function main(args) {

@@ -156,7 +156,7 @@ class CodeExec(ToolBase, ABC):
self.set_output("_ERROR", "construct code request error: " + str(e))

try:
resp = requests.post(url=f"http://{settings.SANDBOX_HOST}:9385/run", json=code_req, timeout=10)
resp = requests.post(url=f"http://{settings.SANDBOX_HOST}:9385/run", json=code_req, timeout=os.environ.get("COMPONENT_EXEC_TIMEOUT", 10*60))
logging.info(f"http://{settings.SANDBOX_HOST}:9385/run", code_req, resp.status_code)
if resp.status_code != 200:
resp.raise_for_status()
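The new line passes `os.environ.get("COMPONENT_EXEC_TIMEOUT", 10*60)` directly to `requests.post`. When the variable is set, `os.environ.get` returns a string. requests/urllib3 validate `timeout` as a number (or `None`), so converting the value first seems prudent. The `logging.info` call in the same hunk has a separate issue. Logging applies extra positional arguments as `%`-format arguments, so a message with no placeholders cannot absorb `code_req` and `resp.status_code`, and the handler reports a formatting error instead. The sketch below shows both adjustments. `exec_timeout` and `log_sandbox_call` are hypothetical helper names, not RAGFlow code.

```python
import logging
import os


def exec_timeout(default: float = 10 * 60) -> float:
    """Read COMPONENT_EXEC_TIMEOUT (seconds) as a positive number, else return default."""
    raw = os.environ.get("COMPONENT_EXEC_TIMEOUT")
    if raw is None:
        return default
    try:
        value = float(raw)
    except ValueError:
        logging.warning("Ignoring non-numeric COMPONENT_EXEC_TIMEOUT=%r", raw)
        return default
    # Reject zero, negative, and NaN values (NaN > 0 is False).
    return value if value > 0 else default


def log_sandbox_call(url: str, code_req: dict, status_code: int) -> None:
    # %-style placeholders let logging format the extra arguments lazily.
    logging.info("POST %s payload=%s status=%s", url, code_req, status_code)


# Inside the component the request would then read (not executed here):
# resp = requests.post(url=url, json=code_req, timeout=exec_timeout())
```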

@@ -16,8 +16,10 @@
import json
import re
import time

import tiktoken
from flask import Response, jsonify, request

from agent.canvas import Canvas
from api import settings
from api.db import LLMType, StatusEnum

@@ -27,7 +29,8 @@ from api.db.services.canvas_service import UserCanvasService, completionOpenAI
from api.db.services.canvas_service import completion as agent_completion
from api.db.services.conversation_service import ConversationService, iframe_completion
from api.db.services.conversation_service import completion as rag_completion
from api.db.services.dialog_service import DialogService, ask, chat, gen_mindmap
from api.db.services.dialog_service import DialogService, ask, chat, gen_mindmap, meta_filter
from api.db.services.document_service import DocumentService
from api.db.services.knowledgebase_service import KnowledgebaseService
from api.db.services.llm_service import LLMBundle
from api.db.services.search_service import SearchService

@@ -37,7 +40,7 @@ from api.utils.api_utils import check_duplicate_ids, get_data_openai, get_error_
from rag.app.tag import label_question
from rag.prompts import chunks_format
from rag.prompts.prompt_template import load_prompt
from rag.prompts.prompts import cross_languages, keyword_extraction
from rag.prompts.prompts import cross_languages, gen_meta_filter, keyword_extraction


@manager.route("/chats/<chat_id>/sessions", methods=["POST"]) # noqa: F821

@@ -81,21 +84,13 @@ def create_agent_session(tenant_id, agent_id):
if not isinstance(cvs.dsl, str):
cvs.dsl = json.dumps(cvs.dsl, ensure_ascii=False)

session_id=get_uuid()
session_id = get_uuid()
canvas = Canvas(cvs.dsl, tenant_id, agent_id)
canvas.reset()
conv = {
"id": session_id,
"dialog_id": cvs.id,
"user_id": user_id,
"message": [],
"source": "agent",
"dsl": cvs.dsl
}
API4ConversationService.save(**conv)

cvs.dsl = json.loads(str(canvas))
conv = {"id": session_id, "dialog_id": cvs.id, "user_id": user_id, "message": [{"role": "assistant", "content": canvas.get_prologue()}], "source": "agent", "dsl": cvs.dsl}
API4ConversationService.save(**conv)
conv["agent_id"] = conv.pop("dialog_id")
return get_result(data=conv)
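After this change, a new agent session's `message` list starts with the canvas prologue as an assistant turn. The record is saved with `dialog_id`, and the API response renames that key to `agent_id`. The sketch below shows only that dict construction, with no dependencies. `build_agent_session` is a hypothetical helper. `uuid.uuid4().hex` stands in for RAGFlow's `get_uuid()`, and persistence via `API4ConversationService.save` is left out.

```python
import uuid


def build_agent_session(agent_id: str, user_id: str, prologue: str, dsl: dict):
    """Return (record_to_store, response_payload) for a new agent session."""
    record = {
        "id": uuid.uuid4().hex,  # stand-in for get_uuid()
        "dialog_id": agent_id,
        "user_id": user_id,
        "message": [{"role": "assistant", "content": prologue}],
        "source": "agent",
        "dsl": dsl,
    }
    # The stored record keeps "dialog_id"; the response exposes it as "agent_id".
    response = dict(record)
    response["agent_id"] = response.pop("dialog_id")
    return record, response
```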

@@ -450,26 +445,46 @@ def agents_completion_openai_compatibility(tenant_id, agent_id):
def agent_completions(tenant_id, agent_id):
req = request.json

ans = {}
if req.get("stream", True):

def generate():
for answer in agent_completion(tenant_id=tenant_id, agent_id=agent_id, **req):
if isinstance(answer, str):
try:
ans = json.loads(answer[5:]) # remove "data:"
except Exception:
continue

if ans.get("event") != "message" or not ans.get("data", {}).get("reference", None):
continue

yield answer

yield "data:[DONE]\n\n"

if req.get("stream", True):
resp = Response(agent_completion(tenant_id=tenant_id, agent_id=agent_id, **req), mimetype="text/event-stream")
resp = Response(generate(), mimetype="text/event-stream")
resp.headers.add_header("Cache-control", "no-cache")
resp.headers.add_header("Connection", "keep-alive")
resp.headers.add_header("X-Accel-Buffering", "no")
resp.headers.add_header("Content-Type", "text/event-stream; charset=utf-8")
return resp
result = {}

full_content = ""
for answer in agent_completion(tenant_id=tenant_id, agent_id=agent_id, **req):
try:
ans = json.loads(answer[5:]) # remove "data:"
if not result:
result = ans.copy()
else:
result["data"]["answer"] += ans["data"]["answer"]
result["data"]["reference"] = ans["data"].get("reference", [])
ans = json.loads(answer[5:])

if ans["event"] == "message":
full_content += ans["data"]["content"]

if ans.get("data", {}).get("reference", None):
ans["data"]["content"] = full_content
return get_result(data=ans)
except Exception as e:
return get_error_data_result(str(e))
return result
return get_result(data=f"**ERROR**: {str(e)}")
return get_result(data=ans)
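In the non-streaming branch, each item from `agent_completion` is an SSE line of the form `data:{json}`, and `answer[5:]` strips the five-character `data:` prefix. The new loop concatenates the `content` of `message` events. It then returns the first event that carries a `reference`, with the accumulated text swapped in. The sketch below reproduces that aggregation over a plain list of such lines. `aggregate_sse` is a hypothetical name, and the sample events in the test are invented.

```python
import json


def aggregate_sse(lines):
    """Join message content until an event with a reference arrives, then return it."""
    full_content = ""
    ans = {}
    for line in lines:
        ans = json.loads(line[len("data:"):])  # equivalent to answer[5:]
        if ans.get("event") == "message":
            full_content += ans["data"]["content"]
        if ans.get("data", {}).get("reference"):
            ans["data"]["content"] = full_content
            return ans
    # No event carried a reference: fall back to the last event seen (or {}).
    return ans
```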

@manager.route("/chats/<chat_id>/sessions", methods=["GET"]) # noqa: F821
@@ -564,12 +579,12 @@ def list_agent_session(tenant_id, agent_id):
if message_num != 0 and messages[message_num]["role"] != "user":
chunk_list = []
# Add boundary and type checks to prevent KeyError
if (chunk_num < len(conv["reference"]) and
conv["reference"][chunk_num] is not None and
isinstance(conv["reference"][chunk_num], dict) and
"chunks" in conv["reference"][chunk_num]):
if chunk_num < len(conv["reference"]) and conv["reference"][chunk_num] is not None and isinstance(conv["reference"][chunk_num], dict) and "chunks" in conv["reference"][chunk_num]:
chunks = conv["reference"][chunk_num]["chunks"]
for chunk in chunks:
# Ensure chunk is a dictionary before calling get method
if not isinstance(chunk, dict):
continue
new_chunk = {
"id": chunk.get("chunk_id", chunk.get("id")),
"content": chunk.get("content_with_weight", chunk.get("content")),
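Before it reads `conv["reference"][chunk_num]`, the condition checks for four problems: an out-of-range index, a `None` entry, a non-dict entry, and a missing `"chunks"` key. The loop then skips any chunk that is not a dict. The function below applies the same defensive pattern on its own. `normalize_chunks` is hypothetical, and it maps only the two fields visible in this excerpt. It also rejects negative indexes, which Python would otherwise count from the end of the list. That guard is an addition, not something the hunk does.

```python
def normalize_chunks(references, chunk_num):
    """Return simplified chunks for references[chunk_num], or [] if the entry is unusable."""
    if not (
        0 <= chunk_num < len(references)
        and isinstance(references[chunk_num], dict)  # also rules out None
        and "chunks" in references[chunk_num]
    ):
        return []
    chunk_list = []
    for chunk in references[chunk_num]["chunks"]:
        if not isinstance(chunk, dict):  # skip malformed entries instead of raising
            continue
        chunk_list.append({
            "id": chunk.get("chunk_id", chunk.get("id")),
            "content": chunk.get("content_with_weight", chunk.get("content")),
        })
    return chunk_list
```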

@@ -865,14 +880,7 @@ def begin_inputs(agent_id):
return get_error_data_result(f"Can't find agent by ID: {agent_id}")

canvas = Canvas(json.dumps(cvs.dsl), objs[0].tenant_id)
return get_result(
data={
"title": cvs.title,
"avatar": cvs.avatar,
"inputs": canvas.get_component_input_form("begin"),
"prologue": canvas.get_prologue()
}
)
return get_result(data={"title": cvs.title, "avatar": cvs.avatar, "inputs": canvas.get_component_input_form("begin"), "prologue": canvas.get_prologue(), "mode": canvas.get_mode()})


@manager.route("/searchbots/ask", methods=["POST"]) # noqa: F821

@@ -912,7 +920,7 @@ def ask_about_embedded():
return resp


@manager.route("/searchbots/retrieval_test", methods=['POST']) # noqa: F821
@manager.route("/searchbots/retrieval_test", methods=["POST"]) # noqa: F821
@validate_request("kb_id", "question")
def retrieval_test_embedded():
token = request.headers.get("Authorization").split()

@@ -942,18 +950,30 @@ def retrieval_test_embedded():
if not tenant_id:
return get_error_data_result(message="permission denined.")

if req.get("search_id", ""):
search_config = SearchService.get_detail(req.get("search_id", "")).get("search_config", {})
meta_data_filter = search_config.get("meta_data_filter", {})
metas = DocumentService.get_meta_by_kbs(kb_ids)
if meta_data_filter.get("method") == "auto":
chat_mdl = LLMBundle(tenant_id, LLMType.CHAT, llm_name=search_config.get("chat_id", ""))
filters = gen_meta_filter(chat_mdl, metas, question)
doc_ids.extend(meta_filter(metas, filters))
if not doc_ids:
doc_ids = None
elif meta_data_filter.get("method") == "manual":
doc_ids.extend(meta_filter(metas, meta_data_filter["manual"]))
if not doc_ids:
doc_ids = None
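When a `search_id` is supplied, the new block reads `meta_data_filter` from the search app's config. With `"auto"`, the chat model generates filters through `gen_meta_filter`. With `"manual"`, the stored conditions are used directly. In both cases an empty match sets `doc_ids` to `None`, which means no document restriction. The sketch below captures only this control flow, with the model call and the metadata matcher passed in as functions. `resolve_doc_ids`, `generate_filters` and `apply_filters` are hypothetical stand-ins and do not reproduce the signatures of RAGFlow's `gen_meta_filter` or `meta_filter`. The metadata layout in the test is also invented.

```python
from typing import Callable, List, Optional


def resolve_doc_ids(
    meta_data_filter: dict,
    metas: dict,
    question: str,
    generate_filters: Callable[[dict, str], list],
    apply_filters: Callable[[dict, list], List[str]],
    doc_ids: Optional[List[str]] = None,
) -> Optional[List[str]]:
    """Mirror the "auto"/"manual" branches of the hunk above."""
    method = meta_data_filter.get("method")
    if method not in ("auto", "manual"):
        return doc_ids  # no metadata filter configured: leave doc_ids unchanged
    if method == "auto":
        filters = generate_filters(metas, question)  # an LLM call in the real handler
    else:
        filters = meta_data_filter["manual"]
    matched = list(doc_ids or []) + list(apply_filters(metas, filters))
    return matched or None  # as in the hunk, an empty result becomes None
```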

try:
tenants = UserTenantService.query(user_id=tenant_id)
for kb_id in kb_ids:
for tenant in tenants:
if KnowledgebaseService.query(
tenant_id=tenant.tenant_id, id=kb_id):
if KnowledgebaseService.query(tenant_id=tenant.tenant_id, id=kb_id):
tenant_ids.append(tenant.tenant_id)
break
else:
return get_json_result(
data=False, message='Only owner of knowledgebase authorized for this operation.',
code=settings.RetCode.OPERATING_ERROR)
return get_json_result(data=False, message="Only owner of knowledgebase authorized for this operation.", code=settings.RetCode.OPERATING_ERROR)

e, kb = KnowledgebaseService.get_by_id(kb_ids[0])
if not e:
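The ownership check uses Python's `for ... else`: the `else` block runs only when the inner loop finishes without hitting `break`. Here that means none of the caller's tenants owns the knowledge base. The sketch below uses plain data in place of the `UserTenantService` and `KnowledgebaseService` queries. The `owners` mapping and the `authorized_tenant_ids` name are hypothetical.

```python
from typing import Dict, List, Optional, Set


def authorized_tenant_ids(
    kb_ids: List[str], user_tenants: List[str], owners: Dict[str, Set[str]]
) -> Optional[List[str]]:
    """Return the owning tenant for each KB, or None if some KB has no owner among user_tenants."""
    tenant_ids = []
    for kb_id in kb_ids:
        for tenant_id in user_tenants:
            if tenant_id in owners.get(kb_id, set()):
                tenant_ids.append(tenant_id)
                break
        else:
            # Inner loop never hit `break`: no joined tenant owns this knowledge base.
            return None
    return tenant_ids
```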

@@ -973,17 +993,11 @@ def retrieval_test_embedded():
question += keyword_extraction(chat_mdl, question)

labels = label_question(question, [kb])
ranks = settings.retrievaler.retrieval(question, embd_mdl, tenant_ids, kb_ids, page, size,
similarity_threshold, vector_similarity_weight, top,
doc_ids, rerank_mdl=rerank_mdl, highlight=req.get("highlight"),
rank_feature=labels
)
ranks = settings.retrievaler.retrieval(
question, embd_mdl, tenant_ids, kb_ids, page, size, similarity_threshold, vector_similarity_weight, top, doc_ids, rerank_mdl=rerank_mdl, highlight=req.get("highlight"), rank_feature=labels
)
if use_kg:
ck = settings.kg_retrievaler.retrieval(question,
tenant_ids,
kb_ids,
embd_mdl,
LLMBundle(kb.tenant_id, LLMType.CHAT))
ck = settings.kg_retrievaler.retrieval(question, tenant_ids, kb_ids, embd_mdl, LLMBundle(kb.tenant_id, LLMType.CHAT))
if ck["content_with_weight"]:
ranks["chunks"].insert(0, ck)

@@ -994,8 +1008,7 @@ def retrieval_test_embedded():
return get_json_result(data=ranks)
except Exception as e:
if str(e).find("not_found") > 0:
return get_json_result(data=False, message='No chunk found! Check the chunk status please!',
code=settings.RetCode.DATA_ERROR)
return get_json_result(data=False, message="No chunk found! Check the chunk status please!", code=settings.RetCode.DATA_ERROR)
return server_error_response(e)

@@ -155,8 +155,9 @@ def list_search_app():
owner_ids = req.get("owner_ids", [])
try:
if not owner_ids:
tenants = TenantService.get_joined_tenants_by_user_id(current_user.id)
tenants = [m["tenant_id"] for m in tenants]
# tenants = TenantService.get_joined_tenants_by_user_id(current_user.id)
# tenants = [m["tenant_id"] for m in tenants]
tenants = []
search_apps, total = SearchService.get_by_tenant_ids(tenants, current_user.id, page_number, items_per_page, orderby, desc, keywords)
else:
tenants = owner_ids

@@ -135,24 +135,6 @@ class UserCanvasService(CommonService):
return True


def structure_answer(conv, ans, message_id, session_id):
if not conv:
return ans
content = ""
if ans["event"] == "message":
if ans["data"].get("start_to_think") is True:
content = "<think>"
elif ans["data"].get("end_to_think") is True:
content = "</think>"
else:
content = ans["data"]["content"]

reference = ans["data"].get("reference")
result = {"id": message_id, "session_id": session_id, "answer": content}
if reference:
result["reference"] = [reference]
return result

def completion(tenant_id, agent_id, session_id=None, **kwargs):
query = kwargs.get("query", "") or kwargs.get("question", "")
files = kwargs.get("files", [])

@ -182,7 +164,8 @@ def completion(tenant_id, agent_id, session_id=None, **kwargs):

"user_id": user_id,
"message": [],
"source": "agent",
"dsl": cvs.dsl
"dsl": cvs.dsl,
"reference": []
}
API4ConversationService.save(**conv)
conv = API4Conversation(**conv)

@ -195,14 +178,13 @@ def completion(tenant_id, agent_id, session_id=None, **kwargs):

})
txt = ""
for ans in canvas.run(query=query, files=files, user_id=user_id, inputs=inputs):
ans = structure_answer(conv, ans, message_id, session_id)
txt += ans["answer"]
if ans.get("answer") or ans.get("reference"):
yield "data:" + json.dumps({"code": 0, "data": ans},
ensure_ascii=False) + "\n\n"
ans["session_id"] = session_id
if ans["event"] == "message":
txt += ans["data"]["content"]
yield "data:" + json.dumps(ans, ensure_ascii=False) + "\n\n"

conv.message.append({"role": "assistant", "content": txt, "created_at": time.time(), "id": message_id})
conv.reference.append(canvas.get_reference())
conv.reference = canvas.get_reference()
conv.errors = canvas.error
conv.dsl = str(canvas)
conv = conv.to_dict()

@ -231,9 +213,9 @@ def completionOpenAI(tenant_id, agent_id, question, session_id=None, stream=True

except Exception as e:
logging.exception(f"Agent OpenAI-Compatible completionOpenAI parse answer failed: {e}")
continue
if not ans["data"]["answer"]:
if ans.get("event") != "message" or not ans.get("data", {}).get("reference", None):
continue
content_piece = ans["data"]["answer"]
content_piece = ans["data"]["content"]
completion_tokens += len(tiktokenenc.encode(content_piece))

yield "data: " + json.dumps(

@ -278,9 +260,9 @@ def completionOpenAI(tenant_id, agent_id, question, session_id=None, stream=True

):
if isinstance(ans, str):
ans = json.loads(ans[5:])
if not ans["data"]["answer"]:
if ans.get("event") != "message" or not ans.get("data", {}).get("reference", None):
continue
all_content += ans["data"]["answer"]
all_content += ans["data"]["content"]

completion_tokens = len(tiktokenenc.encode(all_content))
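
In the `completion` generator above, each event is emitted as a server-sent-events frame (`"data:" + json.dumps(ans, ensure_ascii=False) + "\n\n"`). `completionOpenAI` decodes such frames with `json.loads(ans[5:])`. Below is a minimal round-trip sketch of that framing. It assumes a simplified event shape `{"event": ..., "data": {"content": ...}}`. `encode_sse` and `collect_message_content` are hypothetical helpers. The filter keeps every `message` event and does not reproduce the diff's `reference` condition.

```python
import json


def encode_sse(event: dict) -> str:
    """Frame one event like the diff's `completion`: "data:" + JSON + blank line."""
    return "data:" + json.dumps(event, ensure_ascii=False) + "\n\n"


def collect_message_content(frames: list[str]) -> str:
    """Concatenate the `content` of every `message` event in a list of frames."""
    text = ""
    for frame in frames:
        # Strip the 5-character "data:" prefix; json.loads ignores the trailing "\n\n".
        ans = json.loads(frame[5:])
        if ans.get("event") != "message":
            continue  # skip non-message events (the event name below is hypothetical)
        text += ans.get("data", {}).get("content", "")
    return text


frames = [
    encode_sse({"event": "message", "data": {"content": "Hel"}}),
    encode_sse({"event": "workflow_started", "data": {}}),
    encode_sse({"event": "message", "data": {"content": "lo"}}),
]
print(collect_message_content(frames))  # Hello
```

The prefix is sliced by length, so a frame written with `"data: "` (with a trailing space, as in the OpenAI-compatible path) still parses. This works because `json.loads` tolerates leading whitespace.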

@ -256,10 +256,10 @@ def repair_bad_citation_formats(answer: str, kbinfos: dict, idx: set):

def meta_filter(metas: dict, filters: list[dict]):
doc_ids = []
doc_ids = set([])

def filter_out(v2docs, operator, value):
nonlocal doc_ids
ids = []
for input, docids in v2docs.items():
try:
input = float(input)

@ -284,16 +284,24 @@ def meta_filter(metas: dict, filters: list[dict]):

]:
try:
if all(conds):
doc_ids.extend(docids)
ids.extend(docids)
break
except Exception:
pass
return ids

for k, v2docs in metas.items():
for f in filters:
if k != f["key"]:
continue
filter_out(v2docs, f["op"], f["value"])
return doc_ids
ids = filter_out(v2docs, f["op"], f["value"])
if not doc_ids:
doc_ids = set(ids)
else:
doc_ids = doc_ids & set(ids)
if not doc_ids:
return []
return list(doc_ids)


def chat(dialog, messages, stream=True, **kwargs):
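
The rewritten `meta_filter` above appears to change the semantics from accumulating matches across filters (a union via `doc_ids.extend`) to intersecting them (AND). Each filter yields its own set of document IDs, the running result is narrowed with `&`, and the function returns early once the result is empty. Below is a simplified, standalone sketch of that logic. It assumes `metas` maps a metadata key to `{value: [doc_ids]}` and supports only a hypothetical operator subset (`=`, `!=`, `>`, `<`). The diff's float coercion and full operator list are omitted.

```python
import operator

# Hypothetical operator subset; RAGFlow's real operator names may differ.
OPS = {"=": operator.eq, "!=": operator.ne, ">": operator.gt, "<": operator.lt}


def meta_filter_sketch(metas: dict, filters: list[dict]) -> list:
    """Return the IDs of documents that satisfy every applicable filter (AND)."""
    doc_ids = None  # None means no applicable filter has been evaluated yet
    for f in filters:
        v2docs = metas.get(f["key"])
        if v2docs is None:
            continue  # as in the diff, a filter on an unknown key is ignored
        cmp = OPS[f["op"]]
        ids = set()
        for value, docids in v2docs.items():
            try:
                if cmp(value, f["value"]):
                    ids.update(docids)
            except TypeError:
                pass  # incomparable types (e.g. str vs int) count as no match
        # Narrow the running result to documents that also matched this filter.
        doc_ids = ids if doc_ids is None else doc_ids & ids
        if not doc_ids:
            return []
    return sorted(doc_ids) if doc_ids else []


metas = {
    "author": {"alice": ["d1", "d2"], "bob": ["d3"]},
    "year": {2020: ["d1", "d3"], 2021: ["d2"]},
}
print(meta_filter_sketch(metas, [
    {"key": "author", "op": "=", "value": "alice"},
    {"key": "year", "op": "=", "value": 2020},
]))  # ['d1']
```

Sorting the result is only for deterministic output; the diff returns `list(doc_ids)` in set order.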

@ -152,7 +152,7 @@ class LLMBundle(LLM4Tenant):

def describe_with_prompt(self, image, prompt):
if self.langfuse:
generation = self.language.start_generation(trace_context=self.trace_context, name="describe_with_prompt", metadata={"model": self.llm_name, "prompt": prompt})
generation = self.langfuse.start_generation(trace_context=self.trace_context, name="describe_with_prompt", metadata={"model": self.llm_name, "prompt": prompt})

txt, used_tokens = self.mdl.describe_with_prompt(image, prompt)
if not TenantLLMService.increase_usage(self.tenant_id, self.llm_type, used_tokens):

@ -17,6 +17,7 @@ import asyncio

import functools
import json
import logging
import os
import queue
import random
import threading

@ -353,7 +354,7 @@ def get_parser_config(chunk_method, parser_config):

if not chunk_method:
chunk_method = "naive"

# Define default configurations for each chunk method
# Define default configurations for each chunking method
key_mapping = {
"naive": {"chunk_token_num": 512, "delimiter": r"\n", "html4excel": False, "layout_recognize": "DeepDOC", "raptor": {"use_raptor": False}, "graphrag": {"use_graphrag": False}},
"qa": {"raptor": {"use_raptor": False}, "graphrag": {"use_graphrag": False}},

@ -667,7 +668,10 @@ def timeout(seconds: float | int = None, attempts: int = 2, *, exception: Option

for a in range(attempts):
try:
result = result_queue.get(timeout=seconds)
if os.environ.get("ENABLE_TIMEOUT_ASSERTION"):
result = result_queue.get(timeout=seconds)
else:
result = result_queue.get()
if isinstance(result, Exception):
raise result
return result

@ -682,7 +686,10 @@ def timeout(seconds: float | int = None, attempts: int = 2, *, exception: Option

for a in range(attempts):
try:
with trio.fail_after(seconds):
if os.environ.get("ENABLE_TIMEOUT_ASSERTION"):
with trio.fail_after(seconds):
return await func(*args, **kwargs)
else:
return await func(*args, **kwargs)
except trio.TooSlowError:
if a < attempts - 1:
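
The two `timeout` hunks above make the deadline conditional. `result_queue.get(timeout=seconds)` and `trio.fail_after(seconds)` apply only when the `ENABLE_TIMEOUT_ASSERTION` environment variable is set; otherwise the call waits without a limit. Below is a sketch of the synchronous, thread-based variant under that reading. `timeout_sketch` is a hypothetical name, and the retry and error-forwarding details are assumptions rather than RAGFlow's exact code. The trio-based async path is not covered.

```python
import functools
import os
import queue
import threading


def timeout_sketch(seconds=None, attempts=2):
    """Hypothetical stand-in for the diff's `timeout` decorator (thread-based path only).

    The deadline is enforced only when ENABLE_TIMEOUT_ASSERTION is set;
    otherwise each call waits for its worker thread without a limit.
    """
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for a in range(attempts):
                result_queue = queue.Queue()

                # The queue is passed explicitly so that a late worker from an
                # earlier attempt cannot write into a later attempt's queue.
                def target(q):
                    try:
                        q.put(func(*args, **kwargs))
                    except Exception as e:  # forward the error to the caller
                        q.put(e)

                threading.Thread(target=target, args=(result_queue,), daemon=True).start()
                try:
                    if os.environ.get("ENABLE_TIMEOUT_ASSERTION"):
                        result = result_queue.get(timeout=seconds)
                    else:
                        result = result_queue.get()
                except queue.Empty:
                    if a < attempts - 1:
                        continue  # retry with a fresh worker thread
                    raise TimeoutError(f"{func.__name__} exceeded {seconds}s in {attempts} attempts")
                if isinstance(result, Exception):
                    raise result
                return result
        return wrapper
    return decorator


@timeout_sketch(seconds=1)
def add(a, b):
    return a + b


print(add(2, 3))  # 5
```

Gating the deadline behind an environment variable keeps long-running calls, such as large document parses, from being cut off by default. The bound can still be switched on where hangs must surface quickly.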

@ -532,23 +532,65 @@

"tags": "LLM,TEXT EMBEDDING,SPEECH2TEXT,MODERATION",
"status": "1",
"llm": [
{
"llm_name": "glm-4.5",
"tags": "LLM,CHAT,128K",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true
},
{
"llm_name": "glm-4.5-x",
"tags": "LLM,CHAT,128k",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true
},
{
"llm_name": "glm-4.5-air",
"tags": "LLM,CHAT,128K",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true
},
{
"llm_name": "glm-4.5-airx",
"tags": "LLM,CHAT,128k",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true
},
{
"llm_name": "glm-4.5-flash",
"tags": "LLM,CHAT,128k",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true
},
{
"llm_name": "glm-4.5v",
"tags": "LLM,IMAGE2TEXT,64,",
"max_tokens": 64000,
"model_type": "image2text",
"is_tools": false
},
{
"llm_name": "glm-4-plus",
"tags": "LLM,CHAT,",
"tags": "LLM,CHAT,128K",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true
},
{
"llm_name": "glm-4-0520",
"tags": "LLM,CHAT,",
"tags": "LLM,CHAT,128K",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true
},
{
"llm_name": "glm-4",
"tags": "LLM,CHAT,",
"tags":"LLM,CHAT,128K",
"max_tokens": 128000,
"model_type": "chat",
"is_tools": true

@ -15,35 +15,200 @@

# limitations under the License.
#

from rag.nlp import find_codec
import readability
import html_text
from rag.nlp import find_codec, rag_tokenizer
import uuid
import chardet

from bs4 import BeautifulSoup, NavigableString, Tag, Comment
import html

def get_encoding(file):
with open(file,'rb') as f:
tmp = chardet.detect(f.read())
return tmp['encoding']

BLOCK_TAGS = [
"h1", "h2", "h3", "h4", "h5", "h6",
"p", "div", "article", "section", "aside",
"ul", "ol", "li",
"table", "pre", "code", "blockquote",
"figure", "figcaption"
]
TITLE_TAGS = {"h1": "#", "h2": "##", "h3": "###", "h4": "#####", "h5": "#####", "h6": "######"}


class RAGFlowHtmlParser:
def __call__(self, fnm, binary=None):
def __call__(self, fnm, binary=None, chunk_token_num=None):
if binary:
encoding = find_codec(binary)
txt = binary.decode(encoding, errors="ignore")
else:
with open(fnm, "r",encoding=get_encoding(fnm)) as f:
txt = f.read()
return self.parser_txt(txt)
return self.parser_txt(txt, chunk_token_num)

@classmethod
def parser_txt(cls, txt):
def parser_txt(cls, txt, chunk_token_num):
if not isinstance(txt, str):
raise TypeError("txt type should be string!")
html_doc = readability.Document(txt)
title = html_doc.title()
content = html_text.extract_text(html_doc.summary(html_partial=True))
txt = f"{title}\n{content}"
sections = txt.split("\n")

temp_sections = []
soup = BeautifulSoup(txt, "html5lib")
# delete <style> tag
for style_tag in soup.find_all(["style", "script"]):
style_tag.decompose()
# delete <script> tag in <div>
for div_tag in soup.find_all("div"):
for script_tag in div_tag.find_all("script"):
script_tag.decompose()
# delete inline style
for tag in soup.find_all(True):
if 'style' in tag.attrs:
del tag.attrs['style']
# delete HTML comment
for comment in soup.find_all(string=lambda text: isinstance(text, Comment)):
comment.extract()

cls.read_text_recursively(soup.body, temp_sections, chunk_token_num=chunk_token_num)
block_txt_list, table_list = cls.merge_block_text(temp_sections)
sections = cls.chunk_block(block_txt_list, chunk_token_num=chunk_token_num)
for table in table_list:
sections.append(table.get("content", ""))
return sections

@classmethod
def split_table(cls, html_table, chunk_token_num=512):
soup = BeautifulSoup(html_table, "html.parser")
rows = soup.find_all("tr")
tables = []
current_table = []
current_count = 0
table_str_list = []
for row in rows:
tks_str = rag_tokenizer.tokenize(str(row))
token_count = len(tks_str.split(" ")) if tks_str else 0
if current_count + token_count > chunk_token_num:
tables.append(current_table)
current_table = []
current_count = 0
current_table.append(row)
current_count += token_count
if current_table:
tables.append(current_table)

for table_rows in tables:
new_table = soup.new_tag("table")
for row in table_rows:
new_table.append(row)
table_str_list.append(str(new_table))

return table_str_list

@classmethod
def read_text_recursively(cls, element, parser_result, chunk_token_num=512, parent_name=None, block_id=None):
if isinstance(element, NavigableString):
content = element.strip()

def is_valid_html(content):
try:
soup = BeautifulSoup(content, "html.parser")
return bool(soup.find())
except Exception:
return False

return_info = []
if content:
if is_valid_html(content):
soup = BeautifulSoup(content, "html.parser")
child_info = cls.read_text_recursively(soup, parser_result, chunk_token_num, element.name, block_id)
parser_result.extend(child_info)
else:
info = {"content": element.strip(), "tag_name": "inner_text", "metadata": {"block_id": block_id}}
if parent_name:
info["tag_name"] = parent_name
return_info.append(info)
return return_info
elif isinstance(element, Tag):

if str.lower(element.name) == "table":
table_info_list = []
table_id = str(uuid.uuid1())
table_list = [html.unescape(str(element))]
for t in table_list:
table_info_list.append({"content": t, "tag_name": "table",
"metadata": {"table_id": table_id, "index": table_list.index(t)}})
return table_info_list
else:
block_id = None
if str.lower(element.name) in BLOCK_TAGS:
block_id = str(uuid.uuid1())
for child in element.children:
child_info = cls.read_text_recursively(child, parser_result, chunk_token_num, element.name,
block_id)
parser_result.extend(child_info)
return []

@classmethod
def merge_block_text(cls, parser_result):
block_content = []
current_content = ""
table_info_list = []
lask_block_id = None
for item in parser_result:
content = item.get("content")
tag_name = item.get("tag_name")
title_flag = tag_name in TITLE_TAGS
block_id = item.get("metadata", {}).get("block_id")
if block_id:
if title_flag:
content = f"{TITLE_TAGS[tag_name]} {content}"
if lask_block_id != block_id:
if lask_block_id is not None:
block_content.append(current_content)
current_content = content
lask_block_id = block_id
else:
current_content += (" " if current_content else "") + content
else:
if tag_name == "table":
table_info_list.append(item)
else:
current_content += (" " if current_content else "" + content)
if current_content:
block_content.append(current_content)
return block_content, table_info_list

@classmethod
def chunk_block(cls, block_txt_list, chunk_token_num=512):
chunks = []
current_block = ""
current_token_count = 0

for block in block_txt_list:
tks_str = rag_tokenizer.tokenize(block)
block_token_count = len(tks_str.split(" ")) if tks_str else 0
if block_token_count > chunk_token_num:
if current_block:
chunks.append(current_block)
start = 0
tokens = tks_str.split(" ")
while start < len(tokens):
end = start + chunk_token_num
split_tokens = tokens[start:end]
chunks.append(" ".join(split_tokens))
start = end
current_block = ""
current_token_count = 0
else:
if current_token_count + block_token_count <= chunk_token_num:
current_block += ("\n" if current_block else "") + block
current_token_count += block_token_count
else:
chunks.append(current_block)
current_block = block
current_token_count = block_token_count

if current_block:
chunks.append(current_block)

return chunks
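
`chunk_block` above packs consecutive text blocks greedily into chunks of at most `chunk_token_num` tokens. It flushes the current chunk when the next block would overflow it, and hard-splits any single block that exceeds the budget. Below is a standalone sketch of that greedy packing. It assumes whitespace tokenization (`str.split`) in place of `rag_tokenizer.tokenize`, and `chunk_blocks` is a hypothetical name. As in the diff, the hard-split path rejoins tokens with single spaces, so it does not preserve the block's original spacing.

```python
def chunk_blocks(blocks: list[str], chunk_token_num: int = 512, tokenize=str.split) -> list[str]:
    """Greedily pack text blocks into chunks of at most `chunk_token_num` tokens."""
    chunks: list[str] = []
    current, count = "", 0
    for block in blocks:
        tokens = tokenize(block)
        n = len(tokens)
        if n > chunk_token_num:
            # Oversized block: flush what we have, then cut the block into fixed windows.
            if current:
                chunks.append(current)
            for start in range(0, n, chunk_token_num):
                chunks.append(" ".join(tokens[start:start + chunk_token_num]))
            current, count = "", 0
        elif count + n <= chunk_token_num:
            # Block fits: append it to the current chunk on a new line.
            current += ("\n" if current else "") + block
            count += n
        else:
            # Block does not fit: close the current chunk and start a new one.
            chunks.append(current)
            current, count = block, n
    if current:
        chunks.append(current)
    return chunks


print(chunk_blocks(["a b", "c d e", "f"], chunk_token_num=4))
# ['a b', 'c d e\nf']
```

Blocks are never split unless they alone exceed the budget. This keeps each HTML block (paragraph, list item, heading) intact inside one chunk whenever possible.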

@ -93,13 +93,13 @@ REDIS_PASSWORD=infini_rag_flow

SVR_HTTP_PORT=9380

# The RAGFlow Docker image to download.
# Defaults to the v0.20.1-slim edition, which is the RAGFlow Docker image without embedding models.
RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3-slim
# Defaults to the v0.20.4-slim edition, which is the RAGFlow Docker image without embedding models.
RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4-slim
#
# To download the RAGFlow Docker image with embedding models, uncomment the following line instead:
# RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.1
# RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4
#
# The Docker image of the v0.20.1 edition includes built-in embedding models:
# The Docker image of the v0.20.4 edition includes built-in embedding models:
# - BAAI/bge-large-zh-v1.5
# - maidalun1020/bce-embedding-base_v1
#

@ -79,8 +79,8 @@ The [.env](./.env) file contains important environment variables for Docker.

- `RAGFLOW-IMAGE`
The Docker image edition. Available editions:

- `infiniflow/ragflow:v0.20.3-slim` (default): The RAGFlow Docker image without embedding models.
- `infiniflow/ragflow:v0.20.3`: The RAGFlow Docker image with embedding models including:
- `infiniflow/ragflow:v0.20.4-slim` (default): The RAGFlow Docker image without embedding models.
- `infiniflow/ragflow:v0.20.4`: The RAGFlow Docker image with embedding models including:
- Built-in embedding models:
- `BAAI/bge-large-zh-v1.5`
- `maidalun1020/bce-embedding-base_v1`

@ -99,8 +99,8 @@ RAGFlow utilizes MinIO as its object storage solution, leveraging its scalabilit

|

||||

- `RAGFLOW-IMAGE`

|

||||

The Docker image edition. Available editions:

|

||||

|

||||

- `infiniflow/ragflow:v0.20.3-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.20.3`: The RAGFlow Docker image with embedding models including:

|

||||

- `infiniflow/ragflow:v0.20.4-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.20.4`: The RAGFlow Docker image with embedding models including:

|

||||

- Built-in embedding models:

|

||||

- `BAAI/bge-large-zh-v1.5`

|

||||

- `maidalun1020/bce-embedding-base_v1`

|

||||

|

||||

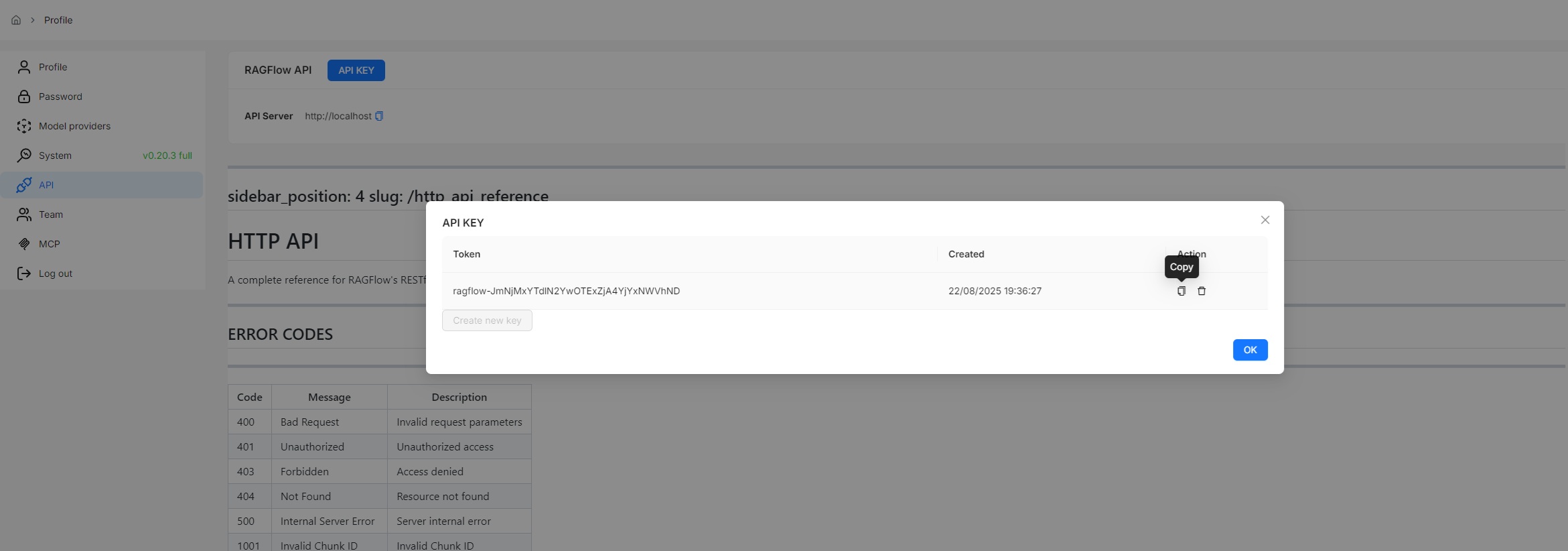

@ -11,7 +11,7 @@ An API key is required for the RAGFlow server to authenticate your HTTP/Python o

2. Click **API** to switch to the **API** page.
3. Obtain a RAGFlow API key:

:::tip NOTE
See the [RAGFlow HTTP API reference](../references/http_api_reference.md) or the [RAGFlow Python API reference](../references/python_api_reference.md) for a complete reference of RAGFlow's HTTP or Python APIs.

@ -77,7 +77,7 @@ After building the infiniflow/ragflow:nightly-slim image, you are ready to launc

1. Edit Docker Compose Configuration

Open the `docker/.env` file. Find the `RAGFLOW_IMAGE` setting and change the image reference from `infiniflow/ragflow:v0.20.3-slim` to `infiniflow/ragflow:nightly-slim` to use the pre-built image.
Open the `docker/.env` file. Find the `RAGFLOW_IMAGE` setting and change the image reference from `infiniflow/ragflow:v0.20.4-slim` to `infiniflow/ragflow:nightly-slim` to use the pre-built image.

2. Launch the Service

docs/faq.mdx

@ -30,17 +30,17 @@ The "garbage in garbage out" status quo remains unchanged despite the fact that

Each RAGFlow release is available in two editions:

- **Slim edition**: excludes built-in embedding models and is identified by a **-slim** suffix added to the version name. Example: `infiniflow/ragflow:v0.20.3-slim`
- **Full edition**: includes built-in embedding models and has no suffix added to the version name. Example: `infiniflow/ragflow:v0.20.3`
- **Slim edition**: excludes built-in embedding models and is identified by a **-slim** suffix added to the version name. Example: `infiniflow/ragflow:v0.20.4-slim`
- **Full edition**: includes built-in embedding models and has no suffix added to the version name. Example: `infiniflow/ragflow:v0.20.4`

---

### Which embedding models can be deployed locally?

RAGFlow offers two Docker image editions, `v0.20.3-slim` and `v0.20.3`:
RAGFlow offers two Docker image editions, `v0.20.4-slim` and `v0.20.4`:

- `infiniflow/ragflow:v0.20.3-slim` (default): The RAGFlow Docker image without embedding models.
- `infiniflow/ragflow:v0.20.3`: The RAGFlow Docker image with embedding models including:
- `infiniflow/ragflow:v0.20.4-slim` (default): The RAGFlow Docker image without embedding models.
- `infiniflow/ragflow:v0.20.4`: The RAGFlow Docker image with embedding models including:
- Built-in embedding models:
- `BAAI/bge-large-zh-v1.5`
- `maidalun1020/bce-embedding-base_v1`
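
The FAQ above distinguishes the two editions purely by the **-slim** suffix on the image tag. Below is a small sketch that applies that naming rule to an image reference such as the `RAGFLOW_IMAGE` value in `docker/.env`. `describe_image` is a hypothetical helper. It assumes the reference carries an explicit tag, as every example in these docs does.

```python
def describe_image(image: str) -> dict:
    """Split a `repo:tag` image reference and classify its RAGFlow edition."""
    # rpartition splits on the last ":", so a registry port such as
    # "host:5000/infiniflow/ragflow:v0.20.4" stays inside the repo part.
    repo, sep, tag = image.rpartition(":")
    if not sep or "/" in tag:
        raise ValueError(f"expected an explicit tag in {image!r}")
    slim = tag.endswith("-slim")
    return {
        "repo": repo,
        "version": tag[: -len("-slim")] if slim else tag,
        "edition": "slim" if slim else "full",
    }


for image in ("infiniflow/ragflow:v0.20.4-slim", "infiniflow/ragflow:v0.20.4"):
    print(describe_image(image))
# {'repo': 'infiniflow/ragflow', 'version': 'v0.20.4', 'edition': 'slim'}
# {'repo': 'infiniflow/ragflow', 'version': 'v0.20.4', 'edition': 'full'}
```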

@ -9,7 +9,7 @@ The component equipped with reasoning, tool usage, and multi-agent collaboration

---

An **Agent** component fine-tunes the LLM and sets its prompt. From v0.20.3 onwards, an **Agent** component is able to work independently and with the following capabilities:
An **Agent** component fine-tunes the LLM and sets its prompt. From v0.20.4 onwards, an **Agent** component is able to work independently and with the following capabilities:

- Autonomous reasoning with reflection and adjustment based on environmental feedback.
- Use of tools or subagents to complete tasks.

@ -9,7 +9,7 @@ A component that retrieves information from specified datasets.

## Scenarios

A **Retrieval** component is essential in most RAG scenarios, where information is extracted from designated knowledge bases before being sent to the LLM for content generation. As of v0.20.3, a **Retrieval** component can operate either as a workflow component or as a tool of an **Agent**, enabling the Agent to control its invocation and search queries.
A **Retrieval** component is essential in most RAG scenarios, where information is extracted from designated knowledge bases before being sent to the LLM for content generation. As of v0.20.4, a **Retrieval** component can operate either as a workflow component or as a tool of an **Agent**, enabling the Agent to control its invocation and search queries.

## Configurations

@ -48,7 +48,7 @@ You start an AI conversation by creating an assistant.

- If no target language is selected, the system will search only in the language of your query, which may cause relevant information in other languages to be missed.
- **Variable** refers to the variables (keys) to be used in the system prompt. `{knowledge}` is a reserved variable. Click **Add** to add more variables for the system prompt.
- If you are uncertain about the logic behind **Variable**, leave it *as-is*.
- As of v0.20.3, if you add custom variables here, the only way you can pass in their values is to call:
- As of v0.20.4, if you add custom variables here, the only way you can pass in their values is to call:
- HTTP method [Converse with chat assistant](../../references/http_api_reference.md#converse-with-chat-assistant), or
- Python method [Converse with chat assistant](../../references/python_api_reference.md#converse-with-chat-assistant).

@ -128,7 +128,7 @@ See [Run retrieval test](./run_retrieval_test.md) for details.

## Search for knowledge base

As of RAGFlow v0.20.3, the search feature is still in a rudimentary form, supporting only knowledge base search by name.
As of RAGFlow v0.20.4, the search feature is still in a rudimentary form, supporting only knowledge base search by name.

@ -87,4 +87,4 @@ RAGFlow's file management allows you to download an uploaded file:

> As of RAGFlow v0.20.3, bulk download is not supported, nor can you download an entire folder.
> As of RAGFlow v0.20.4, bulk download is not supported, nor can you download an entire folder.

@ -18,7 +18,7 @@ RAGFlow ships with a built-in [Langfuse](https://langfuse.com) integration so th

Langfuse stores traces, spans and prompt payloads in a purpose-built observability backend and offers filtering and visualisations on top.

:::info NOTE
• RAGFlow **≥ 0.20.3** (contains the Langfuse connector)
• RAGFlow **≥ 0.20.4** (contains the Langfuse connector)
• A Langfuse workspace (cloud or self-hosted) with a _Project Public Key_ and _Secret Key_
:::

@ -66,10 +66,10 @@ To upgrade RAGFlow, you must upgrade **both** your code **and** your Docker imag

git clone https://github.com/infiniflow/ragflow.git
```

2. Switch to the latest, officially published release, e.g., `v0.20.3`:
2. Switch to the latest, officially published release, e.g., `v0.20.4`:

```bash
git checkout -f v0.20.3
git checkout -f v0.20.4
```

3. Update **ragflow/docker/.env**:

@ -83,14 +83,14 @@ To upgrade RAGFlow, you must upgrade **both** your code **and** your Docker imag

<TabItem value="slim">

```bash
RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3-slim
RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4-slim
```

</TabItem>
<TabItem value="full">

```bash
RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.3
RAGFLOW_IMAGE=infiniflow/ragflow:v0.20.4
```

</TabItem>

@ -114,10 +114,10 @@ No, you do not need to. Upgrading RAGFlow in itself will *not* remove your uploa

1. From an environment with Internet access, pull the required Docker image.
2. Save the Docker image to a **.tar** file.
```bash
docker save -o ragflow.v0.20.3.tar infiniflow/ragflow:v0.20.3
docker save -o ragflow.v0.20.4.tar infiniflow/ragflow:v0.20.4
```
3. Copy the **.tar** file to the target server.
4. Load the **.tar** file into Docker:
```bash
docker load -i ragflow.v0.20.3.tar
docker load -i ragflow.v0.20.4.tar
```

@ -44,7 +44,7 @@ This section provides instructions on setting up the RAGFlow server on Linux. If

`vm.max_map_count`. This value sets the maximum number of memory map areas a process may have. Its default value is 65530. While most applications require fewer than a thousand maps, reducing this value can result in abnormal behaviors, and the system will throw out-of-memory errors when a process reaches the limitation.

RAGFlow v0.20.3 uses Elasticsearch or [Infinity](https://github.com/infiniflow/infinity) for multiple recall. Setting the value of `vm.max_map_count` correctly is crucial to the proper functioning of the Elasticsearch component.
RAGFlow v0.20.4 uses Elasticsearch or [Infinity](https://github.com/infiniflow/infinity) for multiple recall. Setting the value of `vm.max_map_count` correctly is crucial to the proper functioning of the Elasticsearch component.

<Tabs
defaultValue="linux"

@ -184,13 +184,13 @@ This section provides instructions on setting up the RAGFlow server on Linux. If

```bash
$ git clone https://github.com/infiniflow/ragflow.git
$ cd ragflow/docker
$ git checkout -f v0.20.3
$ git checkout -f v0.20.4
```

3. Use the pre-built Docker images and start up the server:

:::tip NOTE