Mirror of https://github.com/infiniflow/ragflow.git (synced 2026-01-04 03:25:30 +08:00)

Compare commits: 5b2e5dd334...v0.21.1 (46 commits)

| SHA1 |
|---|
| de24e74b4c |
| 83e80e3d7f |
| ea73f13ebf |
| af6eabad0e |
| 5fb5a51b2e |
| 37004ecfb3 |
| 6d333ec4bc |
| ac188b0486 |
| adeb9d87e2 |
| d121033208 |
| 494f84cd69 |
| f24d464a53 |
| 484c536f2e |
| f7112acd97 |
| de4f75dcd8 |
| 15fff5724e |
| d616354d66 |
| 1bad24e3ab |
| 4910146149 |
| 0e549e96ee |
| 318cb7d792 |
| 4d1255b231 |
| b30f0be858 |
| a82e9b3d91 |
| 02a452993e |
| 307cdc62ea |
| 2d491188b8 |
| acc0f7396e |
| 9a4cd81891 |
| 1694f32e8e |
| 41fade3fe6 |
| 8d333f3590 |
| cd77425b87 |
| 544c9990e3 |
| 41a647fe32 |
| 594bf485d4 |
| 863c3e3d9c |
| 1767039be3 |
| cd75fa02b1 |
| cfdd37820a |
| 9d12380806 |
| 866098634b |
| 8013505daf |
| deb81810e9 |
| 6ab96287c9 |
| aaa4776657 |

@ -22,7 +22,7 @@

|

||||

<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.0">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.1">

|

||||

</a>

|

||||

<a href="https://github.com/infiniflow/ragflow/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

|

||||

@ -187,7 +187,7 @@ releases! 🌟

|

||||

> All Docker images are built for x86 platforms. We don't currently offer Docker images for ARM64.

|

||||

> If you are on an ARM64 platform, follow [this guide](https://ragflow.io/docs/dev/build_docker_image) to build a Docker image compatible with your system.

|

||||

|

||||

> The command below downloads the `v0.21.0-slim` edition of the RAGFlow Docker image. See the following table for descriptions of different RAGFlow editions. To download a RAGFlow edition different from `v0.21.0-slim`, update the `RAGFLOW_IMAGE` variable accordingly in **docker/.env** before using `docker compose` to start the server. For example: set `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0` for the full edition `v0.21.0`.

|

||||

> The command below downloads the `v0.21.1-slim` edition of the RAGFlow Docker image. See the following table for descriptions of different RAGFlow editions. To download a RAGFlow edition different from `v0.21.1-slim`, update the `RAGFLOW_IMAGE` variable accordingly in **docker/.env** before using `docker compose` to start the server. For example: set `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1` for the full edition `v0.21.1`.

|

||||

|

||||

```bash

|

||||

$ cd ragflow/docker

|

||||

@ -200,8 +200,8 @@ releases! 🌟

|

||||

|

||||

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |

|

||||

|-------------------|-----------------|-----------------------|--------------------------|

|

||||

| v0.21.0 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.0-slim | ≈2 | ❌ | Stable release |

|

||||

| v0.21.1 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.1-slim | ≈2 | ❌ | Stable release |

|

||||

| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |

|

||||

| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@

|

||||

<img alt="Lencana Daring" src="https://img.shields.io/badge/Online-Demo-4e6b99">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.0">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.1">

|

||||

</a>

|

||||

<a href="https://github.com/infiniflow/ragflow/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Rilis%20Terbaru" alt="Rilis Terbaru">

|

||||

@ -181,7 +181,7 @@ Coba demo kami di [https://demo.ragflow.io](https://demo.ragflow.io).

|

||||

> Semua gambar Docker dibangun untuk platform x86. Saat ini, kami tidak menawarkan gambar Docker untuk ARM64.

|

||||

> Jika Anda menggunakan platform ARM64, [silakan gunakan panduan ini untuk membangun gambar Docker yang kompatibel dengan sistem Anda](https://ragflow.io/docs/dev/build_docker_image).

|

||||

|

||||

> Perintah di bawah ini mengunduh edisi v0.21.0-slim dari gambar Docker RAGFlow. Silakan merujuk ke tabel berikut untuk deskripsi berbagai edisi RAGFlow. Untuk mengunduh edisi RAGFlow yang berbeda dari v0.21.0-slim, perbarui variabel RAGFLOW_IMAGE di docker/.env sebelum menggunakan docker compose untuk memulai server. Misalnya, atur RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0 untuk edisi lengkap v0.21.0.

|

||||

> Perintah di bawah ini mengunduh edisi v0.21.1-slim dari gambar Docker RAGFlow. Silakan merujuk ke tabel berikut untuk deskripsi berbagai edisi RAGFlow. Untuk mengunduh edisi RAGFlow yang berbeda dari v0.21.1-slim, perbarui variabel RAGFLOW_IMAGE di docker/.env sebelum menggunakan docker compose untuk memulai server. Misalnya, atur RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1 untuk edisi lengkap v0.21.1.

|

||||

|

||||

```bash

|

||||

$ cd ragflow/docker

|

||||

@ -194,8 +194,8 @@ $ docker compose -f docker-compose.yml up -d

|

||||

|

||||

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |

|

||||

| ----------------- | --------------- | --------------------- | ------------------------ |

|

||||

| v0.21.0 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.0-slim | ≈2 | ❌ | Stable release |

|

||||

| v0.21.1 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.1-slim | ≈2 | ❌ | Stable release |

|

||||

| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |

|

||||

| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@

|

||||

<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.0">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.1">

|

||||

</a>

|

||||

<a href="https://github.com/infiniflow/ragflow/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

|

||||

@ -160,7 +160,7 @@

|

||||

> 現在、公式に提供されているすべての Docker イメージは x86 アーキテクチャ向けにビルドされており、ARM64 用の Docker イメージは提供されていません。

|

||||

> ARM64 アーキテクチャのオペレーティングシステムを使用している場合は、[このドキュメント](https://ragflow.io/docs/dev/build_docker_image)を参照して Docker イメージを自分でビルドしてください。

|

||||

|

||||

> 以下のコマンドは、RAGFlow Docker イメージの v0.21.0-slim エディションをダウンロードします。異なる RAGFlow エディションの説明については、以下の表を参照してください。v0.21.0-slim とは異なるエディションをダウンロードするには、docker/.env ファイルの RAGFLOW_IMAGE 変数を適宜更新し、docker compose を使用してサーバーを起動してください。例えば、完全版 v0.21.0 をダウンロードするには、RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0 と設定します。

|

||||

> 以下のコマンドは、RAGFlow Docker イメージの v0.21.1-slim エディションをダウンロードします。異なる RAGFlow エディションの説明については、以下の表を参照してください。v0.21.1-slim とは異なるエディションをダウンロードするには、docker/.env ファイルの RAGFLOW_IMAGE 変数を適宜更新し、docker compose を使用してサーバーを起動してください。例えば、完全版 v0.21.1 をダウンロードするには、RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1 と設定します。

|

||||

|

||||

```bash

|

||||

$ cd ragflow/docker

|

||||

@ -173,8 +173,8 @@

|

||||

|

||||

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |

|

||||

| ----------------- | --------------- | --------------------- | ------------------------ |

|

||||

| v0.21.0 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.0-slim | ≈2 | ❌ | Stable release |

|

||||

| v0.21.1 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.1-slim | ≈2 | ❌ | Stable release |

|

||||

| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |

|

||||

| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@

|

||||

<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.0">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.1">

|

||||

</a>

|

||||

<a href="https://github.com/infiniflow/ragflow/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

|

||||

@ -160,7 +160,7 @@

|

||||

> 모든 Docker 이미지는 x86 플랫폼을 위해 빌드되었습니다. 우리는 현재 ARM64 플랫폼을 위한 Docker 이미지를 제공하지 않습니다.

|

||||

> ARM64 플랫폼을 사용 중이라면, [시스템과 호환되는 Docker 이미지를 빌드하려면 이 가이드를 사용해 주세요](https://ragflow.io/docs/dev/build_docker_image).

|

||||

|

||||

> 아래 명령어는 RAGFlow Docker 이미지의 v0.21.0-slim 버전을 다운로드합니다. 다양한 RAGFlow 버전에 대한 설명은 다음 표를 참조하십시오. v0.21.0-slim과 다른 RAGFlow 버전을 다운로드하려면, docker/.env 파일에서 RAGFLOW_IMAGE 변수를 적절히 업데이트한 후 docker compose를 사용하여 서버를 시작하십시오. 예를 들어, 전체 버전인 v0.21.0을 다운로드하려면 RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0로 설정합니다.

|

||||

> 아래 명령어는 RAGFlow Docker 이미지의 v0.21.1-slim 버전을 다운로드합니다. 다양한 RAGFlow 버전에 대한 설명은 다음 표를 참조하십시오. v0.21.1-slim과 다른 RAGFlow 버전을 다운로드하려면, docker/.env 파일에서 RAGFLOW_IMAGE 변수를 적절히 업데이트한 후 docker compose를 사용하여 서버를 시작하십시오. 예를 들어, 전체 버전인 v0.21.1을 다운로드하려면 RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1로 설정합니다.

|

||||

|

||||

```bash

|

||||

$ cd ragflow/docker

|

||||

@ -173,8 +173,8 @@

|

||||

|

||||

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |

|

||||

| ----------------- | --------------- | --------------------- | ------------------------ |

|

||||

| v0.21.0 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.0-slim | ≈2 | ❌ | Stable release |

|

||||

| v0.21.1 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.1-slim | ≈2 | ❌ | Stable release |

|

||||

| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |

|

||||

| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@

|

||||

<img alt="Badge Estático" src="https://img.shields.io/badge/Online-Demo-4e6b99">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.0">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.1">

|

||||

</a>

|

||||

<a href="https://github.com/infiniflow/ragflow/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Última%20Relese" alt="Última Versão">

|

||||

@ -180,7 +180,7 @@ Experimente nossa demo em [https://demo.ragflow.io](https://demo.ragflow.io).

|

||||

> Todas as imagens Docker são construídas para plataformas x86. Atualmente, não oferecemos imagens Docker para ARM64.

|

||||

> Se você estiver usando uma plataforma ARM64, por favor, utilize [este guia](https://ragflow.io/docs/dev/build_docker_image) para construir uma imagem Docker compatível com o seu sistema.

|

||||

|

||||

> O comando abaixo baixa a edição `v0.21.0-slim` da imagem Docker do RAGFlow. Consulte a tabela a seguir para descrições de diferentes edições do RAGFlow. Para baixar uma edição do RAGFlow diferente da `v0.21.0-slim`, atualize a variável `RAGFLOW_IMAGE` conforme necessário no **docker/.env** antes de usar `docker compose` para iniciar o servidor. Por exemplo: defina `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0` para a edição completa `v0.21.0`.

|

||||

> O comando abaixo baixa a edição `v0.21.1-slim` da imagem Docker do RAGFlow. Consulte a tabela a seguir para descrições de diferentes edições do RAGFlow. Para baixar uma edição do RAGFlow diferente da `v0.21.1-slim`, atualize a variável `RAGFLOW_IMAGE` conforme necessário no **docker/.env** antes de usar `docker compose` para iniciar o servidor. Por exemplo: defina `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1` para a edição completa `v0.21.1`.

|

||||

|

||||

```bash

|

||||

$ cd ragflow/docker

|

||||

@ -193,8 +193,8 @@ Experimente nossa demo em [https://demo.ragflow.io](https://demo.ragflow.io).

|

||||

|

||||

| Tag da imagem RAGFlow | Tamanho da imagem (GB) | Possui modelos de incorporação? | Estável? |

|

||||

| --------------------- | ---------------------- | ------------------------------- | ------------------------ |

|

||||

| v0.21.0 | ~9 | :heavy_check_mark: | Lançamento estável |

|

||||

| v0.21.0-slim | ~2 | ❌ | Lançamento estável |

|

||||

| v0.21.1 | ~9 | :heavy_check_mark: | Lançamento estável |

|

||||

| v0.21.1-slim | ~2 | ❌ | Lançamento estável |

|

||||

| nightly | ~9 | :heavy_check_mark: | _Instável_ build noturno |

|

||||

| nightly-slim | ~2 | ❌ | _Instável_ build noturno |

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@

|

||||

<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.0">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.1">

|

||||

</a>

|

||||

<a href="https://github.com/infiniflow/ragflow/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

|

||||

@ -183,7 +183,7 @@

|

||||

> 所有 Docker 映像檔都是為 x86 平台建置的。目前,我們不提供 ARM64 平台的 Docker 映像檔。

|

||||

> 如果您使用的是 ARM64 平台,請使用 [這份指南](https://ragflow.io/docs/dev/build_docker_image) 來建置適合您系統的 Docker 映像檔。

|

||||

|

||||

> 執行以下指令會自動下載 RAGFlow slim Docker 映像 `v0.21.0-slim`。請參考下表查看不同 Docker 發行版的說明。如需下載不同於 `v0.21.0-slim` 的 Docker 映像,請在執行 `docker compose` 啟動服務之前先更新 **docker/.env** 檔案內的 `RAGFLOW_IMAGE` 變數。例如,你可以透過設定 `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0` 來下載 RAGFlow 鏡像的 `v0.21.0` 完整發行版。

|

||||

> 執行以下指令會自動下載 RAGFlow slim Docker 映像 `v0.21.1-slim`。請參考下表查看不同 Docker 發行版的說明。如需下載不同於 `v0.21.1-slim` 的 Docker 映像,請在執行 `docker compose` 啟動服務之前先更新 **docker/.env** 檔案內的 `RAGFLOW_IMAGE` 變數。例如,你可以透過設定 `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1` 來下載 RAGFlow 鏡像的 `v0.21.1` 完整發行版。

|

||||

|

||||

```bash

|

||||

$ cd ragflow/docker

|

||||

@ -196,8 +196,8 @@

|

||||

|

||||

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |

|

||||

| ----------------- | --------------- | --------------------- | ------------------------ |

|

||||

| v0.21.0 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.0-slim | ≈2 | ❌ | Stable release |

|

||||

| v0.21.1 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.1-slim | ≈2 | ❌ | Stable release |

|

||||

| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |

|

||||

| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@

|

||||

<img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">

|

||||

</a>

|

||||

<a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.0">

|

||||

<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.21.1">

|

||||

</a>

|

||||

<a href="https://github.com/infiniflow/ragflow/releases/latest">

|

||||

<img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

|

||||

@ -183,7 +183,7 @@

|

||||

> 请注意,目前官方提供的所有 Docker 镜像均基于 x86 架构构建,并不提供基于 ARM64 的 Docker 镜像。

|

||||

> 如果你的操作系统是 ARM64 架构,请参考[这篇文档](https://ragflow.io/docs/dev/build_docker_image)自行构建 Docker 镜像。

|

||||

|

||||

> 运行以下命令会自动下载 RAGFlow slim Docker 镜像 `v0.21.0-slim`。请参考下表查看不同 Docker 发行版的描述。如需下载不同于 `v0.21.0-slim` 的 Docker 镜像,请在运行 `docker compose` 启动服务之前先更新 **docker/.env** 文件内的 `RAGFLOW_IMAGE` 变量。比如,你可以通过设置 `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0` 来下载 RAGFlow 镜像的 `v0.21.0` 完整发行版。

|

||||

> 运行以下命令会自动下载 RAGFlow slim Docker 镜像 `v0.21.1-slim`。请参考下表查看不同 Docker 发行版的描述。如需下载不同于 `v0.21.1-slim` 的 Docker 镜像,请在运行 `docker compose` 启动服务之前先更新 **docker/.env** 文件内的 `RAGFLOW_IMAGE` 变量。比如,你可以通过设置 `RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1` 来下载 RAGFlow 镜像的 `v0.21.1` 完整发行版。

|

||||

|

||||

```bash

|

||||

$ cd ragflow/docker

|

||||

@ -196,8 +196,8 @@

|

||||

|

||||

| RAGFlow image tag | Image size (GB) | Has embedding models? | Stable? |

|

||||

| ----------------- | --------------- | --------------------- | ------------------------ |

|

||||

| v0.21.0 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.0-slim | ≈2 | ❌ | Stable release |

|

||||

| v0.21.1 | ≈9 | :heavy_check_mark: | Stable release |

|

||||

| v0.21.1-slim | ≈2 | ❌ | Stable release |

|

||||

| nightly | ≈9 | :heavy_check_mark: | _Unstable_ nightly build |

|

||||

| nightly-slim | ≈2 | ❌ | _Unstable_ nightly build |

|

||||

|

||||

|

||||

@ -48,7 +48,7 @@ It consists of a server-side Service and a command-line client (CLI), both imple

|

||||

1. Ensure the Admin Service is running.

|

||||

2. Install ragflow-cli.

|

||||

```bash

|

||||

pip install ragflow-cli==0.21.0

|

||||

pip install ragflow-cli==0.21.1

|

||||

```

|

||||

3. Launch the CLI client:

|

||||

```bash

|

||||

|

||||

@ -370,7 +370,7 @@ class AdminCLI(Cmd):

|

||||

self.session.headers.update({

|

||||

'Content-Type': 'application/json',

|

||||

'Authorization': response.headers['Authorization'],

|

||||

'User-Agent': 'RAGFlow-CLI/0.21.0'

|

||||

'User-Agent': 'RAGFlow-CLI/0.21.1'

|

||||

})

|

||||

print("Authentication successful.")

|

||||

return True

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

[project]

|

||||

name = "ragflow-cli"

|

||||

version = "0.21.0"

|

||||

version = "0.21.1"

|

||||

description = "Admin Service's client of [RAGFlow](https://github.com/infiniflow/ragflow). The Admin Service provides user management and system monitoring. "

|

||||

authors = [{ name = "Lynn", email = "lynn_inf@hotmail.com" }]

|

||||

license = { text = "Apache License, Version 2.0" }

|

||||

|

||||

@ -1,24 +0,0 @@

|

||||

[project]

|

||||

name = "ragflow-cli"

|

||||

version = "0.21.0.dev2"

|

||||

description = "Admin Service's client of [RAGFlow](https://github.com/infiniflow/ragflow). The Admin Service provides user management and system monitoring. "

|

||||

authors = [{ name = "Lynn", email = "lynn_inf@hotmail.com" }]

|

||||

license = { text = "Apache License, Version 2.0" }

|

||||

readme = "README.md"

|

||||

requires-python = ">=3.10,<3.13"

|

||||

dependencies = [

|

||||

"requests>=2.30.0,<3.0.0",

|

||||

"beartype>=0.18.5,<0.19.0",

|

||||

"pycryptodomex>=3.10.0",

|

||||

"lark>=1.1.0",

|

||||

]

|

||||

|

||||

[dependency-groups]

|

||||

test = [

|

||||

"pytest>=8.3.5",

|

||||

"requests>=2.32.3",

|

||||

"requests-toolbelt>=1.0.0",

|

||||

]

|

||||

|

||||

[project.scripts]

|

||||

ragflow-cli = "ragflow_cli.admin_client:main"

|

||||

@ -32,6 +32,7 @@ from api.utils.crypt import decrypt

|

||||

from api.utils import (

|

||||

current_timestamp,

|

||||

datetime_format,

|

||||

get_format_time,

|

||||

get_uuid,

|

||||

)

|

||||

from api.utils.api_utils import (

|

||||

@ -131,6 +132,7 @@ def login_admin(email: str, password: str):

|

||||

login_user(user)

|

||||

user.update_time = (current_timestamp(),)

|

||||

user.update_date = (datetime_format(datetime.now()),)

|

||||

user.last_login_time = get_format_time()

|

||||

user.save()

|

||||

msg = "Welcome back!"

|

||||

return construct_response(data=resp, auth=user.get_id(), message=msg)

|

||||

|

||||

@@ -32,18 +32,24 @@ admin_bp = Blueprint('admin', __name__, url_prefix='/api/v1/admin')
 def login():
     if not request.json:
         return error_response('Authorize admin failed.' ,400)
-    email = request.json.get("email", "")
-    password = request.json.get("password", "")
-    return login_admin(email, password)
+    try:
+        email = request.json.get("email", "")
+        password = request.json.get("password", "")
+        return login_admin(email, password)
+    except Exception as e:
+        return error_response(str(e), 500)


 @admin_bp.route('/logout', methods=['GET'])
 @login_required
 def logout():
-    current_user.access_token = f"INVALID_{secrets.token_hex(16)}"
-    current_user.save()
-    logout_user()
-    return success_response(True)
+    try:
+        current_user.access_token = f"INVALID_{secrets.token_hex(16)}"
+        current_user.save()
+        logout_user()
+        return success_response(True)
+    except Exception as e:
+        return error_response(str(e), 500)


 @admin_bp.route('/auth', methods=['GET'])

|

||||

|

||||

@@ -36,8 +36,13 @@ class UserMgr:
         users = UserService.get_all_users()
         result = []
         for user in users:
-            result.append({'email': user.email, 'nickname': user.nickname, 'create_date': user.create_date,
-                           'is_active': user.is_active})
+            result.append({
+                'email': user.email,
+                'nickname': user.nickname,
+                'create_date': user.create_date,
+                'is_active': user.is_active,
+                'is_superuser': user.is_superuser,
+            })
         return result

     @staticmethod

|

||||

@ -50,7 +55,6 @@ class UserMgr:

|

||||

'email': user.email,

|

||||

'language': user.language,

|

||||

'last_login_time': user.last_login_time,

|

||||

'is_authenticated': user.is_authenticated,

|

||||

'is_active': user.is_active,

|

||||

'is_anonymous': user.is_anonymous,

|

||||

'login_channel': user.login_channel,

|

||||

@@ -166,7 +170,7 @@ class UserServiceMgr:
         return [{
             'title': r['title'],
             'permission': r['permission'],
-            'canvas_category': r['canvas_category'].split('-')[0]
+            'canvas_category': r['canvas_category'].split('_')[0]
         } for r in res]
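
The separator change in this hunk can be illustrated with a minimal sketch. It assumes category strings shaped like `agent_canvas` and `dataflow_canvas`; those sample values and the helper name `short_category` are assumptions for illustration, since the diff does not show the stored values.

```python
# Hypothetical sample values; the diff does not show the real category strings.
SAMPLE_CATEGORIES = ["agent_canvas", "dataflow_canvas"]


def short_category(category: str, sep: str) -> str:
    """Return the text before the first `sep`, or the whole string if `sep` is absent."""
    return category.split(sep)[0]


# Splitting on '-' leaves underscore-separated values untouched.
old = [short_category(c, "-") for c in SAMPLE_CATEGORIES]
# Splitting on '_' yields the short prefix.
new = [short_category(c, "_") for c in SAMPLE_CATEGORIES]

print(old)  # ['agent_canvas', 'dataflow_canvas']
print(new)  # ['agent', 'dataflow']
```

Under that assumption, `str.split` with a separator that never occurs returns a one-element list, so the old expression would have returned the full string unchanged.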

|

||||

|

||||

|

||||

|

||||

@ -350,7 +350,7 @@

|

||||

]

|

||||

},

|

||||

"label": "Tokenizer",

|

||||

"name": "Tokenizer"

|

||||

"name": "Indexer"

|

||||

},

|

||||

"dragging": false,

|

||||

"id": "Tokenizer:EightRocketsAppear",

|

||||

|

||||

@ -18,12 +18,14 @@ import re

|

||||

from abc import ABC

|

||||

from agent.tools.base import ToolParamBase, ToolBase, ToolMeta

|

||||

from api.db import LLMType

|

||||

from api.db.services.document_service import DocumentService

|

||||

from api.db.services.dialog_service import meta_filter

|

||||

from api.db.services.knowledgebase_service import KnowledgebaseService

|

||||

from api.db.services.llm_service import LLMBundle

|

||||

from api import settings

|

||||

from api.utils.api_utils import timeout

|

||||

from rag.app.tag import label_question

|

||||

from rag.prompts.generator import cross_languages, kb_prompt

|

||||

from rag.prompts.generator import cross_languages, kb_prompt, gen_meta_filter

|

||||

|

||||

|

||||

class RetrievalParam(ToolParamBase):

|

||||

@ -58,6 +60,7 @@ class RetrievalParam(ToolParamBase):

|

||||

self.use_kg = False

|

||||

self.cross_languages = []

|

||||

self.toc_enhance = False

|

||||

self.meta_data_filter={}

|

||||

|

||||

def check(self):

|

||||

self.check_decimal_float(self.similarity_threshold, "[Retrieval] Similarity threshold")

|

||||

@ -117,6 +120,21 @@ class Retrieval(ToolBase, ABC):

|

||||

vars = self.get_input_elements_from_text(kwargs["query"])

|

||||

vars = {k:o["value"] for k,o in vars.items()}

|

||||

query = self.string_format(kwargs["query"], vars)

|

||||

|

||||

doc_ids=[]

|

||||

if self._param.meta_data_filter!={}:

|

||||

metas = DocumentService.get_meta_by_kbs(kb_ids)

|

||||

if self._param.meta_data_filter.get("method") == "auto":

|

||||

chat_mdl = LLMBundle(self._canvas.get_tenant_id(), LLMType.CHAT)

|

||||

filters = gen_meta_filter(chat_mdl, metas, query)

|

||||

doc_ids.extend(meta_filter(metas, filters))

|

||||

if not doc_ids:

|

||||

doc_ids = None

|

||||

elif self._param.meta_data_filter.get("method") == "manual":

|

||||

doc_ids.extend(meta_filter(metas, self._param.meta_data_filter["manual"]))

|

||||

if not doc_ids:

|

||||

doc_ids = None

|

||||

|

||||

if self._param.cross_languages:

|

||||

query = cross_languages(kbs[0].tenant_id, None, query, self._param.cross_languages)
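
The branching added in this hunk can be sketched in isolation as follows. This is a simplified stand-in, not the project's code: `select_doc_ids`, `apply_equals_filters`, the shape of `metas` (key, then value, then list of document IDs) and the filter dictionaries are all hypothetical, and the LLM-backed `gen_meta_filter` call is replaced by a plain callable.

```python
from typing import Callable, Optional


def apply_equals_filters(metas: dict, filters: list) -> list:
    """Hypothetical stand-in for meta_filter: collect doc IDs whose metadata equals the filter value."""
    ids = []
    for f in filters:
        ids.extend(metas.get(f["key"], {}).get(f["value"], []))
    return ids


def select_doc_ids(
    meta_data_filter: dict,
    metas: dict,
    query: str,
    gen_filters: Callable[[dict, str], list],
) -> Optional[list]:
    """Mirror the hunk's control flow: [] when no filter is configured, None when a filter matches nothing."""
    doc_ids: list = []
    if meta_data_filter != {}:
        method = meta_data_filter.get("method")
        if method == "auto":
            # In the diff the filters come from an LLM; here any callable will do.
            doc_ids.extend(apply_equals_filters(metas, gen_filters(metas, query)))
            if not doc_ids:
                return None
        elif method == "manual":
            doc_ids.extend(apply_equals_filters(metas, meta_data_filter["manual"]))
            if not doc_ids:
                return None
    return doc_ids


metas = {"author": {"alice": ["d1", "d2"], "bob": ["d3"]}}
manual = {"method": "manual", "manual": [{"key": "author", "value": "alice"}]}
print(select_doc_ids(manual, metas, "any query", lambda m, q: []))  # ['d1', 'd2']
```

The sketch keeps the distinction the hunk makes between an empty list (no filter configured, or an unrecognised method) and `None` (a filter ran but matched nothing). How the retriever treats each value is not visible in this diff.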

|

||||

|

||||

@ -131,6 +149,7 @@ class Retrieval(ToolBase, ABC):

|

||||

self._param.top_n,

|

||||

self._param.similarity_threshold,

|

||||

1 - self._param.keywords_similarity_weight,

|

||||

doc_ids=doc_ids,

|

||||

aggs=False,

|

||||

rerank_mdl=rerank_mdl,

|

||||

rank_feature=label_question(query, kbs),

|

||||

|

||||

@ -45,7 +45,7 @@ from api.utils.api_utils import (

|

||||

from api.utils.file_utils import filename_type, get_project_base_directory, thumbnail

|

||||

from api.utils.web_utils import CONTENT_TYPE_MAP, html2pdf, is_valid_url

|

||||

from deepdoc.parser.html_parser import RAGFlowHtmlParser

|

||||

from rag.nlp import search

|

||||

from rag.nlp import search, rag_tokenizer

|

||||

from rag.utils.storage_factory import STORAGE_IMPL

|

||||

|

||||

|

||||

@ -524,6 +524,21 @@ def rename():

|

||||

e, file = FileService.get_by_id(informs[0].file_id)

|

||||

FileService.update_by_id(file.id, {"name": req["name"]})

|

||||

|

||||

tenant_id = DocumentService.get_tenant_id(req["doc_id"])

|

||||

title_tks = rag_tokenizer.tokenize(req["name"])

|

||||

es_body = {

|

||||

"docnm_kwd": req["name"],

|

||||

"title_tks": title_tks,

|

||||

"title_sm_tks": rag_tokenizer.fine_grained_tokenize(title_tks),

|

||||

}

|

||||

if settings.docStoreConn.indexExist(search.index_name(tenant_id), doc.kb_id):

|

||||

settings.docStoreConn.update(

|

||||

{"doc_id": req["doc_id"]},

|

||||

es_body,

|

||||

search.index_name(tenant_id),

|

||||

doc.kb_id,

|

||||

)

|

||||

|

||||

return get_json_result(data=True)

|

||||

except Exception as e:

|

||||

return server_error_response(e)

|

||||

|

||||

@ -70,6 +70,7 @@ def create():

|

||||

e, t = TenantService.get_by_id(current_user.id)

|

||||

if not e:

|

||||

return get_data_error_result(message="Tenant not found.")

|

||||

|

||||

req["parser_config"] = {

|

||||

"layout_recognize": "DeepDOC",

|

||||

"chunk_token_num": 512,

|

||||

@ -579,7 +580,7 @@ def run_graphrag():

|

||||

sample_document = documents[0]

|

||||

document_ids = [document["id"] for document in documents]

|

||||

|

||||

task_id = queue_raptor_o_graphrag_tasks(doc=sample_document, ty="graphrag", priority=0, fake_doc_id=GRAPH_RAPTOR_FAKE_DOC_ID, doc_ids=list(document_ids))

|

||||

task_id = queue_raptor_o_graphrag_tasks(sample_doc_id=sample_document, ty="graphrag", priority=0, fake_doc_id=GRAPH_RAPTOR_FAKE_DOC_ID, doc_ids=list(document_ids))

|

||||

|

||||

if not KnowledgebaseService.update_by_id(kb.id, {"graphrag_task_id": task_id}):

|

||||

logging.warning(f"Cannot save graphrag_task_id for kb {kb_id}")

|

||||

@ -648,7 +649,7 @@ def run_raptor():

|

||||

sample_document = documents[0]

|

||||

document_ids = [document["id"] for document in documents]

|

||||

|

||||

task_id = queue_raptor_o_graphrag_tasks(doc=sample_document, ty="raptor", priority=0, fake_doc_id=GRAPH_RAPTOR_FAKE_DOC_ID, doc_ids=list(document_ids))

|

||||

task_id = queue_raptor_o_graphrag_tasks(sample_doc_id=sample_document, ty="raptor", priority=0, fake_doc_id=GRAPH_RAPTOR_FAKE_DOC_ID, doc_ids=list(document_ids))

|

||||

|

||||

if not KnowledgebaseService.update_by_id(kb.id, {"raptor_task_id": task_id}):

|

||||

logging.warning(f"Cannot save raptor_task_id for kb {kb_id}")

|

||||

@ -717,7 +718,7 @@ def run_mindmap():

|

||||

sample_document = documents[0]

|

||||

document_ids = [document["id"] for document in documents]

|

||||

|

||||

task_id = queue_raptor_o_graphrag_tasks(doc=sample_document, ty="mindmap", priority=0, fake_doc_id=GRAPH_RAPTOR_FAKE_DOC_ID, doc_ids=list(document_ids))

|

||||

task_id = queue_raptor_o_graphrag_tasks(sample_doc_id=sample_document, ty="mindmap", priority=0, fake_doc_id=GRAPH_RAPTOR_FAKE_DOC_ID, doc_ids=list(document_ids))

|

||||

|

||||

if not KnowledgebaseService.update_by_id(kb.id, {"mindmap_task_id": task_id}):

|

||||

logging.warning(f"Cannot save mindmap_task_id for kb {kb_id}")

|

||||

|

||||

@ -470,6 +470,20 @@ def list_docs(dataset_id, tenant_id):

|

||||

required: false

|

||||

default: 0

|

||||

description: Unix timestamp for filtering documents created before this time. 0 means no filter.

|

||||

- in: query

|

||||

name: suffix

|

||||

type: array

|

||||

items:

|

||||

type: string

|

||||

required: false

|

||||

description: Filter by file suffix (e.g., ["pdf", "txt", "docx"]).

|

||||

- in: query

|

||||

name: run

|

||||

type: array

|

||||

items:

|

||||

type: string

|

||||

required: false

|

||||

description: Filter by document run status. Supports both numeric ("0", "1", "2", "3", "4") and text formats ("UNSTART", "RUNNING", "CANCEL", "DONE", "FAIL").

|

||||

- in: header

|

||||

name: Authorization

|

||||

type: string

|

||||

@ -512,63 +526,62 @@ def list_docs(dataset_id, tenant_id):

|

||||

description: Processing status.

|

||||

"""

|

||||

if not KnowledgebaseService.accessible(kb_id=dataset_id, user_id=tenant_id):

|

||||

return get_error_data_result(message=f"You don't own the dataset {dataset_id}. ")

|

||||

id = request.args.get("id")

|

||||

name = request.args.get("name")

|

||||

return get_error_data_result(message=f"You don't own the dataset {dataset_id}. ")

|

||||

|

||||

if id and not DocumentService.query(id=id, kb_id=dataset_id):

|

||||

return get_error_data_result(message=f"You don't own the document {id}.")

|

||||

q = request.args

|

||||

document_id = q.get("id")

|

||||

name = q.get("name")

|

||||

|

||||

if document_id and not DocumentService.query(id=document_id, kb_id=dataset_id):

|

||||

return get_error_data_result(message=f"You don't own the document {document_id}.")

|

||||

if name and not DocumentService.query(name=name, kb_id=dataset_id):

|

||||

return get_error_data_result(message=f"You don't own the document {name}.")

|

||||

|

||||

page = int(request.args.get("page", 1))

|

||||

keywords = request.args.get("keywords", "")

|

||||

page_size = int(request.args.get("page_size", 30))

|

||||

orderby = request.args.get("orderby", "create_time")

|

||||

if request.args.get("desc") == "False":

|

||||

desc = False

|

||||

else:

|

||||

desc = True

|

||||

docs, tol = DocumentService.get_list(dataset_id, page, page_size, orderby, desc, keywords, id, name)

|

||||

page = int(q.get("page", 1))

|

||||

page_size = int(q.get("page_size", 30))

|

||||

orderby = q.get("orderby", "create_time")

|

||||

desc = str(q.get("desc", "true")).strip().lower() != "false"

|

||||

keywords = q.get("keywords", "")

|

||||

|

||||

create_time_from = int(request.args.get("create_time_from", 0))

|

||||

create_time_to = int(request.args.get("create_time_to", 0))

|

||||

# filters - align with OpenAPI parameter names

|

||||

suffix = q.getlist("suffix")

|

||||

run_status = q.getlist("run")

|

||||

create_time_from = int(q.get("create_time_from", 0))

|

||||

create_time_to = int(q.get("create_time_to", 0))

|

||||

|

||||

# map run status (accept text or numeric) - align with API parameter

|

||||

run_status_text_to_numeric = {"UNSTART": "0", "RUNNING": "1", "CANCEL": "2", "DONE": "3", "FAIL": "4"}

|

||||

run_status_converted = [run_status_text_to_numeric.get(v, v) for v in run_status]

|

||||

|

||||

docs, total = DocumentService.get_list(

|

||||

dataset_id, page, page_size, orderby, desc, keywords, document_id, name, suffix, run_status_converted

|

||||

)

|

||||

|

||||

# time range filter (0 means no bound)

|

||||

if create_time_from or create_time_to:

|

||||

filtered_docs = []

|

||||

for doc in docs:

|

||||

doc_create_time = doc.get("create_time", 0)

|

||||

if (create_time_from == 0 or doc_create_time >= create_time_from) and (create_time_to == 0 or doc_create_time <= create_time_to):

|

||||

filtered_docs.append(doc)

|

||||

docs = filtered_docs

|

||||

docs = [

|

||||

d for d in docs

|

||||

if (create_time_from == 0 or d.get("create_time", 0) >= create_time_from)

|

||||

and (create_time_to == 0 or d.get("create_time", 0) <= create_time_to)

|

||||

]

|

||||

|

||||

# rename key's name

|

||||

renamed_doc_list = []

|

||||

# rename keys + map run status back to text for output

|

||||

key_mapping = {

|

||||

"chunk_num": "chunk_count",

|

||||

"kb_id": "dataset_id",

|

||||

"kb_id": "dataset_id",

|

||||

"token_num": "token_count",

|

||||

"parser_id": "chunk_method",

|

||||

}

|

||||

run_mapping = {

|

||||

"0": "UNSTART",

|

||||

"1": "RUNNING",

|

||||

"2": "CANCEL",

|

||||

"3": "DONE",

|

||||

"4": "FAIL",

|

||||

}

|

||||

for doc in docs:

|

||||

renamed_doc = {}

|

||||

for key, value in doc.items():

|

||||

if key == "run":

|

||||

renamed_doc["run"] = run_mapping.get(str(value))

|

||||

new_key = key_mapping.get(key, key)

|

||||

renamed_doc[new_key] = value

|

||||

if key == "run":

|

||||

renamed_doc["run"] = run_mapping.get(value)

|

||||

renamed_doc_list.append(renamed_doc)

|

||||

return get_result(data={"total": tol, "docs": renamed_doc_list})

|

||||

run_status_numeric_to_text = {"0": "UNSTART", "1": "RUNNING", "2": "CANCEL", "3": "DONE", "4": "FAIL"}

|

||||

|

||||

output_docs = []

|

||||

for d in docs:

|

||||

renamed_doc = {key_mapping.get(k, k): v for k, v in d.items()}

|

||||

if "run" in d:

|

||||

renamed_doc["run"] = run_status_numeric_to_text.get(str(d["run"]), d["run"])

|

||||

output_docs.append(renamed_doc)

|

||||

|

||||

return get_result(data={"total": total, "docs": output_docs})

|

||||

|

||||

@manager.route("/datasets/<dataset_id>/documents", methods=["DELETE"]) # noqa: F821

|

||||

@token_required
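The query handling that the new `list_docs` lines introduce can be sketched as three small pure functions. This is a minimal illustration, not the project's code: the helper names `parse_desc`, `normalize_run`, and `in_time_range` are hypothetical, and only the mapping values and comparison rules are taken from the hunk above.

```python
# Status codes as they appear in the hunk; the dict names are hypothetical.
RUN_TEXT_TO_NUMERIC = {"UNSTART": "0", "RUNNING": "1", "CANCEL": "2", "DONE": "3", "FAIL": "4"}
RUN_NUMERIC_TO_TEXT = {code: text for text, code in RUN_TEXT_TO_NUMERIC.items()}


def parse_desc(raw):
    """Treat anything except a case-insensitive "false" as descending order."""
    return str(raw).strip().lower() != "false"


def normalize_run(values):
    """Map text statuses to numeric codes; numeric codes pass through unchanged."""
    return [RUN_TEXT_TO_NUMERIC.get(v, v) for v in values]


def in_time_range(doc, create_time_from=0, create_time_to=0):
    """Return True when the document is inside the bounds; 0 means no bound."""
    created = doc.get("create_time", 0)
    return (create_time_from == 0 or created >= create_time_from) and (
        create_time_to == 0 or created <= create_time_to
    )


docs = [{"create_time": 5, "run": "3"}, {"create_time": 50, "run": "1"}]
recent = [d for d in docs if in_time_range(d, create_time_from=10)]
```

As written in the hunk, the time-range filter runs on the page already returned by `DocumentService.get_list`, so the reported `total` does not appear to account for documents dropped by that filter.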

|

||||

|

||||

@ -22,7 +22,7 @@ import secrets

|

||||

import time

|

||||

from datetime import datetime

|

||||

|

||||

from flask import redirect, request, session, Response

|

||||

from flask import redirect, request, session, make_response

|

||||

from flask_login import current_user, login_required, login_user, logout_user

|

||||

from werkzeug.security import check_password_hash, generate_password_hash

|

||||

|

||||

@ -866,7 +866,9 @@ def forget_get_captcha():

|

||||

from captcha.image import ImageCaptcha

|

||||

image = ImageCaptcha(width=300, height=120, font_sizes=[50, 60, 70])

|

||||

img_bytes = image.generate(captcha_text).read()

|

||||

return Response(img_bytes, mimetype="image/png")

|

||||

response = make_response(img_bytes)

|

||||

response.headers.set("Content-Type", "image/JPEG")

|

||||

return response

|

||||

|

||||

|

||||

@manager.route("/forget/otp", methods=["POST"]) # noqa: F821

|

||||

|

||||

@ -79,7 +79,7 @@ class DocumentService(CommonService):

|

||||

@classmethod

|

||||

@DB.connection_context()

|

||||

def get_list(cls, kb_id, page_number, items_per_page,

|

||||

orderby, desc, keywords, id, name):

|

||||

orderby, desc, keywords, id, name, suffix=None, run = None):

|

||||

fields = cls.get_cls_model_fields()

|

||||

docs = cls.model.select(*[*fields, UserCanvas.title]).join(File2Document, on = (File2Document.document_id == cls.model.id))\

|

||||

.join(File, on = (File.id == File2Document.file_id))\

|

||||

@ -96,6 +96,10 @@ class DocumentService(CommonService):

|

||||

docs = docs.where(

|

||||

fn.LOWER(cls.model.name).contains(keywords.lower())

|

||||

)

|

||||

if suffix:

|

||||

docs = docs.where(cls.model.suffix.in_(suffix))

|

||||

if run:

|

||||

docs = docs.where(cls.model.run.in_(run))

|

||||

if desc:

|

||||

docs = docs.order_by(cls.model.getter_by(orderby).desc())

|

||||

else:

|

||||

@ -667,9 +671,11 @@ class DocumentService(CommonService):

|

||||

@classmethod

|

||||

@DB.connection_context()

|

||||

def _sync_progress(cls, docs:list[dict]):

|

||||

from api.db.services.task_service import TaskService

|

||||

|

||||

for d in docs:

|

||||

try:

|

||||

tsks = Task.query(doc_id=d["id"], order_by=Task.create_time)

|

||||

tsks = TaskService.query(doc_id=d["id"], order_by=Task.create_time)

|

||||

if not tsks:

|

||||

continue

|

||||

msg = []

|

||||

@ -787,21 +793,23 @@ class DocumentService(CommonService):

|

||||

"cancelled": int(cancelled),

|

||||

}

|

||||

|

||||

def queue_raptor_o_graphrag_tasks(doc, ty, priority, fake_doc_id="", doc_ids=[]):

|

||||

def queue_raptor_o_graphrag_tasks(sample_doc_id, ty, priority, fake_doc_id="", doc_ids=[]):

|

||||

"""

|

||||

You can provide a fake_doc_id to bypass the restriction of tasks at the knowledgebase level.

|

||||

Optionally, specify a list of doc_ids to determine which documents participate in the task.

|

||||

"""

|

||||

chunking_config = DocumentService.get_chunking_config(doc["id"])

|

||||

assert ty in ["graphrag", "raptor", "mindmap"], "type should be graphrag, raptor or mindmap"

|

||||

|

||||

chunking_config = DocumentService.get_chunking_config(sample_doc_id["id"])

|

||||

hasher = xxhash.xxh64()

|

||||

for field in sorted(chunking_config.keys()):

|

||||

hasher.update(str(chunking_config[field]).encode("utf-8"))

|

||||

|

||||

def new_task():

|

||||

nonlocal doc

|

||||

nonlocal sample_doc_id

|

||||

return {

|

||||

"id": get_uuid(),

|

||||

"doc_id": fake_doc_id if fake_doc_id else doc["id"],

|

||||

"doc_id": sample_doc_id["id"],

|

||||

"from_page": 100000000,

|

||||

"to_page": 100000000,

|

||||

"task_type": ty,

|

||||

@ -816,9 +824,9 @@ def queue_raptor_o_graphrag_tasks(doc, ty, priority, fake_doc_id="", doc_ids=[])

|

||||

task["digest"] = hasher.hexdigest()

|

||||

bulk_insert_into_db(Task, [task], True)

|

||||

|

||||

if ty in ["graphrag", "raptor", "mindmap"]:

|

||||

task["doc_ids"] = doc_ids

|

||||

DocumentService.begin2parse(doc["id"])

|

||||

task["doc_id"] = fake_doc_id

|

||||

task["doc_ids"] = doc_ids

|

||||

DocumentService.begin2parse(sample_doc_id["id"])

|

||||

assert REDIS_CONN.queue_product(get_svr_queue_name(priority), message=task), "Can't access Redis. Please check the Redis' status."

|

||||

return task["id"]
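The digest step in `queue_raptor_o_graphrag_tasks` hashes the chunking configuration in sorted key order so that the same configuration produces the same digest regardless of dict ordering. A minimal sketch of that idea follows, assuming `hashlib.blake2b` from the standard library as a stand-in for `xxhash.xxh64`; the function name `config_digest` is hypothetical.

```python
import hashlib


def config_digest(chunking_config):
    """Hash config values in sorted key order (blake2b stands in for xxh64)."""
    hasher = hashlib.blake2b(digest_size=8)
    for field in sorted(chunking_config.keys()):
        # Only the values are fed to the hasher, mirroring the hunk above.
        hasher.update(str(chunking_config[field]).encode("utf-8"))
    return hasher.hexdigest()


same = config_digest({"a": 1, "b": 2}) == config_digest({"b": 2, "a": 1})
```

Because only values are hashed and successive `update` calls behave like concatenation, two different configurations whose stringified values concatenate to the same bytes would share a digest in this sketch.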

|

||||

|

||||

|

||||

@ -210,19 +210,18 @@ class LLMBundle(LLM4Tenant):

|

||||

def _clean_param(chat_partial, **kwargs):

|

||||

func = chat_partial.func

|

||||

sig = inspect.signature(func)

|

||||

keyword_args = []

|

||||

support_var_args = False

|

||||

allowed_params = set()

|

||||

|

||||

for param in sig.parameters.values():

|

||||

if param.kind == inspect.Parameter.VAR_KEYWORD or param.kind == inspect.Parameter.VAR_POSITIONAL:

|

||||

if param.kind == inspect.Parameter.VAR_KEYWORD:

|

||||

support_var_args = True

|

||||

elif param.kind == inspect.Parameter.KEYWORD_ONLY:

|

||||

keyword_args.append(param.name)

|

||||

|

||||

use_kwargs = kwargs

|

||||

if not support_var_args:

|

||||

use_kwargs = {k: v for k, v in kwargs.items() if k in keyword_args}

|

||||

return use_kwargs

|

||||

|

||||

elif param.kind in (inspect.Parameter.POSITIONAL_OR_KEYWORD, inspect.Parameter.KEYWORD_ONLY):

|

||||

allowed_params.add(param.name)

|

||||

if support_var_args:

|

||||

return kwargs

|

||||

else:

|

||||

return {k: v for k, v in kwargs.items() if k in allowed_params}

|

||||

def chat(self, system: str, history: list, gen_conf: dict = {}, **kwargs) -> str:

|

||||

if self.langfuse:

|

||||

generation = self.langfuse.start_generation(trace_context=self.trace_context, name="chat", model=self.llm_name, input={"system": system, "history": history})
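The rewritten `_clean_param` keeps only the keyword arguments that the target function can accept, unless the function declares `**kwargs`. A self-contained sketch of that filtering is shown below. It takes a plain function rather than a `functools.partial`, and the names `clean_kwargs`, `strict_chat`, and `flexible_chat` are hypothetical.

```python
import inspect


def clean_kwargs(func, **kwargs):
    """Drop keyword arguments that func cannot accept."""
    allowed = set()
    for param in inspect.signature(func).parameters.values():
        if param.kind == inspect.Parameter.VAR_KEYWORD:
            # func declares **kwargs, so every keyword is acceptable.
            return kwargs
        if param.kind in (
            inspect.Parameter.POSITIONAL_OR_KEYWORD,
            inspect.Parameter.KEYWORD_ONLY,
        ):
            allowed.add(param.name)
    return {k: v for k, v in kwargs.items() if k in allowed}


def strict_chat(system, history, temperature=0.1):
    return system, history, temperature


def flexible_chat(system, history, **extra):
    return extra


filtered = clean_kwargs(strict_chat, temperature=0.5, images=[])
passed_through = clean_kwargs(flexible_chat, images=[1])
```

Compared with the removed version, which collected only keyword-only parameters, accepting `POSITIONAL_OR_KEYWORD` names means ordinary named parameters such as `temperature` above survive the filter.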

|

||||

|

||||

@ -173,7 +173,7 @@ def filename_type(filename):

|

||||

if re.match(r".*\.(wav|flac|ape|alac|wavpack|wv|mp3|aac|ogg|vorbis|opus)$", filename):

|

||||

return FileType.AURAL.value

|

||||

|

||||

if re.match(r".*\.(jpg|jpeg|png|tif|gif|pcx|tga|exif|fpx|svg|psd|cdr|pcd|dxf|ufo|eps|ai|raw|WMF|webp|avif|apng|icon|ico|mpg|mpeg|avi|rm|rmvb|mov|wmv|asf|dat|asx|wvx|mpe|mpa|mp4)$", filename):

|

||||

if re.match(r".*\.(jpg|jpeg|png|tif|gif|pcx|tga|exif|fpx|svg|psd|cdr|pcd|dxf|ufo|eps|ai|raw|WMF|webp|avif|apng|icon|ico|mpg|mpeg|avi|rm|rmvb|mov|wmv|asf|dat|asx|wvx|mpe|mpa|mp4|avi|mkv)$", filename):

|

||||

return FileType.VISUAL.value

|

||||

|

||||

return FileType.OTHER.value

|

||||

|

||||

@ -2987,7 +2987,7 @@

|

||||

"tags": "LLM,CHAT,IMAGE2TEXT,32k",

|

||||

"max_tokens": 32000,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

"is_tools": false

|

||||

},

|

||||

{

|

||||

"llm_name": "THUDM/GLM-Z1-32B-0414",

|

||||

|

||||

@ -54,8 +54,8 @@ class RAGFlowExcelParser:

|

||||

try:

|

||||

file_like_object.seek(0)

|

||||

try:

|

||||

df = pd.read_excel(file_like_object)

|

||||

return RAGFlowExcelParser._dataframe_to_workbook(df)

|

||||

dfs = pd.read_excel(file_like_object, sheet_name=None)

|

||||

return RAGFlowExcelParser._dataframe_to_workbook(dfs)

|

||||

except Exception as ex:

|

||||

logging.info(f"pandas with default engine load error: {ex}, try calamine instead")

|

||||

file_like_object.seek(0)

|

||||

@ -75,6 +75,10 @@ class RAGFlowExcelParser:

|

||||

|

||||

@staticmethod

|

||||

def _dataframe_to_workbook(df):

|

||||

# if contains multiple sheets use _dataframes_to_workbook

|

||||

if isinstance(df, dict) and len(df) > 1:

|

||||

return RAGFlowExcelParser._dataframes_to_workbook(df)

|

||||

|

||||

df = RAGFlowExcelParser._clean_dataframe(df)

|

||||

wb = Workbook()

|

||||

ws = wb.active

|

||||

@ -88,6 +92,22 @@ class RAGFlowExcelParser:

|

||||

ws.cell(row=row_num, column=col_num, value=value)

|

||||

|

||||

return wb

|

||||

|

||||

@staticmethod

|

||||

def _dataframes_to_workbook(dfs: dict):

|

||||

wb = Workbook()

|

||||

default_sheet = wb.active

|

||||

wb.remove(default_sheet)

|

||||

|

||||

for sheet_name, df in dfs.items():

|

||||

df = RAGFlowExcelParser._clean_dataframe(df)

|

||||

ws = wb.create_sheet(title=sheet_name)

|

||||

for col_num, column_name in enumerate(df.columns, 1):

|

||||

ws.cell(row=1, column=col_num, value=column_name)

|

||||

for row_num, row in enumerate(df.values, 2):

|

||||

for col_num, value in enumerate(row, 1):

|

||||

ws.cell(row=row_num, column=col_num, value=value)

|

||||

return wb

|

||||

|

||||

def html(self, fnm, chunk_rows=256):

|

||||

from html import escape

|

||||

|

||||

@ -17,6 +17,8 @@ from concurrent.futures import ThreadPoolExecutor, as_completed

|

||||

|

||||

from PIL import Image

|

||||

|

||||

from api.db import LLMType

|

||||

from api.db.services.llm_service import LLMBundle

|

||||

from api.utils.api_utils import timeout

|

||||

from rag.app.picture import vision_llm_chunk as picture_vision_llm_chunk

|

||||

from rag.prompts.generator import vision_llm_figure_describe_prompt

|

||||

@ -32,6 +34,43 @@ def vision_figure_parser_figure_data_wrapper(figures_data_without_positions):

|

||||

if isinstance(figure_data[1], Image.Image)

|

||||

]

|

||||

|

||||

def vision_figure_parser_docx_wrapper(sections,tbls,callback=None,**kwargs):

|

||||

try:

|

||||

vision_model = LLMBundle(kwargs["tenant_id"], LLMType.IMAGE2TEXT)

|

||||

callback(0.7, "Visual model detected. Attempting to enhance figure extraction...")

|

||||

except Exception:

|

||||

vision_model = None

|

||||

if vision_model:

|

||||

figures_data = vision_figure_parser_figure_data_wrapper(sections)

|

||||

try:

|

||||

docx_vision_parser = VisionFigureParser(vision_model=vision_model, figures_data=figures_data, **kwargs)

|

||||

boosted_figures = docx_vision_parser(callback=callback)

|

||||

tbls.extend(boosted_figures)

|

||||

except Exception as e:

|

||||

callback(0.8, f"Visual model error: {e}. Skipping figure parsing enhancement.")

|

||||

return tbls

|

||||

|

||||

def vision_figure_parser_pdf_wrapper(tbls,callback=None,**kwargs):

|

||||

try:

|

||||

vision_model = LLMBundle(kwargs["tenant_id"], LLMType.IMAGE2TEXT)

|

||||

callback(0.7, "Visual model detected. Attempting to enhance figure extraction...")

|

||||

except Exception:

|

||||

vision_model = None

|

||||

if vision_model:

|

||||

def is_figure_item(item):

|

||||

return (

|

||||

isinstance(item[0][0], Image.Image) and

|

||||

isinstance(item[0][1], list)

|

||||

)

|

||||

figures_data = [item for item in tbls if is_figure_item(item)]

|

||||

try:

|

||||

docx_vision_parser = VisionFigureParser(vision_model=vision_model, figures_data=figures_data, **kwargs)

|

||||

boosted_figures = docx_vision_parser(callback=callback)

|

||||

tbls = [item for item in tbls if not is_figure_item(item)]

|

||||

tbls.extend(boosted_figures)

|

||||

except Exception as e:

|

||||

callback(0.8, f"Visual model error: {e}. Skipping figure parsing enhancement.")

|

||||

return tbls

|

||||

|

||||

shared_executor = ThreadPoolExecutor(max_workers=10)

|

||||

|

||||

|

||||

@ -97,13 +97,13 @@ SVR_HTTP_PORT=9380

|

||||

ADMIN_SVR_HTTP_PORT=9381

|

||||

|

||||

# The RAGFlow Docker image to download.

|

||||

# Defaults to the v0.21.0-slim edition, which is the RAGFlow Docker image without embedding models.

|

||||

RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0-slim

|

||||

# Defaults to the v0.21.1-slim edition, which is the RAGFlow Docker image without embedding models.

|

||||

RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1-slim

|

||||

#

|

||||

# To download the RAGFlow Docker image with embedding models, uncomment the following line instead:

|

||||

# RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.0

|

||||

# RAGFLOW_IMAGE=infiniflow/ragflow:v0.21.1

|

||||

#

|

||||

# The Docker image of the v0.21.0 edition includes built-in embedding models:

|

||||

# The Docker image of the v0.21.1 edition includes built-in embedding models:

|

||||

# - BAAI/bge-large-zh-v1.5

|

||||

# - maidalun1020/bce-embedding-base_v1

|

||||

#

|

||||

|

||||

@ -79,8 +79,8 @@ The [.env](./.env) file contains important environment variables for Docker.

|

||||

- `RAGFLOW_IMAGE`

|

||||

The Docker image edition. Available editions:

|

||||

|

||||

- `infiniflow/ragflow:v0.21.0-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.21.0`: The RAGFlow Docker image with embedding models including:

|

||||

- `infiniflow/ragflow:v0.21.1-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.21.1`: The RAGFlow Docker image with embedding models including:

|

||||

- Built-in embedding models:

|

||||

- `BAAI/bge-large-zh-v1.5`

|

||||

- `maidalun1020/bce-embedding-base_v1`

|

||||

|

||||

@ -77,7 +77,7 @@ services:

|

||||

container_name: ragflow-infinity

|

||||

profiles:

|

||||

- infinity

|

||||

image: infiniflow/infinity:v0.6.0

|

||||

image: infiniflow/infinity:v0.6.1

|

||||

volumes:

|

||||

- infinity_data:/var/infinity

|

||||

- ./infinity_conf.toml:/infinity_conf.toml

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

[general]

|

||||

version = "0.6.0"

|

||||

version = "0.6.1"

|

||||

time_zone = "utc-8"

|

||||

|

||||

[network]

|

||||

|

||||

@ -99,8 +99,8 @@ RAGFlow utilizes MinIO as its object storage solution, leveraging its scalabilit

|

||||

- `RAGFLOW_IMAGE`

|

||||

The Docker image edition. Available editions:

|

||||

|

||||

- `infiniflow/ragflow:v0.21.0-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.21.0`: The RAGFlow Docker image with embedding models including:

|

||||

- `infiniflow/ragflow:v0.21.1-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.21.1`: The RAGFlow Docker image with embedding models including:

|

||||

- Built-in embedding models:

|

||||

- `BAAI/bge-large-zh-v1.5`

|

||||

- `maidalun1020/bce-embedding-base_v1`

|

||||

|

||||

@ -77,7 +77,7 @@ After building the infiniflow/ragflow:nightly-slim image, you are ready to launc

|

||||

|

||||

1. Edit Docker Compose Configuration

|

||||

|

||||

Open the `docker/.env` file. Find the `RAGFLOW_IMAGE` setting and change the image reference from `infiniflow/ragflow:v0.21.0-slim` to `infiniflow/ragflow:nightly-slim` to use the pre-built image.

|

||||

Open the `docker/.env` file. Find the `RAGFLOW_IMAGE` setting and change the image reference from `infiniflow/ragflow:v0.21.1-slim` to `infiniflow/ragflow:nightly-slim` to use the pre-built image.

|

||||

|

||||

|

||||

2. Launch the Service

|

||||

|

||||

34

docs/faq.mdx


@ -30,17 +30,17 @@ The "garbage in garbage out" status quo remains unchanged despite the fact that

|

||||

|

||||

Each RAGFlow release is available in two editions:

|

||||

|

||||

- **Slim edition**: excludes built-in embedding models and is identified by a **-slim** suffix added to the version name. Example: `infiniflow/ragflow:v0.21.0-slim`

|

||||

- **Full edition**: includes built-in embedding models and has no suffix added to the version name. Example: `infiniflow/ragflow:v0.21.0`

|

||||

- **Slim edition**: excludes built-in embedding models and is identified by a **-slim** suffix added to the version name. Example: `infiniflow/ragflow:v0.21.1-slim`

|

||||

- **Full edition**: includes built-in embedding models and has no suffix added to the version name. Example: `infiniflow/ragflow:v0.21.1`

|

||||

|

||||

---

|

||||

|

||||

### Which embedding models can be deployed locally?

|

||||

|

||||

RAGFlow offers two Docker image editions, `v0.21.0-slim` and `v0.21.0`:

|

||||

RAGFlow offers two Docker image editions, `v0.21.1-slim` and `v0.21.1`:

|

||||

|

||||

- `infiniflow/ragflow:v0.21.0-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.21.0`: The RAGFlow Docker image with the following built-in embedding models:

|

||||

- `infiniflow/ragflow:v0.21.1-slim` (default): The RAGFlow Docker image without embedding models.

|

||||

- `infiniflow/ragflow:v0.21.1`: The RAGFlow Docker image with the following built-in embedding models:

|

||||

- `BAAI/bge-large-zh-v1.5`

|

||||

- `maidalun1020/bce-embedding-base_v1`

|

||||

|

||||

@ -510,3 +510,27 @@ See [here](./guides/agent/best_practices/accelerate_agent_question_answering.md)

|

||||

|

||||

---

|

||||

|

||||

### How to use MinerU to parse PDF documents?

|

||||

|

||||

MinerU PDF document parsing is available starting from v0.21.1. To use this feature, follow these steps:

|

||||

|

||||

1. Before deploying ragflow-server, update your **docker/.env** file:

|

||||

- Enable `HF_ENDPOINT=https://hf-mirror.com`

|

||||

- Add a MinerU entry: `MINERU_EXECUTABLE=/ragflow/uv_tools/.venv/bin/mineru`

|

||||

|

||||

2. Start the ragflow-server and run the following commands inside the container:

|

||||

|

||||

```bash

|

||||

mkdir uv_tools

|

||||

cd uv_tools

|

||||

uv venv .venv

|

||||

source .venv/bin/activate

|

||||

uv pip install -U "mineru[core]" -i https://mirrors.aliyun.com/pypi/simple

|

||||

```

|

||||

|

||||

3. Restart the ragflow-server.

|

||||

4. In the web UI, navigate to the **Configuration** page of your dataset. Click **Built-in** in the **Ingestion pipeline** section, select a chunking method that supports PDF parsing from the **Built-in** dropdown, and select **MinerU** in **PDF parser**.

|

||||

5. If you use a custom ingestion pipeline instead, you must also complete the first three steps before selecting **MinerU** in the **Parsing method** section of the **Parser** component.
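Before restarting ragflow-server in step 3, it can help to confirm that the `MINERU_EXECUTABLE` entry from step 1 points at a file that exists and is executable inside the container. The check below is a hedged sketch: the function `mineru_executable_status` is hypothetical and is not part of RAGFlow or MinerU.

```python
import os


def mineru_executable_status(env=None):
    """Report whether MINERU_EXECUTABLE is set and points at an executable file."""
    env = os.environ if env is None else env
    path = env.get("MINERU_EXECUTABLE", "")
    if not path:
        return "unset"
    if not os.path.isfile(path):
        return "missing"
    if not os.access(path, os.X_OK):
        return "not executable"
    return "ok"


# Reads the variable from the current process environment by default.
status = mineru_executable_status()
```

A result other than `"ok"` suggests revisiting the **docker/.env** entry or the install commands in step 2.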

|

||||

|

||||

|

||||

|

||||

|

||||

40

docs/guides/agent/agent_component_reference/chunker_title.md

Normal file


@ -0,0 +1,40 @@

|

||||

---

|

||||

sidebar_position: 31

|

||||

slug: /chunker_title_component

|

||||

---

|

||||

|

||||

# Title chunker component

|

||||

|

||||

A component that splits texts into chunks by heading level.

|

||||

|

||||

---

|

||||

|

||||

A **Title chunker** component is a text splitter that uses the specified heading level as a delimiter to define chunk boundaries and create chunks.

|

||||

|

||||

## Scenario

|

||||

|

||||

A **Title chunker** component is optional, usually placed immediately after **Parser**.

|

||||

|

||||

:::caution WARNING

|

||||

Placing a **Title chunker** after a **Token chunker** is invalid and will cause an error. Please note that this restriction is not currently system-enforced and requires your attention.

|

||||

:::

|

||||

|

||||

## Configurations

|

||||

|

||||

### Hierarchy

|

||||

|

||||

Specifies the heading level to define chunk boundaries:

|

||||

|

||||

- H1

|

||||

- H2

|

||||

- H3 (Default)

|

||||

- H4

|

||||

|

||||

Click **+ Add** to add heading levels here or update the corresponding **Regular Expressions** fields for custom heading patterns.

|

||||

|

||||

### Output

|

||||

|

||||

The global variable name for the output of the **Title chunker** component, which can be referenced by subsequent components in the ingestion pipeline.

|

||||

|

||||

- Default: `chunks`

|

||||

- Type: `Array<Object>`
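The idea of using a heading level as the chunk boundary can be illustrated with a short sketch. It assumes Markdown-style `#` headings and treats every heading at or above the chosen level as a boundary. Both are assumptions made for illustration, since the component itself is configured through regular expressions, and the function name `split_by_heading` is hypothetical.

```python
import re


def split_by_heading(text, level=3):
    """Start a new chunk at each Markdown heading of depth 1..level."""
    # Matches lines that begin with 1 to `level` '#' characters and a space.
    heading = re.compile(r"^#{1,%d}\s" % level)
    chunks, current = [], []
    for line in text.splitlines():
        if heading.match(line) and current:
            chunks.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        chunks.append("\n".join(current))
    return chunks


sample = "# A\nintro\n## B\nbody\n#### C\ndeep"
chunks = split_by_heading(sample, level=3)
```

With `level=3`, the H4 heading in `sample` does not open a new chunk and stays with the section that precedes it.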

|

||||

43

docs/guides/agent/agent_component_reference/chunker_token.md

Normal file


@ -0,0 +1,43 @@

|

||||

---

|

||||

sidebar_position: 32

|

||||

slug: /chunker_token_component

|

||||

---

|

||||

|

||||

# Token chunker component

|

||||

|

||||

A component that splits texts into chunks, respecting a maximum token limit and using delimiters to find optimal breakpoints.

|

||||

|

||||

---

|

||||

|

||||

A **Token chunker** component is a text splitter that creates chunks by respecting a recommended maximum token length, using delimiters to ensure logical chunk breakpoints. It splits long texts into appropriately-sized, semantically related chunks.

|

||||

|

||||

|

||||

## Scenario

|

||||

|

||||

A **Token chunker** component is optional, usually placed immediately after **Parser** or **Title chunker**.

|

||||

|

||||

## Configurations

|

||||

|

||||

### Recommended chunk size

|

||||

|

||||

The recommended maximum token limit for each created chunk. The **Token chunker** component creates chunks at specified delimiters. If this token limit is reached before a delimiter, a chunk is created at that point.

|

||||

|

||||

### Overlapped percent (%)

|

||||

|

||||

This defines the overlap percentage between chunks. An appropriate degree of overlap ensures semantic coherence without creating excessive, redundant tokens for the LLM.

|

||||

|

||||

- Default: 0

|

||||

- Maximum: 30%

|

||||

|

||||

|

||||

### Delimiters

|

||||

|

||||

Defaults to `\n`. Click the right-hand **Recycle bin** button to remove it, or click **+ Add** to add a delimiter.

|

||||

|

||||

|

||||

### Output

|

||||

|

||||

The global variable name for the output of the **Token chunker** component, which can be referenced by subsequent components in the ingestion pipeline.

|

||||

|

||||

- Default: `chunks`

|

||||

- Type: `Array<Object>`
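How a recommended chunk size, a delimiter, and an overlap percentage interact can be sketched as follows. This is an illustration under stated assumptions rather than the component's algorithm: whitespace-separated words stand in for tokens, a single piece longer than the limit is kept whole, and the function name `token_chunks` is hypothetical.

```python
def token_chunks(text, chunk_size=8, overlap_percent=0, delimiter="\n"):
    """Merge delimiter-separated pieces into chunks of at most chunk_size words."""

    def count(s):
        # Whitespace-separated words stand in for real tokenizer output.
        return len(s.split())

    chunks, current = [], ""
    for piece in text.split(delimiter):
        if not piece.strip():
            continue
        candidate = piece if not current else current + delimiter + piece
        if current and count(candidate) > chunk_size:
            chunks.append(current)
            # Carry the tail of the finished chunk into the next one as overlap.
            keep = count(current) * overlap_percent // 100
            tail = " ".join(current.split()[-keep:]) if keep else ""
            current = tail + delimiter + piece if tail else piece
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


plain = token_chunks("a b c\nd e f\ng h i", chunk_size=6)
overlapped = token_chunks("a b c d e f g h i j\nk l", chunk_size=10, overlap_percent=30)
```

In this sketch the overlap words are added on top of the next piece, so an overlapped chunk can exceed the recommended size, which is one reason to keep the overlap small.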

|

||||

@ -48,7 +48,7 @@ You start an AI conversation by creating an assistant.

|

||||

- If no target language is selected, the system will search only in the language of your query, which may cause relevant information in other languages to be missed.

|

||||

- **Variable** refers to the variables (keys) to be used in the system prompt. `{knowledge}` is a reserved variable. Click **Add** to add more variables for the system prompt.

|

||||

- If you are uncertain about the logic behind **Variable**, leave it *as-is*.

|

||||

- As of v0.21.0, if you add custom variables here, the only way you can pass in their values is to call:

|

||||

- As of v0.21.1, if you add custom variables here, the only way you can pass in their values is to call:

|

||||

- HTTP method [Converse with chat assistant](../../references/http_api_reference.md#converse-with-chat-assistant), or

|

||||

- Python method [Converse with chat assistant](../../references/python_api_reference.md#converse-with-chat-assistant).

|

||||

|

||||

|

||||

@ -59,7 +59,7 @@ You can also change a file's chunking method on the **Files** page.

|

||||

|

||||

|

||||

<details>

|

||||

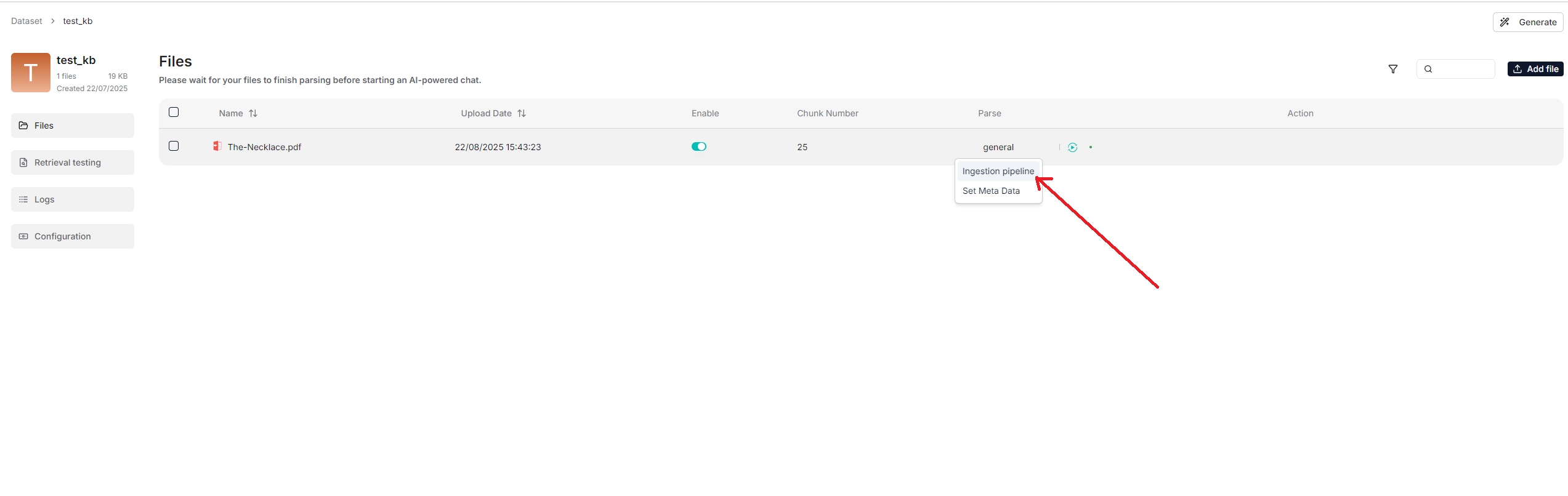

<summary>From v0.21.0 onward, RAGFlow supports ingestion pipeline for customized data ingestion and cleansing workflows.</summary>

|

||||

<summary>From v0.21.1 onward, RAGFlow supports ingestion pipeline for customized data ingestion and cleansing workflows.</summary>

|

||||

|

||||

To use a customized data pipeline:

|

||||

|

||||

@ -138,7 +138,7 @@ See [Run retrieval test](./run_retrieval_test.md) for details.

|

||||

|

||||

## Search for dataset

|

||||

|

||||

As of RAGFlow v0.21.0, the search feature is still in a rudimentary form, supporting only dataset search by name.

|

||||

As of RAGFlow v0.21.1, the search feature is still in a rudimentary form, supporting only dataset search by name.

|

||||

|

||||

|

||||

|

||||

|

||||

@ -35,8 +35,31 @@ RAGFlow isn't one-size-fits-all. It is built for flexibility and supports deeper

|

||||

|

||||

- DeepDoc: (Default) The default visual model performing OCR, TSR, and DLR tasks on PDFs, which can be time-consuming.

|

||||

- Naive: Skip OCR, TSR, and DLR tasks if *all* your PDFs are plain text.

|

||||

- MinerU: An experimental feature.

|

||||

- A third-party visual model provided by a specific model provider.

|

||||

|

||||

:::danger IMPORTANT

|

||||

MinerU PDF document parsing is available starting from v0.21.1. To use this feature, follow these steps:

|

||||

|

||||

1. Before deploying ragflow-server, update your **docker/.env** file:

|

||||

- Enable `HF_ENDPOINT=https://hf-mirror.com`

|

||||

- Add a MinerU entry: `MINERU_EXECUTABLE=/ragflow/uv_tools/.venv/bin/mineru`

|

||||

|

||||

2. Start the ragflow-server and run the following commands inside the container:

|

||||

|

||||

```bash

|

||||

mkdir uv_tools

|

||||

cd uv_tools

|

||||

uv venv .venv

|

||||

source .venv/bin/activate

|

||||

uv pip install -U "mineru[core]" -i https://mirrors.aliyun.com/pypi/simple

|

||||

```

|

||||

|

||||

3. Restart the ragflow-server.

|

||||

4. In the web UI, navigate to the **Configuration** page of your dataset. Click **Built-in** in the **Ingestion pipeline** section, select a chunking method that supports PDF parsing from the **Built-in** dropdown, and select **MinerU** in **PDF parser**.

|

||||

5. If you use a custom ingestion pipeline instead, you must also complete the first three steps before selecting **MinerU** in the **Parsing method** section of the **Parser** component.

|

||||

:::

|

||||