Mirror of https://github.com/infiniflow/ragflow.git, synced 2026-01-04 03:25:30 +08:00

Compare commits: 50bc53a1f5...v0.22.1 (21 commits)

| SHA1 |
|---|
| cfdccebb17 |
| 980a883033 |
| 02d429f0ca |
| 9c24d5d44a |
| 0cc5d7a8a6 |
| c43bf1dcf5 |
| f76b8279dd |
| db5ec89dc5 |
| 1c201c4d54 |
| ba78d0f0c2 |
| add8c63458 |
| 83661efdaf |
| 971197d595 |
| 0884e9a4d9 |
| 2de42f00b8 |
| e8fe580d7a |
| 62505164d5 |
| d1dcf3b43c |
| f84662d2ee |
| 1cb6b7f5dd |
| 023f509501 |

README.md (10 changes)

@@ -22,7 +22,7 @@
 <img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
 </a>
 <a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
-<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.0">
+<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.1">
 </a>
 <a href="https://github.com/infiniflow/ragflow/releases/latest">
 <img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -85,6 +85,7 @@ Try our demo at [https://demo.ragflow.io](https://demo.ragflow.io).
 
 ## 🔥 Latest Updates
 
+- 2025-11-19 Supports Gemini 3 Pro.
 - 2025-11-12 Supports data synchronization from Confluence, AWS S3, Discord, Google Drive.
 - 2025-10-23 Supports MinerU & Docling as document parsing methods.
 - 2025-10-15 Supports orchestrable ingestion pipeline.

@@ -93,8 +94,6 @@ Try our demo at [https://demo.ragflow.io](https://demo.ragflow.io).
 - 2025-05-23 Adds a Python/JavaScript code executor component to Agent.
 - 2025-05-05 Supports cross-language query.
 - 2025-03-19 Supports using a multi-modal model to make sense of images within PDF or DOCX files.
-- 2024-12-18 Upgrades Document Layout Analysis model in DeepDoc.
-- 2024-08-22 Support text to SQL statements through RAG.
 
 ## 🎉 Stay Tuned
 

@@ -188,12 +187,13 @@ releases! 🌟
 > All Docker images are built for x86 platforms. We don't currently offer Docker images for ARM64.
 > If you are on an ARM64 platform, follow [this guide](https://ragflow.io/docs/dev/build_docker_image) to build a Docker image compatible with your system.
 
-> The command below downloads the `v0.22.0` edition of the RAGFlow Docker image. See the following table for descriptions of different RAGFlow editions. To download a RAGFlow edition different from `v0.22.0`, update the `RAGFLOW_IMAGE` variable accordingly in **docker/.env** before using `docker compose` to start the server.
+> The command below downloads the `v0.22.1` edition of the RAGFlow Docker image. See the following table for descriptions of different RAGFlow editions. To download a RAGFlow edition different from `v0.22.1`, update the `RAGFLOW_IMAGE` variable accordingly in **docker/.env** before using `docker compose` to start the server.
 
 ```bash
 $ cd ragflow/docker
 
-# Optional: use a stable tag (see releases: https://github.com/infiniflow/ragflow/releases), e.g.: git checkout v0.22.0
+# git checkout v0.22.1
+# Optional: use a stable tag (see releases: https://github.com/infiniflow/ragflow/releases)
 # This steps ensures the **entrypoint.sh** file in the code matches the Docker image version.
 
 # Use CPU for DeepDoc tasks:

@@ -22,7 +22,7 @@
 <img alt="Lencana Daring" src="https://img.shields.io/badge/Online-Demo-4e6b99">
 </a>
 <a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
-<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.0">
+<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.1">
 </a>
 <a href="https://github.com/infiniflow/ragflow/releases/latest">
 <img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Rilis%20Terbaru" alt="Rilis Terbaru">

@@ -85,6 +85,7 @@ Coba demo kami di [https://demo.ragflow.io](https://demo.ragflow.io).
 
 ## 🔥 Pembaruan Terbaru
 
+- 2025-11-19 Mendukung Gemini 3 Pro.
 - 2025-11-12 Mendukung sinkronisasi data dari Confluence, AWS S3, Discord, Google Drive.
 - 2025-10-23 Mendukung MinerU & Docling sebagai metode penguraian dokumen.
 - 2025-10-15 Dukungan untuk jalur data yang terorkestrasi.

@@ -186,12 +187,13 @@ Coba demo kami di [https://demo.ragflow.io](https://demo.ragflow.io).
 > Semua gambar Docker dibangun untuk platform x86. Saat ini, kami tidak menawarkan gambar Docker untuk ARM64.
 > Jika Anda menggunakan platform ARM64, [silakan gunakan panduan ini untuk membangun gambar Docker yang kompatibel dengan sistem Anda](https://ragflow.io/docs/dev/build_docker_image).
 
-> Perintah di bawah ini mengunduh edisi v0.22.0 dari gambar Docker RAGFlow. Silakan merujuk ke tabel berikut untuk deskripsi berbagai edisi RAGFlow. Untuk mengunduh edisi RAGFlow yang berbeda dari v0.22.0, perbarui variabel RAGFLOW_IMAGE di docker/.env sebelum menggunakan docker compose untuk memulai server.
+> Perintah di bawah ini mengunduh edisi v0.22.1 dari gambar Docker RAGFlow. Silakan merujuk ke tabel berikut untuk deskripsi berbagai edisi RAGFlow. Untuk mengunduh edisi RAGFlow yang berbeda dari v0.22.1, perbarui variabel RAGFLOW_IMAGE di docker/.env sebelum menggunakan docker compose untuk memulai server.
 
 ```bash
 $ cd ragflow/docker
 
-# Opsional: gunakan tag stabil (lihat releases: https://github.com/infiniflow/ragflow/releases), contoh: git checkout v0.22.0
+# git checkout v0.22.1
+# Opsional: gunakan tag stabil (lihat releases: https://github.com/infiniflow/ragflow/releases)
 # This steps ensures the **entrypoint.sh** file in the code matches the Docker image version.
 
 # Use CPU for DeepDoc tasks:

@@ -22,7 +22,7 @@
 <img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
 </a>
 <a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
-<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.0">
+<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.1">
 </a>
 <a href="https://github.com/infiniflow/ragflow/releases/latest">
 <img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -66,6 +66,7 @@
 
 ## 🔥 最新情報
 
+- 2025-11-19 Gemini 3 Proをサポートしています
 - 2025-11-12 Confluence、AWS S3、Discord、Google Drive からのデータ同期をサポートします。
 - 2025-10-23 ドキュメント解析方法として MinerU と Docling をサポートします。
 - 2025-10-15 オーケストレーションされたデータパイプラインのサポート。

@@ -166,12 +167,13 @@
 > 現在、公式に提供されているすべての Docker イメージは x86 アーキテクチャ向けにビルドされており、ARM64 用の Docker イメージは提供されていません。
 > ARM64 アーキテクチャのオペレーティングシステムを使用している場合は、[このドキュメント](https://ragflow.io/docs/dev/build_docker_image)を参照して Docker イメージを自分でビルドしてください。
 
-> 以下のコマンドは、RAGFlow Docker イメージの v0.22.0 エディションをダウンロードします。異なる RAGFlow エディションの説明については、以下の表を参照してください。v0.22.0 とは異なるエディションをダウンロードするには、docker/.env ファイルの RAGFLOW_IMAGE 変数を適宜更新し、docker compose を使用してサーバーを起動してください。
+> 以下のコマンドは、RAGFlow Docker イメージの v0.22.1 エディションをダウンロードします。異なる RAGFlow エディションの説明については、以下の表を参照してください。v0.22.1 とは異なるエディションをダウンロードするには、docker/.env ファイルの RAGFLOW_IMAGE 変数を適宜更新し、docker compose を使用してサーバーを起動してください。
 
 ```bash
 $ cd ragflow/docker
 
-# 任意: 安定版タグを利用 (一覧: https://github.com/infiniflow/ragflow/releases) 例: git checkout v0.22.0
+# git checkout v0.22.1
+# 任意: 安定版タグを利用 (一覧: https://github.com/infiniflow/ragflow/releases)
 # この手順は、コード内の entrypoint.sh ファイルが Docker イメージのバージョンと一致していることを確認します。
 
 # Use CPU for DeepDoc tasks:

@@ -22,7 +22,7 @@
 <img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
 </a>
 <a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
-<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.0">
+<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.1">
 </a>
 <a href="https://github.com/infiniflow/ragflow/releases/latest">
 <img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -67,6 +67,7 @@
 
 ## 🔥 업데이트
 
+- 2025-11-19 Gemini 3 Pro를 지원합니다.
 - 2025-11-12 Confluence, AWS S3, Discord, Google Drive에서 데이터 동기화를 지원합니다.
 - 2025-10-23 문서 파싱 방법으로 MinerU 및 Docling을 지원합니다.
 - 2025-10-15 조정된 데이터 파이프라인 지원.

@@ -168,12 +169,13 @@
 > 모든 Docker 이미지는 x86 플랫폼을 위해 빌드되었습니다. 우리는 현재 ARM64 플랫폼을 위한 Docker 이미지를 제공하지 않습니다.
 > ARM64 플랫폼을 사용 중이라면, [시스템과 호환되는 Docker 이미지를 빌드하려면 이 가이드를 사용해 주세요](https://ragflow.io/docs/dev/build_docker_image).
 
-> 아래 명령어는 RAGFlow Docker 이미지의 v0.22.0 버전을 다운로드합니다. 다양한 RAGFlow 버전에 대한 설명은 다음 표를 참조하십시오. v0.22.0과 다른 RAGFlow 버전을 다운로드하려면, docker/.env 파일에서 RAGFLOW_IMAGE 변수를 적절히 업데이트한 후 docker compose를 사용하여 서버를 시작하십시오.
+> 아래 명령어는 RAGFlow Docker 이미지의 v0.22.1 버전을 다운로드합니다. 다양한 RAGFlow 버전에 대한 설명은 다음 표를 참조하십시오. v0.22.1과 다른 RAGFlow 버전을 다운로드하려면, docker/.env 파일에서 RAGFLOW_IMAGE 변수를 적절히 업데이트한 후 docker compose를 사용하여 서버를 시작하십시오.
 
 ```bash
 $ cd ragflow/docker
 
-# Optional: use a stable tag (see releases: https://github.com/infiniflow/ragflow/releases), e.g.: git checkout v0.22.0
+# git checkout v0.22.1
+# Optional: use a stable tag (see releases: https://github.com/infiniflow/ragflow/releases)
 # 이 단계는 코드의 entrypoint.sh 파일이 Docker 이미지 버전과 일치하도록 보장합니다.
 
 # Use CPU for DeepDoc tasks:

@@ -22,7 +22,7 @@
 <img alt="Badge Estático" src="https://img.shields.io/badge/Online-Demo-4e6b99">
 </a>
 <a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
-<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.0">
+<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.1">
 </a>
 <a href="https://github.com/infiniflow/ragflow/releases/latest">
 <img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Última%20Relese" alt="Última Versão">

@@ -86,6 +86,7 @@ Experimente nossa demo em [https://demo.ragflow.io](https://demo.ragflow.io).
 
 ## 🔥 Últimas Atualizações
 
+- 19-11-2025 Suporta Gemini 3 Pro.
 - 12-11-2025 Suporta a sincronização de dados do Confluence, AWS S3, Discord e Google Drive.
 - 23-10-2025 Suporta MinerU e Docling como métodos de análise de documentos.
 - 15-10-2025 Suporte para pipelines de dados orquestrados.

@@ -186,12 +187,13 @@ Experimente nossa demo em [https://demo.ragflow.io](https://demo.ragflow.io).
 > Todas as imagens Docker são construídas para plataformas x86. Atualmente, não oferecemos imagens Docker para ARM64.
 > Se você estiver usando uma plataforma ARM64, por favor, utilize [este guia](https://ragflow.io/docs/dev/build_docker_image) para construir uma imagem Docker compatível com o seu sistema.
 
-> O comando abaixo baixa a edição`v0.22.0` da imagem Docker do RAGFlow. Consulte a tabela a seguir para descrições de diferentes edições do RAGFlow. Para baixar uma edição do RAGFlow diferente da `v0.22.0`, atualize a variável `RAGFLOW_IMAGE` conforme necessário no **docker/.env** antes de usar `docker compose` para iniciar o servidor.
+> O comando abaixo baixa a edição`v0.22.1` da imagem Docker do RAGFlow. Consulte a tabela a seguir para descrições de diferentes edições do RAGFlow. Para baixar uma edição do RAGFlow diferente da `v0.22.1`, atualize a variável `RAGFLOW_IMAGE` conforme necessário no **docker/.env** antes de usar `docker compose` para iniciar o servidor.
 
 ```bash
 $ cd ragflow/docker
 
-# Opcional: use uma tag estável (veja releases: https://github.com/infiniflow/ragflow/releases), ex.: git checkout v0.22.0
+# git checkout v0.22.1
+# Opcional: use uma tag estável (veja releases: https://github.com/infiniflow/ragflow/releases)
 # Esta etapa garante que o arquivo entrypoint.sh no código corresponda à versão da imagem do Docker.
 
 # Use CPU for DeepDoc tasks:

@@ -22,7 +22,7 @@
 <img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
 </a>
 <a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
-<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.0">
+<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.1">
 </a>
 <a href="https://github.com/infiniflow/ragflow/releases/latest">
 <img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -85,6 +85,7 @@
 
 ## 🔥 近期更新
 
+- 2025-11-19 支援 Gemini 3 Pro.
 - 2025-11-12 支援從 Confluence、AWS S3、Discord、Google Drive 進行資料同步。
 - 2025-10-23 支援 MinerU 和 Docling 作為文件解析方法。
 - 2025-10-15 支援可編排的資料管道。

@@ -185,12 +186,13 @@
 > 所有 Docker 映像檔都是為 x86 平台建置的。目前,我們不提供 ARM64 平台的 Docker 映像檔。
 > 如果您使用的是 ARM64 平台,請使用 [這份指南](https://ragflow.io/docs/dev/build_docker_image) 來建置適合您系統的 Docker 映像檔。
 
-> 執行以下指令會自動下載 RAGFlow Docker 映像 `v0.22.0`。請參考下表查看不同 Docker 發行版的說明。如需下載不同於 `v0.22.0` 的 Docker 映像,請在執行 `docker compose` 啟動服務之前先更新 **docker/.env** 檔案內的 `RAGFLOW_IMAGE` 變數。
+> 執行以下指令會自動下載 RAGFlow Docker 映像 `v0.22.1`。請參考下表查看不同 Docker 發行版的說明。如需下載不同於 `v0.22.1` 的 Docker 映像,請在執行 `docker compose` 啟動服務之前先更新 **docker/.env** 檔案內的 `RAGFLOW_IMAGE` 變數。
 
 ```bash
 $ cd ragflow/docker
 
-# 可選:使用穩定版標籤(查看發佈:https://github.com/infiniflow/ragflow/releases),例:git checkout v0.22.0
+# git checkout v0.22.1
+# 可選:使用穩定版標籤(查看發佈:https://github.com/infiniflow/ragflow/releases)
 # 此步驟確保程式碼中的 entrypoint.sh 檔案與 Docker 映像版本一致。
 
 # Use CPU for DeepDoc tasks:

@@ -22,7 +22,7 @@
 <img alt="Static Badge" src="https://img.shields.io/badge/Online-Demo-4e6b99">
 </a>
 <a href="https://hub.docker.com/r/infiniflow/ragflow" target="_blank">
-<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.0">
+<img src="https://img.shields.io/docker/pulls/infiniflow/ragflow?label=Docker%20Pulls&color=0db7ed&logo=docker&logoColor=white&style=flat-square" alt="docker pull infiniflow/ragflow:v0.22.1">
 </a>
 <a href="https://github.com/infiniflow/ragflow/releases/latest">
 <img src="https://img.shields.io/github/v/release/infiniflow/ragflow?color=blue&label=Latest%20Release" alt="Latest Release">

@@ -85,6 +85,7 @@
 
 ## 🔥 近期更新
 
+- 2025-11-19 支持 Gemini 3 Pro.
 - 2025-11-12 支持从 Confluence、AWS S3、Discord、Google Drive 进行数据同步。
 - 2025-10-23 支持 MinerU 和 Docling 作为文档解析方法。
 - 2025-10-15 支持可编排的数据管道。

@@ -186,12 +187,13 @@
 > 请注意,目前官方提供的所有 Docker 镜像均基于 x86 架构构建,并不提供基于 ARM64 的 Docker 镜像。
 > 如果你的操作系统是 ARM64 架构,请参考[这篇文档](https://ragflow.io/docs/dev/build_docker_image)自行构建 Docker 镜像。
 
-> 运行以下命令会自动下载 RAGFlow Docker 镜像 `v0.22.0`。请参考下表查看不同 Docker 发行版的描述。如需下载不同于 `v0.22.0` 的 Docker 镜像,请在运行 `docker compose` 启动服务之前先更新 **docker/.env** 文件内的 `RAGFLOW_IMAGE` 变量。
+> 运行以下命令会自动下载 RAGFlow Docker 镜像 `v0.22.1`。请参考下表查看不同 Docker 发行版的描述。如需下载不同于 `v0.22.1` 的 Docker 镜像,请在运行 `docker compose` 启动服务之前先更新 **docker/.env** 文件内的 `RAGFLOW_IMAGE` 变量。
 
 ```bash
 $ cd ragflow/docker
 
-# 可选:使用稳定版本标签(查看发布:https://github.com/infiniflow/ragflow/releases),例如:git checkout v0.22.0
+# git checkout v0.22.1
+# 可选:使用稳定版本标签(查看发布:https://github.com/infiniflow/ragflow/releases)
 # 这一步确保代码中的 entrypoint.sh 文件与 Docker 镜像的版本保持一致。
 
 # Use CPU for DeepDoc tasks:

@@ -48,7 +48,7 @@ It consists of a server-side Service and a command-line client (CLI), both imple
 1. Ensure the Admin Service is running.
 2. Install ragflow-cli.
 ```bash
-pip install ragflow-cli==0.22.0
+pip install ragflow-cli==0.22.1
 ```
 3. Launch the CLI client:
 ```bash

@@ -31,7 +31,7 @@ from common.constants import ActiveEnum, StatusEnum
 from api.utils.crypt import decrypt
 from common.misc_utils import get_uuid
 from common.time_utils import current_timestamp, datetime_format, get_format_time
-from common.connection_utils import construct_response
+from common.connection_utils import sync_construct_response
 from common import settings
 
 

@@ -130,7 +130,7 @@ def login_admin(email: str, password: str):
     user.last_login_time = get_format_time()
     user.save()
     msg = "Welcome back!"
-    return construct_response(data=resp, auth=user.get_id(), message=msg)
+    return sync_construct_response(data=resp, auth=user.get_id(), message=msg)
 
 
 def check_admin(username: str, password: str):

@@ -30,12 +30,12 @@ admin_bp = Blueprint('admin', __name__, url_prefix='/api/v1/admin')
 
 
 @admin_bp.route('/login', methods=['POST'])
-async def login():
-    if not await request.json:
+def login():
+    if not request.json:
         return error_response('Authorize admin failed.' ,400)
     try:
-        email = await request.json.get("email", "")
-        password = await request.json.get("password", "")
+        email = request.json.get("email", "")
+        password = request.json.get("password", "")
         return login_admin(email, password)
     except Exception as e:
         return error_response(str(e), 500)

@@ -76,9 +76,9 @@ def list_users():
 @admin_bp.route('/users', methods=['POST'])
 @login_required
 @check_admin_auth
-async def create_user():
+def create_user():
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'username' not in data or 'password' not in data:
             return error_response("Username and password are required", 400)
 

@@ -120,9 +120,9 @@ def delete_user(username):
 @admin_bp.route('/users/<username>/password', methods=['PUT'])
 @login_required
 @check_admin_auth
-async def change_password(username):
+def change_password(username):
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'new_password' not in data:
             return error_response("New password is required", 400)
 

@@ -139,9 +139,9 @@ async def change_password(username):
 @admin_bp.route('/users/<username>/activate', methods=['PUT'])
 @login_required
 @check_admin_auth
-async def alter_user_activate_status(username):
+def alter_user_activate_status(username):
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'activate_status' not in data:
             return error_response("Activation status is required", 400)
         activate_status = data['activate_status']

@@ -253,9 +253,9 @@ def restart_service(service_id):
 @admin_bp.route('/roles', methods=['POST'])
 @login_required
 @check_admin_auth
-async def create_role():
+def create_role():
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'role_name' not in data:
             return error_response("Role name is required", 400)
         role_name: str = data['role_name']

@@ -269,9 +269,9 @@ async def create_role():
 @admin_bp.route('/roles/<role_name>', methods=['PUT'])
 @login_required
 @check_admin_auth
-async def update_role(role_name: str):
+def update_role(role_name: str):
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'description' not in data:
             return error_response("Role description is required", 400)
         description: str = data['description']

@@ -317,9 +317,9 @@ def get_role_permission(role_name: str):
 @admin_bp.route('/roles/<role_name>/permission', methods=['POST'])
 @login_required
 @check_admin_auth
-async def grant_role_permission(role_name: str):
+def grant_role_permission(role_name: str):
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'actions' not in data or 'resource' not in data:
             return error_response("Permission is required", 400)
         actions: list = data['actions']

@@ -333,9 +333,9 @@ async def grant_role_permission(role_name: str):
 @admin_bp.route('/roles/<role_name>/permission', methods=['DELETE'])
 @login_required
 @check_admin_auth
-async def revoke_role_permission(role_name: str):
+def revoke_role_permission(role_name: str):
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'actions' not in data or 'resource' not in data:
             return error_response("Permission is required", 400)
         actions: list = data['actions']

@@ -349,9 +349,9 @@ async def revoke_role_permission(role_name: str):
 @admin_bp.route('/users/<user_name>/role', methods=['PUT'])
 @login_required
 @check_admin_auth
-async def update_user_role(user_name: str):
+def update_user_role(user_name: str):
     try:
-        data = await request.get_json()
+        data = request.get_json()
         if not data or 'role_name' not in data:
             return error_response("Role name is required", 400)
         role_name: str = data['role_name']
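The admin route handlers in this file drop `async`/`await` throughout. A minimal sketch of why that matters, assuming the admin blueprint is served by a synchronous framework in which `request.json` is an ordinary, already-parsed dict (the diff does not name the framework, and `FakeRequest` is a hypothetical stand-in, not RAGFlow code): awaiting a plain value fails at runtime, while the synchronous form simply reads it.

```python
import asyncio


class FakeRequest:
    """Hypothetical stand-in for a synchronous request object."""
    json = {"email": "admin@example.com", "password": "secret"}


request = FakeRequest()


async def login_with_await():
    # Old style: request.json.get(...) returns a str, which is not awaitable,
    # so this line raises TypeError when the coroutine runs.
    return await request.json.get("email", "")


def login_sync():
    # New style: read the parsed body directly.
    return request.json.get("email", "")


def await_fails() -> bool:
    """Return True if awaiting the plain value raises TypeError."""
    try:
        asyncio.run(login_with_await())
    except TypeError:
        return True
    return False
```

Under that assumption, the synchronous handlers are the ones that match the request object they actually receive.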

@@ -301,7 +301,7 @@ class Canvas(Graph):
         self.retrieval = []
         self.memory = []
         for k in self.globals.keys():
-            if k.startswith("sys."):
+            if k.startswith("sys.") or k.startswith("env."):
                 if isinstance(self.globals[k], str):
                     self.globals[k] = ""
                 elif isinstance(self.globals[k], int):
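The `Canvas` hunk extends the reset loop from `sys.`-prefixed globals to `env.`-prefixed ones as well. A minimal standalone sketch of that behavior follows; `reset_globals` and the `user.name` key are hypothetical, and resetting integers to `0` is an assumption, since the hunk is cut off before the body of the `int` branch.

```python
def reset_globals(globals_: dict) -> dict:
    """Reset values whose keys live in the 'sys.' or 'env.' namespaces."""
    for key, value in globals_.items():
        if not (key.startswith("sys.") or key.startswith("env.")):
            continue  # other namespaces are left untouched
        if isinstance(value, str):
            globals_[key] = ""
        elif isinstance(value, int):
            # Assumption: the diff does not show what the int branch assigns.
            globals_[key] = 0
    return globals_
```

Only values are reassigned during iteration, so the dictionary's size never changes and the loop is safe.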

@@ -47,7 +47,9 @@ class ListOperations(ComponentBase,ABC):
     def _invoke(self, **kwargs):
         self.input_objects=[]
         inputs = getattr(self._param, "query", None)
-        self.inputs=self._canvas.get_variable_value(inputs)
+        self.inputs = self._canvas.get_variable_value(inputs)
+        if not isinstance(self.inputs, list):
+            raise TypeError("The input of List Operations should be an array.")
         self.set_input_value(inputs, self.inputs)
         if self._param.operations == "topN":
             self._topN()
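The `ListOperations` hunk adds an explicit type check before any list operation runs. One plausible motivation, sketched below with hypothetical helpers (`require_list` and `first_n` are illustrations, not the component's real `_topN`): a string is sliceable too, so without the guard a non-list input could be processed silently instead of failing.

```python
def require_list(value):
    """Raise the same TypeError as the diff when value is not a list."""
    if not isinstance(value, list):
        raise TypeError("The input of List Operations should be an array.")
    return value


def first_n(items, n: int):
    """Hypothetical list operation: keep the first n elements."""
    return require_list(items)[:n]


def unguarded_first_n(items, n: int):
    # Without the guard, a str input is sliced character by character.
    return items[:n]
```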

@@ -47,12 +47,14 @@ class VariableAssigner(ComponentBase,ABC):
             return
         else:
             for item in self._param.variables:
+                if any([not item.get("variable"), not item.get("operator"), not item.get("parameter")]):
+                    assert "Variable is not complete."
                 variable=item["variable"]
                 operator=item["operator"]
                 parameter=item["parameter"]
                 variable_value=self._canvas.get_variable_value(variable)
                 new_variable=self._operate(variable_value,operator,parameter)
-                self._canvas.set_variable_value(variable,new_variable)
+                self._canvas.set_variable_value(variable, new_variable)
 
     def _operate(self,variable,operator,parameter):
         if operator == "overwrite":
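One detail of the added completeness check is worth illustrating: `assert "Variable is not complete."` asserts a non-empty string, which is always truthy, so the statement never raises. The sketch below contrasts it with a guard that does reject incomplete items; raising `ValueError` is an assumption about the desired behavior, and both function names are hypothetical.

```python
def check_item_assert(item: dict) -> bool:
    """Mirror of the check as written in the diff."""
    if any([not item.get("variable"), not item.get("operator"), not item.get("parameter")]):
        # A non-empty string literal is truthy, so this assert always passes.
        assert "Variable is not complete."
    return True


def check_item_raise(item: dict) -> bool:
    """Assumed alternative: fail loudly when a field is missing or empty."""
    if any(not item.get(key) for key in ("variable", "operator", "parameter")):
        raise ValueError("Variable is not complete.")
    return True
```

With the first form, an incomplete item falls through to the `item["variable"]` lookups that follow; with the second, it stops at the guard.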

@@ -122,7 +124,8 @@ class VariableAssigner(ComponentBase,ABC):
         elif len(variable)!=0 and not isinstance(parameter,type(variable[0])):
             return "ERROR:PARAMETER_NOT_LIST_ELEMENT_TYPE"
         else:
-            return variable+parameter
+            variable.append(parameter)
+            return variable
 
     def _extend(self,variable,parameter):
         parameter=self._canvas.get_variable_value(parameter)
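The append hunk swaps list concatenation for `list.append`. A short sketch of the difference, using hypothetical function names: `list + element` raises `TypeError` when the element is not itself a list, whereas `append` adds a single element and mutates the list in place.

```python
def append_by_concat(variable: list, parameter):
    # Old behavior: only works if parameter is a list; otherwise TypeError.
    return variable + parameter


def append_in_place(variable: list, parameter):
    # New behavior: add one element and return the same (mutated) list.
    variable.append(parameter)
    return variable
```

Because `append` mutates its receiver, the caller's original list object changes as well, which differs from concatenation returning a new list.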

@@ -135,7 +138,7 @@ class VariableAssigner(ComponentBase,ABC):
         elif len(variable)!=0 and len(parameter)!=0 and not isinstance(parameter[0],type(variable[0])):
             return "ERROR:PARAMETER_NOT_LIST_ELEMENT_TYPE"
         else:
-            return variable+parameter
+            return variable + parameter
 
     def _remove_first(self,variable):
         if len(variable)==0:

@@ -153,7 +156,6 @@ class VariableAssigner(ComponentBase,ABC):
         else:
             return variable[:-1]
 
-
     def is_number(self, value):
         if isinstance(value, bool):
             return False

@@ -161,19 +163,19 @@ class VariableAssigner(ComponentBase,ABC):
 
     def _add(self,variable,parameter):
         if self.is_number(variable) and self.is_number(parameter):
-            return variable+parameter
+            return variable + parameter
         else:
             return "ERROR:VARIABLE_NOT_NUMBER or PARAMETER_NOT_NUMBER"
 
     def _subtract(self,variable,parameter):
         if self.is_number(variable) and self.is_number(parameter):
-            return variable-parameter
+            return variable - parameter
         else:
             return "ERROR:VARIABLE_NOT_NUMBER or PARAMETER_NOT_NUMBER"
 
     def _multiply(self,variable,parameter):
         if self.is_number(variable) and self.is_number(parameter):
-            return variable*parameter
+            return variable * parameter
         else:
             return "ERROR:VARIABLE_NOT_NUMBER or PARAMETER_NOT_NUMBER"
 

@@ -184,4 +186,7 @@ class VariableAssigner(ComponentBase,ABC):
             else:
                 return variable/parameter
         else:
-            return "ERROR:VARIABLE_NOT_NUMBER or PARAMETER_NOT_NUMBER"
+            return "ERROR:VARIABLE_NOT_NUMBER or PARAMETER_NOT_NUMBER"
+
+    def thoughts(self) -> str:
+        return "Assign variables from canvas."

File diff suppressed because one or more lines are too long

@@ -101,14 +101,18 @@ def stats():
             "to_date",
             datetime.now().strftime("%Y-%m-%d %H:%M:%S")),
             "agent" if "canvas_id" in request.args else None)
-        res = {
-            "pv": [(o["dt"], o["pv"]) for o in objs],
-            "uv": [(o["dt"], o["uv"]) for o in objs],
-            "speed": [(o["dt"], float(o["tokens"]) / (float(o["duration"] + 0.1))) for o in objs],
-            "tokens": [(o["dt"], float(o["tokens"]) / 1000.) for o in objs],
-            "round": [(o["dt"], o["round"]) for o in objs],
-            "thumb_up": [(o["dt"], o["thumb_up"]) for o in objs]
-        }
+
+        res = {"pv": [], "uv": [], "speed": [], "tokens": [], "round": [], "thumb_up": []}
+
+        for obj in objs:
+            dt = obj["dt"]
+            res["pv"].append((dt, obj["pv"]))
+            res["uv"].append((dt, obj["uv"]))
+            res["speed"].append((dt, float(obj["tokens"]) / (float(obj["duration"]) + 0.1)))  # +0.1 to avoid division by zero
+            res["tokens"].append((dt, float(obj["tokens"]) / 1000.0))  # convert to thousands
+            res["round"].append((dt, obj["round"]))
+            res["thumb_up"].append((dt, obj["thumb_up"]))
+
         return get_json_result(data=res)
     except Exception as e:
         return server_error_response(e)
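Besides replacing six list comprehensions with one loop, the `stats()` hunk moves a parenthesis in the speed formula: `float(duration + 0.1)` becomes `float(duration) + 0.1`. A sketch of why the order can matter, assuming `duration` may arrive as a `decimal.Decimal` (for example from a SQL aggregate; the diff does not say what type it has): adding a float to a `Decimal` raises `TypeError`, while converting first is safe.

```python
from decimal import Decimal


def speed_old(tokens, duration) -> float:
    # Old form: the addition happens before the float() conversion.
    return float(tokens) / float(duration + 0.1)


def speed_new(tokens, duration) -> float:
    # New form: convert first, then add 0.1 to avoid division by zero.
    return float(tokens) / (float(duration) + 0.1)


def old_form_fails_on_decimal() -> bool:
    """Return True if the old form raises TypeError for a Decimal duration."""
    try:
        speed_old(100, Decimal("0"))
    except TypeError:
        return True
    return False
```

For plain `int` or `float` durations both forms give the same result, so the change only affects inputs of other numeric types.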

@@ -13,6 +13,7 @@
 # See the License for the specific language governing permissions and
 # limitations under the License.
 #
+import asyncio
 import json
 import logging
 import time

@@ -20,9 +21,7 @@ import uuid
 from html import escape
 from typing import Any
 
-import flask
-import trio
-from quart import request
+from quart import request, make_response
 from google_auth_oauthlib.flow import Flow
 
 from api.db import InputType

@@ -59,7 +58,7 @@ async def set_connector():
         }
         ConnectorService.save(**conn)
 
-    await trio.sleep(1)
+    await asyncio.sleep(1)
     e, conn = ConnectorService.get_by_id(req["id"])
 
     return get_json_result(data=conn.to_dict())

@@ -147,7 +146,7 @@ def _get_web_client_config(credentials: dict[str, Any]) -> dict[str, Any]:
     return {"web": web_section}
 
 
-def _render_web_oauth_popup(flow_id: str, success: bool, message: str):
+async def _render_web_oauth_popup(flow_id: str, success: bool, message: str):
     status = "success" if success else "error"
     auto_close = "window.close();" if success else ""
     escaped_message = escape(message)

@@ -165,7 +164,7 @@ def _render_web_oauth_popup(flow_id: str, success: bool, message: str):
         payload_json=payload_json,
         auto_close=auto_close,
     )
-    response = flask.make_response(html, 200)
+    response = await make_response(html, 200)
     response.headers["Content-Type"] = "text/html; charset=utf-8"
     return response
 
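Once `_render_web_oauth_popup` becomes a coroutine, every caller has to `await` it, or it will hand back an unexecuted coroutine object instead of a response. A minimal sketch using only `asyncio`; `render_popup` and both callback functions are hypothetical stand-ins and do not use Quart.

```python
import asyncio


async def render_popup(message: str) -> str:
    """Hypothetical async response builder."""
    await asyncio.sleep(0)  # stands in for an awaited framework call
    return f"<p>{message}</p>"


async def callback_missing_await():
    # Returns the coroutine object itself; the body of render_popup never runs.
    return render_popup("expired")


async def callback_with_await():
    # Returns the rendered string.
    return await render_popup("expired")
```

This is why converting a helper to `async def` usually forces its callers to become `async` as well.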

@@ -232,31 +231,31 @@ async def start_google_drive_web_oauth():
 
 
 @manager.route("/google-drive/oauth/web/callback", methods=["GET"])  # noqa: F821
-def google_drive_web_oauth_callback():
+async def google_drive_web_oauth_callback():
     state_id = request.args.get("state")
     error = request.args.get("error")
     error_description = request.args.get("error_description") or error
 
     if not state_id:
-        return _render_web_oauth_popup("", False, "Missing OAuth state parameter.")
+        return await _render_web_oauth_popup("", False, "Missing OAuth state parameter.")
 
     state_cache = REDIS_CONN.get(_web_state_cache_key(state_id))
     if not state_cache:
-        return _render_web_oauth_popup(state_id, False, "Authorization session expired. Please restart from the main window.")
+        return await _render_web_oauth_popup(state_id, False, "Authorization session expired. Please restart from the main window.")
 
     state_obj = json.loads(state_cache)
     client_config = state_obj.get("client_config")
     if not client_config:
         REDIS_CONN.delete(_web_state_cache_key(state_id))
-        return _render_web_oauth_popup(state_id, False, "Authorization session was invalid. Please retry.")
+        return await _render_web_oauth_popup(state_id, False, "Authorization session was invalid. Please retry.")
 
     if error:
         REDIS_CONN.delete(_web_state_cache_key(state_id))
-        return _render_web_oauth_popup(state_id, False, error_description or "Authorization was cancelled.")
+        return await _render_web_oauth_popup(state_id, False, error_description or "Authorization was cancelled.")
 
     code = request.args.get("code")
     if not code:
-        return _render_web_oauth_popup(state_id, False, "Missing authorization code from Google.")
+        return await _render_web_oauth_popup(state_id, False, "Missing authorization code from Google.")

||||

|

||||

try:

|

||||

flow = Flow.from_client_config(client_config, scopes=GOOGLE_SCOPES[DocumentSource.GOOGLE_DRIVE])

|

||||

@ -265,7 +264,7 @@ def google_drive_web_oauth_callback():

|

||||

except Exception as exc: # pragma: no cover - defensive

|

||||

logging.exception("Failed to exchange Google OAuth code: %s", exc)

|

||||

REDIS_CONN.delete(_web_state_cache_key(state_id))

|

||||

return _render_web_oauth_popup(state_id, False, "Failed to exchange tokens with Google. Please retry.")

|

||||

return await _render_web_oauth_popup(state_id, False, "Failed to exchange tokens with Google. Please retry.")

|

||||

|

||||

creds_json = flow.credentials.to_json()

|

||||

result_payload = {

|

||||

@ -275,7 +274,7 @@ def google_drive_web_oauth_callback():

|

||||

REDIS_CONN.set_obj(_web_result_cache_key(state_id), result_payload, WEB_FLOW_TTL_SECS)

|

||||

REDIS_CONN.delete(_web_state_cache_key(state_id))

|

||||

|

||||

return _render_web_oauth_popup(state_id, True, "Authorization completed successfully.")

|

||||

return await _render_web_oauth_popup(state_id, True, "Authorization completed successfully.")

|

||||

|

||||

|

||||

@manager.route("/google-drive/oauth/web/result", methods=["POST"]) # noqa: F821

|

||||

|

||||

@ -886,6 +886,7 @@ async def check_embedding():

|

||||

|

||||

try:

|

||||

v, _ = emb_mdl.encode([title, txt_in])

|

||||

assert len(v[1]) == len(ck["vector"]), f"The dimension ({len(v[1])}) of given embedding model is different from the original ({len(ck['vector'])})"

|

||||

sim_content = _cos_sim(v[1], ck["vector"])

|

||||

title_w = 0.1

|

||||

qv_mix = title_w * v[0] + (1 - title_w) * v[1]

|

||||

@ -895,8 +896,8 @@ async def check_embedding():

|

||||

if sim_mix > sim:

|

||||

sim = sim_mix

|

||||

mode = "title+content"

|

||||

except Exception:

|

||||

return get_error_data_result(message="embedding failure")

|

||||

except Exception as e:

|

||||

return get_error_data_result(message=f"Embedding failure. {e}")

|

||||

|

||||

eff_sims.append(sim)

|

||||

results.append({

|

||||

|

||||
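The `check_embedding` hunks above compare a freshly computed vector against a stored one, then retry with a title-weighted mix (`title_w = 0.1`). A minimal sketch of that comparison follows, assuming plain Python lists instead of the arrays the service uses. The helper names `cos_sim` and `best_similarity` and the `"content"` mode label are illustrative assumptions; only `"title+content"` and the 0.1 weight come from the diff.

```python
import math


def cos_sim(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either norm is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def best_similarity(title_vec, content_vec, stored_vec, title_w=0.1):
    # The diff uses an assert for the dimension check; a ValueError is used here.
    if len(content_vec) != len(stored_vec):
        raise ValueError(
            f"dimension {len(content_vec)} differs from stored {len(stored_vec)}"
        )
    sim, mode = cos_sim(content_vec, stored_vec), "content"  # label is an assumption
    # Weighted mix of title and content vectors, as in qv_mix in the hunk.
    mixed = [title_w * t + (1 - title_w) * c for t, c in zip(title_vec, content_vec)]
    sim_mix = cos_sim(mixed, stored_vec)
    if sim_mix > sim:
        sim, mode = sim_mix, "title+content"
    return sim, mode


print(best_similarity([1.0, 0.0], [0.0, 1.0], [0.1, 0.9]))
```

The mixed vector only wins when it is strictly closer to the stored vector than the content vector alone.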

@ -33,7 +33,6 @@ from api.db.services.task_service import TaskService

|

||||

from api.utils.file_utils import filename_type, read_potential_broken_pdf, thumbnail_img, sanitize_path

|

||||

from rag.llm.cv_model import GptV4

|

||||

from common import settings

|

||||

from api.apps import current_user

|

||||

|

||||

|

||||

class FileService(CommonService):

|

||||

@ -184,6 +183,7 @@ class FileService(CommonService):

|

||||

@classmethod

|

||||

@DB.connection_context()

|

||||

def create_folder(cls, file, parent_id, name, count):

|

||||

from api.apps import current_user

|

||||

# Recursively create folder structure

|

||||

# Args:

|

||||

# file: Current file object

|

||||

@ -508,6 +508,7 @@ class FileService(CommonService):

|

||||

@staticmethod

|

||||

def parse(filename, blob, img_base64=True, tenant_id=None):

|

||||

from rag.app import audio, email, naive, picture, presentation

|

||||

from api.apps import current_user

|

||||

|

||||

def dummy(prog=None, msg=""):

|

||||

pass

|

||||

|

||||

@ -120,3 +120,23 @@ async def construct_response(code=RetCode.SUCCESS, message="success", data=None,

|

||||

response.headers["Access-Control-Allow-Headers"] = "*"

|

||||

response.headers["Access-Control-Expose-Headers"] = "Authorization"

|

||||

return response

|

||||

|

||||

|

||||

def sync_construct_response(code=RetCode.SUCCESS, message="success", data=None, auth=None):

|

||||

import flask

|

||||

result_dict = {"code": code, "message": message, "data": data}

|

||||

response_dict = {}

|

||||

for key, value in result_dict.items():

|

||||

if value is None and key != "code":

|

||||

continue

|

||||

else:

|

||||

response_dict[key] = value

|

||||

response = flask.make_response(flask.jsonify(response_dict))

|

||||

if auth:

|

||||

response.headers["Authorization"] = auth

|

||||

response.headers["Access-Control-Allow-Origin"] = "*"

|

||||

response.headers["Access-Control-Allow-Method"] = "*"

|

||||

response.headers["Access-Control-Allow-Headers"] = "*"

|

||||

response.headers["Access-Control-Allow-Headers"] = "*"

|

||||

response.headers["Access-Control-Expose-Headers"] = "Authorization"

|

||||

return response

|

||||

|

||||
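The loop in `sync_construct_response` above drops `None` values from the payload but always keeps `code`. A framework-free sketch of just that filtering step is shown below; `build_response_body` is a hypothetical name, and the Flask response and header handling are deliberately left out.

```python
def build_response_body(code, message="success", data=None):
    """Return the JSON body dict, omitting None fields except for 'code'."""
    result = {"code": code, "message": message, "data": data}
    # Keep a key when its value is set, or when the key is "code".
    return {k: v for k, v in result.items() if v is not None or k == "code"}


print(build_response_body(0))
print(build_response_body(0, data=[]))
```

An empty list or empty string is still emitted, since only `None` is filtered. Separately, the standard CORS response header is spelled `Access-Control-Allow-Methods`; the singular form in the hunk is shown as committed.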

@ -1429,6 +1429,13 @@

|

||||

"status": "1",

|

||||

"rank": "980",

|

||||

"llm": [

|

||||

{

|

||||

"llm_name": "gemini-3-pro-preview",

|

||||

"tags": "LLM,CHAT,1M,IMAGE2TEXT",

|

||||

"max_tokens": 1048576,

|

||||

"model_type": "image2text",

|

||||

"is_tools": true

|

||||

},

|

||||

{

|

||||

"llm_name": "gemini-2.5-flash",

|

||||

"tags": "LLM,CHAT,1024K,IMAGE2TEXT",

|

||||

@ -4841,7 +4848,7 @@

|

||||

]

|

||||

},

|

||||

{

|

||||

"name": "JieKou.AI",

|

||||

"name": "Jiekou.AI",

|

||||

"logo": "",

|

||||

"tags": "LLM,TEXT EMBEDDING,TEXT RE-RANK",

|

||||

"status": "1",

|

||||

@ -5474,4 +5481,4 @@

|

||||

]

|

||||

}

|

||||

]

|

||||

}

|

||||

}

|

||||

|

||||

@ -318,7 +318,7 @@ class MinerUParser(RAGFlowPdfParser):

|

||||

|

||||

def _line_tag(self, bx):

|

||||

pn = [bx["page_idx"] + 1]

|

||||

positions = bx["bbox"]

|

||||

positions = bx.get("bbox", (0, 0, 0, 0))

|

||||

x0, top, x1, bott = positions

|

||||

|

||||

if hasattr(self, "page_images") and self.page_images and len(self.page_images) > bx["page_idx"]:

|

||||

|

||||
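The `_line_tag` change above swaps `bx["bbox"]` for `bx.get("bbox", (0, 0, 0, 0))`, so a block without a bounding box no longer raises `KeyError`. A small sketch of the difference, using a hypothetical helper `bbox_of` and made-up block dictionaries:

```python
def bbox_of(block):
    """Return (x0, top, x1, bottom), falling back to zeros when 'bbox' is absent."""
    x0, top, x1, bott = block.get("bbox", (0, 0, 0, 0))
    return x0, top, x1, bott


print(bbox_of({"page_idx": 0, "bbox": [10, 20, 110, 40]}))
print(bbox_of({"page_idx": 0}))
```

The fallback yields a zero-area box, so callers that crop page images from these coordinates may still want to treat that case specially.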

@ -106,11 +106,11 @@ ADMIN_SVR_HTTP_PORT=9381

|

||||

SVR_MCP_PORT=9382

|

||||

|

||||

# The RAGFlow Docker image to download. v0.22+ doesn't include embedding models.

|

||||

RAGFLOW_IMAGE=infiniflow/ragflow:v0.22.0

|

||||

RAGFLOW_IMAGE=infiniflow/ragflow:v0.22.1

|

||||

|

||||

# If you cannot download the RAGFlow Docker image:

|

||||

# RAGFLOW_IMAGE=swr.cn-north-4.myhuaweicloud.com/infiniflow/ragflow:v0.22.0

|

||||

# RAGFLOW_IMAGE=registry.cn-hangzhou.aliyuncs.com/infiniflow/ragflow:v0.22.0

|

||||

# RAGFLOW_IMAGE=swr.cn-north-4.myhuaweicloud.com/infiniflow/ragflow:v0.22.1

|

||||

# RAGFLOW_IMAGE=registry.cn-hangzhou.aliyuncs.com/infiniflow/ragflow:v0.22.1

|

||||

#

|

||||

# - For the `nightly` edition, uncomment either of the following:

|

||||

# RAGFLOW_IMAGE=swr.cn-north-4.myhuaweicloud.com/infiniflow/ragflow:nightly

|

||||

|

||||

@ -77,7 +77,7 @@ The [.env](./.env) file contains important environment variables for Docker.

|

||||

- `SVR_HTTP_PORT`

|

||||

The port used to expose RAGFlow's HTTP API service to the host machine, allowing **external** access to the service running inside the Docker container. Defaults to `9380`.

|

||||

- `RAGFLOW_IMAGE`

|

||||

The Docker image edition. Defaults to `infiniflow/ragflow:v0.22.0`. The RAGFlow Docker image does not include embedding models.

|

||||

The Docker image edition. Defaults to `infiniflow/ragflow:v0.22.1`. The RAGFlow Docker image does not include embedding models.

|

||||

|

||||

|

||||

> [!TIP]

|

||||

|

||||

@ -97,7 +97,7 @@ RAGFlow utilizes MinIO as its object storage solution, leveraging its scalabilit

|

||||

- `SVR_HTTP_PORT`

|

||||

The port used to expose RAGFlow's HTTP API service to the host machine, allowing **external** access to the service running inside the Docker container. Defaults to `9380`.

|

||||

- `RAGFLOW_IMAGE`

|

||||

The Docker image edition. Defaults to `infiniflow/ragflow:v0.22.0` (the RAGFlow Docker image without embedding models).

|

||||

The Docker image edition. Defaults to `infiniflow/ragflow:v0.22.1` (the RAGFlow Docker image without embedding models).

|

||||

|

||||

:::tip NOTE

|

||||

If you cannot download the RAGFlow Docker image, try the following mirrors.

|

||||

|

||||

@ -47,7 +47,7 @@ After building the infiniflow/ragflow:nightly image, you are ready to launch a f

|

||||

|

||||

1. Edit Docker Compose Configuration

|

||||

|

||||

Open the `docker/.env` file. Find the `RAGFLOW_IMAGE` setting and change the image reference from `infiniflow/ragflow:v0.22.0` to `infiniflow/ragflow:nightly` to use the pre-built image.

|

||||

Open the `docker/.env` file. Find the `RAGFLOW_IMAGE` setting and change the image reference from `infiniflow/ragflow:v0.22.1` to `infiniflow/ragflow:nightly` to use the pre-built image.

|

||||

|

||||

|

||||

2. Launch the Service

|

||||

|

||||

@ -133,7 +133,7 @@ See [Run retrieval test](./run_retrieval_test.md) for details.

|

||||

|

||||

## Search for dataset

|

||||

|

||||

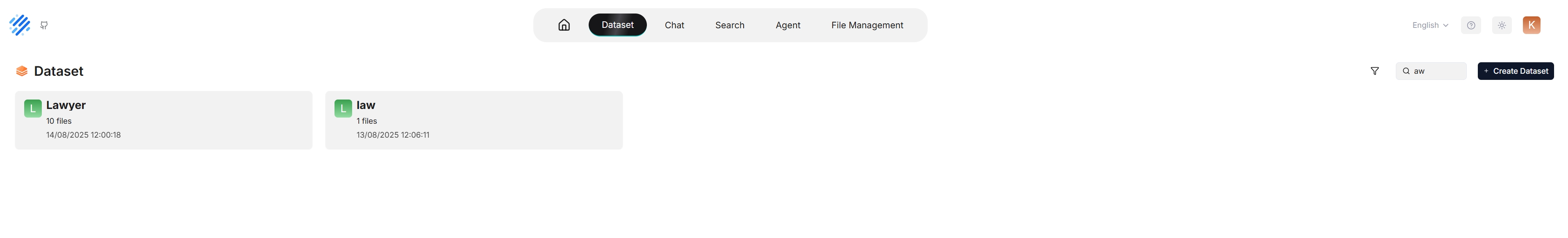

As of RAGFlow v0.22.0, the search feature is still in a rudimentary form, supporting only dataset search by name.

|

||||

As of RAGFlow v0.22.1, the search feature is still in a rudimentary form, supporting only dataset search by name.

|

||||

|

||||

|

||||

|

||||

|

||||

@ -87,4 +87,4 @@ RAGFlow's file management allows you to download an uploaded file:

|

||||

|

||||

|

||||

|

||||

> As of RAGFlow v0.22.0, bulk download is not supported, nor can you download an entire folder.

|

||||

> As of RAGFlow v0.22.1, bulk download is not supported, nor can you download an entire folder.

|

||||

|

||||

@ -46,7 +46,7 @@ The Admin CLI and Admin Service form a client-server architectural suite for RAG

|

||||

2. Install ragflow-cli.

|

||||

|

||||

```bash

|

||||

pip install ragflow-cli==0.22.0

|

||||

pip install ragflow-cli==0.22.1

|

||||

```

|

||||

|

||||

3. Launch the CLI client:

|

||||

|

||||

@ -48,16 +48,16 @@ To upgrade RAGFlow, you must upgrade **both** your code **and** your Docker imag

|

||||

git clone https://github.com/infiniflow/ragflow.git

|

||||

```

|

||||

|

||||

2. Switch to the latest, officially published release, e.g., `v0.22.0`:

|

||||

2. Switch to the latest, officially published release, e.g., `v0.22.1`:

|

||||

|

||||

```bash

|

||||

git checkout -f v0.22.0

|

||||

git checkout -f v0.22.1

|

||||

```

|

||||

|

||||

3. Update **ragflow/docker/.env**:

|

||||

|

||||

```bash

|

||||

RAGFLOW_IMAGE=infiniflow/ragflow:v0.22.0

|

||||

RAGFLOW_IMAGE=infiniflow/ragflow:v0.22.1

|

||||

```

|

||||

|

||||

4. Update the RAGFlow image and restart RAGFlow:

|

||||

@ -78,10 +78,10 @@ No, you do not need to. Upgrading RAGFlow in itself will *not* remove your uploa

|

||||

1. From an environment with Internet access, pull the required Docker image.

|

||||

2. Save the Docker image to a **.tar** file.

|

||||

```bash

|

||||

docker save -o ragflow.v0.22.0.tar infiniflow/ragflow:v0.22.0

|

||||

docker save -o ragflow.v0.22.1.tar infiniflow/ragflow:v0.22.1

|

||||

```

|

||||

3. Copy the **.tar** file to the target server.

|

||||

4. Load the **.tar** file into Docker:

|

||||

```bash

|

||||

docker load -i ragflow.v0.22.0.tar

|

||||

docker load -i ragflow.v0.22.1.tar

|

||||

```

|

||||

|

||||

@ -44,7 +44,7 @@ This section provides instructions on setting up the RAGFlow server on Linux. If

|

||||

|

||||

`vm.max_map_count`. This value sets the maximum number of memory map areas a process may have. Its default value is 65530. While most applications require fewer than a thousand maps, reducing this value can result in abnormal behaviors, and the system will throw out-of-memory errors when a process reaches the limitation.

|

||||

|

||||

RAGFlow v0.22.0 uses Elasticsearch or [Infinity](https://github.com/infiniflow/infinity) for multiple recall. Setting the value of `vm.max_map_count` correctly is crucial to the proper functioning of the Elasticsearch component.

|

||||

RAGFlow v0.22.1 uses Elasticsearch or [Infinity](https://github.com/infiniflow/infinity) for multiple recall. Setting the value of `vm.max_map_count` correctly is crucial to the proper functioning of the Elasticsearch component.

|

||||

|

||||

<Tabs

|

||||

defaultValue="linux"

|

||||

@ -184,7 +184,7 @@ This section provides instructions on setting up the RAGFlow server on Linux. If

|

||||

```bash

|

||||

$ git clone https://github.com/infiniflow/ragflow.git

|

||||

$ cd ragflow/docker

|

||||

$ git checkout -f v0.22.0

|

||||

$ git checkout -f v0.22.1

|

||||

```

|

||||

|

||||

3. Use the pre-built Docker images and start up the server:

|

||||

@ -200,7 +200,7 @@ This section provides instructions on setting up the RAGFlow server on Linux. If

|

||||

|

||||

| RAGFlow image tag | Image size (GB) | Stable? |

|

||||

| ------------------- | --------------- | ------------------------ |

|

||||

| v0.22.0 | ≈2 | Stable release |

|

||||

| v0.22.1 | ≈2 | Stable release |

|

||||

| nightly | ≈2 | _Unstable_ nightly build |

|

||||

|

||||

```mdx-code-block

|

||||

|

||||

@ -7,6 +7,32 @@ slug: /release_notes

|

||||

|

||||

Key features, improvements and bug fixes in the latest releases.

|

||||

|

||||

## v0.22.1

|

||||

|

||||

Released on November 19, 2025.

|

||||

|

||||

### Improvements

|

||||

|

||||

- Agent:

|

||||

- Supports exporting Agent outputs in Word or Markdown formats.

|

||||

- Adds a **List operations** component.

|

||||

- Adds a **Variable aggregator** component.

|

||||

- Data sources:

|

||||

- Supports S3-compatible data sources, e.g., MinIO.

|

||||

- Adds data synchronization with JIRA.

|

||||

- Continues the redesign of the **Profile** page layouts.

|

||||

- Upgrades the Flask web framework from synchronous to asynchronous, increasing concurrency and preventing blocking issues caused when requesting upstream LLM services.

|

||||

|

||||

### Fixed issues

|

||||

|

||||

- A v0.22.0 issue: Users failed to parse uploaded files or switch the embedding model in a dataset containing files parsed with a built-in model from a `-full` RAGFlow edition.

|

||||

- Image concatenated in Word documents. [#11310](https://github.com/infiniflow/ragflow/pull/11310)

|

||||

- Mixed images and text were not correctly displayed in the chat history.

|

||||

|

||||

### Newly supported models

|

||||

|

||||

- Gemini 3 Pro Preview

|

||||

|

||||

## v0.22.0

|

||||

|

||||

Released on November 12, 2025.

|

||||

@ -77,7 +103,7 @@ Released on October 15, 2025.

|

||||

- Redesigns RAGFlow's Login and Registration pages.

|

||||

- Upgrades RAGFlow's document engine Infinity to v0.6.0.

|

||||

|

||||

### Added models

|

||||

### Newly supported models

|

||||

|

||||

- Tongyi Qwen 3 series

|

||||

- Claude Sonnet 4.5

|

||||

@ -100,7 +126,7 @@ Released on September 10, 2025.

|

||||

- **Execute SQL** component enhanced: Replaces the original variable reference component with a text input field, allowing users to write free-form SQL queries and reference variables. See [here](./guides/agent/agent_component_reference/execute_sql.md).

|

||||

- Chat: Re-enables **Reasoning** and **Cross-language search**.

|

||||

|

||||

### Added models

|

||||

### Newly supported models

|

||||

|

||||

- Meituan LongCat

|

||||

- Kimi: kimi-k2-turbo-preview and kimi-k2-0905-preview

|

||||

@ -139,7 +165,7 @@ Released on August 27, 2025.

|

||||

- Improves Markdown file parsing, with AST support to avoid unintended chunking.

|

||||

- Enhances HTML parsing, supporting bs4-based HTML tag traversal.

|

||||

|

||||

### Added models

|

||||

### Newly supported models

|

||||

|

||||

ZHIPU GLM-4.5

|

||||

|

||||

@ -200,7 +226,7 @@ Released on August 8, 2025.

|

||||

- The **Retrieval** component now supports the dynamic specification of dataset names using variables.

|

||||

- The user interface now includes a French language option.

|

||||

|

||||

### Added Models

|

||||

### Newly supported models

|

||||

|

||||

- GPT-5

|

||||

- Claude 4.1

|

||||

@ -264,7 +290,7 @@ Released on June 23, 2025.

|

||||

- Added support for models installed via Ollama or VLLM when creating a dataset through the API. [#8069](https://github.com/infiniflow/ragflow/pull/8069)

|

||||

- Enabled role-based authentication for S3 bucket access. [#8149](https://github.com/infiniflow/ragflow/pull/8149)

|

||||

|

||||

### Added models

|

||||

### Newly supported models

|

||||

|

||||

- Qwen 3 Embedding. [#8184](https://github.com/infiniflow/ragflow/pull/8184)

|

||||

- Voyage Multimodal 3. [#7987](https://github.com/infiniflow/ragflow/pull/7987)

|

||||

|

||||

@ -56,7 +56,7 @@ env:

|

||||

ragflow:

|

||||

image:

|

||||

repository: infiniflow/ragflow

|

||||

tag: v0.22.0

|

||||

tag: v0.22.1

|

||||

pullPolicy: IfNotPresent

|

||||

pullSecrets: []

|

||||

# Optional service configuration overrides

|

||||

|

||||

@ -1,6 +1,6 @@

|

||||

[project]

|

||||

name = "ragflow"

|

||||

version = "0.22.0"

|

||||

version = "0.22.1"

|

||||

description = "[RAGFlow](https://ragflow.io/) is an open-source RAG (Retrieval-Augmented Generation) engine based on deep document understanding. It offers a streamlined RAG workflow for businesses of any scale, combining LLM (Large Language Models) to provide truthful question-answering capabilities, backed by well-founded citations from various complex formatted data."

|

||||

authors = [{ name = "Zhichang Yu", email = "yuzhichang@gmail.com" }]

|

||||

license-files = ["LICENSE"]

|

||||

|

||||

@ -14,24 +14,27 @@

|

||||

# limitations under the License.

|

||||

#

|

||||

|

||||

import re

|

||||

import base64

|

||||

import json

|

||||

import os

|

||||

import tempfile

|

||||

import logging

|

||||

import os

|

||||

import re

|

||||

import tempfile

|

||||

from abc import ABC

|

||||

from copy import deepcopy

|

||||

from io import BytesIO

|

||||

from pathlib import Path

|

||||

from urllib.parse import urljoin

|

||||

|

||||

import requests

|

||||

from openai import OpenAI

|

||||

from openai.lib.azure import AzureOpenAI

|

||||

from zhipuai import ZhipuAI

|

||||

|

||||

from common.token_utils import num_tokens_from_string, total_token_count_from_response

|

||||

from rag.nlp import is_english

|

||||

from rag.prompts.generator import vision_llm_describe_prompt

|

||||

from common.token_utils import num_tokens_from_string, total_token_count_from_response

|

||||

|

||||

|

||||

class Base(ABC):

|

||||

def __init__(self, **kwargs):

|

||||

@ -70,12 +73,7 @@ class Base(ABC):

|

||||

|

||||

pmpt = [{"type": "text", "text": text}]

|

||||

for img in images:

|

||||

pmpt.append({

|

||||

"type": "image_url",

|

||||

"image_url": {

|

||||

"url": img if isinstance(img, str) and img.startswith("data:") else f"data:image/png;base64,{img}"

|

||||

}

|

||||

})

|

||||

pmpt.append({"type": "image_url", "image_url": {"url": img if isinstance(img, str) and img.startswith("data:") else f"data:image/png;base64,{img}"}})

|

||||

return pmpt

|

||||

|

||||

def chat(self, system, history, gen_conf, images=None, **kwargs):

|

||||

@ -128,7 +126,7 @@ class Base(ABC):

|

||||

try:

|

||||

image.save(buffered, format="JPEG")

|

||||

except Exception:

|

||||

# reset buffer before saving PNG

|

||||

# reset buffer before saving PNG

|

||||

buffered.seek(0)

|

||||

buffered.truncate()

|

||||

image.save(buffered, format="PNG")

|

||||

@ -158,7 +156,7 @@ class Base(ABC):

|

||||

try:

|

||||

image.save(buffered, format="JPEG")

|

||||

except Exception:

|

||||

# reset buffer before saving PNG

|

||||

# reset buffer before saving PNG

|

||||

buffered.seek(0)

|

||||

buffered.truncate()

|

||||

image.save(buffered, format="PNG")

|

||||

@ -176,18 +174,13 @@ class Base(ABC):

|

||||

"请用中文详细描述一下图中的内容,比如时间,地点,人物,事情,人物心情等,如果有数据请提取出数据。"

|

||||

if self.lang.lower() == "chinese"

|

||||

else "Please describe the content of this picture, like where, when, who, what happen. If it has number data, please extract them out.",

|

||||

b64

|

||||

)

|

||||

b64,

|

||||

),

|

||||

}

|

||||

]

|

||||

|

||||

def vision_llm_prompt(self, b64, prompt=None):

|

||||

return [

|

||||

{

|

||||

"role": "user",

|

||||

"content": self._image_prompt(prompt if prompt else vision_llm_describe_prompt(), b64)

|

||||

}

|

||||

]

|

||||

return [{"role": "user", "content": self._image_prompt(prompt if prompt else vision_llm_describe_prompt(), b64)}]

|

||||

|

||||

|

||||

class GptV4(Base):

|

||||

@ -208,7 +201,7 @@ class GptV4(Base):

|

||||

model=self.model_name,

|

||||

messages=self.prompt(b64),

|

||||

extra_body=self.extra_body,

|

||||

unused = None,

|

||||

unused=None,

|

||||

)

|

||||

return res.choices[0].message.content.strip(), total_token_count_from_response(res)

|

||||

|

||||

@ -219,7 +212,7 @@ class GptV4(Base):

|

||||

messages=self.vision_llm_prompt(b64, prompt),

|

||||

extra_body=self.extra_body,

|

||||

)

|

||||

return res.choices[0].message.content.strip(),total_token_count_from_response(res)

|

||||

return res.choices[0].message.content.strip(), total_token_count_from_response(res)

|

||||

|

||||

|

||||

class AzureGptV4(GptV4):

|

||||

@ -324,14 +317,12 @@ class Zhipu4V(GptV4):

|

||||

self.lang = lang

|

||||

Base.__init__(self, **kwargs)

|

||||

|

||||

|

||||

def _clean_conf(self, gen_conf):

|

||||

if "max_tokens" in gen_conf:

|

||||

del gen_conf["max_tokens"]

|

||||

gen_conf = self._clean_conf_plealty(gen_conf)

|

||||

return gen_conf

|

||||

|

||||

|

||||

def _clean_conf_plealty(self, gen_conf):

|

||||

if "presence_penalty" in gen_conf:

|

||||

del gen_conf["presence_penalty"]

|

||||

@ -339,24 +330,17 @@ class Zhipu4V(GptV4):

|

||||

del gen_conf["frequency_penalty"]

|

||||

return gen_conf

|

||||

|

||||

|

||||

def _request(self, msg, stream, gen_conf={}):

|

||||

response = requests.post(

|

||||

self.base_url,

|

||||

json={

|

||||

"model": self.model_name,

|

||||

"messages": msg,

|

||||

"stream": stream,

|

||||

**gen_conf

|

||||

json={"model": self.model_name, "messages": msg, "stream": stream, **gen_conf},

|

||||

headers={

|

||||

"Authorization": f"Bearer {self.api_key}",

|

||||

"Content-Type": "application/json",

|

||||

},

|

||||

headers= {

|

||||

"Authorization": f"Bearer {self.api_key}",

|

||||

"Content-Type": "application/json",

|

||||

}

|

||||

)

|

||||

return response.json()

|

||||

|

||||

|

||||

def chat(self, system, history, gen_conf, images=None, stream=False, **kwargs):

|

||||

if system and history and history[0].get("role") != "system":

|

||||

history.insert(0, {"role": "system", "content": system})

|

||||

@ -369,10 +353,9 @@ class Zhipu4V(GptV4):

|

||||

|

||||

cleaned = re.sub(r"<\|(begin_of_box|end_of_box)\|>", "", content).strip()

|

||||

return cleaned, total_token_count_from_response(response)

|

||||

|

||||

|

||||

def chat_streamly(self, system, history, gen_conf, images=None, **kwargs):

|

||||

from rag.llm.chat_model import LENGTH_NOTIFICATION_CN, LENGTH_NOTIFICATION_EN

|

||||

from rag.llm.chat_model import LENGTH_NOTIFICATION_CN, LENGTH_NOTIFICATION_EN

|

||||

from rag.nlp import is_chinese

|

||||

|

||||

if system and history and history[0].get("role") != "system":

|

||||

@ -402,44 +385,24 @@ class Zhipu4V(GptV4):

|

||||

|

||||

yield tk_count

|

||||

|

||||

|

||||

def describe(self, image):

|

||||

return self.describe_with_prompt(image)

|

||||

|

||||

|

||||

def describe_with_prompt(self, image, prompt=None):

|

||||

b64 = self.image2base64(image)

|

||||

if prompt is None:

|

||||

prompt = "Describe this image."

|

||||

|

||||

# Chat messages

|

||||

messages = [

|

||||

{

|

||||

"role": "user",

|

||||

"content": [

|

||||

{

|

||||

"type": "image_url",

|

||||

"image_url": { "url": b64 }

|

||||

},

|

||||

{

|

||||

"type": "text",

|

||||

"text": prompt

|

||||

}

|

||||

]

|

||||

}

|

||||

]

|

||||

messages = [{"role": "user", "content": [{"type": "image_url", "image_url": {"url": b64}}, {"type": "text", "text": prompt}]}]

|

||||

|

||||

resp = self.client.chat.completions.create(

|

||||

model=self.model_name,

|

||||

messages=messages,

|

||||

stream=False

|

||||

)

|

||||

resp = self.client.chat.completions.create(model=self.model_name, messages=messages, stream=False)

|

||||

|

||||

content = resp.choices[0].message.content.strip()

|

||||

cleaned = re.sub(r"<\|(begin_of_box|end_of_box)\|>", "", content).strip()

|

||||

|

||||

return cleaned, num_tokens_from_string(cleaned)

|

||||

|

||||

|

||||

|

||||

class StepFunCV(GptV4):

|

||||

_FACTORY_NAME = "StepFun"

|

||||
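Both `chat` and `describe_with_prompt` in the `Zhipu4V` hunks above strip `<|begin_of_box|>` and `<|end_of_box|>` markers from the model output with the same regular expression. A standalone sketch of that cleanup follows; the function name `strip_box_markers` and the sample strings are assumptions for illustration.

```python
import re

# Same pattern as in the diff: matches either marker literally.
_BOX_MARKERS = re.compile(r"<\|(begin_of_box|end_of_box)\|>")


def strip_box_markers(content):
    """Remove box markers and surrounding whitespace from a model reply."""
    return _BOX_MARKERS.sub("", content).strip()


print(strip_box_markers("<|begin_of_box|>A cat on a sofa.<|end_of_box|>"))
```

Precompiling the pattern is a small design choice for repeated calls; the diff calls `re.sub` inline, which behaves the same.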

@ -452,6 +415,7 @@ class StepFunCV(GptV4):

|

||||

self.lang = lang

|

||||

Base.__init__(self, **kwargs)

|

||||

|

||||

|

||||

class VolcEngineCV(GptV4):

|

||||

_FACTORY_NAME = "VolcEngine"

|

||||

|

||||

@ -464,6 +428,7 @@ class VolcEngineCV(GptV4):

|

||||

self.lang = lang

|

||||

Base.__init__(self, **kwargs)

|

||||

|

||||

|

||||

class LmStudioCV(GptV4):

|

||||

_FACTORY_NAME = "LM-Studio"

|

||||

|

||||

@ -502,13 +467,7 @@ class TogetherAICV(GptV4):

|

||||

class YiCV(GptV4):

|

||||

_FACTORY_NAME = "01.AI"

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

key,

|

||||

model_name,

|

||||

lang="Chinese",

|

||||

base_url="https://api.lingyiwanwu.com/v1", **kwargs

|

||||

):

|

||||

def __init__(self, key, model_name, lang="Chinese", base_url="https://api.lingyiwanwu.com/v1", **kwargs):

|

||||

if not base_url:

|

||||

base_url = "https://api.lingyiwanwu.com/v1"

|

||||

super().__init__(key, model_name, lang, base_url, **kwargs)

|

||||

@ -517,13 +476,7 @@ class YiCV(GptV4):

|

||||

class SILICONFLOWCV(GptV4):

|

||||

_FACTORY_NAME = "SILICONFLOW"

|

||||

|

||||

def __init__(

|

||||

self,

|

||||

key,

|

||||

model_name,

|

||||

lang="Chinese",

|

||||

base_url="https://api.siliconflow.cn/v1", **kwargs

|

||||

):

|

||||

def __init__(self, key, model_name, lang="Chinese", base_url="https://api.siliconflow.cn/v1", **kwargs):

|

||||

if not base_url:

|

||||

base_url = "https://api.siliconflow.cn/v1"

|

||||

super().__init__(key, model_name, lang, base_url, **kwargs)

|

||||