mirror of

https://github.com/infiniflow/ragflow.git

synced 2026-02-04 09:35:06 +08:00

Compare commits

23 Commits

17b8bb62b6

...

main_backu

| Author | SHA1 | Date | |
|---|---|---|---|
| | 427e0540ca | | |
| | 8f16fac898 | | |
| | ea00478a21 | | |
| | 906f19e863 | | |
| | 667dc5467e | | |
| | 977962fdfe | | |
| | 1e9374a373 | | |
| | 9b52ba8061 | | |
| | 44671ea413 | | |
| | c81421d340 | | |
| | ee93a80e91 | | |
| | 5c981978c1 | | |
| | 7fef285af5 | | |
| | b1efb905e5 | | |
| | 6400bf87ba | | |
| | f239bc02d3 | | |
| | 5776fa73a7 | | |
| | fc6af1998b | | |
| | 0588fe79b9 | | |
| | f545265f93 | | |
| | c987d33649 | | |
| | d72debf0db | | |
| | c33134ea2c | | |

27

.github/workflows/tests.yml

vendored

@ -197,37 +197,38 @@ jobs:

|

||||

echo -e "COMPOSE_PROFILES=\${COMPOSE_PROFILES},tei-cpu" >> docker/.env

|

||||

echo -e "TEI_MODEL=BAAI/bge-small-en-v1.5" >> docker/.env

|

||||

echo -e "RAGFLOW_IMAGE=${RAGFLOW_IMAGE}" >> docker/.env

|

||||

sed -i '1i DOC_ENGINE=infinity' docker/.env

|

||||

echo "HOST_ADDRESS=http://host.docker.internal:${SVR_HTTP_PORT}" >> ${GITHUB_ENV}

|

||||

|

||||

sudo docker compose -f docker/docker-compose.yml -p ${GITHUB_RUN_ID} up -d

|

||||

uv sync --python 3.12 --only-group test --no-default-groups --frozen && uv pip install sdk/python --group test

|

||||

|

||||

- name: Run sdk tests against Elasticsearch

|

||||

- name: Run sdk tests against Infinity

|

||||

run: |

|

||||

export http_proxy=""; export https_proxy=""; export no_proxy=""; export HTTP_PROXY=""; export HTTPS_PROXY=""; export NO_PROXY=""

|

||||

until sudo docker exec ${RAGFLOW_CONTAINER} curl -s --connect-timeout 5 ${HOST_ADDRESS} > /dev/null; do

|

||||

echo "Waiting for service to be available..."

|

||||

sleep 5

|

||||

done

|

||||

source .venv/bin/activate && pytest -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_sdk_api 2>&1 | tee es_sdk_test.log

|

||||

source .venv/bin/activate && DOC_ENGINE=infinity pytest -x -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_sdk_api 2>&1 | tee infinity_sdk_test.log

|

||||

|

||||

- name: Run frontend api tests against Elasticsearch

|

||||

- name: Run frontend api tests against Infinity

|

||||

run: |

|

||||

export http_proxy=""; export https_proxy=""; export no_proxy=""; export HTTP_PROXY=""; export HTTPS_PROXY=""; export NO_PROXY=""

|

||||

until sudo docker exec ${RAGFLOW_CONTAINER} curl -s --connect-timeout 5 ${HOST_ADDRESS} > /dev/null; do

|

||||

echo "Waiting for service to be available..."

|

||||

sleep 5

|

||||

done

|

||||

source .venv/bin/activate && pytest -s --tb=short sdk/python/test/test_frontend_api/get_email.py sdk/python/test/test_frontend_api/test_dataset.py 2>&1 | tee es_api_test.log

|

||||

source .venv/bin/activate && DOC_ENGINE=infinity pytest -x -s --tb=short sdk/python/test/test_frontend_api/get_email.py sdk/python/test/test_frontend_api/test_dataset.py 2>&1 | tee infinity_api_test.log

|

||||

|

||||

- name: Run http api tests against Elasticsearch

|

||||

- name: Run http api tests against Infinity

|

||||

run: |

|

||||

export http_proxy=""; export https_proxy=""; export no_proxy=""; export HTTP_PROXY=""; export HTTPS_PROXY=""; export NO_PROXY=""

|

||||

until sudo docker exec ${RAGFLOW_CONTAINER} curl -s --connect-timeout 5 ${HOST_ADDRESS} > /dev/null; do

|

||||

echo "Waiting for service to be available..."

|

||||

sleep 5

|

||||

done

|

||||

source .venv/bin/activate && pytest -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_http_api 2>&1 | tee es_http_api_test.log

|

||||

source .venv/bin/activate && DOC_ENGINE=infinity pytest -x -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_http_api 2>&1 | tee infinity_http_api_test.log

|

||||

|

||||

- name: Stop ragflow:nightly

|

||||

if: always() # always run this step even if previous steps failed

|

||||

@ -237,35 +238,35 @@ jobs:

|

||||

|

||||

- name: Start ragflow:nightly

|

||||

run: |

|

||||

sed -i '1i DOC_ENGINE=infinity' docker/.env

|

||||

sed -i '1i DOC_ENGINE=elasticsearch' docker/.env

|

||||

sudo docker compose -f docker/docker-compose.yml -p ${GITHUB_RUN_ID} up -d

|

||||

|

||||

- name: Run sdk tests against Infinity

|

||||

- name: Run sdk tests against Elasticsearch

|

||||

run: |

|

||||

export http_proxy=""; export https_proxy=""; export no_proxy=""; export HTTP_PROXY=""; export HTTPS_PROXY=""; export NO_PROXY=""

|

||||

until sudo docker exec ${RAGFLOW_CONTAINER} curl -s --connect-timeout 5 ${HOST_ADDRESS} > /dev/null; do

|

||||

echo "Waiting for service to be available..."

|

||||

sleep 5

|

||||

done

|

||||

source .venv/bin/activate && DOC_ENGINE=infinity pytest -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_sdk_api 2>&1 | tee infinity_sdk_test.log

|

||||

source .venv/bin/activate && DOC_ENGINE=elasticsearch pytest -x -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_sdk_api 2>&1 | tee es_sdk_test.log

|

||||

|

||||

- name: Run frontend api tests against Infinity

|

||||

- name: Run frontend api tests against Elasticsearch

|

||||

run: |

|

||||

export http_proxy=""; export https_proxy=""; export no_proxy=""; export HTTP_PROXY=""; export HTTPS_PROXY=""; export NO_PROXY=""

|

||||

until sudo docker exec ${RAGFLOW_CONTAINER} curl -s --connect-timeout 5 ${HOST_ADDRESS} > /dev/null; do

|

||||

echo "Waiting for service to be available..."

|

||||

sleep 5

|

||||

done

|

||||

source .venv/bin/activate && DOC_ENGINE=infinity pytest -s --tb=short sdk/python/test/test_frontend_api/get_email.py sdk/python/test/test_frontend_api/test_dataset.py 2>&1 | tee infinity_api_test.log

|

||||

source .venv/bin/activate && DOC_ENGINE=elasticsearch pytest -x -s --tb=short sdk/python/test/test_frontend_api/get_email.py sdk/python/test/test_frontend_api/test_dataset.py 2>&1 | tee es_api_test.log

|

||||

|

||||

- name: Run http api tests against Infinity

|

||||

- name: Run http api tests against Elasticsearch

|

||||

run: |

|

||||

export http_proxy=""; export https_proxy=""; export no_proxy=""; export HTTP_PROXY=""; export HTTPS_PROXY=""; export NO_PROXY=""

|

||||

until sudo docker exec ${RAGFLOW_CONTAINER} curl -s --connect-timeout 5 ${HOST_ADDRESS} > /dev/null; do

|

||||

echo "Waiting for service to be available..."

|

||||

sleep 5

|

||||

done

|

||||

source .venv/bin/activate && DOC_ENGINE=infinity pytest -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_http_api 2>&1 | tee infinity_http_api_test.log

|

||||

source .venv/bin/activate && DOC_ENGINE=elasticsearch pytest -x -s --tb=short --level=${HTTP_API_TEST_LEVEL} test/testcases/test_http_api 2>&1 | tee es_http_api_test.log

|

||||

|

||||

- name: Stop ragflow:nightly

|

||||

if: always() # always run this step even if previous steps failed

|

||||

|

||||

@ -86,8 +86,9 @@ class Agent(LLM, ToolBase):

|

||||

self.tools = {}

|

||||

for idx, cpn in enumerate(self._param.tools):

|

||||

cpn = self._load_tool_obj(cpn)

|

||||

name = cpn.get_meta()["function"]["name"]

|

||||

self.tools[f"{name}_{idx}"] = cpn

|

||||

original_name = cpn.get_meta()["function"]["name"]

|

||||

indexed_name = f"{original_name}_{idx}"

|

||||

self.tools[indexed_name] = cpn

|

||||

|

||||

self.chat_mdl = LLMBundle(self._canvas.get_tenant_id(), TenantLLMService.llm_id2llm_type(self._param.llm_id), self._param.llm_id,

|

||||

max_retries=self._param.max_retries,

|

||||

@ -95,7 +96,12 @@ class Agent(LLM, ToolBase):

|

||||

max_rounds=self._param.max_rounds,

|

||||

verbose_tool_use=True

|

||||

)

|

||||

self.tool_meta = [v.get_meta() for _,v in self.tools.items()]

|

||||

self.tool_meta = []

|

||||

for indexed_name, tool_obj in self.tools.items():

|

||||

original_meta = tool_obj.get_meta()

|

||||

indexed_meta = deepcopy(original_meta)

|

||||

indexed_meta["function"]["name"] = indexed_name

|

||||

self.tool_meta.append(indexed_meta)

|

||||

|

||||

for mcp in self._param.mcp:

|

||||

_, mcp_server = MCPServerService.get_by_id(mcp["mcp_id"])
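The two `Agent` hunks above key each tool by an index-suffixed name and then write that name into a deep copy of the tool's metadata, so two tools that share a function name stay distinguishable while the tool's own metadata is left untouched. A minimal sketch of that idea follows; `FakeTool` and `index_tools` are hypothetical names used only for illustration and are not part of the RAGFlow code base.

```python
from copy import deepcopy


class FakeTool:
    """Hypothetical stand-in for a tool component; only get_meta() matters here."""

    def __init__(self, name: str):
        self._meta = {"type": "function", "function": {"name": name}}

    def get_meta(self) -> dict:
        return self._meta


def index_tools(tool_objs: list) -> tuple[dict, list]:
    """Key tools by '<name>_<idx>' and expose metadata under the same name."""
    tools = {}
    for idx, tool in enumerate(tool_objs):
        original_name = tool.get_meta()["function"]["name"]
        tools[f"{original_name}_{idx}"] = tool

    tool_meta = []
    for indexed_name, tool in tools.items():
        # Deep copy so the rename does not leak back into the tool's own metadata.
        indexed_meta = deepcopy(tool.get_meta())
        indexed_meta["function"]["name"] = indexed_name
        tool_meta.append(indexed_meta)
    return tools, tool_meta


tools, tool_meta = index_tools([FakeTool("search"), FakeTool("search")])
names = [m["function"]["name"] for m in tool_meta]
```

With the suffix applied once here, the name the model sees in the schema and the key used to look the tool up are the same string.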

|

||||

@ -109,7 +115,8 @@ class Agent(LLM, ToolBase):

|

||||

|

||||

def _load_tool_obj(self, cpn: dict) -> object:

|

||||

from agent.component import component_class

|

||||

param = component_class(cpn["component_name"] + "Param")()

|

||||

tool_name = cpn["component_name"]

|

||||

param = component_class(tool_name + "Param")()

|

||||

param.update(cpn["params"])

|

||||

try:

|

||||

param.check()

|

||||

@ -277,19 +284,15 @@ class Agent(LLM, ToolBase):

|

||||

else:

|

||||

user_request = history[-1]["content"]

|

||||

|

||||

def build_task_desc(prompt: str, user_request: str, tool_metas: list[dict], user_defined_prompt: dict | None = None) -> str:

|

||||

def build_task_desc(prompt: str, user_request: str, user_defined_prompt: dict | None = None) -> str:

|

||||

"""Build a minimal task_desc by concatenating prompt, query, and tool schemas."""

|

||||

user_defined_prompt = user_defined_prompt or {}

|

||||

|

||||

tools_json = json.dumps(tool_metas, ensure_ascii=False, indent=2)

|

||||

|

||||

task_desc = (

|

||||

"### Agent Prompt\n"

|

||||

f"{prompt}\n\n"

|

||||

"### User Request\n"

|

||||

f"{user_request}\n\n"

|

||||

"### Tools (schemas)\n"

|

||||

f"{tools_json}\n"

|

||||

)

|

||||

|

||||

if user_defined_prompt:

|

||||

@ -368,7 +371,7 @@ class Agent(LLM, ToolBase):

|

||||

hist.append({"role": "user", "content": content})

|

||||

|

||||

st = timer()

|

||||

task_desc = build_task_desc(prompt, user_request, tool_metas, user_defined_prompt)

|

||||

task_desc = build_task_desc(prompt, user_request, user_defined_prompt)

|

||||

self.callback("analyze_task", {}, task_desc, elapsed_time=timer()-st)

|

||||

for _ in range(self._param.max_rounds + 1):

|

||||

if self.check_if_canceled("Agent streaming"):

|

||||

|

||||

@ -56,7 +56,6 @@ class LLMParam(ComponentParamBase):

|

||||

self.check_nonnegative_number(int(self.max_tokens), "[Agent] Max tokens")

|

||||

self.check_decimal_float(float(self.top_p), "[Agent] Top P")

|

||||

self.check_empty(self.llm_id, "[Agent] LLM")

|

||||

self.check_empty(self.sys_prompt, "[Agent] System prompt")

|

||||

self.check_empty(self.prompts, "[Agent] User prompt")

|

||||

|

||||

def gen_conf(self):

|

||||

|

||||

@ -113,6 +113,10 @@ class LoopItem(ComponentBase, ABC):

|

||||

return len(var) == 0

|

||||

elif operator == "not empty":

|

||||

return len(var) > 0

|

||||

elif var is None:

|

||||

if operator == "empty":

|

||||

return True

|

||||

return False

|

||||

|

||||

raise Exception(f"Invalid operator: {operator}")

|

||||

|

||||

|

||||

@ -447,6 +447,26 @@ async def metadata_update():

|

||||

return get_json_result(data={"updated": updated, "matched_docs": len(target_doc_ids)})

|

||||

|

||||

|

||||

@manager.route("/update_metadata_setting", methods=["POST"]) # noqa: F821

|

||||

@login_required

|

||||

@validate_request("doc_id", "metadata")

|

||||

async def update_metadata_setting():

|

||||

req = await get_request_json()

|

||||

if not DocumentService.accessible(req["doc_id"], current_user.id):

|

||||

return get_json_result(data=False, message="No authorization.", code=RetCode.AUTHENTICATION_ERROR)

|

||||

|

||||

e, doc = DocumentService.get_by_id(req["doc_id"])

|

||||

if not e:

|

||||

return get_data_error_result(message="Document not found!")

|

||||

|

||||

DocumentService.update_parser_config(doc.id, {"metadata": req["metadata"]})

|

||||

e, doc = DocumentService.get_by_id(doc.id)

|

||||

if not e:

|

||||

return get_data_error_result(message="Document not found!")

|

||||

|

||||

return get_json_result(data=doc.to_dict())

|

||||

|

||||

|

||||

@manager.route("/thumbnails", methods=["GET"]) # noqa: F821

|

||||

# @login_required

|

||||

def thumbnails():

|

||||

|

||||

@ -33,6 +33,7 @@ from api.db.db_models import DB, Document, Knowledgebase, Task, Tenant, UserTena

|

||||

from api.db.db_utils import bulk_insert_into_db

|

||||

from api.db.services.common_service import CommonService

|

||||

from api.db.services.knowledgebase_service import KnowledgebaseService

|

||||

from common.metadata_utils import dedupe_list

|

||||

from common.misc_utils import get_uuid

|

||||

from common.time_utils import current_timestamp, get_format_time

|

||||

from common.constants import LLMType, ParserType, StatusEnum, TaskStatus, SVR_CONSUMER_GROUP_NAME

|

||||

@ -696,10 +697,14 @@ class DocumentService(CommonService):

|

||||

for k,v in r.meta_fields.items():

|

||||

if k not in meta:

|

||||

meta[k] = {}

|

||||

v = str(v)

|

||||

if v not in meta[k]:

|

||||

meta[k][v] = []

|

||||

meta[k][v].append(doc_id)

|

||||

if not isinstance(v, list):

|

||||

v = [v]

|

||||

for vv in v:

|

||||

if vv not in meta[k]:

|

||||

if isinstance(vv, list) or isinstance(vv, dict):

|

||||

continue

|

||||

meta[k][vv] = []

|

||||

meta[k][vv].append(doc_id)

|

||||

return meta

|

||||

|

||||

@classmethod
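The hunk above changes the metadata index so that a list-valued field contributes one entry per element instead of one entry for the stringified list. The sketch below illustrates that shape under assumptions: `build_meta_index` and its `(doc_id, meta_fields)` input are hypothetical, and the type check is placed before the dictionary lookup because testing a list or dict for membership in a `dict` raises `TypeError` in Python.

```python
def build_meta_index(docs) -> dict:
    """Map field -> value -> list of doc ids.

    `docs` is a hypothetical iterable of (doc_id, meta_fields) pairs.
    """
    meta = {}
    for doc_id, meta_fields in docs:
        for k, v in meta_fields.items():
            bucket = meta.setdefault(k, {})
            values = v if isinstance(v, list) else [v]
            for vv in values:
                # Nested lists and dicts are unhashable, so skip them
                # before they are ever used as a dictionary key.
                if isinstance(vv, (list, dict)):
                    continue
                bucket.setdefault(vv, []).append(doc_id)
    return meta


index = build_meta_index(
    [
        ("d1", {"tag": ["a", "b"], "year": 2024}),
        ("d2", {"tag": "a", "nested": [["x"]]}),
    ]
)
```

Values keep their native type as keys here (for example the integer `2024`), which matches the hunk dropping the earlier `str(v)` conversion.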

|

||||

@ -797,7 +802,10 @@ class DocumentService(CommonService):

|

||||

match_provided = "match" in upd

|

||||

if isinstance(meta[key], list):

|

||||

if not match_provided:

|

||||

meta[key] = new_value

|

||||

if isinstance(new_value, list):

|

||||

meta[key] = dedupe_list(new_value)

|

||||

else:

|

||||

meta[key] = new_value

|

||||

changed = True

|

||||

else:

|

||||

match_value = upd.get("match")

|

||||

@ -810,7 +818,7 @@ class DocumentService(CommonService):

|

||||

else:

|

||||

new_list.append(item)

|

||||

if replaced:

|

||||

meta[key] = new_list

|

||||

meta[key] = dedupe_list(new_list)

|

||||

changed = True

|

||||

else:

|

||||

if not match_provided:

|

||||

|

||||

@ -411,8 +411,6 @@ class KnowledgebaseService(CommonService):

|

||||

ok, _t = TenantService.get_by_id(tenant_id)

|

||||

if not ok:

|

||||

return False, get_data_error_result(message="Tenant not found.")

|

||||

if kwargs.get("parser_config") and isinstance(kwargs["parser_config"], dict) and not kwargs["parser_config"].get("llm_id"):

|

||||

kwargs["parser_config"]["llm_id"] = _t.llm_id

|

||||

|

||||

# Build payload

|

||||

kb_id = get_uuid()

|

||||

@ -427,6 +425,7 @@ class KnowledgebaseService(CommonService):

|

||||

|

||||

# Update parser_config (always override with validated default/merged config)

|

||||

payload["parser_config"] = get_parser_config(parser_id, kwargs.get("parser_config"))

|

||||

payload["parser_config"]["llm_id"] = _t.llm_id

|

||||

|

||||

return True, payload

|

||||

|

||||

|

||||

@ -117,6 +117,8 @@ class MemoryService(CommonService):

|

||||

if len(memory_name) > MEMORY_NAME_LIMIT:

|

||||

return False, f"Memory name {memory_name} exceeds limit of {MEMORY_NAME_LIMIT}."

|

||||

|

||||

timestamp = current_timestamp()

|

||||

format_time = get_format_time()

|

||||

# build create dict

|

||||

memory_info = {

|

||||

"id": get_uuid(),

|

||||

@ -126,10 +128,10 @@ class MemoryService(CommonService):

|

||||

"embd_id": embd_id,

|

||||

"llm_id": llm_id,

|

||||

"system_prompt": PromptAssembler.assemble_system_prompt({"memory_type": memory_type}),

|

||||

"create_time": current_timestamp(),

|

||||

"create_date": get_format_time(),

|

||||

"update_time": current_timestamp(),

|

||||

"update_date": get_format_time(),

|

||||

"create_time": timestamp,

|

||||

"create_date": format_time,

|

||||

"update_time": timestamp,

|

||||

"update_date": format_time,

|

||||

}

|

||||

obj = cls.model(**memory_info).save(force_insert=True)
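The `MemoryService` hunks above read the clock once and reuse the values, so the create and update fields of a new record cannot differ by a tick. A small sketch of the pattern, with hypothetical stand-ins for `current_timestamp` and `get_format_time` (the real helpers live in `common.time_utils` and may return different types):

```python
import time
from datetime import datetime


def current_timestamp() -> int:
    """Hypothetical stand-in: milliseconds since the epoch."""
    return int(time.time() * 1000)


def get_format_time() -> str:
    """Hypothetical stand-in: a formatted wall-clock time."""
    return datetime.now().strftime("%Y-%m-%d %H:%M:%S")


def build_time_fields() -> dict:
    # Read each clock value once and reuse it for both create and update fields.
    timestamp = current_timestamp()
    format_time = get_format_time()
    return {
        "create_time": timestamp,
        "create_date": format_time,
        "update_time": timestamp,
        "update_date": format_time,
    }


fields = build_time_fields()
```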

|

||||

|

||||

|

||||

@ -44,21 +44,27 @@ def meta_filter(metas: dict, filters: list[dict], logic: str = "and"):

|

||||

def filter_out(v2docs, operator, value):

|

||||

ids = []

|

||||

for input, docids in v2docs.items():

|

||||

|

||||

if operator in ["=", "≠", ">", "<", "≥", "≤"]:

|

||||

try:

|

||||

if isinstance(input, list):

|

||||

input = input[0]

|

||||

input = float(input)

|

||||

value = float(value)

|

||||

except Exception:

|

||||

input = str(input)

|

||||

value = str(value)

|

||||

pass

|

||||

if isinstance(input, str):

|

||||

input = input.lower()

|

||||

if isinstance(value, str):

|

||||

value = value.lower()

|

||||

|

||||

for conds in [

|

||||

(operator == "contains", str(value).lower() in str(input).lower()),

|

||||

(operator == "not contains", str(value).lower() not in str(input).lower()),

|

||||

(operator == "in", str(input).lower() in str(value).lower()),

|

||||

(operator == "not in", str(input).lower() not in str(value).lower()),

|

||||

(operator == "start with", str(input).lower().startswith(str(value).lower())),

|

||||

(operator == "end with", str(input).lower().endswith(str(value).lower())),

|

||||

(operator == "contains", input in value if not isinstance(input, list) else all([i in value for i in input])),

|

||||

(operator == "not contains", input not in value if not isinstance(input, list) else all([i not in value for i in input])),

|

||||

(operator == "in", input in value if not isinstance(input, list) else all([i in value for i in input])),

|

||||

(operator == "not in", input not in value if not isinstance(input, list) else all([i not in value for i in input])),

|

||||

(operator == "start with", str(input).lower().startswith(str(value).lower()) if not isinstance(input, list) else "".join([str(i).lower() for i in input]).startswith(str(value).lower())),

|

||||

(operator == "end with", str(input).lower().endswith(str(value).lower()) if not isinstance(input, list) else "".join([str(i).lower() for i in input]).endswith(str(value).lower())),

|

||||

(operator == "empty", not input),

|

||||

(operator == "not empty", input),

|

||||

(operator == "=", input == value),
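The new `start with` and `end with` conditions above treat a list-valued field by lower-casing its items and joining them without a separator before comparing. The sketch below isolates that behavior; `starts_with` and `ends_with` are hypothetical helper names, not functions from the repository.

```python
def _as_lower_text(field_value) -> str:
    """Lower-case a scalar, or join the lower-cased items of a list."""
    if isinstance(field_value, list):
        return "".join(str(i).lower() for i in field_value)
    return str(field_value).lower()


def starts_with(field_value, value) -> bool:
    return _as_lower_text(field_value).startswith(str(value).lower())


def ends_with(field_value, value) -> bool:
    return _as_lower_text(field_value).endswith(str(value).lower())
```

Because the items are joined with an empty string, a prefix or suffix can span item boundaries: `starts_with(["Ab", "cd"], "ABC")` is true even though no single item starts with `abc`.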

|

||||

@ -145,6 +151,18 @@ async def apply_meta_data_filter(

|

||||

return doc_ids

|

||||

|

||||

|

||||

def dedupe_list(values: list) -> list:

|

||||

seen = set()

|

||||

deduped = []

|

||||

for item in values:

|

||||

key = str(item)

|

||||

if key in seen:

|

||||

continue

|

||||

seen.add(key)

|

||||

deduped.append(item)

|

||||

return deduped

|

||||

|

||||

|

||||

def update_metadata_to(metadata, meta):

|

||||

if not meta:

|

||||

return metadata
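For reference, here is the `dedupe_list` helper from the hunk above with its indentation restored, followed by a short demonstration. The restored indentation is an assumption, since this page does not preserve leading whitespace. Note that items are compared by their string form, so `1` and `"1"` count as duplicates and the first one seen is kept.

```python
def dedupe_list(values: list) -> list:
    """Remove duplicates while preserving first-seen order."""
    seen = set()
    deduped = []
    for item in values:
        key = str(item)  # compare by string form, so 1 and "1" collide
        if key in seen:
            continue
        seen.add(key)
        deduped.append(item)
    return deduped


result = dedupe_list(["a", "b", "a", 1, "1"])
```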

|

||||

@ -156,11 +174,13 @@ def update_metadata_to(metadata, meta):

|

||||

return metadata

|

||||

if not isinstance(meta, dict):

|

||||

return metadata

|

||||

|

||||

for k, v in meta.items():

|

||||

if isinstance(v, list):

|

||||

v = [vv for vv in v if isinstance(vv, str)]

|

||||

if not v:

|

||||

continue

|

||||

v = dedupe_list(v)

|

||||

if not isinstance(v, list) and not isinstance(v, str):

|

||||

continue

|

||||

if k not in metadata:

|

||||

@ -171,6 +191,7 @@ def update_metadata_to(metadata, meta):

|

||||

metadata[k].extend(v)

|

||||

else:

|

||||

metadata[k].append(v)

|

||||

metadata[k] = dedupe_list(metadata[k])

|

||||

else:

|

||||

metadata[k] = v

|

||||

|

||||

@ -202,4 +223,4 @@ def metadata_schema(metadata: list|None) -> Dict[str, Any]:

|

||||

}

|

||||

|

||||

json_schema["additionalProperties"] = False

|

||||

return json_schema

|

||||

return json_schema

|

||||

|

||||

@ -1258,6 +1258,12 @@

|

||||

"status": "1",

|

||||

"rank": "810",

|

||||

"llm": [

|

||||

{

|

||||

"llm_name": "MiniMax-M2.1",

|

||||

"tags": "LLM,CHAT,200k",

|

||||

"max_tokens": 200000,

|

||||

"model_type": "chat"

|

||||

},

|

||||

{

|

||||

"llm_name": "MiniMax-M2",

|

||||

"tags": "LLM,CHAT,200k",

|

||||

|

||||

@ -1447,6 +1447,7 @@ class VisionParser(RAGFlowPdfParser):

|

||||

def __init__(self, vision_model, *args, **kwargs):

|

||||

super().__init__(*args, **kwargs)

|

||||

self.vision_model = vision_model

|

||||

self.outlines = []

|

||||

|

||||

def __images__(self, fnm, zoomin=3, page_from=0, page_to=299, callback=None):

|

||||

try:

|

||||

|

||||

@ -72,7 +72,7 @@ services:

|

||||

infinity:

|

||||

profiles:

|

||||

- infinity

|

||||

image: infiniflow/infinity:v0.6.11

|

||||

image: infiniflow/infinity:v0.6.13

|

||||

volumes:

|

||||

- infinity_data:/var/infinity

|

||||

- ./infinity_conf.toml:/infinity_conf.toml

|

||||

|

||||

@ -1,5 +1,5 @@

|

||||

[general]

|

||||

version = "0.6.11"

|

||||

version = "0.6.13"

|

||||

time_zone = "utc-8"

|

||||

|

||||

[network]

|

||||

|

||||

90

docs/guides/agent/agent_component_reference/http.md

Normal file

@ -0,0 +1,90 @@

---
sidebar_position: 30
slug: /http_request_component
---

# HTTP request component

A component that calls remote services.

---

An **HTTP request** component lets you access remote APIs or services by providing a URL and an HTTP method, and then receive the response. You can customize headers, parameters, proxies, and timeout settings, and use common methods like GET and POST. It’s useful for exchanging data with external systems in a workflow.

## Prerequisites

- An accessible remote API or service.
- If the target service requires authentication, add a token or credentials to the request header.

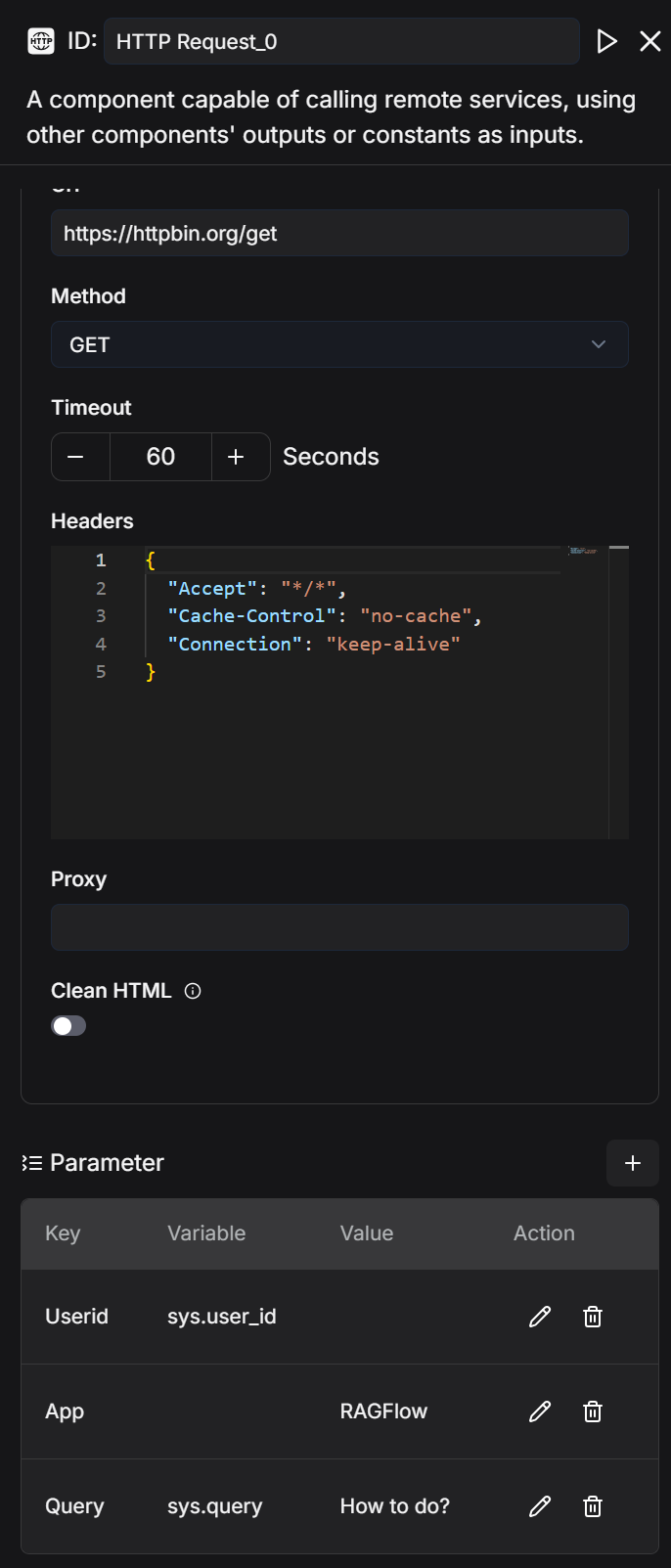

## Configurations

### Url

*Required*. The complete request address, for example: `http://api.example.com/data`.

### Method

The HTTP request method. Available options:

- GET
- POST
- PUT

### Timeout

The maximum waiting time for the request, in seconds. Defaults to `60`.

### Headers

Custom HTTP headers can be set here, for example:

```json
{
  "Accept": "application/json",
  "Cache-Control": "no-cache",
  "Connection": "keep-alive"
}
```

### Proxy

*Optional*. The proxy server address to use for this request.

### Clean HTML

`Boolean`: Whether to remove HTML tags from the returned results and keep plain text only.

### Parameter

*Optional*. Parameters to send with the HTTP request. Supports key-value pairs:

- To take the value from a dynamic system variable, set it as Variable.
- To override the dynamic value with a fixed static value, set it as Value.

:::tip NOTE
- For GET requests, these parameters are appended to the end of the URL.
- For POST/PUT requests, they are sent as the request body.
:::
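To make the GET case concrete, the sketch below shows how key-value parameters become a query string using Python's standard `urllib.parse.urlencode`. It is an illustration only: `build_get_url` is a hypothetical helper, and this is not necessarily how the component assembles its request. The parameter values are taken from the example response in this document.

```python
from urllib.parse import urlencode


def build_get_url(base_url: str, params: dict) -> str:
    """Append params to base_url as a URL-encoded query string."""
    if not params:
        return base_url
    # Use '&' if the URL already carries a query string, '?' otherwise.
    separator = "&" if "?" in base_url else "?"
    return f"{base_url}{separator}{urlencode(params)}"


url = build_get_url(
    "https://httpbin.org/get",
    {
        "Userid": "241ed25a8e1011f0b979424ebc5b108b",
        "App": "RAGFlow",
        "Query": "How to do?",
    },
)
```

Spaces are encoded as `+` and `?` as `%3F`, which is why the `Query` value appears as `How+to+do%3F` in the echoed URL.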

#### Example setting

#### Example response

```json
{ "args": { "App": "RAGFlow", "Query": "How to do?", "Userid": "241ed25a8e1011f0b979424ebc5b108b" }, "headers": { "Accept": "*/*", "Accept-Encoding": "gzip, deflate, br, zstd", "Cache-Control": "no-cache", "Host": "httpbin.org", "User-Agent": "python-requests/2.32.2", "X-Amzn-Trace-Id": "Root=1-68c9210c-5aab9088580c130a2f065523" }, "origin": "185.36.193.38", "url": "https://httpbin.org/get?Userid=241ed25a8e1011f0b979424ebc5b108b&App=RAGFlow&Query=How+to+do%3F" }
```

### Output

The global variable name for the output of the HTTP request component, which can be referenced by other components in the workflow.

- `Result`: `string` The response returned by the remote service.

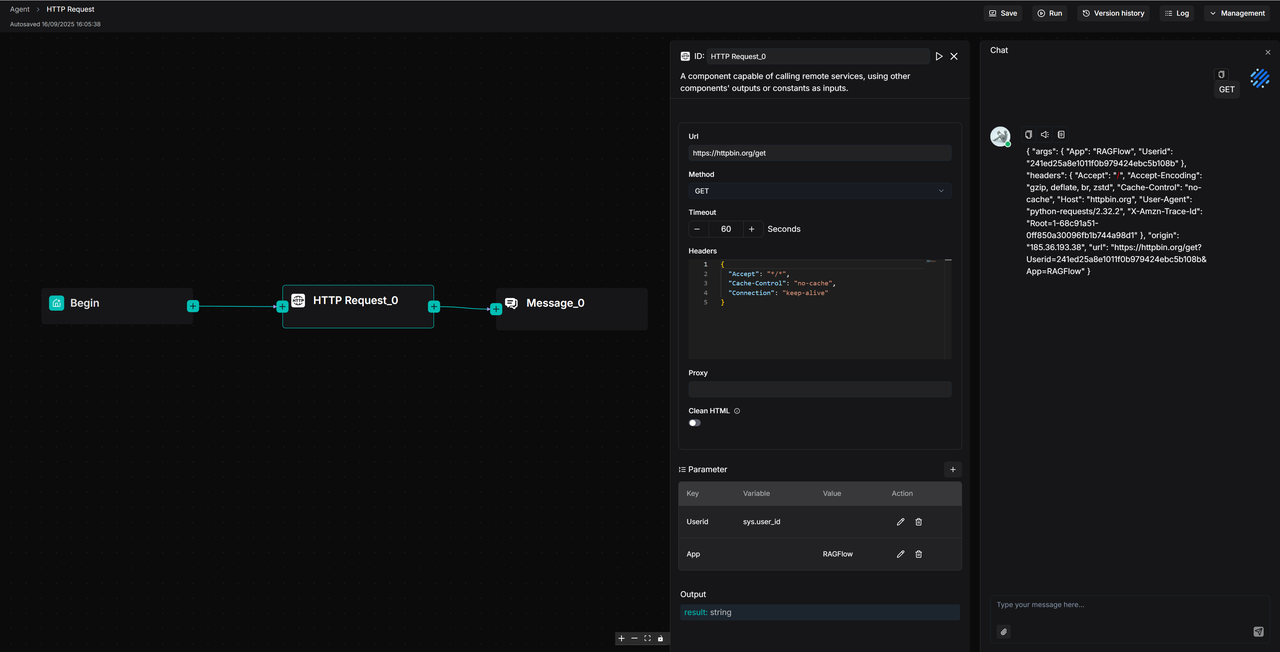

## Example

In this example, a workflow that starts at the **Begin** component sends a GET request to `https://httpbin.org/get` through the **HTTP Request_0** component, passing parameters to the server, and then outputs the result through the **Message_0** component.

@ -96,7 +96,7 @@ ragflow:

|

||||

infinity:

|

||||

image:

|

||||

repository: infiniflow/infinity

|

||||

tag: v0.6.11

|

||||

tag: v0.6.13

|

||||

pullPolicy: IfNotPresent

|

||||

pullSecrets: []

|

||||

storage:

|

||||

|

||||

@ -46,7 +46,7 @@ dependencies = [

|

||||

"groq==0.9.0",

|

||||

"grpcio-status==1.67.1",

|

||||

"html-text==0.6.2",

|

||||

"infinity-sdk==0.6.11",

|

||||

"infinity-sdk==0.6.13",

|

||||

"infinity-emb>=0.0.66,<0.0.67",

|

||||

"jira==3.10.5",

|

||||

"json-repair==0.35.0",

|

||||

|

||||

@ -37,7 +37,6 @@ from rag.app.naive import Docx

|

||||

from rag.flow.base import ProcessBase, ProcessParamBase

|

||||

from rag.flow.parser.schema import ParserFromUpstream

|

||||

from rag.llm.cv_model import Base as VLM

|

||||

from rag.nlp import attach_media_context

|

||||

from rag.utils.base64_image import image2id

|

||||

|

||||

|

||||

@ -86,8 +85,6 @@ class ParserParam(ProcessParamBase):

|

||||

"pdf",

|

||||

],

|

||||

"output_format": "json",

|

||||

"table_context_size": 0,

|

||||

"image_context_size": 0,

|

||||

},

|

||||

"spreadsheet": {

|

||||

"parse_method": "deepdoc", # deepdoc/tcadp_parser

|

||||

@ -97,8 +94,6 @@ class ParserParam(ProcessParamBase):

|

||||

"xlsx",

|

||||

"csv",

|

||||

],

|

||||

"table_context_size": 0,

|

||||

"image_context_size": 0,

|

||||

},

|

||||

"word": {

|

||||

"suffix": [

|

||||

@ -106,14 +101,10 @@ class ParserParam(ProcessParamBase):

|

||||

"docx",

|

||||

],

|

||||

"output_format": "json",

|

||||

"table_context_size": 0,

|

||||

"image_context_size": 0,

|

||||

},

|

||||

"text&markdown": {

|

||||

"suffix": ["md", "markdown", "mdx", "txt"],

|

||||

"output_format": "json",

|

||||

"table_context_size": 0,

|

||||

"image_context_size": 0,

|

||||

},

|

||||

"slides": {

|

||||

"parse_method": "deepdoc", # deepdoc/tcadp_parser

|

||||

@ -122,8 +113,6 @@ class ParserParam(ProcessParamBase):

|

||||

"ppt",

|

||||

],

|

||||

"output_format": "json",

|

||||

"table_context_size": 0,

|

||||

"image_context_size": 0,

|

||||

},

|

||||

"image": {

|

||||

"parse_method": "ocr",

|

||||

@ -357,11 +346,6 @@ class Parser(ProcessBase):

|

||||

elif layout == "table":

|

||||

b["doc_type_kwd"] = "table"

|

||||

|

||||

table_ctx = conf.get("table_context_size", 0) or 0

|

||||

image_ctx = conf.get("image_context_size", 0) or 0

|

||||

if table_ctx or image_ctx:

|

||||

bboxes = attach_media_context(bboxes, table_ctx, image_ctx)

|

||||

|

||||

if conf.get("output_format") == "json":

|

||||

self.set_output("json", bboxes)

|

||||

if conf.get("output_format") == "markdown":

|

||||

@ -436,11 +420,6 @@ class Parser(ProcessBase):

|

||||

if table:

|

||||

result.append({"text": table, "doc_type_kwd": "table"})

|

||||

|

||||

table_ctx = conf.get("table_context_size", 0) or 0

|

||||

image_ctx = conf.get("image_context_size", 0) or 0

|

||||

if table_ctx or image_ctx:

|

||||

result = attach_media_context(result, table_ctx, image_ctx)

|

||||

|

||||

self.set_output("json", result)

|

||||

|

||||

elif output_format == "markdown":

|

||||

@ -476,11 +455,6 @@ class Parser(ProcessBase):

|

||||

sections = [{"text": section[0], "image": section[1]} for section in sections if section]

|

||||

sections.extend([{"text": tb, "image": None, "doc_type_kwd": "table"} for ((_, tb), _) in tbls])

|

||||

|

||||

table_ctx = conf.get("table_context_size", 0) or 0

|

||||

image_ctx = conf.get("image_context_size", 0) or 0

|

||||

if table_ctx or image_ctx:

|

||||

sections = attach_media_context(sections, table_ctx, image_ctx)

|

||||

|

||||

self.set_output("json", sections)

|

||||

elif conf.get("output_format") == "markdown":

|

||||

markdown_text = docx_parser.to_markdown(name, binary=blob)

|

||||

@ -536,11 +510,6 @@ class Parser(ProcessBase):

|

||||

if table:

|

||||

result.append({"text": table, "doc_type_kwd": "table"})

|

||||

|

||||

table_ctx = conf.get("table_context_size", 0) or 0

|

||||

image_ctx = conf.get("image_context_size", 0) or 0

|

||||

if table_ctx or image_ctx:

|

||||

result = attach_media_context(result, table_ctx, image_ctx)

|

||||

|

||||

self.set_output("json", result)

|

||||

else:

|

||||

# Default DeepDOC parser (supports .pptx format)

|

||||

@ -554,10 +523,6 @@ class Parser(ProcessBase):

|

||||

# json

|

||||

assert conf.get("output_format") == "json", "have to be json for ppt"

|

||||

if conf.get("output_format") == "json":

|

||||

table_ctx = conf.get("table_context_size", 0) or 0

|

||||

image_ctx = conf.get("image_context_size", 0) or 0

|

||||

if table_ctx or image_ctx:

|

||||

sections = attach_media_context(sections, table_ctx, image_ctx)

|

||||

self.set_output("json", sections)

|

||||

|

||||

def _markdown(self, name, blob):

|

||||

@ -597,11 +562,6 @@ class Parser(ProcessBase):

|

||||

|

||||

json_results.append(json_result)

|

||||

|

||||

table_ctx = conf.get("table_context_size", 0) or 0

|

||||

image_ctx = conf.get("image_context_size", 0) or 0

|

||||

if table_ctx or image_ctx:

|

||||

json_results = attach_media_context(json_results, table_ctx, image_ctx)

|

||||

|

||||

self.set_output("json", json_results)

|

||||

else:

|

||||

self.set_output("text", "\n".join([section_text for section_text, _ in sections]))

|

||||

|

||||

@ -23,7 +23,7 @@ from rag.utils.base64_image import id2image, image2id

|

||||

from deepdoc.parser.pdf_parser import RAGFlowPdfParser

|

||||

from rag.flow.base import ProcessBase, ProcessParamBase

|

||||

from rag.flow.splitter.schema import SplitterFromUpstream

|

||||

from rag.nlp import naive_merge, naive_merge_with_images

|

||||

from rag.nlp import attach_media_context, naive_merge, naive_merge_with_images

|

||||

from common import settings

|

||||

|

||||

|

||||

@ -34,11 +34,15 @@ class SplitterParam(ProcessParamBase):

|

||||

self.delimiters = ["\n"]

|

||||

self.overlapped_percent = 0

|

||||

self.children_delimiters = []

|

||||

self.table_context_size = 0

|

||||

self.image_context_size = 0

|

||||

|

||||

def check(self):

|

||||

self.check_empty(self.delimiters, "Delimiters.")

|

||||

self.check_positive_integer(self.chunk_token_size, "Chunk token size.")

|

||||

self.check_decimal_float(self.overlapped_percent, "Overlapped percentage: [0, 1)")

|

||||

self.check_nonnegative_number(self.table_context_size, "Table context size.")

|

||||

self.check_nonnegative_number(self.image_context_size, "Image context size.")

|

||||

|

||||

def get_input_form(self) -> dict[str, dict]:

|

||||

return {}

|

||||

@ -103,8 +107,18 @@ class Splitter(ProcessBase):

|

||||

return

|

||||

|

||||

# json

|

||||

json_result = from_upstream.json_result or []

|

||||

if self._param.table_context_size or self._param.image_context_size:

|

||||

for ck in json_result:

|

||||

if "image" not in ck and ck.get("img_id") and not (isinstance(ck.get("text"), str) and ck.get("text").strip()):

|

||||

ck["image"] = True

|

||||

attach_media_context(json_result, self._param.table_context_size, self._param.image_context_size)

|

||||

for ck in json_result:

|

||||

if ck.get("image") is True:

|

||||

del ck["image"]

|

||||

|

||||

sections, section_images = [], []

|

||||

for o in from_upstream.json_result or []:

|

||||

for o in json_result:

|

||||

sections.append((o.get("text", ""), o.get("position_tag", "")))

|

||||

section_images.append(id2image(o.get("img_id"), partial(settings.STORAGE_IMPL.get, tenant_id=self._canvas._tenant_id)))

|

||||

|

||||

|

||||
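Review note: the Splitter hunk above sets a temporary `image` flag on chunks that have an `img_id` but no usable text, calls `attach_media_context`, then removes the flag. Below is a minimal sketch of that flag-then-clean-up pattern, assuming chunks are plain dicts. `attach_context_stub` is a hypothetical stand-in; the real behavior of `attach_media_context` is not shown in this diff.

```python
def mark_image_chunks(chunks):
    """Flag chunks that carry an image id but no usable text."""
    for ck in chunks:
        text = ck.get("text")
        has_text = isinstance(text, str) and bool(text.strip())
        if "image" not in ck and ck.get("img_id") and not has_text:
            ck["image"] = True


def unmark_image_chunks(chunks):
    """Remove only the temporary boolean flag; other 'image' values are kept."""
    for ck in chunks:
        if ck.get("image") is True:
            del ck["image"]


def attach_context_stub(chunks, table_context_size, image_context_size):
    """Hypothetical placeholder: report which chunk indexes are flagged as images."""
    return [i for i, ck in enumerate(chunks) if ck.get("image")]


chunks = [
    {"text": "Intro paragraph", "img_id": None},
    {"text": "   ", "img_id": "bucket-figure1"},
    {"text": "Caption text", "img_id": "bucket-figure2"},
]
mark_image_chunks(chunks)
seen_as_images = attach_context_stub(chunks, 0, 128)
unmark_image_chunks(chunks)
```

Checking `is True` in the clean-up step means a chunk that already had a non-boolean `image` value before the pass keeps it.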

@ -105,6 +105,9 @@ class Tokenizer(ProcessBase):

|

||||

|

||||

async def _invoke(self, **kwargs):

|

||||

try:

|

||||

chunks = kwargs.get("chunks")

|

||||

kwargs["chunks"] = [c for c in chunks if c is not None]

|

||||

|

||||

from_upstream = TokenizerFromUpstream.model_validate(kwargs)

|

||||

except Exception as e:

|

||||

self.set_output("_ERROR", f"Input error: {str(e)}")

|

||||

|

||||

@ -348,7 +348,8 @@ def tokenize_table(tbls, doc, eng, batch_size=10):

|

||||

d["doc_type_kwd"] = "table"

|

||||

if img:

|

||||

d["image"] = img

|

||||

d["doc_type_kwd"] = "image"

|

||||

if d["content_with_weight"].find("<tr>") < 0:

|

||||

d["doc_type_kwd"] = "image"

|

||||

if poss:

|

||||

add_positions(d, poss)

|

||||

res.append(d)

|

||||

@ -361,7 +362,8 @@ def tokenize_table(tbls, doc, eng, batch_size=10):

|

||||

d["doc_type_kwd"] = "table"

|

||||

if img:

|

||||

d["image"] = img

|

||||

d["doc_type_kwd"] = "image"

|

||||

if d["content_with_weight"].find("<tr>") < 0:

|

||||

d["doc_type_kwd"] = "image"

|

||||

add_positions(d, poss)

|

||||

res.append(d)

|

||||

return res

|

||||

|

||||

@ -339,7 +339,7 @@ def tool_schema(tools_description: list[dict], complete_task=False):

|

||||

}

|

||||

for idx, tool in enumerate(tools_description):

|

||||

name = tool["function"]["name"]

|

||||

desc[f"{name}_{idx}"] = tool

|

||||

desc[name] = tool

|

||||

|

||||

return "\n\n".join([f"## {i+1}. {fnm}\n{json.dumps(des, ensure_ascii=False, indent=4)}" for i, (fnm, des) in enumerate(desc.items())])

|

||||

|

||||

|

||||
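Review note: the `tool_schema` hunk above switches between two keying schemes, `desc[f"{name}_{idx}"]` and `desc[name]`. The sketch below shows how they differ when two tools share a function name. `render_tools` and its `key_by_index` switch are hypothetical names used only for this illustration; the join expression follows the `return` line in the hunk.

```python
import json


def render_tools(tools_description, key_by_index=False):
    """Build the numbered tool listing; key_by_index selects the suffixed keys."""
    desc = {}
    for idx, tool in enumerate(tools_description):
        name = tool["function"]["name"]
        key = f"{name}_{idx}" if key_by_index else name
        desc[key] = tool  # with plain names, a later duplicate overwrites the earlier one
    return "\n\n".join(
        f"## {i+1}. {fnm}\n{json.dumps(des, ensure_ascii=False, indent=4)}"
        for i, (fnm, des) in enumerate(desc.items())
    )


tools = [
    {"function": {"name": "search", "description": "first"}},
    {"function": {"name": "search", "description": "second"}},
]
by_name = render_tools(tools)
by_index = render_tools(tools, key_by_index=True)
```

Keying by name alone lists each tool under the exact name the model has to call, but it silently drops earlier tools with the same name. Index suffixes keep every entry but show names such as `search_0` that no tool is registered under.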

@ -94,7 +94,7 @@ This content will NOT be shown to the user.

|

||||

## Step 2: Structured Reflection (MANDATORY before `complete_task`)

|

||||

|

||||

### Context

|

||||

- Goal: {{ task_analysis }}

|

||||

- Goal: Reflect on the current task based on the full conversation context

|

||||

- Executed tool calls so far (if any): reflect from conversation history

|

||||

|

||||

### Task Complexity Assessment

|

||||

|

||||

@ -395,9 +395,9 @@ async def build_chunks(task, progress_callback):

|

||||

await asyncio.gather(*tasks, return_exceptions=True)

|

||||

raise

|

||||

metadata = {}

|

||||

for ck in cks:

|

||||

metadata = update_metadata_to(metadata, ck["metadata_obj"])

|

||||

del ck["metadata_obj"]

|

||||

for doc in docs:

|

||||

metadata = update_metadata_to(metadata, doc["metadata_obj"])

|

||||

del doc["metadata_obj"]

|

||||

if metadata:

|

||||

e, doc = DocumentService.get_by_id(task["doc_id"])

|

||||

if e:

|

||||

|

||||

@ -82,7 +82,7 @@ def id2image(image_id:str|None, storage_get_func: partial):

|

||||

return

|

||||

bkt, nm = image_id.split("-")

|

||||

try:

|

||||

blob = storage_get_func(bucket=bkt, filename=nm)

|

||||

blob = storage_get_func(bucket=bkt, fnm=nm)

|

||||

if not blob:

|

||||

return

|

||||

return Image.open(BytesIO(blob))

|

||||

|

||||
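Review note: the `id2image` hunk above changes only the keyword name passed to `storage_get_func` (`filename` versus `fnm`). Because the callable is a `functools.partial` invoked with keyword arguments, that name has to match the wrapped function's parameter. The sketch below assumes hypothetical `storage_get` and `legacy_get` functions; neither signature is taken from the repository. It splits with `maxsplit=1`, which is a choice made for this sketch and differs from the plain `split("-")` in the hunk.

```python
from functools import partial


def storage_get(bucket, fnm, tenant_id=None):
    """Hypothetical storage getter whose second parameter is named 'fnm'."""
    return f"{tenant_id}:{bucket}/{fnm}".encode()


def legacy_get(bucket, filename, tenant_id=None):
    """Hypothetical getter that still expects 'filename'."""
    return b""


def fetch_blob(image_id, storage_get_func):
    """Split a 'bucket-name' id and fetch the blob by keyword arguments."""
    if not image_id or "-" not in image_id:
        return None
    bkt, nm = image_id.split("-", 1)
    return storage_get_func(bucket=bkt, fnm=nm)


blob = fetch_blob("kb42-page_3", partial(storage_get, tenant_id="t1"))

try:
    fetch_blob("kb42-page_3", partial(legacy_get, tenant_id="t1"))
    mismatch_raised = False
except TypeError:
    # The callee has no 'fnm' parameter, so the keyword call is rejected.
    mismatch_raised = True
```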

8

uv.lock

generated

@ -3051,7 +3051,7 @@ wheels = [

|

||||

|

||||

[[package]]

|

||||

name = "infinity-sdk"

|

||||

version = "0.6.11"

|

||||

version = "0.6.13"

|

||||

source = { registry = "https://pypi.tuna.tsinghua.edu.cn/simple" }

|

||||

dependencies = [

|

||||

{ name = "datrie" },

|

||||

@ -3068,9 +3068,9 @@ dependencies = [

|

||||

{ name = "sqlglot", extra = ["rs"] },

|

||||

{ name = "thrift" },

|

||||

]

|

||||

sdist = { url = "https://pypi.tuna.tsinghua.edu.cn/packages/6e/6d/294b4c8fb36f874c92576107fab22da6d64f567fd3e24a312d7bcba5f17a/infinity_sdk-0.6.11.tar.gz", hash = "sha256:f78acd5439c3837715ab308c49be04b416bdaa42a5f4fb840682639ee39d435f", size = 29518792, upload-time = "2025-12-08T05:58:35.167Z" }

|

||||

sdist = { url = "https://pypi.tuna.tsinghua.edu.cn/packages/03/de/56fdc0fa962d5a8e0aa68d16f5321b2d88d79fceb7d0d6cfdde338b65d05/infinity_sdk-0.6.13.tar.gz", hash = "sha256:faf7bc23de7fa549a3842753eddad54ae551ada9df4fff25421658a7fa6fa8c2", size = 29518902, upload-time = "2025-12-24T10:00:01.483Z" }

|

||||

wheels = [

|

||||

{ url = "https://pypi.tuna.tsinghua.edu.cn/packages/18/cf/d7d1bc584c8f7d1cd8f75c39067179b98ca4bcbe5a86c61e7dbc2b8e692d/infinity_sdk-0.6.11-py3-none-any.whl", hash = "sha256:ec5ac3e710f29db4b875d3e24e20e391ad64270a5e5d189295cc91c362af74d1", size = 29737403, upload-time = "2025-12-08T05:54:58.798Z" },

|

||||

{ url = "https://pypi.tuna.tsinghua.edu.cn/packages/f4/a0/8f1e134fdf4ca8bebac7b62caace1816953bb5ffc720d9f0004246c8c38d/infinity_sdk-0.6.13-py3-none-any.whl", hash = "sha256:c08a523d2c27e9a7e6e88be640970530b4661a67c3e9dc3e1aa89533a822fd78", size = 29737403, upload-time = "2025-12-24T09:56:16.93Z" },

|

||||

]

|

||||

|

||||

[[package]]

|

||||

@ -6229,7 +6229,7 @@ requires-dist = [

|

||||

{ name = "grpcio-status", specifier = "==1.67.1" },

|

||||

{ name = "html-text", specifier = "==0.6.2" },

|

||||

{ name = "infinity-emb", specifier = ">=0.0.66,<0.0.67" },

|

||||

{ name = "infinity-sdk", specifier = "==0.6.11" },

|

||||

{ name = "infinity-sdk", specifier = "==0.6.13" },

|

||||

{ name = "jira", specifier = "==3.10.5" },

|

||||

{ name = "json-repair", specifier = "==0.35.0" },

|

||||

{ name = "langfuse", specifier = ">=2.60.0" },

|

||||

|

||||

@ -18,7 +18,9 @@ import { useFetchKnowledgeBaseConfiguration } from '@/hooks/use-knowledge-reques

|

||||

import { IModalProps } from '@/interfaces/common';

|

||||

import { IParserConfig } from '@/interfaces/database/document';

|

||||

import { IChangeParserConfigRequestBody } from '@/interfaces/request/document';

|

||||

import { MetadataType } from '@/pages/dataset/components/metedata/hooks/use-manage-modal';

|

||||

import {

|

||||

AutoMetadata,

|

||||

ChunkMethodItem,

|

||||

EnableTocToggle,

|

||||

ImageContextWindow,

|

||||

@ -86,6 +88,7 @@ export function ChunkMethodDialog({

|

||||

visible,

|

||||

parserConfig,

|

||||

loading,

|

||||

documentId,

|

||||

}: IProps) {

|

||||

const { t } = useTranslation();

|

||||

|

||||

@ -142,6 +145,18 @@ export function ChunkMethodDialog({

|

||||

pages: z

|

||||

.array(z.object({ from: z.coerce.number(), to: z.coerce.number() }))

|

||||

.optional(),

|

||||

metadata: z

|

||||

.array(

|

||||

z

|

||||

.object({

|

||||

key: z.string().optional(),

|

||||

description: z.string().optional(),

|

||||

enum: z.array(z.string().optional()).optional(),

|

||||

})

|

||||

.optional(),

|

||||

)

|

||||

.optional(),

|

||||

enable_metadata: z.boolean().optional(),

|

||||

}),

|

||||

})

|

||||

.superRefine((data, ctx) => {

|

||||

@ -373,6 +388,10 @@ export function ChunkMethodDialog({

|

||||

)}

|

||||

{showAutoKeywords(selectedTag) && (

|

||||

<>

|

||||

<AutoMetadata

|

||||

type={MetadataType.SingleFileSetting}

|

||||

otherData={{ documentId }}

|

||||

/>

|

||||

<AutoKeywordsFormField></AutoKeywordsFormField>

|

||||

<AutoQuestionsFormField></AutoQuestionsFormField>

|

||||

</>

|

||||

|

||||

@ -36,9 +36,11 @@ export function useDefaultParserValues() {

|

||||

// },

|

||||

entity_types: [],

|

||||

pages: [],

|

||||

metadata: [],

|

||||

enable_metadata: false,

|

||||

};

|

||||

|

||||

return defaultParserValues;

|

||||

return defaultParserValues as IParserConfig;

|

||||

}, [t]);

|

||||

|

||||

return defaultParserValues;

|

||||

|

||||

@ -35,6 +35,7 @@ import { cn } from '@/lib/utils';

|

||||

import { t } from 'i18next';

|

||||

import { Loader } from 'lucide-react';

|

||||

import { MultiSelect, MultiSelectOptionType } from './ui/multi-select';

|

||||

import { Switch } from './ui/switch';

|

||||

|

||||

// Field type enumeration

|

||||

export enum FormFieldType {

|

||||

@ -46,6 +47,7 @@ export enum FormFieldType {

|

||||

Select = 'select',

|

||||

MultiSelect = 'multi-select',

|

||||

Checkbox = 'checkbox',

|

||||

Switch = 'switch',

|

||||

Tag = 'tag',

|

||||

Custom = 'custom',

|

||||

}

|

||||

@ -154,6 +156,7 @@ export const generateSchema = (fields: FormFieldConfig[]): ZodSchema<any> => {

|

||||

}

|

||||

break;

|

||||

case FormFieldType.Checkbox:

|

||||

case FormFieldType.Switch:

|

||||

fieldSchema = z.boolean();

|

||||

break;

|

||||

case FormFieldType.Tag:

|

||||

@ -193,6 +196,8 @@ export const generateSchema = (fields: FormFieldConfig[]): ZodSchema<any> => {

|

||||

if (

|

||||

field.type !== FormFieldType.Number &&

|

||||

field.type !== FormFieldType.Checkbox &&

|

||||

field.type !== FormFieldType.Switch &&

|

||||

field.type !== FormFieldType.Custom &&

|

||||

field.type !== FormFieldType.Tag &&

|

||||

field.required

|

||||

) {

|

||||

@ -289,7 +294,10 @@ const generateDefaultValues = <T extends FieldValues>(

|

||||

const lastKey = keys[keys.length - 1];

|

||||

if (field.defaultValue !== undefined) {

|

||||

current[lastKey] = field.defaultValue;

|

||||

} else if (field.type === FormFieldType.Checkbox) {

|

||||

} else if (

|

||||

field.type === FormFieldType.Checkbox ||

|

||||

field.type === FormFieldType.Switch

|

||||

) {

|

||||

current[lastKey] = false;

|

||||

} else if (field.type === FormFieldType.Tag) {

|

||||

current[lastKey] = [];

|

||||

@ -299,7 +307,10 @@ const generateDefaultValues = <T extends FieldValues>(

|

||||

} else {

|

||||

if (field.defaultValue !== undefined) {

|

||||

defaultValues[field.name] = field.defaultValue;

|

||||

} else if (field.type === FormFieldType.Checkbox) {

|

||||

} else if (

|

||||

field.type === FormFieldType.Checkbox ||

|

||||

field.type === FormFieldType.Switch

|

||||

) {

|

||||

defaultValues[field.name] = false;

|

||||

} else if (

|

||||

field.type === FormFieldType.Tag ||

|

||||

@ -502,6 +513,32 @@ export const RenderField = ({

|

||||

)}

|

||||

/>

|

||||

);

|

||||

case FormFieldType.Switch:

|

||||

return (

|

||||

<RAGFlowFormItem

|

||||

{...field}

|

||||

labelClassName={labelClassName || field.labelClassName}

|

||||

>

|

||||

{(fieldProps) => {

|

||||

const finalFieldProps = field.onChange

|

||||

? {

|

||||

...fieldProps,

|

||||

onChange: (checked: boolean) => {

|

||||

fieldProps.onChange(checked);

|

||||

field.onChange?.(checked);

|

||||

},

|

||||

}

|

||||

: fieldProps;

|

||||

return (

|

||||

<Switch

|

||||

checked={finalFieldProps.value as boolean}

|

||||

onCheckedChange={(checked) => finalFieldProps.onChange(checked)}

|

||||

disabled={field.disabled}

|

||||

/>

|

||||

);

|

||||

}}

|

||||

</RAGFlowFormItem>

|

||||

);

|

||||

|

||||

case FormFieldType.Tag:

|

||||

return (

|

||||

|

||||

@ -15,6 +15,7 @@ import { Progress } from '@/components/ui/progress';

|

||||

import { useControllableState } from '@/hooks/use-controllable-state';

|

||||

import { cn, formatBytes } from '@/lib/utils';

|

||||

import { useTranslation } from 'react-i18next';

|

||||

import { Tooltip, TooltipContent, TooltipTrigger } from './ui/tooltip';

|

||||

|

||||

function isFileWithPreview(file: File): file is File & { preview: string } {

|

||||

return 'preview' in file && typeof file.preview === 'string';

|

||||

@ -58,10 +59,17 @@ function FileCard({ file, progress, onRemove }: FileCardProps) {

|

||||

</div>

|

||||

<div className="flex flex-col flex-1 gap-2 overflow-hidden">

|

||||

<div className="flex flex-col gap-px">

|

||||

<p className="line-clamp-1 text-sm font-medium text-foreground/80 text-ellipsis">

|

||||

{file.name}

|

||||

</p>

|

||||

<p className="text-xs text-muted-foreground">

|

||||

<Tooltip>

|

||||

<TooltipTrigger asChild>

|

||||

<p className=" w-fit line-clamp-1 text-sm font-medium text-foreground/80 text-ellipsis truncate max-w-[370px]">

|

||||

{file.name}

|

||||

</p>

|

||||

</TooltipTrigger>

|

||||

<TooltipContent className="border border-border-button">

|

||||

{file.name}

|

||||

</TooltipContent>

|

||||

</Tooltip>

|

||||

<p className="text-xs text-text-secondary">

|

||||

{formatBytes(file.size)}

|

||||

</p>

|

||||

</div>

|

||||

@ -311,7 +319,7 @@ export function FileUploader(props: FileUploaderProps) {

|

||||

/>

|

||||

</div>

|

||||

<div className="flex flex-col gap-px">

|

||||

<p className="font-medium text-text-secondary">

|

||||

<p className="font-medium text-text-secondary ">

|

||||

{title || t('knowledgeDetails.uploadTitle')}

|

||||

</p>

|

||||

<p className="text-sm text-text-disabled">

|

||||

|

||||

@ -31,14 +31,16 @@ const handleCheckChange = ({

|

||||

(value: string) => value !== item.id.toString(),

|

||||

);

|

||||

|

||||

const newValue = {

|

||||

...currentValue,

|

||||

[parentId]: newParentValues,

|

||||

};

|

||||

const newValue = newParentValues?.length

|

||||

? {

|

||||

...currentValue,

|

||||

[parentId]: newParentValues,

|

||||

}

|

||||

: { ...currentValue };

|

||||

|

||||

if (newValue[parentId].length === 0) {

|

||||

delete newValue[parentId];

|

||||

}

|

||||

// if (newValue[parentId].length === 0) {

|

||||

// delete newValue[parentId];

|

||||

// }

|

||||

|

||||

return field.onChange(newValue);

|

||||

} else {

|

||||

@ -66,20 +68,31 @@ const FilterItem = memo(

|

||||

}) => {

|

||||

return (

|

||||

<div

|

||||

className={`flex items-center justify-between text-text-primary text-xs ${level > 0 ? 'ml-4' : ''}`}

|

||||

className={`flex items-center justify-between text-text-primary text-xs ${level > 0 ? 'ml-1' : ''}`}

|

||||

>

|

||||

<FormItem className="flex flex-row space-x-3 space-y-0 items-center">

|

||||

<FormItem className="flex flex-row space-x-3 space-y-0 items-center ">

|

||||

<FormControl>

|

||||

<Checkbox

|

||||

checked={field.value?.includes(item.id.toString())}

|

||||

onCheckedChange={(checked: boolean) =>

|

||||

handleCheckChange({ checked, field, item })

|

||||

}

|

||||

/>

|

||||

<div className="flex space-x-3">

|

||||

<Checkbox

|

||||

checked={field.value?.includes(item.id.toString())}

|

||||

onCheckedChange={(checked: boolean) =>

|

||||

handleCheckChange({ checked, field, item })

|

||||

}

|

||||

// className="hidden group-hover:block"

|

||||

/>

|

||||

<FormLabel

|

||||

onClick={() =>

|

||||

handleCheckChange({

|

||||

checked: !field.value?.includes(item.id.toString()),

|

||||

field,

|

||||

item,

|

||||

})

|

||||

}

|

||||

>

|

||||

{item.label}

|

||||

</FormLabel>

|

||||

</div>

|

||||

</FormControl>

|

||||

<FormLabel onClick={(e) => e.stopPropagation()}>

|

||||

{item.label}

|

||||

</FormLabel>

|

||||

</FormItem>

|

||||

{item.count !== undefined && (

|

||||

<span className="text-sm">{item.count}</span>

|

||||

@ -107,11 +120,11 @@ export const FilterField = memo(

|

||||

<FormField

|

||||

key={item.id}

|

||||

control={form.control}

|

||||

name={parent.field as string}

|

||||

name={parent.field?.toString() as string}

|

||||

render={({ field }) => {

|

||||

if (hasNestedList) {

|

||||

return (

|

||||

<div className={`flex flex-col gap-2 ${level > 0 ? 'ml-4' : ''}`}>

|

||||

<div className={`flex flex-col gap-2 ${level > 0 ? 'ml-1' : ''}`}>

|

||||

<div

|

||||

className="flex items-center justify-between cursor-pointer"

|

||||

onClick={() => {

|

||||

@ -138,23 +151,6 @@ export const FilterField = memo(

|

||||

}}

|

||||

level={level + 1}

|

||||

/>

|

||||

// <FilterItem key={child.id} item={child} field={child.field} level={level+1} />

|

||||

// <div

|

||||

// className="flex flex-row space-x-3 space-y-0 items-center"

|

||||

// key={child.id}

|

||||

// >

|

||||

// <FormControl>

|

||||

// <Checkbox

|

||||

// checked={field.value?.includes(child.id.toString())}

|

||||

// onCheckedChange={(checked) =>

|

||||

// handleCheckChange({ checked, field, item: child })

|

||||

// }

|

||||

// />

|

||||

// </FormControl>

|

||||

// <FormLabel onClick={(e) => e.stopPropagation()}>

|

||||

// {child.label}

|

||||

// </FormLabel>

|

||||

// </div>

|

||||

))}

|

||||

</div>

|

||||

);

|

||||

|

||||

@ -11,8 +11,8 @@ import {

|

||||

useMemo,

|

||||

useState,

|

||||

} from 'react';

|

||||

import { useForm } from 'react-hook-form';

|

||||

import { ZodArray, ZodString, z } from 'zod';

|

||||

import { FieldPath, useForm } from 'react-hook-form';

|

||||

import { z } from 'zod';

|

||||

|

||||

import { Button } from '@/components/ui/button';

|

||||

|

||||

@ -71,34 +71,37 @@ function CheckboxFormMultiple({

|

||||

}, {});

|

||||

}, [resolvedFilters]);

|

||||

|

||||

// const FormSchema = useMemo(() => {

|

||||

// if (resolvedFilters.length === 0) {

|

||||

// return z.object({});

|

||||

// }

|

||||

|

||||

// return z.object(

|

||||

// resolvedFilters.reduce<

|

||||

// Record<

|

||||

// string,

|

||||

// ZodArray<ZodString, 'many'> | z.ZodObject<any> | z.ZodOptional<any>

|

||||

// >

|

||||

// >((pre, cur) => {

|

||||

// const hasNested = cur.list?.some(

|

||||

// (item) => item.list && item.list.length > 0,

|

||||

// );

|

||||

|

||||

// if (hasNested) {

|

||||

// pre[cur.field] = z

|

||||

// .record(z.string(), z.array(z.string().optional()).optional())

|

||||

// .optional();

|

||||

// } else {

|

||||

// pre[cur.field] = z.array(z.string().optional()).optional();

|

||||

// }

|

||||

|

||||

// return pre;

|

||||

// }, {}),

|

||||

// );

|

||||

// }, [resolvedFilters]);

|

||||

const FormSchema = useMemo(() => {

|

||||

if (resolvedFilters.length === 0) {

|

||||

return z.object({});

|

||||

}

|

||||

|

||||

return z.object(

|

||||

resolvedFilters.reduce<

|

||||

Record<

|

||||

string,

|

||||

ZodArray<ZodString, 'many'> | z.ZodObject<any> | z.ZodOptional<any>

|

||||

>

|

||||

>((pre, cur) => {

|

||||

const hasNested = cur.list?.some(

|

||||

(item) => item.list && item.list.length > 0,

|

||||

);

|

||||

|

||||

if (hasNested) {

|

||||

pre[cur.field] = z

|

||||

.record(z.string(), z.array(z.string().optional()).optional())

|

||||

.optional();

|

||||

} else {

|

||||

pre[cur.field] = z.array(z.string().optional()).optional();

|

||||

}

|

||||

|

||||

return pre;

|

||||

}, {}),

|

||||

);

|

||||

}, [resolvedFilters]);

|

||||

return z.object({});

|

||||

}, []);

|

||||

|

||||

const form = useForm<z.infer<typeof FormSchema>>({

|

||||

resolver: resolvedFilters.length > 0 ? zodResolver(FormSchema) : undefined,

|

||||

@ -178,7 +181,9 @@ function CheckboxFormMultiple({

|

||||

<FormField

|

||||

key={x.field}

|

||||

control={form.control}

|

||||

name={x.field}

|

||||

name={

|

||||

x.field.toString() as FieldPath<z.infer<typeof FormSchema>>

|

||||

}

|

||||

render={() => (

|

||||

<FormItem className="space-y-4">

|

||||

<div>

|

||||

@ -186,19 +191,20 @@ function CheckboxFormMultiple({

|

||||

{x.label}

|

||||

</FormLabel>

|

||||

</div>

|

||||

{x.list.map((item) => {

|

||||

return (

|

||||

<FilterField

|

||||

key={item.id}

|

||||

item={{ ...item }}

|

||||

parent={{

|

||||

...x,

|

||||

id: x.field,

|

||||

// field: `${x.field}${item.field ? '.' + item.field : ''}`,

|

||||

}}

|

||||

/>

|

||||

);

|

||||

})}

|

||||

{x.list?.length &&

|

||||

x.list.map((item) => {

|

||||

return (

|

||||

<FilterField

|

||||

key={item.id}

|

||||

item={{ ...item }}

|

||||

parent={{

|

||||

...x,

|

||||

id: x.field,

|

||||

// field: `${x.field}${item.field ? '.' + item.field : ''}`,

|

||||

}}

|

||||

/>

|

||||

);

|

||||

})}

|

||||

<FormMessage />

|

||||

</FormItem>

|

||||

)}

|

||||

|

||||

@ -14,6 +14,7 @@ import {

|

||||

IDocumentMetaRequestBody,

|

||||

} from '@/interfaces/request/document';

|

||||

import i18n from '@/locales/config';

|

||||

import { EMPTY_METADATA_FIELD } from '@/pages/dataset/dataset/use-select-filters';

|

||||

import kbService, { listDocument } from '@/services/knowledge-service';

|

||||

import api, { api_host } from '@/utils/api';

|

||||

import { buildChunkHighlights } from '@/utils/document-util';

|

||||

@ -114,6 +115,20 @@ export const useFetchDocumentList = () => {

|

||||

refetchInterval: isLoop ? 5000 : false,

|

||||

enabled: !!knowledgeId || !!id,

|

||||

queryFn: async () => {

|

||||

let run = [] as any;

|

||||

let returnEmptyMetadata = false;

|

||||

if (filterValue.run && Array.isArray(filterValue.run)) {

|

||||

run = [...(filterValue.run as string[])];

|

||||

const returnEmptyMetadataIndex = run.findIndex(

|

||||

(r: string) => r === EMPTY_METADATA_FIELD,

|

||||

);

|

||||

if (returnEmptyMetadataIndex > -1) {

|

||||

returnEmptyMetadata = true;

|

||||

run.splice(returnEmptyMetadataIndex, 1);

|

||||

}

|

||||

} else {

|

||||

run = filterValue.run;

|

||||

}

|

||||

const ret = await listDocument(

|

||||

{

|

||||

kb_id: knowledgeId || id,

|

||||

@ -123,7 +138,8 @@ export const useFetchDocumentList = () => {

|

||||

},

|

||||

{

|

||||

suffix: filterValue.type as string[],

|

||||

run_status: filterValue.run as string[],

|

||||

run_status: run as string[],

|

||||

return_empty_metadata: returnEmptyMetadata,

|

||||

metadata: filterValue.metadata as Record<string, string[]>,

|

||||

},

|

||||

);

|

||||

|

||||

@ -43,6 +43,18 @@ export interface IParserConfig {

|

||||

task_page_size?: number;

|

||||

raptor?: Raptor;

|

||||

graphrag?: GraphRag;

|

||||

image_context_window?: number;

|

||||

mineru_parse_method?: 'auto' | 'txt' | 'ocr';

|

||||

mineru_formula_enable?: boolean;

|

||||

mineru_table_enable?: boolean;

|

||||

mineru_lang?: string;

|

||||

entity_types?: string[];

|

||||

metadata?: Array<{

|

||||

key?: string;

|

||||

description?: string;

|

||||

enum?: string[];

|

||||

}>;

|

||||

enable_metadata?: boolean;

|

||||

}

|

||||

|

||||

interface Raptor {

|

||||

|

||||

@ -33,5 +33,6 @@ export interface IFetchKnowledgeListRequestParams {

|

||||

export interface IFetchDocumentListRequestBody {

|

||||

suffix?: string[];

|

||||

run_status?: string[];

|

||||

return_empty_metadata?: boolean;

|

||||

metadata?: Record<string, string[]>;

|

||||

}

|

||||

|

||||

@ -176,6 +176,15 @@ Procedural Memory: Learned skills, habits, and automated procedures.`,

|

||||

},

|

||||

knowledgeDetails: {

|

||||

metadata: {

|

||||

descriptionTip:

|

||||

'Provide descriptions or examples to guide the LLM in extracting values for this field. If left empty, the LLM relies on the field name.',

|

||||

restrictTDefinedValuesTip:

|

||||

'Enum Mode: Restricts LLM extraction to match preset values only. Define values below.',

|

||||

valueExists:

|

||||

'Value already exists. Confirm to merge duplicates and combine all associated files.',

|

||||

fieldNameExists:

|

||||

'Field name already exists. Confirm to merge duplicates and combine all associated files.',

|

||||

fieldExists: 'Field already exists.',

|

||||

fieldSetting: 'Field settings',

|

||||

changesAffectNewParses: 'Changes affect new parses only.',

|

||||

editMetadataForDataset: 'View and edit metadata for ',

|

||||

@ -185,12 +194,25 @@ Procedural Memory: Learned skills, habits, and automated procedures.`,

|

||||

manageMetadata: 'Manage metadata',

|

||||

metadata: 'Metadata',

|

||||

values: 'Values',

|

||||

value: 'Value',

|

||||

action: 'Action',

|

||||

field: 'Field',

|

||||

description: 'Description',

|

||||

fieldName: 'Field name',

|

||||

editMetadata: 'Edit metadata',

|

||||

deleteWarn: 'This {{field}} will be removed from all associated files',

|

||||

deleteManageFieldAllWarn:

|

||||

'This field and all its corresponding values will be deleted from all associated files.',

|

||||

deleteManageValueAllWarn:

|

||||

'This value will be deleted from all associated files.',

|

||||

deleteManageFieldSingleWarn:

|

||||

'This field and all its corresponding values will be deleted from this file.',

|

||||

deleteManageValueSingleWarn:

|

||||

'This value will be deleted from this file.',

|

||||

deleteSettingFieldWarn: `This field will be deleted; existing metadata won't be affected.`,

|

||||

deleteSettingValueWarn: `This value will be deleted; existing metadata won't be affected.`,

|

||||

},

|

||||

emptyMetadata: 'No metadata',

|

||||

metadataField: 'Metadata field',

|

||||

systemAttribute: 'System attribute',

|

||||

localUpload: 'Local upload',

|

||||

@ -334,9 +356,9 @@ Procedural Memory: Learned skills, habits, and automated procedures.`,

|

||||

html4excel: 'Excel to HTML',

|

||||

html4excelTip: `Use with the General chunking method. When disabled, spreadsheets (XLSX or XLS(Excel 97-2003)) in the knowledge base will be parsed into key-value pairs. When enabled, they will be parsed into HTML tables, splitting every 12 rows if the original table has more than 12 rows. See https://ragflow.io/docs/dev/enable_excel2html for details.`,

|

||||

autoKeywords: 'Auto-keyword',

|

||||