mirror of

https://github.com/infiniflow/ragflow.git

synced 2026-02-02 16:45:08 +08:00

Compare commits

3 Commits

205a5eb9f5

...

1c38f4cefb

| Author | SHA1 | Date |
|---|---|---|
| | 1c38f4cefb | |
| | 74c195cd36 | |
| | e48bec1cbf | |

@@ -26,7 +26,7 @@ from routes import admin_bp

from api.utils.log_utils import init_root_logger

from api.constants import SERVICE_CONF

from api import settings

-from admin.server.config import load_configurations, SERVICE_CONFIGS

+from config import load_configurations, SERVICE_CONFIGS

stop_event = threading.Event()

@@ -17,7 +17,7 @@

from flask import Blueprint, request

-from admin.server.auth import login_verify

+from auth import login_verify

from responses import success_response, error_response

from services import UserMgr, ServiceMgr, UserServiceMgr

from api.common.exceptions import AdminException

@@ -27,7 +27,7 @@ from api.utils.crypt import decrypt

from api.utils import health_utils

from api.common.exceptions import AdminException, UserAlreadyExistsError, UserNotFoundError

-from admin.server.config import SERVICE_CONFIGS

+from config import SERVICE_CONFIGS

class UserMgr:

@@ -1,5 +1,5 @@

---

-sidebar_position: -1

+sidebar_position: -10

slug: /configure_knowledge_base

---

@ -58,11 +58,8 @@ You can also change a file's chunking method on the **Files** page.

|

||||

|

||||

|

||||

|

||||

:::tip NOTE

|

||||

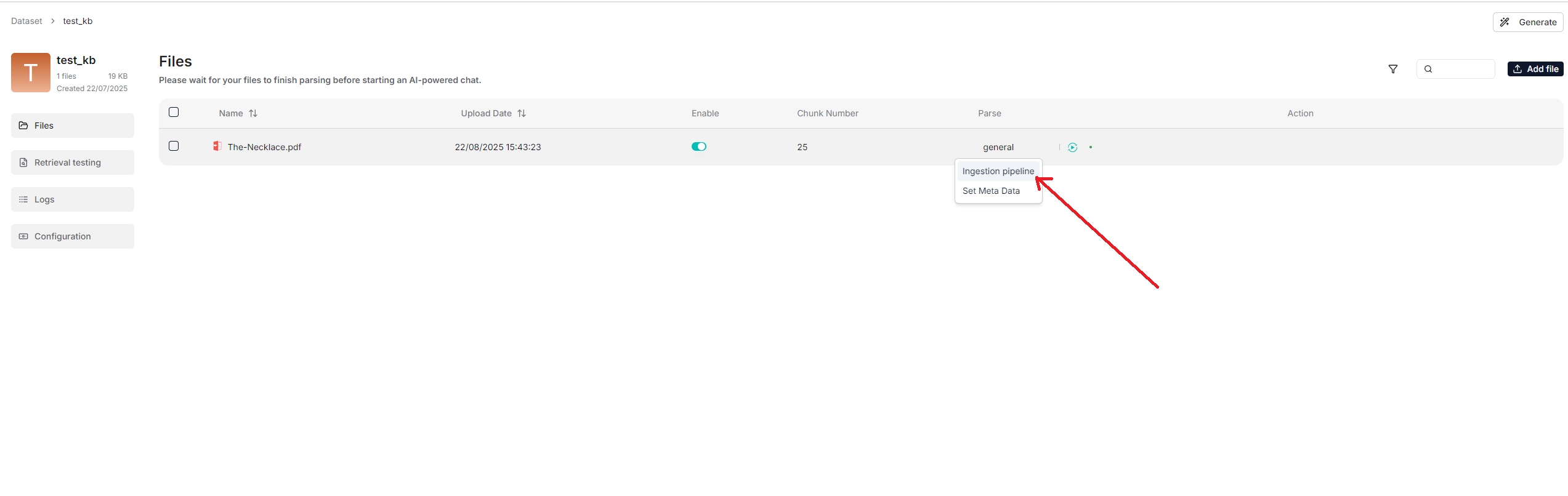

From v0.21.0, RAGFlow supports ingestion pipeline to allow for customized

|

||||

|

||||

<details>

|

||||

<summary>From v0.21.0 onward, RAGFlow supports ingestion pipeline to allow for customized data ingestion and cleansing workflows.</summary>

|

||||

<summary>From v0.21.0 onward, RAGFlow supports ingestion pipeline for customized data ingestion and cleansing workflows.</summary>

|

||||

|

||||

To use a customized data pipeline:

|

||||

|

||||

|

||||

39

docs/guides/dataset/extract_table_of_contents.md

Normal file


@@ -0,0 +1,39 @@

---

sidebar_position: 4

slug: /enable_table_of_contents

---

# Extract table of contents

Extract table of contents (TOC) from documents to provide long context RAG and improve retrieval.

---

During indexing, this technique uses LLM to extract and generate chapter information, which is added to each chunk to provide sufficient global context. At the retrieval stage, it first uses the chunks matched by search, then supplements missing chunks based on the table of contents structure. This addresses issues caused by chunk fragmentation and insufficient context, improving answer quality.
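The retrieval-stage behavior described above can be illustrated with a small sketch. This is not RAGFlow's implementation: the `Chunk` class, its `section` field, and the `supplement_by_toc` helper are hypothetical names introduced only to show the idea of pulling in unmatched chunks that share a TOC entry with a matched chunk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    chunk_id: int
    section: str  # hypothetical: the TOC entry assigned to the chunk at indexing time
    text: str


def supplement_by_toc(matched, all_chunks):
    """Return the matched chunks plus unmatched chunks from the same TOC sections."""
    sections = {c.section for c in matched}
    matched_ids = {c.chunk_id for c in matched}
    extra = [
        c for c in all_chunks
        if c.section in sections and c.chunk_id not in matched_ids
    ]
    # Restore document order so the supplemented context reads continuously.
    return sorted(list(matched) + extra, key=lambda c: c.chunk_id)


chunks = [
    Chunk(0, "1 Introduction", "Overview."),
    Chunk(1, "2 Installation", "Install the package."),
    Chunk(2, "2 Installation", "Then set the environment variables."),
    Chunk(3, "3 Usage", "Run the command."),
]

hits = [chunks[2]]  # pretend the search matched only the second half of section 2
result = supplement_by_toc(hits, chunks)
print([c.chunk_id for c in result])
```

In this toy data the search hit alone would lose the first half of the installation section; grouping by TOC entry brings it back.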

|

||||

|

||||

:::danger WARNING

|

||||

Enabling TOC extraction requires significant memory, computational resources, and tokens.

|

||||

:::

|

||||

|

||||

## Prerequisites

|

||||

|

||||

The system's default chat model is used to summarize clustered content. Before proceeding, ensure that you have a chat model properly configured:

|

||||

|

||||

|

||||

|

||||

## Quickstart

|

||||

|

||||

1. Navigate to the **Configuration** page.

|

||||

|

||||

2. Enable **TOC Enhance**.

|

||||

|

||||

3. To use this technique during retrieval, do either of the following:

|

||||

|

||||

- In the **Chat setting** panel of your chat app, switch on the **TOC Enhance** toggle.

|

||||

- If you are using an agent, click the **Retrieval** agent component to specify the dataset(s) and switch on the **TOC Enhance** toggle.

|

||||

|

||||

## Frequently asked questions

|

||||

|

||||

### Will previously parsed files be searched using the TOC enhancement feature once I enable `TOC Enhance`?

|

||||

|

||||

No. Only files parsed after you enable **TOC Enhance** will be searched using the TOC enhancement feature. To apply this feature to files parsed before enabling **TOC Enhance**, you must reparse them.

|

||||

@@ -1,5 +1,5 @@

---

-sidebar_position: 1

+sidebar_position: -4

slug: /select_pdf_parser

---

@@ -25,7 +25,7 @@ RAGFlow isn't one-size-fits-all. It is built for flexibility and supports deeper

- **One**

- To use a third-party visual model for parsing PDFs, ensure you have set a default img2txt model under **Set default models** on the **Model providers** page.

-## Procedure

+## Quickstart

1. On your dataset's **Configuration** page, select a chunking method, say **General**.

@@ -1,5 +1,5 @@

---

-sidebar_position: 0

+sidebar_position: -7

slug: /set_metada

---

@@ -1,5 +1,5 @@

---

-sidebar_position: 2

+sidebar_position: -2

slug: /set_page_rank

---

@@ -42,8 +42,8 @@ A tag set is *not* involved in document indexing or retrieval. Do not specify a

:::

1. Click **+ Create dataset** to create a dataset.

-2. Navigate to the **Configuration** page of the created dataset and choose **Tag** as the default chunking method.

-3. Navigate to the **Dataset** page and upload and parse your table file in XLSX, CSV, or TXT formats.

+2. Navigate to the **Configuration** page of the created dataset, select **Built-in** in **Ingestion pipeline**, then choose **Tag** as the default chunking method from the **Built-in** drop-down menu.

+3. Go back to the **Files** page and upload and parse your table file in XLSX, CSV, or TXT formats.

_A tag cloud appears under the **Tag view** section, indicating the tag set is created:_

4. Click the **Table** tab to view the tag frequency table:

@@ -17,6 +17,7 @@ import json

import logging

import re

import math

+import os

from collections import OrderedDict

from dataclasses import dataclass

@@ -154,7 +155,7 @@ class Dealer:

query_vector=q_vec,

aggregation=aggs,

highlight=highlight,

-field=self.dataStore.getFields(res, src),

+field=self.dataStore.getFields(res, src + ["_score"]),

keywords=keywords

)

@@ -354,10 +355,8 @@ class Dealer:

if not question:

return ranks

-RERANK_LIMIT = 64

-RERANK_LIMIT = int(RERANK_LIMIT//page_size + ((RERANK_LIMIT%page_size)/(page_size*1.) + 0.5)) * page_size if page_size>1 else 1

-if RERANK_LIMIT < 1: ## when page_size is very large the RERANK_LIMIT will be 0.

-RERANK_LIMIT = 1

+# Ensure RERANK_LIMIT is multiple of page_size

+RERANK_LIMIT = math.ceil(64/page_size) * page_size if page_size>1 else 1

req = {"kb_ids": kb_ids, "doc_ids": doc_ids, "page": math.ceil(page_size*page/RERANK_LIMIT), "size": RERANK_LIMIT,

"question": question, "vector": True, "topk": top,

"similarity": similarity_threshold,
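To see what the `RERANK_LIMIT` change in the hunk above does numerically, the sketch below evaluates the removed and the added expressions side by side for a few page sizes. The function names `rerank_limit_old`, `rerank_limit_new`, and `batch_page` are hypothetical wrappers introduced for this illustration; only the arithmetic inside them is copied from the hunk.

```python
import math

BASE_LIMIT = 64  # the constant that appears in both versions of the hunk


def rerank_limit_old(page_size):
    # Removed lines: round 64 to the nearest multiple of page_size, then clamp to 1.
    limit = BASE_LIMIT
    limit = int(limit // page_size + ((limit % page_size) / (page_size * 1.) + 0.5)) * page_size if page_size > 1 else 1
    if limit < 1:
        limit = 1
    return limit


def rerank_limit_new(page_size):
    # Added line: round 64 up to the next multiple of page_size.
    return math.ceil(BASE_LIMIT / page_size) * page_size if page_size > 1 else 1


def batch_page(page, page_size, limit):
    # The "page" value sent in the request: which batch of `limit` rows holds this page.
    return math.ceil(page_size * page / limit)


for page_size in (1, 10, 30, 64, 200):
    print(page_size, rerank_limit_old(page_size), rerank_limit_new(page_size))
```

For `page_size` values above 1, the added expression always yields a multiple of `page_size` that is at least 64, whereas the removed code could round down (60 for a page size of 10) or fall back to 1 when `page_size` was very large.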

@@ -376,15 +375,25 @@ class Dealer:

vector_similarity_weight,

rank_feature=rank_feature)

else:

-sim, tsim, vsim = self.rerank(

-sres, question, 1 - vector_similarity_weight, vector_similarity_weight,

-rank_feature=rank_feature)

+lower_case_doc_engine = os.getenv('DOC_ENGINE', 'elasticsearch')

+if lower_case_doc_engine == "elasticsearch":

+# ElasticSearch doesn't normalize each way score before fusion.

+sim, tsim, vsim = self.rerank(

+sres, question, 1 - vector_similarity_weight, vector_similarity_weight,

+rank_feature=rank_feature)

+else:

+# Don't need rerank here since Infinity normalizes each way score before fusion.

+sim = [sres.field[id].get("_score", 0.0) for id in sres.ids]

+tsim = sim

+vsim = sim

# Already paginated in search function

-idx = np.argsort(sim * -1)[(page - 1) * page_size:page * page_size]

+begin = ((page % (RERANK_LIMIT//page_size)) - 1) * page_size

+sim = sim[begin : begin + page_size]

+sim_np = np.array(sim)

+idx = np.argsort(sim_np * -1)

dim = len(sres.query_vector)

vector_column = f"q_{dim}_vec"

zero_vector = [0.0] * dim

-sim_np = np.array(sim)

filtered_count = (sim_np >= similarity_threshold).sum()

ranks["total"] = int(filtered_count) # Convert from np.int64 to Python int otherwise JSON serializable error

for i in idx:
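The new `begin` offset in the hunk above can be checked by hand with a short sketch. It assumes `page` is 1-based, which the removed `(page - 1) * page_size` slice suggests. `begin_as_written` copies the expression from the hunk; `begin_zero_based` is a hypothetical alternative shown only for comparison and is not part of the commit.

```python
def begin_as_written(page, page_size, rerank_limit):
    # Expression copied from the hunk.
    return ((page % (rerank_limit // page_size)) - 1) * page_size


def begin_zero_based(page, page_size, rerank_limit):
    # Hypothetical alternative: shift to a zero-based page index before the modulo.
    return ((page - 1) % (rerank_limit // page_size)) * page_size


batch = list(range(64))  # stand-in for one batch of 64 similarity scores

for page in (1, 2, 3):
    written = begin_as_written(page, 32, 64)
    shifted = begin_zero_based(page, 32, 64)
    print(page, written, len(batch[written:written + 32]), shifted, len(batch[shifted:shifted + 32]))
```

With two pages per batch, the expression as written gives an offset of 0 for page 1 but -32 for page 2, and the slice `batch[-32:0]` is empty. The same happens whenever `page` is an exact multiple of `RERANK_LIMIT // page_size`, so that case may be worth a test when reviewing this change.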

@@ -445,8 +445,8 @@ class InfinityConnection(DocStoreConnection):

self.connPool.release_conn(inf_conn)

res = concat_dataframes(df_list, output)

if matchExprs:

-res["Sum"] = res[score_column] + res[PAGERANK_FLD]

-res = res.sort_values(by="Sum", ascending=False).reset_index(drop=True).drop(columns=["Sum"])

+res["_score"] = res[score_column] + res[PAGERANK_FLD]

+res = res.sort_values(by="_score", ascending=False).reset_index(drop=True)

res = res.head(limit)

logger.debug(f"INFINITY search final result: {str(res)}")

return res, total_hits_count
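The effect of keeping the combined score as a `_score` column, instead of dropping the temporary `Sum` column, can be sketched in plain Python. The hunk itself operates on a pandas DataFrame; the version below uses a list of dicts so that it runs without third-party packages. The field names `"score"` and `"pagerank"` and the helper `fuse_and_rank` are assumptions made for this illustration, not names taken from the repository.

```python
SCORE_COLUMN = "score"      # hypothetical name for the engine's match score
PAGERANK_FLD = "pagerank"   # hypothetical name for the page rank field


def fuse_and_rank(rows, limit):
    """Add match score and page rank into `_score`, sort descending, keep `limit` rows."""
    ranked = [dict(r, _score=r[SCORE_COLUMN] + r[PAGERANK_FLD]) for r in rows]
    ranked.sort(key=lambda r: r["_score"], reverse=True)
    return ranked[:limit]


rows = [
    {"id": "a", "score": 0.5, "pagerank": 0.0},
    {"id": "b", "score": 0.25, "pagerank": 0.5},
    {"id": "c", "score": 0.125, "pagerank": 0.0},
]

top = fuse_and_rank(rows, limit=2)

# Because `_score` is kept on each row, a caller can read it back later,
# as the Dealer hunk does with `.get("_score", 0.0)`.
sim = [r.get("_score", 0.0) for r in top]
print([r["id"] for r in top], sim)
```

Keeping the column is what lets the search layer reuse the engine's fused score rather than recomputing similarities for engines that already normalize each score before fusion.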
