Mirror of https://github.com/infiniflow/ragflow.git (synced 2026-01-23 03:26:53 +08:00)

Miscellaneous UI updates (#6947)

### What problem does this PR solve?

### Type of change

- [x] Documentation Update

@@ -119,7 +119,7 @@ No, this feature is not supported.

Yes, we support enhancing user queries based on existing context of an ongoing conversation:

1. On the **Chat** page, hover over the desired assistant and select **Edit**.

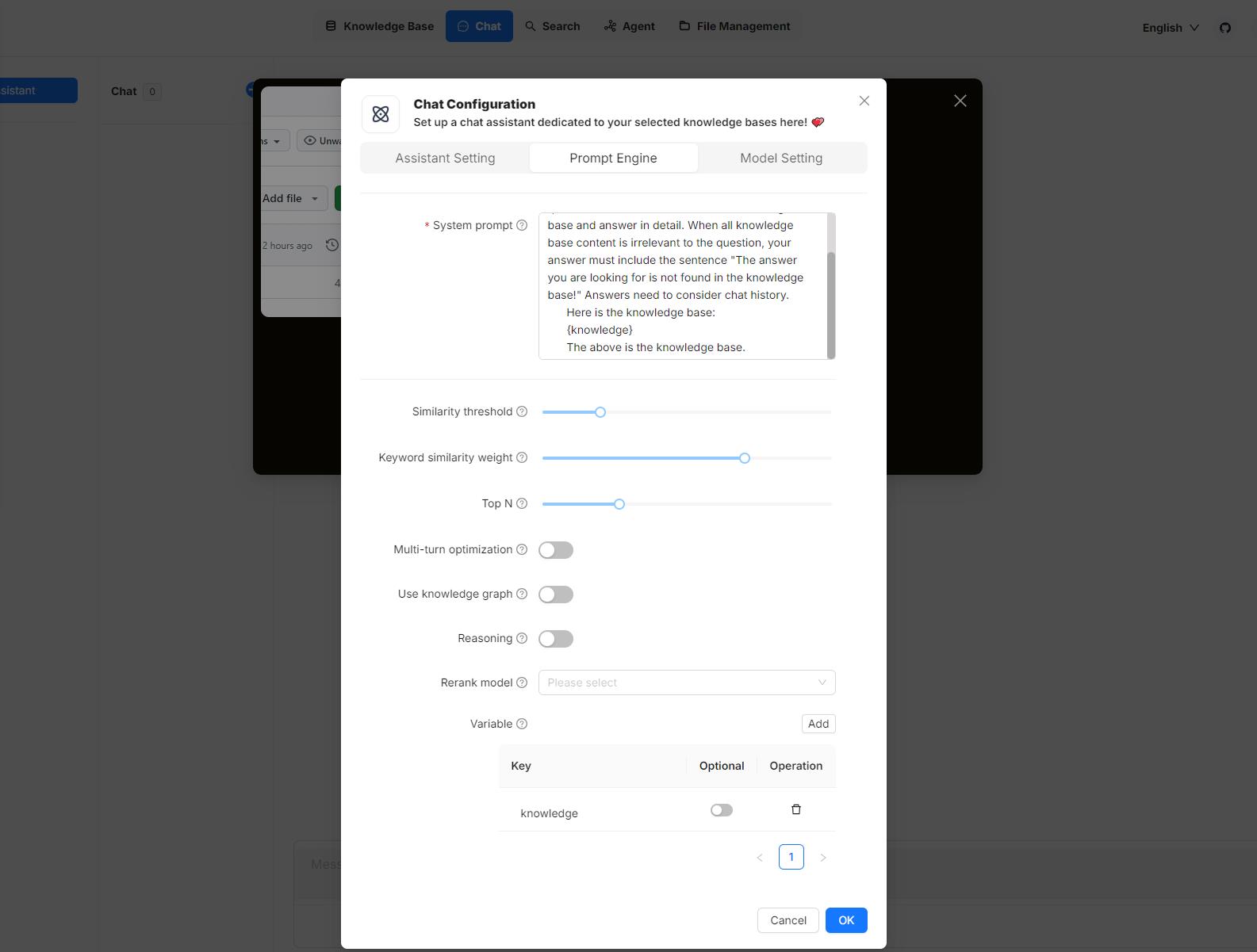

-2. In the **Chat Configuration** popup, click the **Prompt Engine** tab.
+2. In the **Chat Configuration** popup, click the **Prompt engine** tab.

3. Switch on **Multi-turn optimization** to enable this feature.

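Conceptually, multi-turn optimization rewrites the latest question into a standalone query using the conversation so far before retrieval runs. The sketch below illustrates that idea only, under the assumption that the rewrite is one extra LLM call; `optimize_query`, `build_rewrite_prompt`, the prompt wording, and the `llm` callable are hypothetical and are not RAGFlow's implementation.

```python
from typing import Callable, Sequence, Tuple

# One conversation turn as (role, content), e.g. ("user", "What is RAGFlow?").
Turn = Tuple[str, str]


def build_rewrite_prompt(history: Sequence[Turn], question: str) -> str:
    """Hypothetical prompt asking an LLM to turn the latest question into a standalone query."""
    transcript = [f"{role.upper()}: {content}" for role, content in history]
    transcript.append(f"USER: {question}")
    return (
        "Rewrite the last user question as a standalone search query, "
        "resolving pronouns and references from the conversation below.\n\n"
        + "\n".join(transcript)
        + "\n\nStandalone query:"
    )


def optimize_query(
    history: Sequence[Turn],
    question: str,
    llm: Callable[[str], str],
    enabled: bool = True,
) -> str:
    """Return the query that would be sent to retrieval."""
    # With the toggle off, or on the first turn, the question is used verbatim
    # and no extra LLM round trip is made.
    if not enabled or not history:
        return question
    return llm(build_rewrite_prompt(history, question)).strip()


if __name__ == "__main__":
    history = [("user", "What is RAGFlow?"), ("assistant", "An open-source RAG engine.")]
    # The lambda stands in for a real model call.
    print(optimize_query(history, "How do I deploy it?", lambda prompt: " How do I deploy RAGFlow? "))
```

Under this assumption, switching the feature off removes one model call per question, which is why it can shorten response time.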

---

@@ -419,7 +419,7 @@ It is not recommended to manually change the 32-file batch upload limit. However

This error occurs because there are too many chunks matching your search criteria. Try reducing the **TopN** and increasing **Similarity threshold** to fix this issue:

1. Click **Chat** in the middle top of the page.

-2. Right-click the desired conversation > **Edit** > **Prompt Engine**
+2. Right-click the desired conversation > **Edit** > **Prompt engine**

3. Reduce the **TopN** and/or raise **Similarity threshold**.

4. Click **OK** to confirm your changes.

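The two settings can be pictured as a filter followed by a cut-off: chunks scoring below **Similarity threshold** are discarded, and at most **TopN** of the remainder are passed on, so raising the threshold or lowering TopN both shrink what reaches the LLM. This is a simplified sketch assuming one precomputed similarity score per chunk; `Chunk` and `select_chunks` are hypothetical names, and RAGFlow's actual scoring and any reranking are not modeled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    text: str
    similarity: float  # assumed: a precomputed score in [0, 1]


def select_chunks(chunks: List[Chunk], similarity_threshold: float, top_n: int) -> List[Chunk]:
    """Keep chunks at or above the threshold, then return the top_n highest-scoring ones."""
    kept = [c for c in chunks if c.similarity >= similarity_threshold]
    kept.sort(key=lambda c: c.similarity, reverse=True)
    return kept[:top_n]


if __name__ == "__main__":
    pool = [Chunk("a", 0.91), Chunk("b", 0.12), Chunk("c", 0.55), Chunk("d", 0.31)]
    # 0.2 is the default Similarity threshold mentioned in the chat guide.
    print([c.text for c in select_chunks(pool, similarity_threshold=0.2, top_n=2)])  # prints ['a', 'c']
```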

@@ -12,8 +12,8 @@ A checklist to speed up question answering.

Please note that some of your settings may consume a significant amount of time. If you often find that your question answering is time-consuming, here is a checklist to consider:

-- In the **Prompt Engine** tab of your **Chat Configuration** dialogue, disabling **Multi-turn optimization** will reduce the time required to get an answer from the LLM.
-- In the **Prompt Engine** tab of your **Chat Configuration** dialogue, leaving the **Rerank model** field empty will significantly decrease retrieval time.
+- In the **Prompt engine** tab of your **Chat Configuration** dialogue, disabling **Multi-turn optimization** will reduce the time required to get an answer from the LLM.
+- In the **Prompt engine** tab of your **Chat Configuration** dialogue, leaving the **Rerank model** field empty will significantly decrease retrieval time.

- When using a rerank model, ensure you have a GPU for acceleration; otherwise, the reranking process will be *prohibitively* slow.

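The checklist above can be expressed as a small audit over an assistant's settings. This is a sketch only: the dictionary keys `multi_turn_optimization` and `rerank_model` and the `has_gpu` flag are hypothetical stand-ins for the UI fields, not RAGFlow configuration names.

```python
from typing import Any, Dict, List


def latency_checklist(config: Dict[str, Any], has_gpu: bool) -> List[str]:
    """Return hints for settings the checklist flags as time-consuming.

    The `config` keys are hypothetical stand-ins for fields on the Prompt engine tab.
    """
    hints: List[str] = []
    if config.get("multi_turn_optimization"):
        hints.append("Multi-turn optimization is on; disabling it shortens the time to an LLM answer.")
    if config.get("rerank_model"):
        hints.append("A rerank model is set; leaving the field empty speeds up retrieval.")
        if not has_gpu:
            hints.append("Reranking without GPU acceleration can be prohibitively slow.")
    return hints


if __name__ == "__main__":
    settings = {"multi_turn_optimization": True, "rerank_model": "some-reranker"}
    for hint in latency_checklist(settings, has_gpu=False):
        print("-", hint)
```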

:::tip NOTE

@@ -17,7 +17,7 @@ In RAGFlow, variables are closely linked with the system prompt. When you add a

## Where to set variables

-Hover your mouse over your chat assistant, click **Edit** to open its **Chat Configuration** dialogue, then click the **Prompt Engine** tab. Here, you can work on your variables in the **System prompt** field and the **Variable** section:
+Hover your mouse over your chat assistant, click **Edit** to open its **Chat Configuration** dialogue, then click the **Prompt engine** tab. Here, you can work on your variables in the **System prompt** field and the **Variable** section:

@@ -33,7 +33,7 @@ In the **Variable** section, you add, remove, or update variables.

It does not currently make a difference whether you set `{knowledge}` to optional or mandatory, but note that this design will be updated at a later point.

:::

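The link between the **System prompt** field and the **Variable** section can be sketched as plain placeholder substitution: every `{name}` in the prompt should be declared as a variable, and every declared variable should appear in the prompt. The sketch assumes that an optional variable without a value becomes an empty string while a mandatory one without a value is an error; `check_variables`, `render`, and that optional/mandatory behavior are illustrative assumptions, not RAGFlow's code.

```python
import re
from typing import Dict, Mapping, Set

# Matches placeholders such as {knowledge} or {style}.
PLACEHOLDER = re.compile(r"\{(\w+)\}")


def check_variables(system_prompt: str, declared: Mapping[str, bool]) -> Dict[str, Set[str]]:
    """Compare placeholders in the prompt with the declared variables.

    `declared` maps a variable name to True if it is optional (a hypothetical
    model of the Variable section).
    """
    used = set(PLACEHOLDER.findall(system_prompt))
    return {"undeclared": used - set(declared), "unused": set(declared) - used}


def render(system_prompt: str, values: Mapping[str, str], declared: Mapping[str, bool]) -> str:
    """Substitute values into the prompt; assumed: optional variables default to ''."""

    def fill(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name in values:
            return values[name]
        if declared.get(name, False):  # optional and not supplied
            return ""
        raise KeyError(f"no value for mandatory variable {name!r}")

    return PLACEHOLDER.sub(fill, system_prompt)


if __name__ == "__main__":
    prompt = "Your answers should follow a professional and {style} style.\nHere is the knowledge base:\n{knowledge}"
    declared = {"knowledge": False, "style": True}  # name -> optional?
    print(check_variables(prompt, declared))
    print(render(prompt, {"knowledge": "chunk 1\nchunk 2", "style": "concise"}, declared))
```

Running `check_variables` after every edit to the **Variable** section is one way to catch a prompt that still references a removed variable.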

-From v0.17.0 onward, you can start an AI chat without specifying knowledge bases. In this case, we recommend removing the `{knowledge}` variable to prevent unnecessary references and keeping the **Empty response** field empty to avoid errors.
+From v0.17.0 onward, you can start an AI chat without specifying knowledge bases. In this case, we recommend removing the `{knowledge}` variable to prevent unnecessary reference and keeping the **Empty response** field empty to avoid errors.

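The advice to leave **Empty response** blank when no knowledge base is attached can be illustrated with a short sketch. It assumes the assistant returns the **Empty response** text instead of calling the LLM whenever retrieval yields no chunks; without a knowledge base, retrieval always yields nothing, so under that assumption a non-empty value would stand in for every answer. `answer`, its parameters, and the prompt layout are hypothetical.

```python
from typing import Callable, Optional, Sequence


def answer(
    question: str,
    chunks: Sequence[str],
    empty_response: Optional[str],
    llm: Callable[[str], str],
) -> str:
    """Assumed flow: short-circuit to `empty_response` when nothing was retrieved."""
    if not chunks and empty_response:
        # With no knowledge base attached, `chunks` is always empty, so a
        # non-empty Empty response would be returned for every question.
        return empty_response
    if chunks:
        prompt = "Here is the knowledge base:\n" + "\n".join(chunks) + f"\n\nQuestion: {question}"
    else:
        prompt = question  # plain chat: the model answers without retrieved context
    return llm(prompt)
```

For example, `answer("Hi", [], "", my_llm)` reaches the model, while `answer("Hi", [], "Sorry, nothing found.", my_llm)` never does.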

### Custom variables

@@ -46,13 +46,15 @@ Besides `{knowledge}`, you can also define your own variables to pair with the s

## 2. Update system prompt

-After you add or remove variables in the **Variable** section, ensure your changes are reflected in the system prompt to avoid inconsistencies and errors. Here's an example:
+After you add or remove variables in the **Variable** section, ensure your changes are reflected in the system prompt to avoid inconsistencies or errors. Here's an example:

```
You are an intelligent assistant. Please answer the question by summarizing chunks from the specified knowledge base(s)...

Your answers should follow a professional and {style} style.

...

Here is the knowledge base:
{knowledge}
The above is the knowledge base.
```

@@ -19,7 +19,7 @@ You start an AI conversation by creating an assistant.

> RAGFlow offers you the flexibility of choosing a different chat model for each dialogue, while allowing you to set the default models in **System Model Settings**.

-2. Update **Assistant Settings**:
+2. Update **Assistant settings**:

- **Assistant name** is the name of your chat assistant. Each assistant corresponds to a dialogue with a unique combination of knowledge bases, prompts, hybrid search configurations, and large model settings.

- **Empty response**:

@@ -28,7 +28,7 @@ You start an AI conversation by creating an assistant.

- **Show quote**: This is a key feature of RAGFlow and enabled by default. RAGFlow does not work like a black box. Instead, it clearly shows the sources of information that its responses are based on.

- Select the corresponding knowledge bases. You can select one or multiple knowledge bases, but ensure that they use the same embedding model, otherwise an error would occur.

-3. Update **Prompt Engine**:
+3. Update **Prompt engine**:

- In **System**, you fill in the prompts for your LLM, you can also leave the default prompt as-is for the beginning.

- **Similarity threshold** sets the similarity "bar" for each chunk of text. The default is 0.2. Text chunks with lower similarity scores are filtered out of the final response.

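The requirement that all selected knowledge bases share one embedding model follows from how vector retrieval works: the question is embedded once and compared against every stored chunk vector, and vectors produced by different models live in different spaces (often with different dimensions), so their similarity scores are not comparable. The check below is a hypothetical pre-flight validation, not RAGFlow's code; `KnowledgeBase` and `shared_embedding_model` are illustrative names, and the model names are placeholders.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class KnowledgeBase:
    name: str
    embedding_model: str


def shared_embedding_model(kbs: Sequence[KnowledgeBase]) -> Optional[str]:
    """Return the single embedding model used by all selected knowledge bases.

    Returns None when nothing is selected; raises ValueError if models are mixed.
    """
    models = {kb.embedding_model for kb in kbs}
    if not models:
        return None  # no knowledge base selected: nothing to embed against
    if len(models) > 1:
        detail = ", ".join(f"{kb.name}={kb.embedding_model}" for kb in kbs)
        raise ValueError(f"knowledge bases use different embedding models: {detail}")
    return next(iter(models))


if __name__ == "__main__":
    manuals = KnowledgeBase("manuals", "embed-model-a")
    faq = KnowledgeBase("faq", "embed-model-a")
    print(shared_embedding_model([manuals, faq]))
```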

@@ -3,11 +3,11 @@ sidebar_position: 2

slug: /deploy_local_llm

---

-# Deploy LLM locally
+# Deploy local models

import Tabs from '@theme/Tabs';

import TabItem from '@theme/TabItem';

-Run models locally using Ollama, Xinference, or other frameworks.
+Deploy and run local models using Ollama, Xinference, or other frameworks.

---

@@ -5,7 +5,7 @@ slug: /join_or_leave_team

# Join or leave a team

-Accept a team invite to join a team, decline a team invite, or leave a team.
+Accept an invite to join a team, decline an invite, or leave a team.

---

@@ -23,7 +23,7 @@ You cannot invite users to a team unless you are its owner.

## Prerequisites

1. Ensure that your Email address that received the team invitation is associated with a RAGFlow user account.

-2. To view and update the team owner's shared knowledge base, the team owner must set a knowledge base's **Permissions** to **Team**.
+2. The team owner should share his knowledge bases by setting their **Permission** to **Team**.

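The second prerequisite amounts to a visibility rule: a team member can view and update an owner's knowledge base only if the owner has set its permission to **Team**. The sketch below models that rule in isolation; the `"me"`/`"team"` values, `SharedKB`, and `can_access` are hypothetical, and RAGFlow's real access checks may involve more than this.

```python
from dataclasses import dataclass
from typing import AbstractSet


@dataclass(frozen=True)
class SharedKB:
    owner: str
    permission: str  # hypothetical values: "me" (owner only) or "team"


def can_access(user: str, kb: SharedKB, joined_teams: AbstractSet[str]) -> bool:
    """Return True if `user` may view and update `kb`.

    `joined_teams` holds the owners whose team invites `user` has accepted
    (a hypothetical model of team membership).
    """
    if user == kb.owner:
        return True
    return kb.permission == "team" and kb.owner in joined_teams


if __name__ == "__main__":
    print(can_access("bob", SharedKB("alice", "team"), {"alice"}))
```

Note that both conditions must hold for a non-owner: accepting the invite alone does not expose knowledge bases left at the owner-only setting.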

## Accept or decline team invite

@@ -74,7 +74,7 @@ Released on March 3, 2025.

### New features

-- AI chat: Implements Deep Research for agentic reasoning. To activate this, enable the **Reasoning** toggle under the **Prompt Engine** tab of your chat assistant dialogue.
+- AI chat: Implements Deep Research for agentic reasoning. To activate this, enable the **Reasoning** toggle under the **Prompt engine** tab of your chat assistant dialogue.

- AI chat: Leverages Tavily-based web search to enhance contexts in agentic reasoning. To activate this, enter the correct Tavily API key under the **Assistant settings** tab of your chat assistant dialogue.

- AI chat: Supports starting a chat without specifying knowledge bases.

- AI chat: HTML files can also be previewed and referenced, in addition to PDF files.