mirror of

https://github.com/infiniflow/ragflow.git

synced 2026-01-30 23:26:36 +08:00

Docs: Knowledge base renamed to dataset. (#10269)

### What problem does this PR solve?

### Type of change

- [x] Documentation Update


@ -3,6 +3,6 @@

|

||||

"position": 0,

|

||||

"link": {

|

||||

"type": "generated-index",

|

||||

"description": "Guides on configuring a knowledge base."

|

||||

"description": "Guides on configuring a dataset."

|

||||

}

|

||||

}


@ -6,7 +6,7 @@ slug: /autokeyword_autoquestion

|

||||

# Auto-keyword Auto-question

|

||||

import APITable from '@site/src/components/APITable';

|

||||

|

||||

Use a chat model to generate keywords or questions from each chunk in the knowledge base.

|

||||

Use a chat model to generate keywords or questions from each chunk in the dataset.

|

||||

|

||||

---

|

||||

|

||||

@ -18,7 +18,7 @@ Enabling this feature increases document indexing time and uses extra tokens, as

|

||||

|

||||

## What is Auto-keyword?

|

||||

|

||||

Auto-keyword refers to the auto-keyword generation feature of RAGFlow. It uses a chat model to generate a set of keywords or synonyms from each chunk to correct errors and enhance retrieval accuracy. This feature is implemented as a slider under **Page rank** on the **Configuration** page of your knowledge base.

|

||||

Auto-keyword refers to the auto-keyword generation feature of RAGFlow. It uses a chat model to generate a set of keywords or synonyms from each chunk to correct errors and enhance retrieval accuracy. This feature is implemented as a slider under **Page rank** on the **Configuration** page of your dataset.

|

||||

|

||||

**Values**:

|

||||

|

||||

@ -33,7 +33,7 @@ Auto-keyword refers to the auto-keyword generation feature of RAGFlow. It uses a

|

||||

|

||||

## What is Auto-question?

|

||||

|

||||

Auto-question is a feature of RAGFlow that automatically generates questions from chunks of data using a chat model. These questions (e.g. who, what, and why) also help correct errors and improve the matching of user queries. The feature usually works with FAQ retrieval scenarios involving product manuals or policy documents. And you can find this feature as a slider under **Page rank** on the **Configuration** page of your knowledge base.

|

||||

Auto-question is a feature of RAGFlow that automatically generates questions from chunks of data using a chat model. These questions (e.g. who, what, and why) also help correct errors and improve the matching of user queries. The feature is typically used in FAQ retrieval scenarios involving product manuals or policy documents. You can find this feature as a slider under **Page rank** on the **Configuration** page of your dataset.

|

||||

|

||||

**Values**:

|

||||

|

||||

@ -48,7 +48,7 @@ Auto-question is a feature of RAGFlow that automatically generates questions fro

|

||||

|

||||

## Tips from the community

|

||||

|

||||

The Auto-keyword or Auto-question values relate closely to the chunking size in your knowledge base. However, if you are new to this feature and unsure which value(s) to start with, the following are some value settings we gathered from our community. While they may not be accurate, they provide a starting point at the very least.

|

||||

The Auto-keyword or Auto-question values relate closely to the chunking size in your dataset. However, if you are new to this feature and unsure which value(s) to start with, the following are some value settings we gathered from our community. While they may not be accurate, they provide a starting point at the very least.

|

||||

|

||||

```mdx-code-block

|

||||

<APITable>

|

||||

|

||||

@ -3,6 +3,6 @@

|

||||

"position": 11,

|

||||

"link": {

|

||||

"type": "generated-index",

|

||||

"description": "Best practices on configuring a knowledge base."

|

||||

"description": "Best practices on configuring a dataset."

|

||||

}

|

||||

}

|

||||

|

||||

@ -13,7 +13,7 @@ A checklist to speed up document parsing and indexing.

|

||||

Please note that some of your settings may consume a significant amount of time. If you often find that document parsing is time-consuming, here is a checklist to consider:

|

||||

|

||||

- Use GPU to reduce embedding time.

|

||||

- On the configuration page of your knowledge base, switch off **Use RAPTOR to enhance retrieval**.

|

||||

- On the configuration page of your dataset, switch off **Use RAPTOR to enhance retrieval**.

|

||||

- Extracting knowledge graph (GraphRAG) is time-consuming.

|

||||

- Disable **Auto-keyword** and **Auto-question** on the configuration page of your knowledge base, as both depend on the LLM.

|

||||

- **v0.17.0+:** If all PDFs in your knowledge base are plain text and do not require GPU-intensive processes like OCR (Optical Character Recognition), TSR (Table Structure Recognition), or DLA (Document Layout Analysis), you can choose **Naive** over **DeepDoc** or other time-consuming large model options in the **Document parser** dropdown. This will substantially reduce document parsing time.

|

||||

- Disable **Auto-keyword** and **Auto-question** on the configuration page of your dataset, as both depend on the LLM.

|

||||

- **v0.17.0+:** If all PDFs in your dataset are plain text and do not require GPU-intensive processes like OCR (Optical Character Recognition), TSR (Table Structure Recognition), or DLA (Document Layout Analysis), you can choose **Naive** over **DeepDoc** or other time-consuming large model options in the **Document parser** dropdown. This will substantially reduce document parsing time.

|

||||

|

||||

@ -3,28 +3,28 @@ sidebar_position: -1

|

||||

slug: /configure_knowledge_base

|

||||

---

|

||||

|

||||

# Configure knowledge base

|

||||

# Configure dataset

|

||||

|

||||

Knowledge base, hallucination-free chat, and file management are the three pillars of RAGFlow. RAGFlow's AI chats are based on knowledge bases. Each of RAGFlow's knowledge bases serves as a knowledge source, *parsing* files uploaded from your local machine and file references generated in **File Management** into the real 'knowledge' for future AI chats. This guide demonstrates some basic usages of the knowledge base feature, covering the following topics:

|

||||

Most of RAGFlow's chat assistants and Agents are based on datasets. Each of RAGFlow's datasets serves as a knowledge source, *parsing* files uploaded from your local machine and file references generated in **File Management** into the real 'knowledge' for future AI chats. This guide demonstrates the basic usage of the dataset feature, covering the following topics:

|

||||

|

||||

- Create a knowledge base

|

||||

- Configure a knowledge base

|

||||

- Search for a knowledge base

|

||||

- Delete a knowledge base

|

||||

- Create a dataset

|

||||

- Configure a dataset

|

||||

- Search for a dataset

|

||||

- Delete a dataset

|

||||

|

||||

## Create knowledge base

|

||||

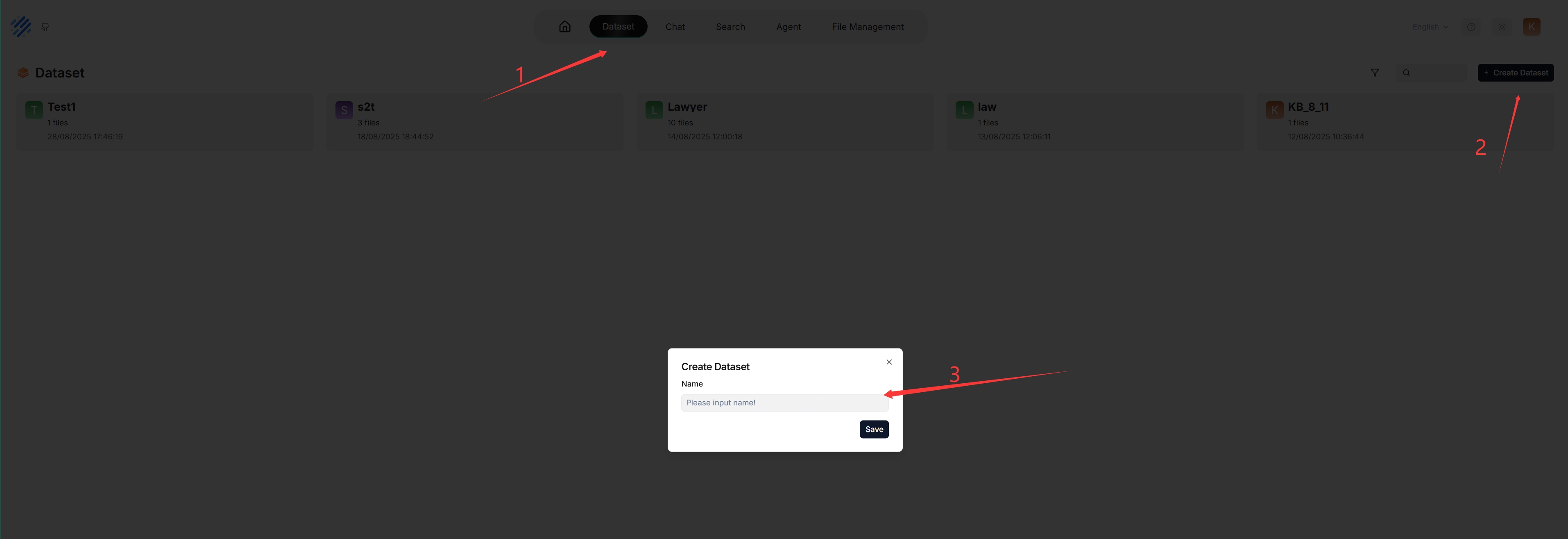

## Create dataset

|

||||

|

||||

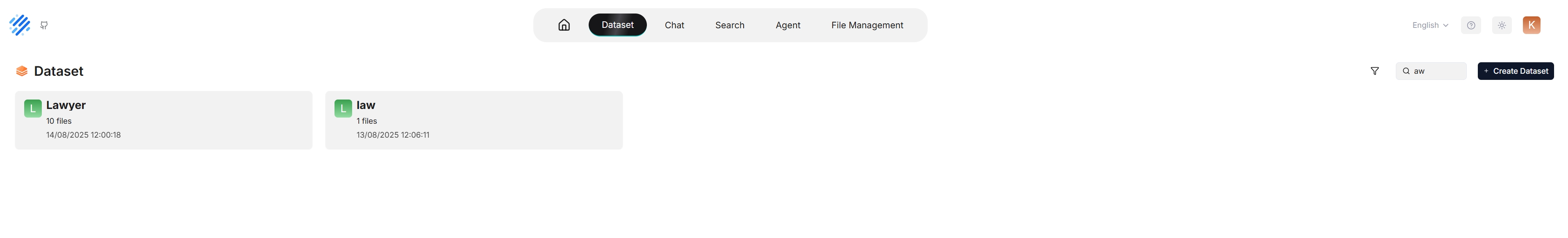

With multiple knowledge bases, you can build more flexible, diversified question answering. To create your first knowledge base:

|

||||

With multiple datasets, you can build more flexible, diversified question answering. To create your first dataset:


_Each time a knowledge base is created, a folder with the same name is generated in the **root/.knowledgebase** directory._

|

||||

_Each time a dataset is created, a folder with the same name is generated in the **root/.knowledgebase** directory._

|

||||

|

||||

## Configure knowledge base

|

||||

## Configure dataset

|

||||

|

||||

The following screenshot shows the configuration page of a knowledge base. A proper configuration of your knowledge base is crucial for future AI chats. For example, choosing the wrong embedding model or chunking method would cause unexpected semantic loss or mismatched answers in chats.

|

||||

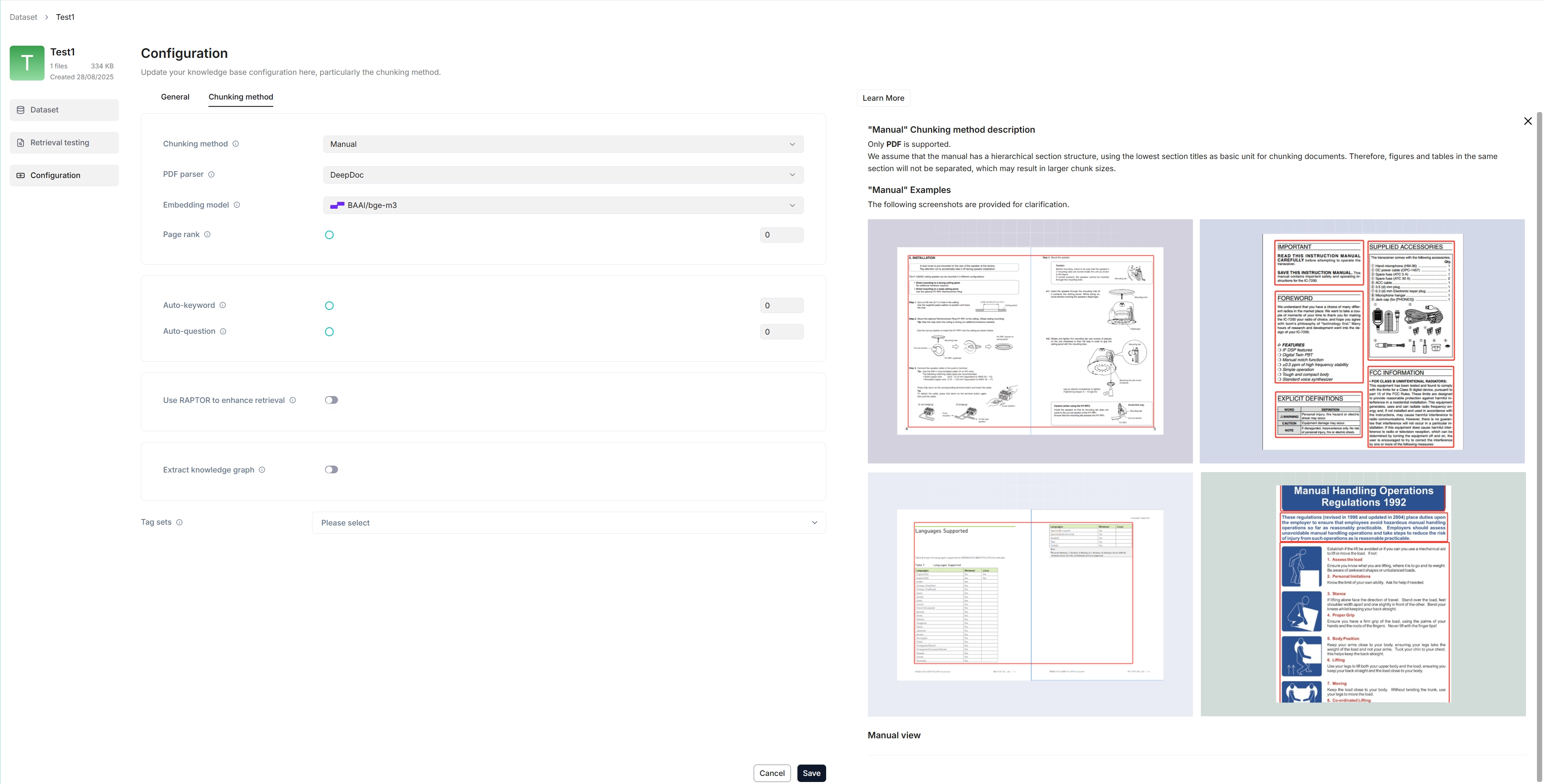

The following screenshot shows the configuration page of a dataset. A proper configuration of your dataset is crucial for future AI chats. For example, choosing the wrong embedding model or chunking method would cause unexpected semantic loss or mismatched answers in chats.


This section covers the following topics:

|

||||

|

||||

@ -52,7 +52,7 @@ RAGFlow offers multiple chunking template to facilitate chunking files of differ

|

||||

| Presentation | | PDF, PPTX |

|

||||

| Picture | | JPEG, JPG, PNG, TIF, GIF |

|

||||

| One | Each document is chunked in its entirety (as one). | DOCX, XLSX, XLS (Excel 97-2003), PDF, TXT |

|

||||

| Tag | The knowledge base functions as a tag set for the others. | XLSX, CSV/TXT |

|

||||

| Tag | The dataset functions as a tag set for the others. | XLSX, CSV/TXT |

|

||||

|

||||

You can also change a file's chunking method on the **Datasets** page.

|

||||

|

||||

@ -60,7 +60,7 @@ You can also change a file's chunking method on the **Datasets** page.

|

||||

|

||||

### Select embedding model

|

||||

|

||||

An embedding model converts chunks into embeddings. It cannot be changed once the knowledge base has chunks. To switch to a different embedding model, you must delete all existing chunks in the knowledge base. The obvious reason is that we *must* ensure that files in a specific knowledge base are converted to embeddings using the *same* embedding model (ensure that they are compared in the same embedding space).

|

||||

An embedding model converts chunks into embeddings. It cannot be changed once the dataset has chunks. To switch to a different embedding model, you must delete all existing chunks in the dataset. The obvious reason is that we *must* ensure that files in a specific dataset are converted to embeddings using the *same* embedding model (ensure that they are compared in the same embedding space).
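The "same embedding space" requirement can be illustrated with a minimal sketch. The `cosine_similarity` helper below is hypothetical and not part of RAGFlow; it only shows that a similarity score is undefined when two vectors have different dimensions, which is a common symptom of mixing embedding models. Note that vectors of equal dimension produced by different models are still not comparable, because their axes carry different meanings.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors that must share one dimension."""
    if len(a) != len(b):
        # Different lengths mean the vectors cannot be compared at all.
        raise ValueError(
            f"dimension mismatch: {len(a)} vs {len(b)}; "
            "were the chunks embedded with different models?"
        )
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Guard against zero vectors to avoid division by zero.
    return dot / norm if norm else 0.0


# Two vectors assumed to come from the same (hypothetical) model.
same_model = cosine_similarity([1.0, 0.0, 0.0], [1.0, 0.0, 0.0])

# A 3-dimensional and a 4-dimensional vector cannot be compared.
try:
    cosine_similarity([1.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0])
    mismatch_detected = False
except ValueError:
    mismatch_detected = True
```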

|

||||

|

||||

The following embedding models can be deployed locally:

|

||||

|

||||

@ -73,19 +73,19 @@ These two embedding models are optimized specifically for English and Chinese, s

|

||||

|

||||

### Upload file

|

||||

|

||||

- RAGFlow's **File Management** allows you to link a file to multiple knowledge bases, in which case each target knowledge base holds a reference to the file.

|

||||

- In **Knowledge Base**, you are also given the option of uploading a single file or a folder of files (bulk upload) from your local machine to a knowledge base, in which case the knowledge base holds file copies.

|

||||

- RAGFlow's **File Management** allows you to link a file to multiple datasets, in which case each target dataset holds a reference to the file.

|

||||

- In **Knowledge Base**, you are also given the option of uploading a single file or a folder of files (bulk upload) from your local machine to a dataset, in which case the dataset holds file copies.

|

||||

|

||||

While uploading files directly to a knowledge base seems more convenient, we *highly* recommend uploading files to **File Management** and then linking them to the target knowledge bases. This way, you can avoid permanently deleting files uploaded to the knowledge base.

|

||||

While uploading files directly to a dataset seems more convenient, we *highly* recommend uploading files to **File Management** and then linking them to the target datasets. This way, you can avoid permanently deleting files uploaded to the dataset.

|

||||

|

||||

### Parse file

|

||||

|

||||

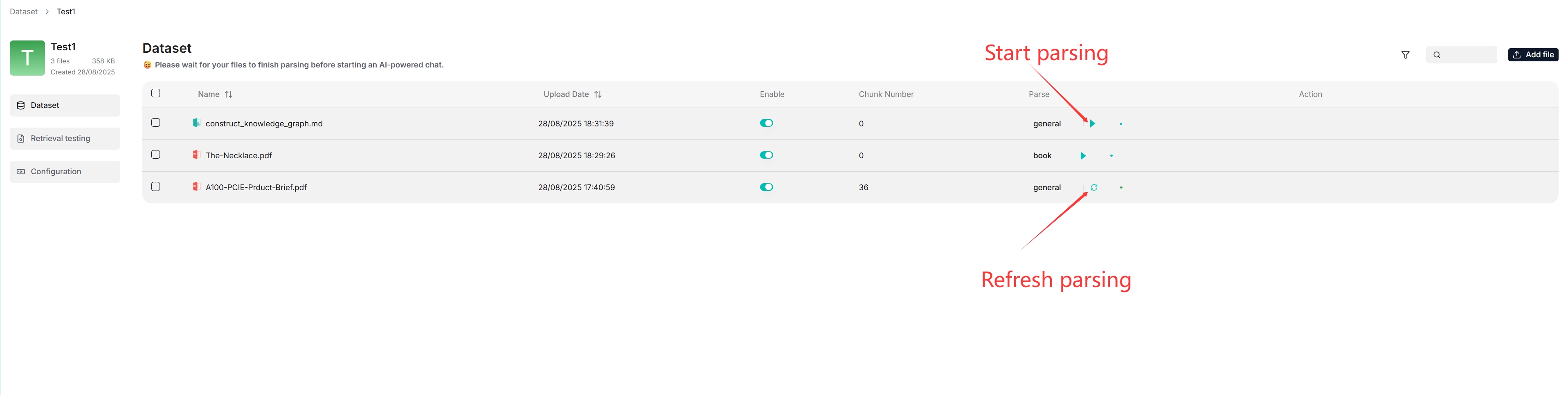

File parsing is a crucial topic in knowledge base configuration. The meaning of file parsing in RAGFlow is twofold: chunking files based on file layout and building embedding and full-text (keyword) indexes on these chunks. After having selected the chunking method and embedding model, you can start parsing a file:

|

||||

File parsing is a crucial topic in dataset configuration. The meaning of file parsing in RAGFlow is twofold: chunking files based on file layout and building embedding and full-text (keyword) indexes on these chunks. After having selected the chunking method and embedding model, you can start parsing a file:


- As shown above, RAGFlow allows you to use a different chunking method for a particular file, offering flexibility beyond the default method.

|

||||

- As shown above, RAGFlow allows you to enable or disable individual files, offering finer control over knowledge base-based AI chats.

|

||||

- As shown above, RAGFlow allows you to enable or disable individual files, offering finer control over dataset-based AI chats.

|

||||

|

||||

### Intervene with file parsing results

|

||||

|

||||

@ -122,17 +122,17 @@ RAGFlow uses multiple recall of both full-text search and vector search in its c

|

||||

|

||||

See [Run retrieval test](./run_retrieval_test.md) for details.

|

||||

|

||||

## Search for knowledge base

|

||||

## Search for dataset

|

||||

|

||||

As of RAGFlow v0.20.5, the search feature is still in a rudimentary form, supporting only knowledge base search by name.

|

||||

As of RAGFlow v0.20.5, the search feature is still in a rudimentary form, supporting only dataset search by name.


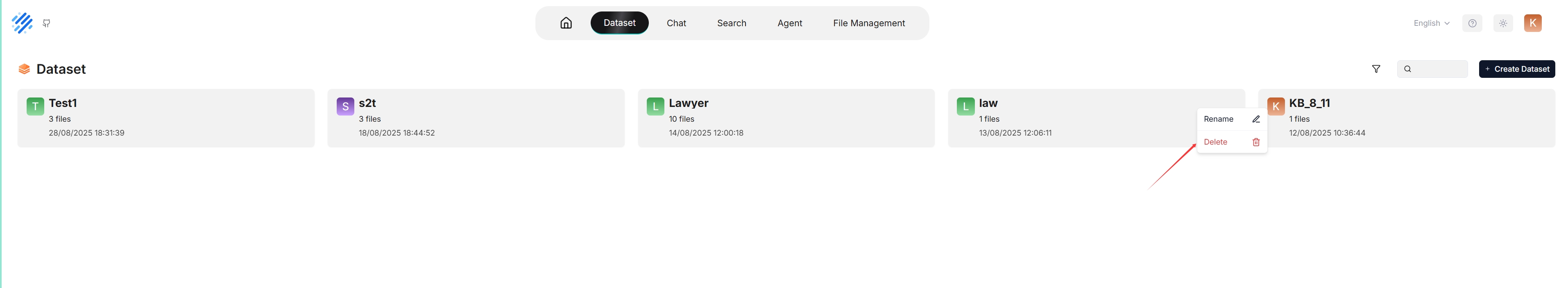

## Delete knowledge base

|

||||

## Delete dataset

|

||||

|

||||

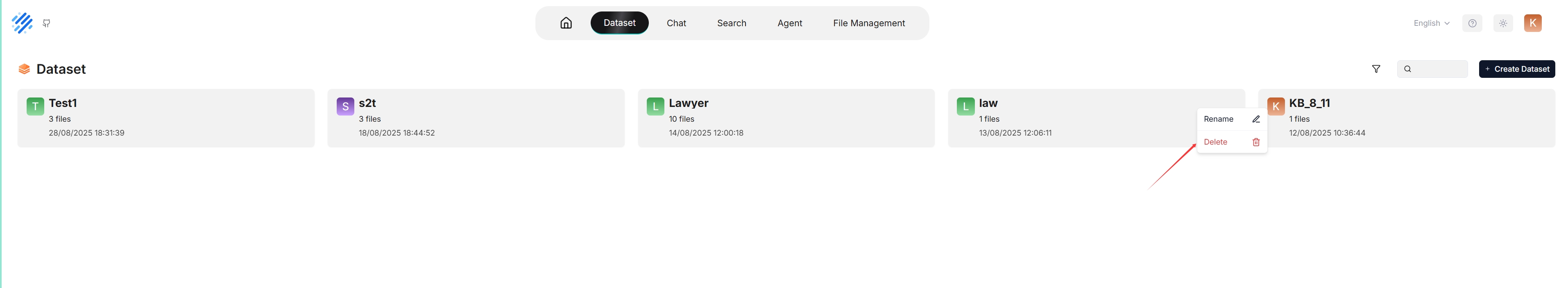

You are allowed to delete a knowledge base. Hover your mouse over the three dot of the intended knowledge base card and the **Delete** option appears. Once you delete a knowledge base, the associated folder under **root/.knowledge** directory is AUTOMATICALLY REMOVED. The consequence is:

|

||||

You are allowed to delete a dataset. Hover your mouse over the three dots of the intended dataset card and the **Delete** option appears. Once you delete a dataset, the associated folder under the **root/.knowledgebase** directory is AUTOMATICALLY REMOVED. The consequences are:

|

||||

|

||||

- The files uploaded directly to the knowledge base are gone;

|

||||

- The files uploaded directly to the dataset are gone;

|

||||

- The file references, which you created from within **File Management**, are gone, but the associated files still exist in **File Management**.


@ -5,7 +5,7 @@ slug: /construct_knowledge_graph

|

||||

|

||||

# Construct knowledge graph

|

||||

|

||||

Generate a knowledge graph for your knowledge base.

|

||||

Generate a knowledge graph for your dataset.

|

||||

|

||||

---

|

||||

|

||||

@ -13,7 +13,7 @@ To enhance multi-hop question-answering, RAGFlow adds a knowledge graph construc

|

||||

|

||||

|

||||

|

||||

From v0.16.0 onward, RAGFlow supports constructing a knowledge graph on a knowledge base, allowing you to construct a *unified* graph across multiple files within your knowledge base. When a newly uploaded file starts parsing, the generated graph will automatically update.

|

||||

From v0.16.0 onward, RAGFlow supports constructing a knowledge graph on a dataset, allowing you to construct a *unified* graph across multiple files within your dataset. When a newly uploaded file starts parsing, the generated graph will automatically update.

|

||||

|

||||

:::danger WARNING

|

||||

Constructing a knowledge graph requires significant memory, computational resources, and tokens.

|

||||

@ -37,7 +37,7 @@ The system's default chat model is used to generate knowledge graph. Before proc

|

||||

|

||||

### Entity types (*Required*)

|

||||

|

||||

The types of the entities to extract from your knowledge base. The default types are: **organization**, **person**, **event**, and **category**. Add or remove types to suit your specific knowledge base.

|

||||

The types of the entities to extract from your dataset. The default types are: **organization**, **person**, **event**, and **category**. Add or remove types to suit your specific dataset.

|

||||

|

||||

### Method

|

||||

|

||||

@ -62,12 +62,12 @@ In a knowledge graph, a community is a cluster of entities linked by relationshi

|

||||

|

||||

## Procedure

|

||||

|

||||

1. On the **Configuration** page of your knowledge base, switch on **Extract knowledge graph** or adjust its settings as needed, and click **Save** to confirm your changes.

|

||||

1. On the **Configuration** page of your dataset, switch on **Extract knowledge graph** or adjust its settings as needed, and click **Save** to confirm your changes.

|

||||

|

||||

- *The default knowledge graph configurations for your knowledge base are now set and files uploaded from this point onward will automatically use these settings during parsing.*

|

||||

- *The default knowledge graph configurations for your dataset are now set and files uploaded from this point onward will automatically use these settings during parsing.*

|

||||

- *Files parsed before this update will retain their original knowledge graph settings.*

|

||||

|

||||

2. The knowledge graph of your knowledge base does *not* automatically update *until* a newly uploaded file is parsed.

|

||||

2. The knowledge graph of your dataset does *not* automatically update *until* a newly uploaded file is parsed.

|

||||

|

||||

_A **Knowledge graph** entry appears under **Configuration** once a knowledge graph is created._

|

||||

|

||||

@ -75,13 +75,13 @@ In a knowledge graph, a community is a cluster of entities linked by relationshi

|

||||

4. To use the created knowledge graph, do either of the following:

|

||||

|

||||

- In the **Chat setting** panel of your chat app, switch on the **Use knowledge graph** toggle.

|

||||

- If you are using an agent, click the **Retrieval** agent component to specify the knowledge base(s) and switch on the **Use knowledge graph** toggle.

|

||||

- If you are using an agent, click the **Retrieval** agent component to specify the dataset(s) and switch on the **Use knowledge graph** toggle.

|

||||

|

||||

## Frequently asked questions

|

||||

|

||||

### Can I have different knowledge graph settings for different files in my knowledge base?

|

||||

### Can I have different knowledge graph settings for different files in my dataset?

|

||||

|

||||

Yes, you can. Just one graph is generated per knowledge base. The smaller graphs of your files will be *combined* into one big, unified graph at the end of the graph extraction process.

|

||||

Yes, you can. Just one graph is generated per dataset. The smaller graphs of your files will be *combined* into one big, unified graph at the end of the graph extraction process.

|

||||

|

||||

### Does the knowledge graph automatically update when I remove a related file?

|

||||

|

||||

@ -89,7 +89,7 @@ Nope. The knowledge graph does *not* automatically update *until* a newly upload

|

||||

|

||||

### How to remove a generated knowledge graph?

|

||||

|

||||

To remove the generated knowledge graph, delete all related files in your knowledge base. Although the **Knowledge graph** entry will still be visible, the graph has actually been deleted.

|

||||

To remove the generated knowledge graph, delete all related files in your dataset. Although the **Knowledge graph** entry will still be visible, the graph has actually been deleted.

|

||||

|

||||

### Where is the created knowledge graph stored?

|

||||

|

||||

|

||||

@ -12,7 +12,7 @@ Convert complex Excel spreadsheets into HTML tables.

|

||||

When using the **General** chunking method, you can enable the **Excel to HTML** toggle to convert spreadsheet files into HTML tables. If it is disabled, spreadsheet tables will be represented as key-value pairs. For complex tables that cannot be simply represented this way, you must enable this feature.

|

||||

|

||||

:::caution WARNING

|

||||

The feature is disabled by default. If your knowledge base contains spreadsheets with complex tables and you do not enable this feature, RAGFlow will not throw an error but your tables are likely to be garbled.

|

||||

The feature is disabled by default. If your dataset contains spreadsheets with complex tables and you do not enable this feature, RAGFlow will not throw an error but your tables are likely to be garbled.

|

||||

:::

|

||||

|

||||

## Scenarios

|

||||

@ -27,12 +27,12 @@ Works with complex tables that cannot be represented as key-value pairs. Example

|

||||

|

||||

## Procedure

|

||||

|

||||

1. On your knowledge base's **Configuration** page, select **General** as the chunking method.

|

||||

1. On your dataset's **Configuration** page, select **General** as the chunking method.

|

||||

|

||||

_The **Excel to HTML** toggle appears._

|

||||

|

||||

2. Enable **Excel to HTML** if your knowledge base contains complex spreadsheet tables that cannot be represented as key-value pairs.

|

||||

3. Leave **Excel to HTML** disabled if your knowledge base has no spreadsheet tables or if its spreadsheet tables can be represented as key-value pairs.

|

||||

2. Enable **Excel to HTML** if your dataset contains complex spreadsheet tables that cannot be represented as key-value pairs.

|

||||

3. Leave **Excel to HTML** disabled if your dataset has no spreadsheet tables or if its spreadsheet tables can be represented as key-value pairs.

|

||||

4. If question-answering regarding complex tables is unsatisfactory, check if **Excel to HTML** is enabled.

|

||||

|

||||

## Frequently asked questions

|

||||

|

||||

@ -43,7 +43,7 @@ The system's default chat model is used to summarize clustered content. Before p

|

||||

|

||||

## Configurations

|

||||

|

||||

The RAPTOR feature is disabled by default. To enable it, manually switch on the **Use RAPTOR to enhance retrieval** toggle on your knowledge base's **Configuration** page.

|

||||

The RAPTOR feature is disabled by default. To enable it, manually switch on the **Use RAPTOR to enhance retrieval** toggle on your dataset's **Configuration** page.

|

||||

|

||||

### Prompt

|

||||

|

||||

|

||||

@ -5,11 +5,11 @@ slug: /run_retrieval_test

|

||||

|

||||

# Run retrieval test

|

||||

|

||||

Conduct a retrieval test on your knowledge base to check whether the intended chunks can be retrieved.

|

||||

Conduct a retrieval test on your dataset to check whether the intended chunks can be retrieved.

|

||||

|

||||

---

|

||||

|

||||

After your files are uploaded and parsed, it is recommended that you run a retrieval test before proceeding with the chat assistant configuration. Running a retrieval test is *not* an unnecessary or superfluous step at all! Just like fine-tuning a precision instrument, RAGFlow requires careful tuning to deliver optimal question answering performance. Your knowledge base settings, chat assistant configurations, and the specified large and small models can all significantly impact the final results. Running a retrieval test verifies whether the intended chunks can be recovered, allowing you to quickly identify areas for improvement or pinpoint any issue that needs addressing. For instance, when debugging your question answering system, if you know that the correct chunks can be retrieved, you can focus your efforts elsewhere. For example, in issue [#5627](https://github.com/infiniflow/ragflow/issues/5627), the problem was found to be due to the LLM's limitations.

|

||||

After your files are uploaded and parsed, it is recommended that you run a retrieval test before proceeding with the chat assistant configuration. Running a retrieval test is *not* an unnecessary or superfluous step at all! Just like fine-tuning a precision instrument, RAGFlow requires careful tuning to deliver optimal question answering performance. Your dataset settings, chat assistant configurations, and the specified large and small models can all significantly impact the final results. Running a retrieval test verifies whether the intended chunks can be recovered, allowing you to quickly identify areas for improvement or pinpoint any issue that needs addressing. For instance, when debugging your question answering system, if you know that the correct chunks can be retrieved, you can focus your efforts elsewhere. For example, in issue [#5627](https://github.com/infiniflow/ragflow/issues/5627), the problem was found to be due to the LLM's limitations.

|

||||

|

||||

During a retrieval test, chunks created from your specified chunking method are retrieved using a hybrid search. This search combines weighted keyword similarity with either weighted vector cosine similarity or a weighted reranking score, depending on your settings:

|

||||

|

||||

@ -65,7 +65,7 @@ Using a knowledge graph in a retrieval test will significantly increase the time

|

||||

To perform a [cross-language search](../../references/glossary.mdx#cross-language-search), select one or more target languages from the dropdown menu. The system’s default chat model will then translate your query entered in the Test text field into the selected target language(s). This translation ensures accurate semantic matching across languages, allowing you to retrieve relevant results regardless of language differences.

|

||||

|

||||

:::tip NOTE

|

||||

- When selecting target languages, please ensure that these languages are present in the knowledge base to guarantee an effective search.

|

||||

- When selecting target languages, please ensure that these languages are present in the dataset to guarantee an effective search.

|

||||

- If no target language is selected, the system will search only in the language of your query, which may cause relevant information in other languages to be missed.

|

||||

:::

|

||||

|

||||

@ -75,7 +75,7 @@ This field is where you put in your testing query.

|

||||

|

||||

## Procedure

|

||||

|

||||

1. Navigate to the **Retrieval testing** page of your knowledge base, enter your query in **Test text**, and click **Testing** to run the test.

|

||||

1. Navigate to the **Retrieval testing** page of your dataset, enter your query in **Test text**, and click **Testing** to run the test.

|

||||

2. If the results are unsatisfactory, tune the options listed in the Configuration section and rerun the test.

|

||||

|

||||

*The following is a screenshot of a retrieval test conducted without using knowledge graph. It demonstrates a hybrid search combining weighted keyword similarity and weighted vector cosine similarity. The overall hybrid similarity score is 28.56, calculated as 25.17 (term similarity score) x 0.7 + 36.49 (vector similarity score) x 0.3:*
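The arithmetic in the caption above can be reproduced with a short sketch. The function name `hybrid_score` and its parameter names are illustrative assumptions rather than RAGFlow's API; the weights 0.7 and 0.3 and the two input scores are the ones quoted in the caption.

```python
def hybrid_score(term_similarity: float,
                 vector_similarity: float,
                 keyword_weight: float = 0.7) -> float:
    """Weighted sum of the keyword (term) score and the vector score."""
    if not 0.0 <= keyword_weight <= 1.0:
        raise ValueError("keyword_weight must be within [0, 1]")
    # The two weights are assumed to sum to 1, as in the quoted example.
    vector_weight = 1.0 - keyword_weight
    return term_similarity * keyword_weight + vector_similarity * vector_weight


# 25.17 x 0.7 + 36.49 x 0.3
score = hybrid_score(25.17, 36.49)
print(f"{score:.3f}")
```

The weighted sum comes to roughly 28.566, which matches the 28.56 quoted in the caption if the displayed value is truncated rather than rounded.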

|

||||

|

||||

@ -27,7 +27,7 @@ RAGFlow isn't one-size-fits-all. It is built for flexibility and supports deeper

|

||||

|

||||

## Procedure

|

||||

|

||||

1. On your knowledge base's **Configuration** page, select a chunking method, say **General**.

|

||||

1. On your dataset's **Configuration** page, select a chunking method, say **General**.

|

||||

|

||||

_The **PDF parser** dropdown menu appears._

|

||||

|

||||

|

||||

@ -9,7 +9,7 @@ Add metadata to an uploaded file

|

||||

|

||||

---

|

||||

|

||||

On the **Dataset** page of your knowledge base, you can add metadata to any uploaded file. This approach enables you to 'tag' additional information like URL, author, date, and more to an existing file. In an AI-powered chat, such information will be sent to the LLM with the retrieved chunks for content generation.

|

||||

On the **Dataset** page of your dataset, you can add metadata to any uploaded file. This approach enables you to 'tag' additional information like URL, author, date, and more to an existing file. In an AI-powered chat, such information will be sent to the LLM with the retrieved chunks for content generation.

|

||||

|

||||

For example, if you have a dataset of HTML files and want the LLM to cite the source URL when responding to your query, add a `"url"` parameter to each file's metadata.

|

||||

|

||||

|

||||

@ -11,15 +11,15 @@ Create a step-retrieval strategy using page rank.

|

||||

|

||||

## Scenario

|

||||

|

||||

In an AI-powered chat, you can configure a chat assistant or an agent to respond using knowledge retrieved from multiple specified knowledge bases (datasets), provided that they employ the same embedding model. In situations where you prefer information from certain knowledge base(s) to take precedence or to be retrieved first, you can use RAGFlow's page rank feature to increase the ranking of chunks from these knowledge bases. For example, if you have configured a chat assistant to draw from two knowledge bases, knowledge base A for 2024 news and knowledge base B for 2023 news, but wish to prioritize news from year 2024, this feature is particularly useful.

|

||||

In an AI-powered chat, you can configure a chat assistant or an agent to respond using knowledge retrieved from multiple specified datasets, provided that they employ the same embedding model. In situations where you prefer information from certain dataset(s) to take precedence or to be retrieved first, you can use RAGFlow's page rank feature to increase the ranking of chunks from these datasets. For example, if you have configured a chat assistant to draw from two datasets, dataset A for 2024 news and dataset B for 2023 news, but wish to prioritize news from year 2024, this feature is particularly useful.

|

||||

|

||||

:::info NOTE

|

||||

It is important to note that this 'page rank' feature operates at the level of the entire knowledge base rather than on individual files or documents.

|

||||

It is important to note that this 'page rank' feature operates at the level of the entire dataset rather than on individual files or documents.

|

||||

:::

|

||||

|

||||

## Configuration

|

||||

|

||||

On the **Configuration** page of your knowledge base, drag the slider under **Page rank** to set the page rank value for your knowledge base. You are also allowed to input the intended page rank value in the field next to the slider.

|

||||

On the **Configuration** page of your dataset, drag the slider under **Page rank** to set the page rank value for your dataset. You are also allowed to input the intended page rank value in the field next to the slider.

|

||||

|

||||

:::info NOTE

|

||||

The page rank value must be an integer. Range: [0,100]

|

||||

@ -36,4 +36,4 @@ If you set the page rank value to a non-integer, say 1.7, it will be rounded dow

|

||||

|

||||

If you configure a chat assistant's **similarity threshold** to 0.2, only chunks with a hybrid score greater than 0.2 x 100 = 20 will be retrieved and sent to the chat model for content generation. This initial filtering step is crucial for narrowing down relevant information.

|

||||

|

||||

If you have assigned a page rank of 1 to knowledge base A (2024 news) and 0 to knowledge base B (2023 news), the final hybrid scores of the retrieved chunks will be adjusted accordingly. A chunk retrieved from knowledge base A with an initial score of 50 will receive a boost of 1 x 100 = 100 points, resulting in a final score of 50 + 1 x 100 = 150. In this way, chunks retrieved from knowledge base A will always precede chunks from knowledge base B.

|

||||

If you have assigned a page rank of 1 to dataset A (2024 news) and 0 to dataset B (2023 news), the final hybrid scores of the retrieved chunks will be adjusted accordingly. A chunk retrieved from dataset A with an initial score of 50 will receive a boost of 1 x 100 = 100 points, resulting in a final score of 50 + 1 x 100 = 150. In this way, chunks retrieved from dataset A will always precede chunks from dataset B.
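The two steps described above (threshold filtering, then the page rank boost) can be sketched as follows. The helper names `passes_threshold` and `boosted_score` and the sample chunk from dataset B are assumptions made for illustration; only the figures 0.2, 50, 1, and 100 come from the text.

```python
def passes_threshold(hybrid_score: float, similarity_threshold: float) -> bool:
    """Keep a chunk only if its hybrid score exceeds threshold x 100."""
    return hybrid_score > similarity_threshold * 100


def boosted_score(hybrid_score: float, page_rank: int) -> float:
    """Add page_rank x 100 points to a chunk's hybrid score."""
    if not isinstance(page_rank, int) or not 0 <= page_rank <= 100:
        raise ValueError("page rank must be an integer within [0, 100]")
    return hybrid_score + page_rank * 100


# (dataset name, initial hybrid score, page rank of the dataset)
chunks = [
    ("dataset B", 90.0, 0),  # hypothetical high-scoring 2023 chunk
    ("dataset A", 50.0, 1),  # the 2024 chunk from the example above
    ("dataset A", 15.0, 1),  # below the 0.2 x 100 = 20 cut-off
]

# Filter first, then boost, then sort by final score in descending order.
ranked = sorted(
    (
        (name, boosted_score(score, page_rank))
        for name, score, page_rank in chunks
        if passes_threshold(score, 0.2)
    ),
    key=lambda item: item[1],
    reverse=True,
)
print(ranked)
```

Under these assumptions the dataset A chunk ends at 150 and is ranked ahead of the dataset B chunk at 90, even though its initial score was lower.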

|

||||

@ -9,9 +9,9 @@ Use a tag set to auto-tag chunks in your datasets.


---


Retrieval accuracy is the touchstone for a production-ready RAG framework. In addition to retrieval-enhancing approaches like auto-keyword, auto-question, and knowledge graph, RAGFlow introduces an auto-tagging feature to address semantic gaps. The auto-tagging feature automatically maps tags in the user-defined tag sets to relevant chunks within your knowledge base based on similarity with each chunk. This automation mechanism allows you to apply an additional "layer" of domain-specific knowledge to existing datasets, which is particularly useful when dealing with a large number of chunks.


Retrieval accuracy is the touchstone for a production-ready RAG framework. In addition to retrieval-enhancing approaches like auto-keyword, auto-question, and knowledge graph, RAGFlow introduces an auto-tagging feature to address semantic gaps. The auto-tagging feature automatically maps tags in the user-defined tag sets to relevant chunks within your dataset based on similarity with each chunk. This automation mechanism allows you to apply an additional "layer" of domain-specific knowledge to existing datasets, which is particularly useful when dealing with a large number of chunks.


To use this feature, ensure you have at least one properly configured tag set, specify the tag set(s) on the **Configuration** page of your knowledge base (dataset), and then re-parse your documents to initiate the auto-tagging process. During this process, each chunk in your dataset is compared with every entry in the specified tag set(s), and tags are automatically applied based on similarity.


To use this feature, ensure you have at least one properly configured tag set, specify the tag set(s) on the **Configuration** page of your dataset, and then re-parse your documents to initiate the auto-tagging process. During this process, each chunk in your dataset is compared with every entry in the specified tag set(s), and tags are automatically applied based on similarity.
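The comparison of each chunk against every tag set entry can be pictured with the toy sketch below. It is only an illustration: the similarity measure RAGFlow actually uses is not described here, so token-overlap (Jaccard) similarity stands in for it, and the `auto_tag` function, the `(description, tags)` entry structure, and the `min_similarity` cutoff are all assumptions made for the example.

```python
def tokenize(text):
    """Lowercase a string and split it into a set of whitespace-delimited tokens."""
    return set(text.lower().split())


def jaccard(a, b):
    """Token-overlap similarity; a stand-in for the real similarity measure."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def auto_tag(chunk, tag_set, min_similarity=0.2):
    """Compare a chunk with every tag set entry and collect the matching tags."""
    chunk_tokens = tokenize(chunk)
    tags = []
    for description, entry_tags in tag_set:
        if jaccard(chunk_tokens, tokenize(description)) >= min_similarity:
            # Tags come exclusively from the tag set; duplicates are skipped.
            for tag in entry_tags:
                if tag not in tags:
                    tags.append(tag)
    return tags


# Hypothetical tag set entries: (description, tags).
tag_set = [
    ("refund policy for returned items", ["refund", "returns"]),
    ("shipping times and delivery fees", ["shipping"]),
]

tags = auto_tag("Our refund policy covers returned items within 30 days", tag_set)
print(tags)
```

In this example the chunk shares four tokens with the first entry and none with the second, so only the tags `refund` and `returns` are applied.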


## Scenarios


@ -19,7 +19,7 @@ Auto-tagging applies in situations where chunks are so similar to each other tha


## 1. Create tag set


You can consider a tag set as a closed set, and the tags to attach to the chunks in your dataset (knowledge base) are *exclusively* from the specified tag set. You use a tag set to "inform" RAGFlow which chunks to tag and which tags to apply.


You can consider a tag set as a closed set, and the tags to attach to the chunks in your dataset are *exclusively* from the specified tag set. You use a tag set to "inform" RAGFlow which chunks to tag and which tags to apply.


### Prepare a tag table file


@ -41,8 +41,8 @@ As a rule of thumb, consider including the following entries in your tag table:


A tag set is *not* involved in document indexing or retrieval. Do not specify a tag set when configuring your chat assistant or agent.


:::


1. Click **+ Create knowledge base** to create a knowledge base.


2. Navigate to the **Configuration** page of the created knowledge base and choose **Tag** as the default chunking method.


1. Click **+ Create dataset** to create a dataset.


2. Navigate to the **Configuration** page of the created dataset and choose **Tag** as the default chunking method.


3. Navigate to the **Dataset** page and upload and parse your table file in XLSX, CSV, or TXT formats.


_A tag cloud appears under the **Tag view** section, indicating the tag set is created:_


@ -53,7 +53,7 @@ A tag set is *not* involved in document indexing or retrieval. Do not specify a


Once a tag set is created, you can apply it to your dataset:


1. Navigate to the **Configuration** page of your knowledge base (dataset).


1. Navigate to the **Configuration** page of your dataset.


2. Select the tag set from the **Tag sets** dropdown and click **Save** to confirm.


:::tip NOTE


@ -94,9 +94,9 @@ If you add new table files to your tag set, it is at your own discretion whether


Yes, you can. Usually one tag set suffices. When using multiple tag sets, ensure they are independent of each other; otherwise, consider merging your tag sets.


### Difference between a tag set and a standard knowledge base?


### Difference between a tag set and a standard dataset?


A standard knowledge base is a dataset. It will be searched by RAGFlow's document engine and the retrieved chunks will be fed to the LLM. In contrast, a tag set is used solely to attach tags to chunks within your dataset. It does not directly participate in the retrieval process, and you should not choose a tag set when selecting datasets for your chat assistant or agent.


A standard dataset is searched by RAGFlow's document engine, and the retrieved chunks are fed to the LLM. In contrast, a tag set is used solely to attach tags to chunks within your dataset. It does not directly participate in the retrieval process, and you should not choose a tag set when selecting datasets for your chat assistant or agent.


### Difference between auto-tag and auto-keyword?

